An Advanced Generative AI Platform for Faster Research

Category: Healthcare, Education

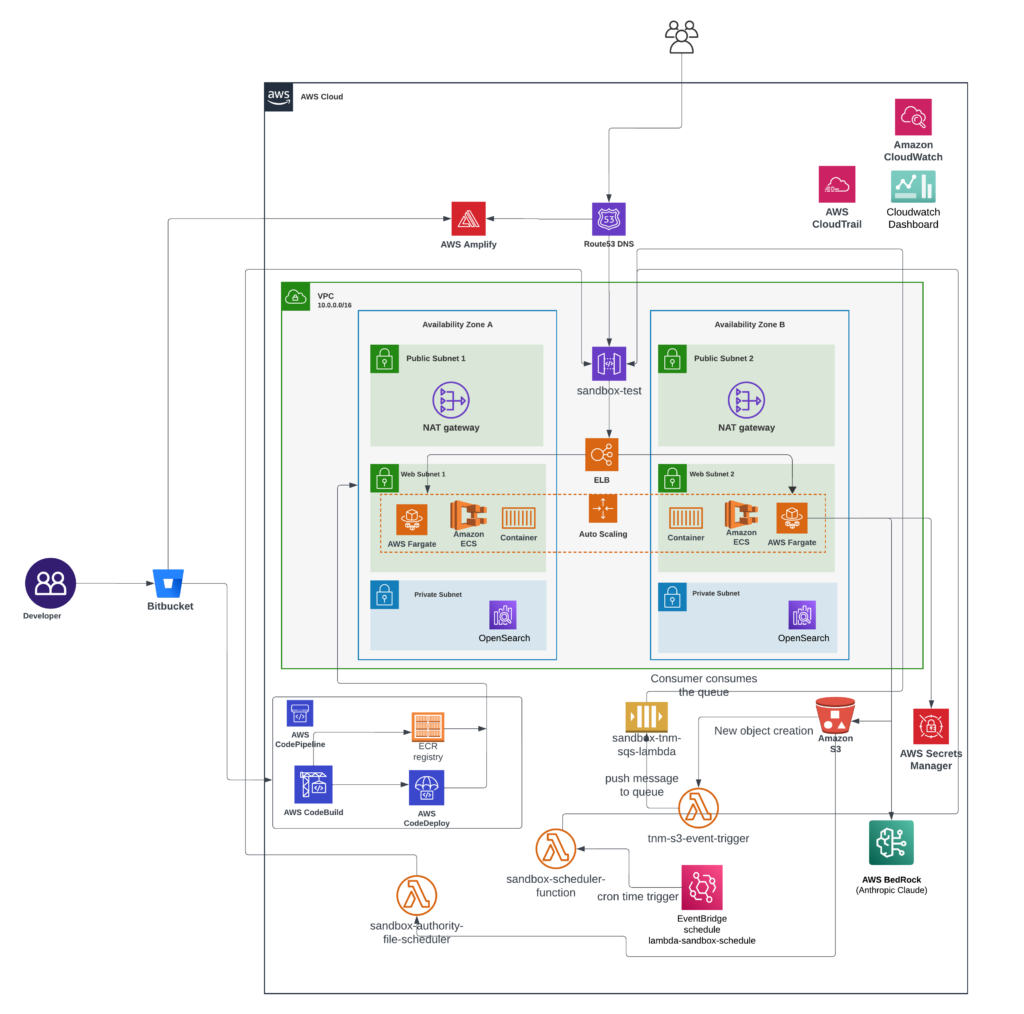

Services: Gen AI Development, Architecture Design and Review, Managed Engineering Teams

Our client is a leading psychological research organization in the United States, representing more than 157,000 researchers, educators, clinicians, and students. Its knowledge base spans numerous publications, articles, and psychological research papers, and the organization needed a generative AI platform to streamline access to this information. The platform would let researchers search this large body of resources across multiple languages while keeping search results accurate and contextually relevant.