Containers have become the go-to approach for cloud-native development. They provide OS-level virtualization with minimal configuration to run cloud-native apps. In addition, containerization brings many benefits, such as enhanced DevOps, portability, agility, and test automation.

However, deciding whether to adopt containers can be tricky. Before making a decision, organizations need to consider many aspects of containerization, such as:

- Security

- Change management

- Productivity

- Configuration management

- Scalability

- Data management

According to IDC, “improved security” is the most significant driving factor for enterprises choosing containerization. Additionally, organizations consider productivity and scalability as substantial factors while deciding on containerization.

So, should you choose containerization?

Every decision needs a rational reason. You can use the rational choice theory proposed by Adam Smith in “An Inquiry into the Nature and Causes of the Wealth of Nations.” The theory assumes rational actors who minimize losses and maximize gains in any scenario.

So, here are the top benefits of containerization based on the challenges of developing applications.

Top benefits of containerization

Knowing the challenges is key to assessing a rational actor. There is no denying that adopting a DevOps culture improves productivity. It also enhances the synchronization of otherwise siloed operation and development teams.

But why do you need containerization then?

DevOps has some caveats too, and containers help overcome them. Containers also provide quicker deployments, portability, and reduced dependencies. Let’s assess some rational actors and the benefits of containerization!

1. Portability of containers for cross-platform development

Businesses like to develop applications for platforms like Android and iOS. However, maintaining native compatibility and quickly adapting to platform changes is challenging. One of the common changes includes security patches that protect against new vulnerabilities and malicious code.

Adapting your application to these changes can take time and effort. For example, in April, the second part of the security patch level for Android devices was rolled out. It included security patches for no fewer than 30 vulnerabilities in the system, kernel components, and System on a Chip (SoC) components from vendors like MediaTek.

If you have an application on the Android and iOS platforms, quicker changes are inevitable. Similarly, there can be multiple native changes for your application across platforms.

For example, the design language for apps in Android 12 is Material You. Adjusting to such changes can be challenging when the same reusable code compiles for each platform.

“Write once, run anywhere” is a crucial benefit of containerization. The portability of containers enables organizations to deploy the application in any environment quickly. In addition, containers package all the dependencies, so you don’t need to configure the app for different environments.

Further, portability frees your application from the host operating system. It enables you to use different OS environments to develop and deploy applications. Portability is also a key rational actor in the multi-cloud approach. If your apps are portable, moving them from one computing environment to another involves minimal hassle.

Containerization is beneficial for businesses developing cross-platform apps with a multi-cloud approach.

CHG improved the portability of the medical staffing app with containerization

CHG is a leading medical staffing company that facilitates 30% of temporary healthcare recruitments in the United States. Managing such a massive staffing company needs several software services deployed across platforms. Therefore, CHG de-siloed teams across platforms for higher native performance.

However, this made deployments across platforms more complex. CHG naturally wanted to standardize deployments without compromising native performance. So, they first used Amazon EC2 instances to implement microservices leveraging the cloud-native approach.

They soon found a more portable solution and eventually used Amazon EKS to run Kubernetes. Kubernetes is an open-source platform for managing, automating, and scaling containerized workloads, while EKS is a managed Kubernetes service on the AWS cloud.

CHG used containerization of workloads to reduce the deployment time across platforms from 3 weeks to a day. However, while portability does improve deployment time, quick update implementation is another critical challenge.

2. Quick update implementation with containerization

Adding new features is critical for competitive advantage, so you need rapid feature rollouts and rollbacks when required. However, rapid deployment and rollbacks are challenging in a conventional setup due to tightly coupled services. There are also multiple configurations, libraries, and dependencies to manage during rollouts and rollbacks.

Containers are extremely lightweight, with minimal configuration and dependencies. They allow you to quickly update applications by spinning up a single container or an entire cluster as needed.
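
To illustrate why immutable container images make rollouts and rollbacks simple, here is a minimal Python sketch. It is only a model under assumptions: the `Deployment` class and the image tags are hypothetical, and a real orchestrator such as Kubernetes tracks revisions in its own way.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical model: tracks the live image tag plus a revision history."""
    name: str
    history: List[str] = field(default_factory=list)

    @property
    def current(self) -> Optional[str]:
        # The most recently rolled-out image is the one serving traffic.
        return self.history[-1] if self.history else None

    def rollout(self, image_tag: str) -> None:
        # A rollout only points at a new immutable image tag;
        # nothing is patched in place on the host.
        self.history.append(image_tag)

    def rollback(self) -> str:
        # Rolling back re-points the deployment at the previous image.
        if len(self.history) < 2:
            raise RuntimeError("no earlier revision to roll back to")
        self.history.pop()
        return self.history[-1]


checkout = Deployment("checkout")
checkout.rollout("checkout:1.4.0")
checkout.rollout("checkout:1.5.0")
checkout.rollback()  # the 1.5.0 release misbehaved, so return to 1.4.0
print(checkout.current)
```

Because each version is a self-contained image, a rollback in this model is just a pointer change rather than an uninstall of libraries and configuration.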

Adidas used Kubernetes to improve velocity and agility.

Adidas faced the challenge of building a reliable, scalable, and efficient cloud-native platform. The eCommerce front of the sports shoe brand needed to foster rapid innovation cycles and improve UX.

So, they decided to migrate their workloads from on-premises to the cloud with an open-source solution. They also wanted the solution to avoid vendor lock-in and make updates more agile. Therefore, they chose to containerize workloads using Kubernetes for orchestration.

For the Kubernetes implementation, the Adidas team used Giant Swarm. It facilitates infrastructure, operations, and applications for open-source technologies like Kubernetes. The most significant aspect of Giant Swarm is the ability to spin up clusters as needed.

This is important for eCommerce businesses that want to deploy containers to meet peak demand. With Giant Swarm and Kubernetes, Adidas:

- Set up a CI/CD system for the generation of 100,000 builds per month

- Increased revenue from 40 million euros to 4 billion euros between 2012 and 2020

3. Cloud-scale automation to cope with testing limitations

Consistency of UX across platforms needs extensive testing of applications. However, testing apps across devices, platforms, and environments can be challenging. Many organizations use emulators to test applications for specific OS platforms like Android or iOS. It is not always an effective strategy as testing with emulators does not provide comprehensive data.

DevOps culture does help through better synchronization between development and QA teams. However, achieving continuous testing for your apps is still challenging because of the massive configuration management involved. You need to configure the app and build test cases for different platforms, devices, scenarios, and environments.

Here, containerization can enable cloud-scale automation. You can use fully automated orchestration patterns for common test scenarios, which reduces the complexity and errors of manual testing. It also allows operations to run at cloud scale with higher resilience.

Alm. Brand used containerization for test automation.

Alm. Brand is a financial services company from Denmark. Teams at Alm. Brand wanted to support fully agile software development through automated tests. Manual testing used to take a tedious 120+ hours per regression cycle.

The regression testing suite took considerable time. So, they decided to use parallel testing. Containerization with Docker helped Alm. Brand teams automate test cases and reduce the time for every regression cycle.

Containerizing test cases and parallel testing helped them achieve faster regression test feedback. It also helped Alm. Brand improve release time for every iteration.
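
To make the parallel-testing idea concrete, here is a minimal Python sketch. It is not Alm. Brand's actual setup: the function names are hypothetical, and `run_shard` only stands in for starting a container and running a shard of tests inside it.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_shards(test_names, shard_count):
    """Distribute tests round-robin so shards are roughly equal in size."""
    return [test_names[i::shard_count] for i in range(shard_count)]


def run_shard(shard):
    # Placeholder for "start a container and run these tests inside it".
    # Every test trivially passes here so the sketch stays runnable.
    return {name: "passed" for name in shard}


def run_regression(test_names, shard_count=4):
    """Run all shards concurrently and merge their results."""
    shards = split_into_shards(test_names, shard_count)
    results = {}
    with ThreadPoolExecutor(max_workers=shard_count) as pool:
        for shard_result in pool.map(run_shard, shards):
            results.update(shard_result)
    return results


tests = [f"test_case_{n}" for n in range(10)]
report = run_regression(tests, shard_count=4)
print(len(report), "tests finished")
```

With isolated containers, shards do not share state, so the wall-clock time of a regression cycle approaches the duration of the slowest shard instead of the sum of all tests.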

4. Enhanced configuration management

The mobile application development process requires running apps in different environments. For example, you may be using a staging environment during development, a testing environment during the beta stage, and a production environment for the app store. Unfortunately, switching between these environments is a difficult task.

Containerization enables development teams to use a single codebase across multiple environments. You can create containerized clusters for each environment with minimal configurations.
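
One common way to keep a single codebase across environments is to inject configuration into the container through environment variables. The sketch below assumes this pattern; the variable names (`APP_ENV`, `API_BASE_URL`, `APP_DEBUG`) and the `Settings` class are hypothetical.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    environment: str
    api_base_url: str
    debug: bool


def load_settings(env=None):
    """Build settings from variables injected into the container at start-up."""
    env = os.environ if env is None else env
    return Settings(
        environment=env.get("APP_ENV", "staging"),
        api_base_url=env.get("API_BASE_URL", "http://localhost:8000"),
        debug=env.get("APP_DEBUG", "false").lower() == "true",
    )


# The same image runs everywhere; only the injected variables differ.
production = load_settings(
    {"APP_ENV": "production", "API_BASE_URL": "https://api.example.com"}
)
print(production)
```

The image stays identical between staging, testing, and production, which is what keeps the per-environment configuration minimal.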

Spotify used containerization to deal with the NxM problem

The NxM problem, according to Spotify’s Evgeny Goldin, arises when N services need to run across M hosts. Spotify packages discrete features into microservices using a stream of containers. Spotify’s teams can spin containers up and down as per scaling needs worldwide.

Containerization of workloads helps Spotify provide a seamless experience for users across devices and environments. So, users can use Spotify’s app on any device and use features like playing music, closing the app, turning on or off a specific service, etc.

If a user’s app needs a specific microservice, it sends a request to Spotify’s servers. However, provisioning capacity for every feature or microservice request across environments is challenging. Therefore, Spotify spins up containers according to additional demand and terminates them after request execution.
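
The demand-driven scaling described above can be sketched as a simple calculation. This is an illustration under assumptions, not Spotify's actual logic: the function name, the per-container capacity, and the minimum and maximum bounds are all hypothetical.

```python
import math


def desired_containers(pending_requests, requests_per_container,
                       min_containers=1, max_containers=50):
    """Return how many containers are needed for the current load."""
    if requests_per_container <= 0:
        raise ValueError("requests_per_container must be positive")
    needed = math.ceil(pending_requests / requests_per_container)
    # Clamp to the allowed range so the service never scales to zero
    # and never exceeds the assumed capacity budget.
    return max(min_containers, min(max_containers, needed))


print(desired_containers(250, 100))
```

An orchestrator would compare this number with the containers currently running and start or terminate the difference.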

Spotify’s team used containerization for optimal service delivery across environments. Many organizations adopt a DevOps culture for such high-level deployments. However, the problem with straightforward adoption is a lack of maturity.

5. DevOps optimization with containerization

The maturity of an organization’s team in the software development lifecycle (SDLC) can impact product delivery. It also has a massive impact on DevOps adoption. Without a mature SDLC, achieving continuous delivery is challenging.

Enter DevOps, and the software delivery becomes far more efficient with shorter increments. Add Agile to the mix, and it’s a perfect recipe with incremental iterations. However, teams with lower SDLC maturity often find it challenging to cope with the agility of DevOps.

Containerization supports Agile and allows the organization to overcome the lack of maturity in SDLC. Containers don’t need to pack in much, and you have the flexibility to terminate them at any time.

Why does flexibility matter to DevOps adoption?

Flexibility ensures that creating shorter increments is not that difficult despite limited SDLC maturity. Containerization may not be straightforward for your teams at first, but once they are trained, implementation does not take long.

However, containerization alone is not the answer to your DevOps adoption worries. Simply put, you need a mix of agile, containerization, and DevOps to enable continuous delivery.

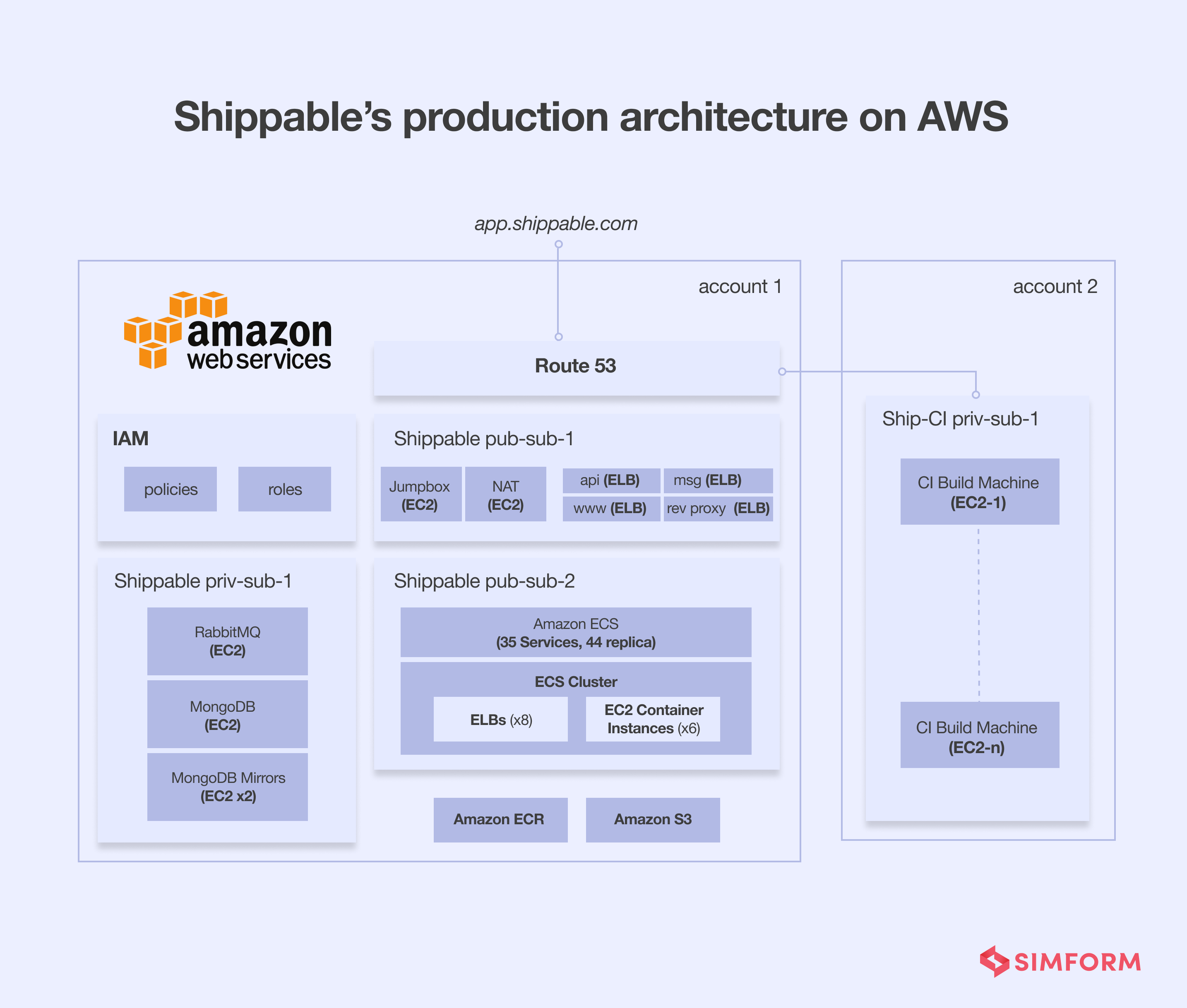

Shippable used Amazon ECS to improve continuous delivery

Shippable provides a platform for hosted testing, continuous integration, and deployment from GitHub and Bitbucket repositories. It has two parts: continuous integration and continuous delivery.

Combining different services, the Shippable platform allows developers to build, test, and deploy applications. Shippable built the CI/CD platform with a microservice architecture using Docker containers on Amazon EC2. However, the Docker solution was hard to scale and had configuration issues.

The engineering team at Shippable spent 60-80% of their time maintaining the infrastructure and container orchestration. Apart from the infrastructure management, monitoring extensive logs and managing Elasticsearch was time-consuming.

Shippable considered several open-source orchestration tools like Apache Mesos and Kubernetes. However, these tools were not the right fit as they also needed significant engineering time. So, Shippable chose Amazon ECS.

ECS enables rapid and continuous delivery of features for Shippable. In addition, every Docker image is stored in the Amazon EC2 Container Registry (ECR) for easy management of containers. Lastly, Shippable reduced the share of engineering time spent on infrastructure management from 60-80% to 20%.

While containerization can help achieve continuous delivery for your apps, workflow adaptability is another challenge.

6. Higher workflow adaptability with containerized workloads

Organizations go through change management while adopting a DevOps culture. Traditional change management processes were designed for an earlier setting, where businesses integrated additional resources or new services.

However, with DevOps, the entire scenario changes. You need to adapt to changing role dynamics, processes, architecture, and collaborations. Apart from all these changes, you also need highly adaptable workflows to cope with fast-paced deployment demand.

Containerization allows high adaptability. Complete isolation of processes in containerization enables hassle-free change management. You can simply isolate the process for which changes are to be managed and quickly deploy without affecting the entire system.

Nordstrom leverages Kubernetes to improve cloud-native adaptability.

Nordstrom wanted to enhance the efficiency of its eCommerce platforms and improve operational speed. So, they decided to embrace DevOps to have a cohesive strategy and launch an enhanced CI/CD pipeline.

The challenge began once they embraced the DevOps culture. Higher velocity and lower CPU utilization were on top of their checklist. So, they decided to use a cloud-native strategy for development.

First, the Nordstrom team used Docker containers to support cloud-native development. However, they soon realized that their workflows lacked adaptability to the containerization approach. In addition, new environments took a long time to spin up. So, they decided to use Kubernetes to orchestrate containers.

It enabled Nordstrom to reduce the deployment time from three months to thirty minutes. Containerization also helped them reduce CPU utilization by 10%.

Improving adaptability and deployment times needs effective governance.

7. Architecture as Code (AaC) advantage

When you begin your DevOps adoption, the initial success on isolated teams seems exciting. However, as you scale it across infrastructure, the lack of governance can lead to failures.

One possible solution that containerization offers for lack of governance is Architecture as Code (AaC). While developing a cloud application, most organizations don’t consider infrastructure first, and it’s the architecture design that takes center stage. The concept of AaC draws inspiration from the same mindset.

At AWS, AaC was envisioned by distributing ECS architectural patterns in the AWS Cloud Development Kit (CDK). AWS CDK is an open-source software development framework that lets you define cloud resources in programming languages like TypeScript, Python, Java, and C#.

AWS teams built an ECS patterns module combining the lower-level and higher-level abstracted resources. They released three patterns for ECS:

- Load balanced service

- Queue processing service

- Scheduled tasks

These patterns can be reused as infrastructure as code (IaC) components and shared across microservices. Instead of specifying individual infrastructure resources for a new microservice, you use a high-level reusable template.

So, each time a new microservice is deployed, you only need to adjust the high-level template. This reduces deployment time and improves governance through templates.
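
The idea of a reusable high-level template can be sketched in plain Python. Note the assumptions: this is not the AWS CDK API, and the class name, field names, defaults, and registry URLs are hypothetical. It only shows how one governed template expands into the lower-level pieces for each microservice.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LoadBalancedServiceTemplate:
    """Hypothetical template holding the defaults every service inherits."""
    cpu: int = 256
    memory_mib: int = 512
    desired_count: int = 2
    container_port: int = 8080

    def render(self, service_name: str, image: str) -> dict:
        # Expand the high-level template into the individual resources
        # that would otherwise be specified by hand for each service.
        return {
            "service": service_name,
            "task": {
                "image": image,
                "cpu": self.cpu,
                "memory_mib": self.memory_mib,
                "port": self.container_port,
            },
            "desired_count": self.desired_count,
            "load_balancer": {"listener_port": 80,
                              "target_port": self.container_port},
        }


template = LoadBalancedServiceTemplate()
billing = template.render("billing", "registry.example.com/billing:1.0")
# A busier service overrides one field and inherits everything else.
orders = replace(template, desired_count=4).render(
    "orders", "registry.example.com/orders:1.0"
)
print(orders["desired_count"], billing["desired_count"])
```

Because every service is rendered from the same template, a governance change such as a new default memory limit is made once and picked up by all of them.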

8. Network as Code (NaC) for NetOps and SecOps synchronization

Network and security operations share many activities, such as network monitoring, data access analysis, and tracking network parameters. However, many organizations have NetOps and SecOps teams working in silos, which is inefficient.

For example, if SecOps decides to change specific network parameters for security purposes, NetOps needs to review the change. Unfortunately, such a review process takes time, leading to operational delays and security threats.

Containerization enables organizations to synchronize their NetOps and SecOps through the Network as Code (NaC) approach. It is a method of applying the IaC concept to all things network!

NaC works on three basic principles:

- Storage of network configuration in source control

- Source control becomes the single source of truth

- Deployment of configurations through APIs

Storing network configuration as code in a Git repository allows organizations to leverage the benefits of IaC. It enables NetOps teams to monitor changes, identify the source of changes, and roll back configuration versions.

Further, source control becomes the single source of truth for the entire system. It allows you to have a uniform network configuration across environments. Thus, variation between environments drops drastically.

So, the network configuration is consistent, whether it’s a test environment or a production environment. Further, it improves collaboration between NetOps and SecOps through a single source of truth.

The third principle is where containerization comes into play. Deployment of network configurations through programmable APIs helps in achieving IaC benefits. However, containerization is a better approach to deploying networks as code. It allows network engineers and QAs to spin up new containers per deployment needs.
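
The three principles can be sketched as a small reconciliation step: compare the configuration stored in source control with what is live, then derive the API calls needed to close the gap. Everything here is an assumption for illustration, including the rule names, the rule fields, and the `plan_changes` function.

```python
def plan_changes(desired, live):
    """Compare repo config with live config and list the API calls to make."""
    changes = []
    for name, rule in sorted(desired.items()):
        if name not in live:
            changes.append(("create", name, rule))
        elif live[name] != rule:
            changes.append(("update", name, rule))
    # Anything live but absent from source control is drift and gets removed.
    for name in sorted(live.keys() - desired.keys()):
        changes.append(("delete", name, None))
    return changes


# Desired state, as it would be read from the repository.
desired = {
    "allow-https": {"port": 443, "source": "0.0.0.0/0"},
    "allow-ssh": {"port": 22, "source": "10.0.0.0/8"},
}
# Live state, as it would be fetched from the network API.
live = {
    "allow-ssh": {"port": 22, "source": "0.0.0.0/0"},
    "allow-telnet": {"port": 23, "source": "0.0.0.0/0"},
}

plan = plan_changes(desired, live)
for action, name, _ in plan:
    print(action, name)
```

Since the repository is the single source of truth, SecOps can propose a rule change as a commit, NetOps can review the diff, and the same plan can be applied to test and production alike.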

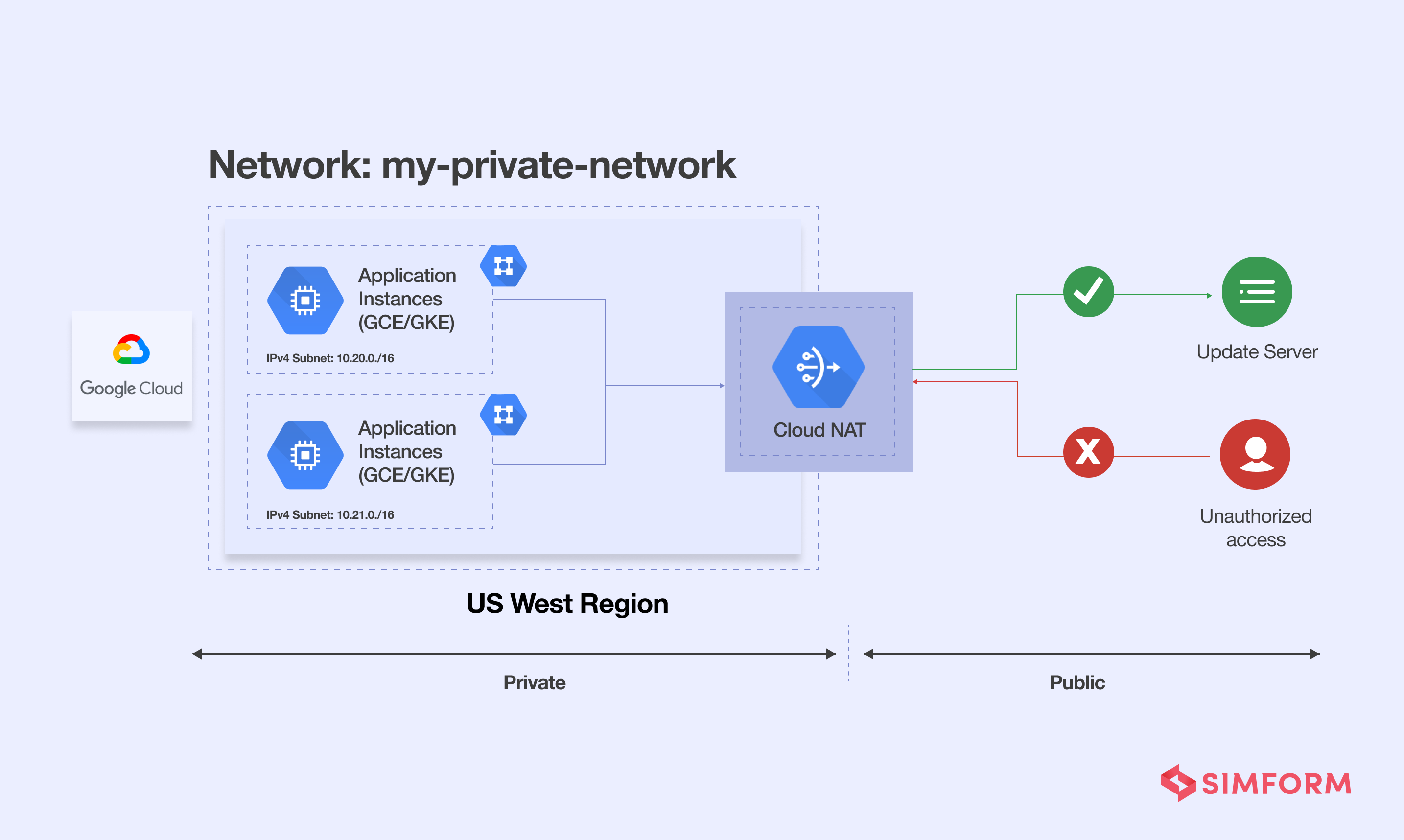

Google Kubernetes Engine introduced NaC-based features with containers.

GKE is a managed Kubernetes service that allows organizations to leverage the containerization approach. In addition, Google provides the Cloud NAT (Network Address Translation) service, which helps manage an organization’s IP addresses for economic and security purposes.

With GKE and NAT services, Google enables organizations to manage network configurations better. However, one of the significant challenges for network admins using Google’s VPC was managing the load on network endpoints. So, the search engine giant introduced a container-native load balancing feature.

It allows network administrators to program load balancers with network endpoints. These endpoints represent containers as IP address and port pairs through a Google abstraction known as Network Endpoint Groups (NEGs).
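
As a rough mental model, an endpoint group is a list of IP-and-port pairs that a load balancer can send traffic to directly. The sketch below assumes simple round-robin selection, which is not necessarily how Google's load balancers choose endpoints; the `Endpoint` and `EndpointGroup` names and the addresses are hypothetical.

```python
from itertools import cycle
from typing import NamedTuple


class Endpoint(NamedTuple):
    """One container, addressed directly by IP address and port."""
    ip: str
    port: int


class EndpointGroup:
    """Hypothetical group that hands out its endpoints in round-robin order."""

    def __init__(self, endpoints):
        self._endpoints = list(endpoints)
        if not self._endpoints:
            raise ValueError("an endpoint group needs at least one endpoint")
        self._rotation = cycle(self._endpoints)

    def next_endpoint(self) -> Endpoint:
        return next(self._rotation)


group = EndpointGroup([
    Endpoint("10.0.1.5", 8080),
    Endpoint("10.0.1.6", 8080),
    Endpoint("10.0.2.7", 8080),
])
picked = [group.next_endpoint().ip for _ in range(4)]
print(picked)
```

Targeting containers directly, rather than the nodes that host them, is what makes the load balancing "container-native" in this model.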

With NEGs, developers can load balance across cloud networks. Such an approach is ideal for operations with intensive data in transit, such as the transmission and capture of health data from IoT devices. Enterprise applications can also benefit from NaC and improve network security.

How does Simform help with reaping the benefits of containerization?

Containerization is a virtualization approach that helps organizations isolate their processes for higher flexibility. Simform engineers leverage the containerization approach to offer:

- Workload portability

- Enhanced app configurations

- Reliable architecture

- Robust network configuration

- Cloud-native capabilities

- Optimized DevOps implementation

So, if you are looking to improve release cycle time and have the flexibility of instant rollbacks for your applications, get in touch with us for expert containerization and orchestration consultations.