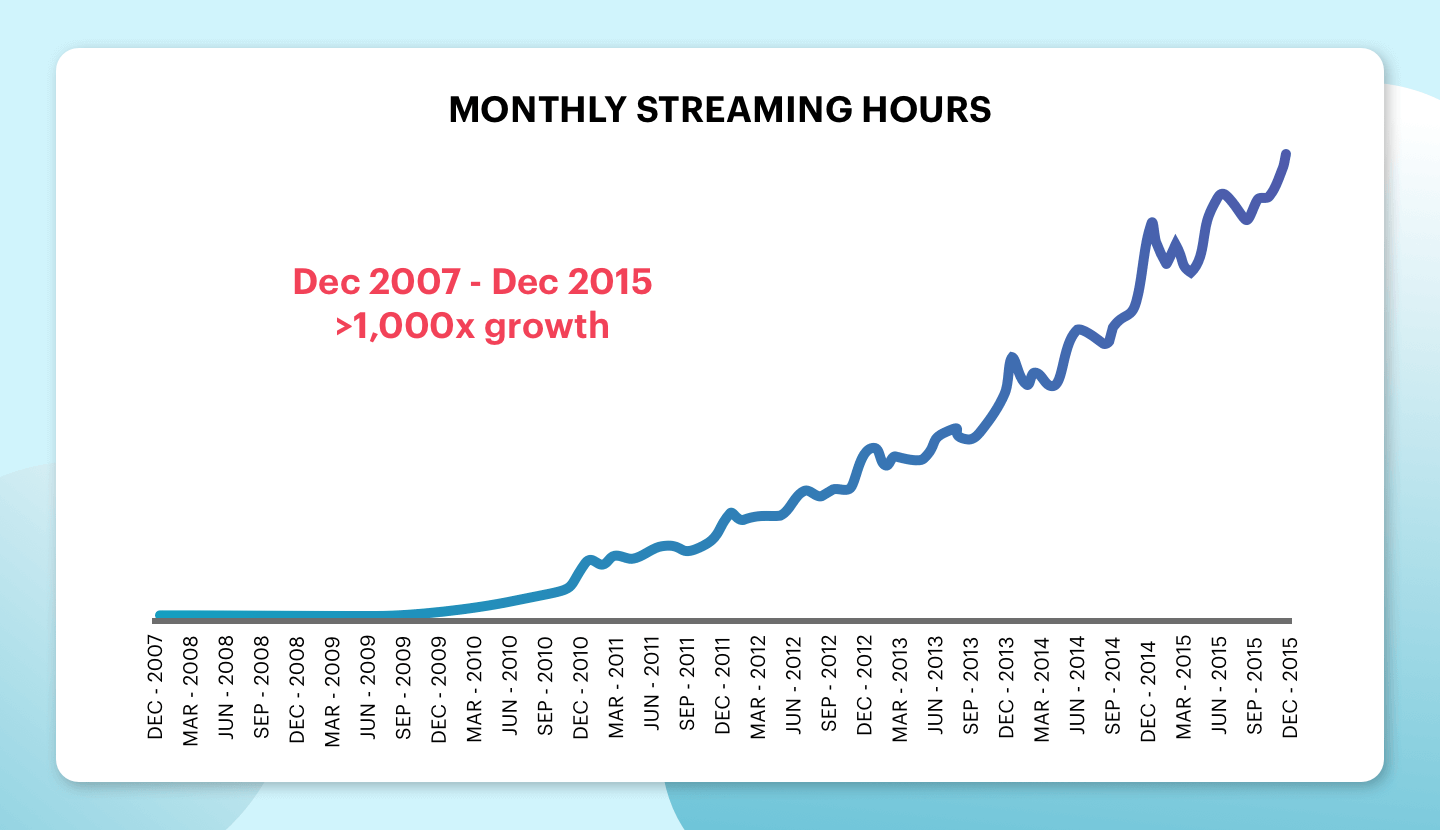

Back in 2008, Netflix was growing fast. It was running relational databases in its own data centers where a data center failure had shut the entire service down.

Since there were millions of customers who were generating enormous quantities of data, the company had to struggle to rack the servers in their own data centers. And, they had to do it fast enough to handle the ever-growing demand.

They made a choice to migrate their database to the cloud, which turned out to be a good decision.

“We have eight times as many streaming members than we did in 2008, and they are much more engaged, with overall viewing growing by three orders of magnitude in eight years,” wrote Yury Izrailevsky, former Vice President of Cloud and Platform Engineering.

Well, it becomes imperative for software businesses to adopt next-generation technologies for enhancing both efficiency and agility. Of all the critical tasks carried out during IT infrastructure upgrade initiatives, one thing that holds a lot of importance is database migration. If you are also planning to move your database, you would be keen to know how database migration works?

Editor’s note – The blog decodes the overview of database migration and if you’re at the stage where you are thinking of migrating your database and need an expert opinion, you can directly explore our custom software development services.

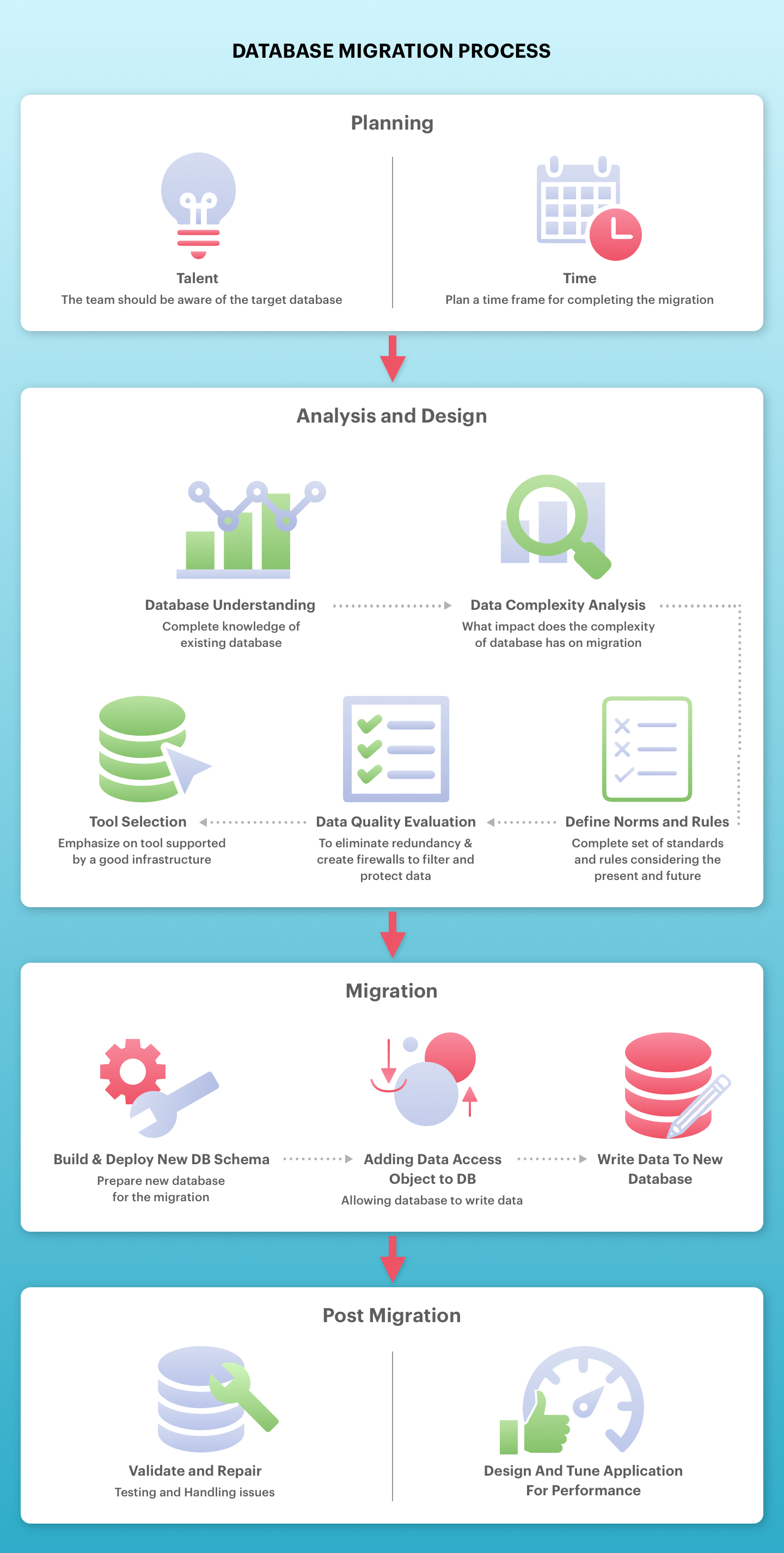

Here’s a quick look at what are the database migration steps

Let me walk you through the database migration process.

1. Pre-migration Steps and Planning

These steps should be taken before actually migrating the database, which will include the basic planning, structuring, understanding the requirements and finalizing the move.

- Plan your talent

Database migration can be a challenging task as there are many moving parts that you need to understand. Whether you are an expert with your current database engine, you are required to be an expert in the target database engine as well.

As you will be crossing the network – moving from one database to another – somebody from your team must be aware of the servers, ports and firewall rules.

As you already know, database migration is usually a part of a bigger application modernization project, which means the database migration will involve changes in the application code as well.

- Plan your time

Database migration usually requires refactoring of application and also the schema. These tasks are iterative and consume a lot of time.

The refactor process can take anywhere from a few weeks to several months depending upon how complex your application and its database are.

Further, you need to be sure about how much you can achieve in the stipulated time of migration. Even if everything is proper and done in a timely manner, data migration might be slower than anticipated. You should also be aware that planning usually takes more time than the actual migration.

2. Database Migration Analysis and Design

- Understanding the database

It is very important to understand the source database for the success of your migration project.

Let us suppose you have an 18-year-old database that is able to support the first version of your application, despite its first version being obsolete years ago. We can assume that your database has thousands of tables and dozens of schemas and still has stored procedures that you wrote for the company about a decade ago.

Well, you are lucky enough that it’s not that bad. But, have you ever tried to know about the size of your database?

You would be surprised to know that most of the customers are not able to answer this question. The size of the database is important when determining the correct approach to use your migration project. It also helps in estimating the time required to copy the whole data.

Complete knowledge of your database can help you define a migration project and help the data copy phase in a speedy manner.

Another important aspect to be taken care of is the size of tables in your database. For migration purposes, tables larger than 200GB and with a huge number of rows might end up being the long tail for the data migration. If the tables are partitioned, there are some tools that can load the data in parallel.

Let’s suppose that you have data about the size, schema and tables of the database to migrate. Now, the challenge arises if you wish to switch the database engines (for instance, from Oracle to PostgreSQL).

- Analyzing data complexity in the environment

One should examine and evaluate different forms of data needed to be migrated. Also, analyze the complexity of data and the impact it has. It can be seen that in such projects, there is an occurrence of unforeseen events.

Hence, success lies in the approach that you choose while dealing with these issues. Find data that needs to be migrated – where are they stored? How do they look when they will be stored at the destination database? How is the existing data and how will they be after the migration?

- Defining the norms

After assessing the data complexity, it would be good to define a complete set of standards. Setting standards will allow you to quickly identify the areas with the issues. This will also help you find out problems that can be avoided beforehand.

Further, with the passage of time, data evolves. This helps in establishing a set of rules and standards which can be very useful in making recommendations across various levels within the organization and supporting data consolidation, thereby, ensuring more successful use of data in the future.

- Define clear rules considering present and future

The rules applicable to your data migration must address the use of your future data after migration.

These rules should also be compatible with various other rules, for validating and managing your information system.

- Evaluation of data quality

It is recommended that a thorough data quality check needs to be carried out before migrating the data from one system to another.

This will help you in evaluating the existing data and creating firewalls to filter and protect the correct data, eliminating redundancy.

- Proper tool selection

It is very important to emphasize on tool selection for database migration. A good tool is supported by a robust infrastructure in which it is integrated.

It should be completely engrossed with the environment in which it shall be expressed. An ideal tool must offer flexibility and scalability, with the minimal technical expertise required. It should be intuitive to allow the technical staff and employees to work collaboratively.

3. Data Migration Implementation

This involves the steps that would let you know how to do the database migration.

- Building and deploying “new” database schema into production, where a new database has been deployed which can be used whenever you are ready. The existing system remains the same.

- Add a new data access object to your app that can write to your database. This might require you to refactor your application in order to have a single (or may be very few) point(s) in which you access the database.

At the point(s) where you access the database you add multi-state feature toggle that would allow you to control the flow of writing to the database. The very first state of this feature toggle is “use old database”. In such a case your code ignores the “new” database and simply uses the “old” one as always.

- Keep the primary database as the “old” one and start writing to the “new” database. This is because we are not 100% sure whether writing to two databases could be success or failure at the same time.

When your code performs a write operation, it first writes to the “old” database.

If it succeeds, it writes to the new database also. It should be noted in this step that the “old” database is in a consistent state whereas that of the “new” database can be inconsistent. This is because the writes to it can probably fail while the “old” database write succeeded.

It is good to let this step run for a while (several days or weeks) before moving to the next step. This will let you get an affirmation and confidence that the write path to your new code works as it is expected. Also, the “new” database is configured correctly with all the replications in place.

Further, if at any point of time you find out something is not working, you can simply change the feature toggle back to the previous state and stop writing to the “new” database. Since you still have your old database with all your data intact, you can easily make modifications to the new schema or even drop it if required.

4. Post Migration

Once you have migrated the database, there are certain steps that should be taken care of. These steps should be part of your database migration strategy as migration is a long process and you would not like to have any data losses.

Validation test and repair

It is very important to validate the migrated data. It is suggested to prepare precise test scenarios. This will help you to properly test the migrated data. Also, ensure that you document each step of the migration process and maintain a clear audit document to comply with regulatory compliance. This allows you to test the data in a proper manner.

Designing and tuning for performance

This step is very crucial for uncovering performance issues with the workload and reconcile any data accuracy and completeness. For example, when migrating from an older version to a newer version or upgrading the database compatibility level to the latest available, a workload may be exposed to the risk of performance regression.

How Big Companies Perform Database Migration?

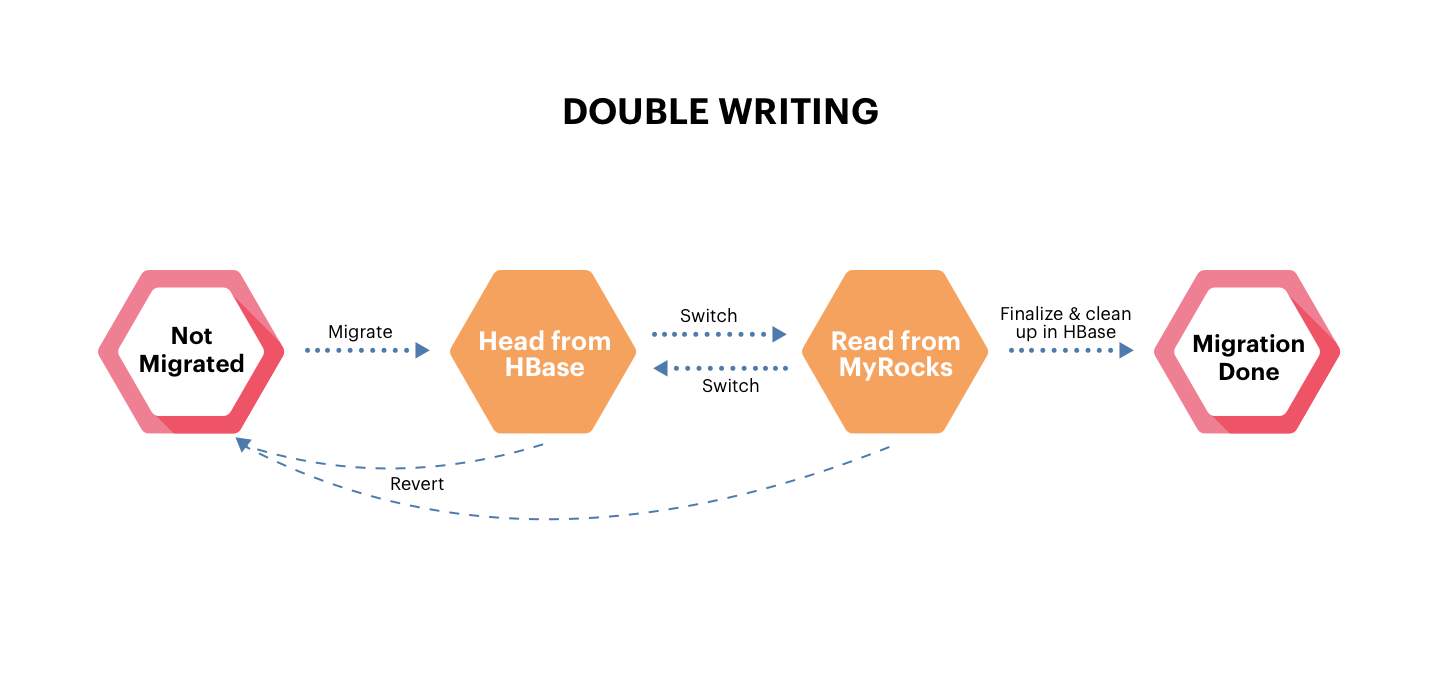

Facebook messenger’s database migration from HBase to MyRocks is a good example for understanding the complete process.

Messenger was originally designed to be a direct messaging tool similar to email. It gradually evolved as a mobile-first, communications system used by both the businesses and the individuals. This evolution involved several changes that are as follows:

- Data schema was redesigned, a new source of truth index was created from the existing data, and consistent invariants were made to make sure that the data is formatted correctly.

- Data was moved from HBase to MyRocks, which is Facebooks’ open-source database project that integrates RocksDB as a MySQL storage engine.

- Also, they were moved from storing the database on spinning disks to flash on the new lightning server

It was a big challenge to perform data migration between the storage systems while still keeping the Messenger up and running for a large number of accounts. Further, ensuring that the people using Messenger would have an uninterrupted experience during the migration process.

It was noticed that business users had many Messenger chat windows active simultaneously around the clock for tasks such as customer service, online orders from customers, updating the customers based on their requests or requirements.

This meant that the developers had to make changes in the code to support new features both in old and new systems. And this should not hamper the users’ activities when their accounts were moved to the new database.

Since data schema was to be changed, existing data needs to be carefully parsed such that people see exact same messages, videos, and photos as before.

Moreover, Facebook engineers, along with the migration, were also developing the service – designing and manufacturing Lightning flash servers at the same time. In that scenario, rolling out the new service would require fixing software, kernel, firmware, and even physical power path bugs.

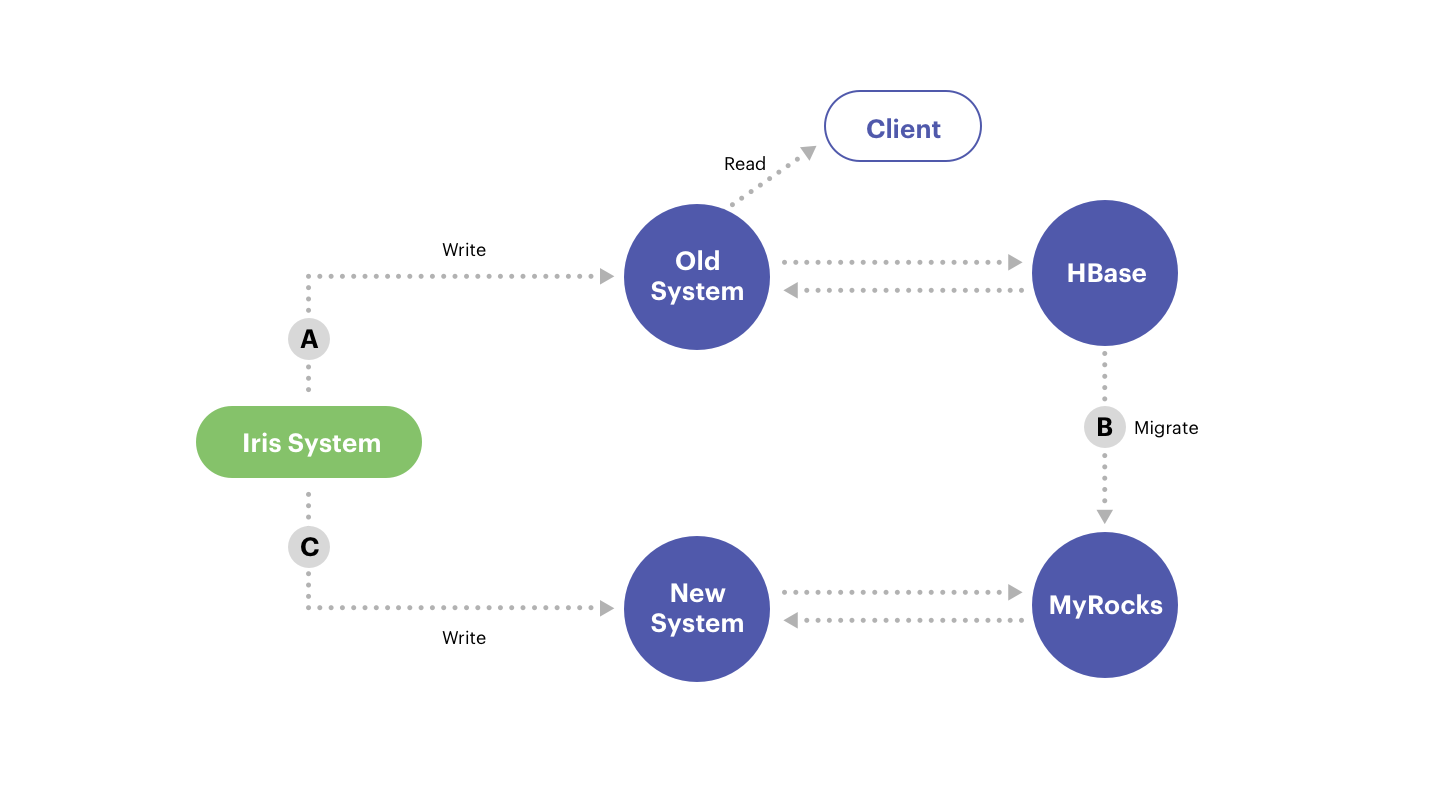

In order to address those challenges, two migration flows were designed for all the messenger accounts – normal flow and buffered migration flow. The normal flow covered 99.9% of all the accounts and the buffered flow covered the remaining, hard-to-migrate accounts.

The engineers did a thorough data validation, made a revert plan (in case of discrepancies, if any) and performed an accounting job to verify that they are not missing anything. When it was confirmed that all the accounts have been migrated to the new system, the old system was made offline.

Let’s see what happened when the actual migration took place

Normal Migration

Facebook engineers defined a state machine & developed monitoring tools. At any time for each account the state machine puts the account in any of the three states

– Static not migrated

– double write and done

– dynamic state i.e. actively undergoing migration

Migration process – data position in the old system was logged and migration to the new system started. The data was checked for its movement. If it didn’t move, the account was allowed to write the data to the new system and flagged as double write state. Else, flagged as fail.

The double-write step included data and API validation in order to ensure security.

The final step included account verification for successful migration. In case of issues there was a revert plan to rollback any account to non-migrated state.

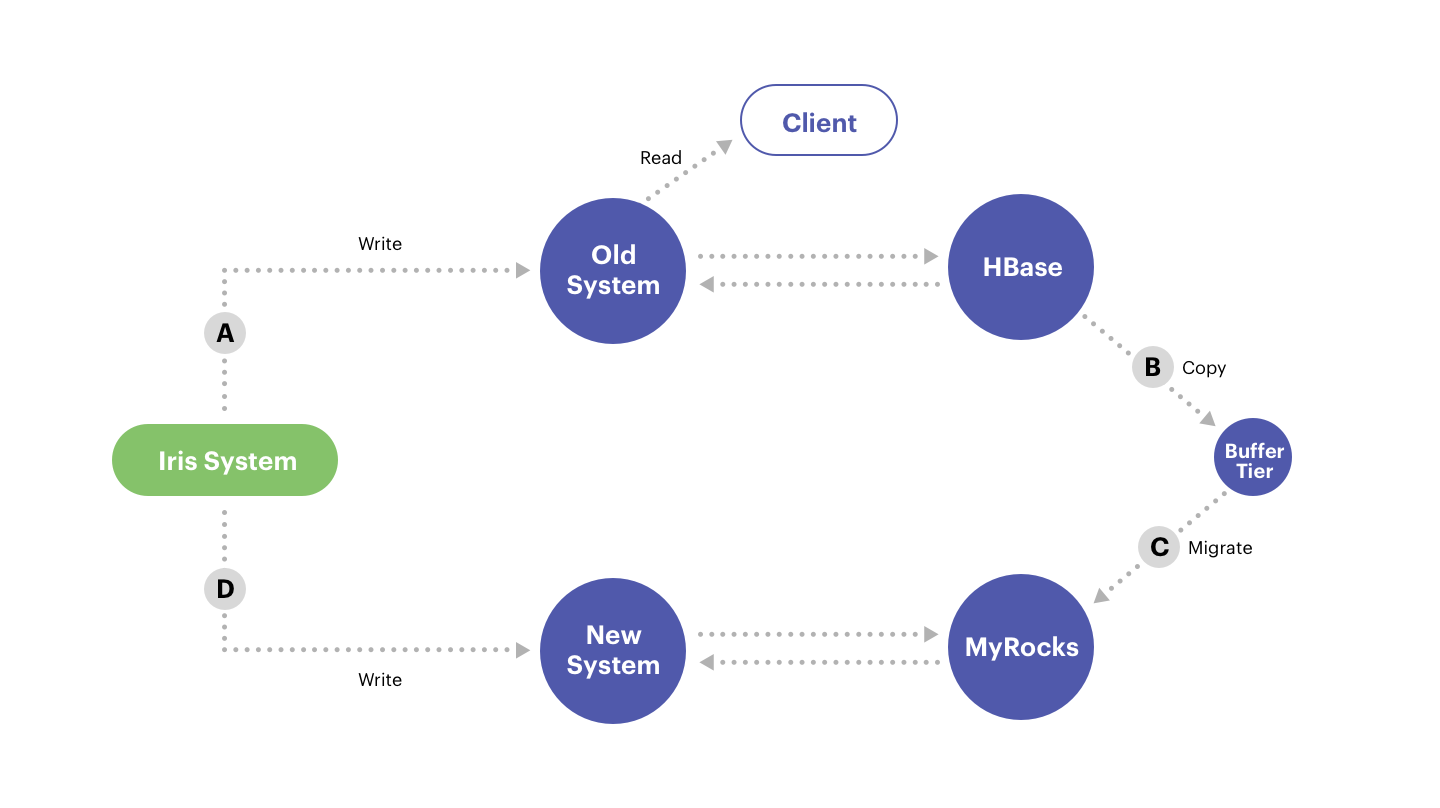

Buffered Migration Flow

Some of the accounts can’t be migrated in a normal way. For instance, a large business might be using a Messenger bot to serve its customers. Well, there is no window of time to perform the migration when new messages are coming in.

Further, these accounts might be much larger than is typical and therefore, the different flow was developed to migrate these users. Also, a cutoff time was set, called migration start time and then took a snapshot of accounts’ data at that moment. Then copied the snapshot to a buffer tier (usually taking 10% of the migration time). Then the data was migrated from the buffer tier to MyRocks.

Database Migration Best Practices

After understanding the nitty-gritty of database migration process in detail, let me tell you some of the best practices while migrating a database.

Back up before execution

This should be made a necessary step for the implementation goes wrong at any point in time, you have your data backed up. You can’t afford to lose your valuable data. Further, ensure that there are tested backup resources.

Cleaning the Old Database

It is good to clean up the old database so that you don’t have to carry the clutter to the new database. Transfer only those things that are required and not the junk to save your time.

Appointing Project Managers

To streamline the process, it is good to appoint a project manager. The project manager will help in the creation and implementation of the project plan. His work would also include designing materials so that he can update the departments about the activities.

Data Prioritization

It is a good practice to rank the priority of data on scale from must have to would be nice to have to no longer needed. This will help you migrate in stages as taking too long in the migration will hurt the business.

Following the above practices will reduce the pain of managing a complex data migration and give your project the best chance of success.

Conclusion

When it comes to actually performing database migration, it can be a very complicated task. Since there could be shards of the database across multiple servers, it might be difficult to migrate all the data at once. You might want to perform one step at a time in order to test the process. You could experience many bugs while performing the migration.

In my career, I have seen companies losing data because of making wrong choices in crucial matters like database migration.

We, at Simform, can help you with the database migration. We have skilled professionals dedicatedly serving the firms, which need assistance with database migration process. You can have everything at a single place – starting from consultation to analyzing the data to implementing migration and in the end performing data validation.

Database Migration becomes really easy if we have the right resources with us.

“Making good decisions is a crucial skill at every level.”

Your thoughts are welcome in the comments below.