Finally, operations teams are finding some relief from hearing “but it works in my environment”! How? With the arrival of containers, which simplified deploying applications across multiple environments and became a mainstay of application development. DevOps teams also welcomed the resource efficiency and speed that containers brought along.

However, containerization is easier said than done when there are many containerized applications. As the number of containers grows, so does the complexity of managing them effectively. This is where container orchestration comes into play.

In this article, you’ll learn the fundamentals of container orchestration, its key benefits, best practices for optimization, popular tools your organization can consider as it scales, and some example use cases.

We frequently use Kubernetes as an example throughout the article, as it is one of the most widely used container orchestration platforms.

What is container orchestration?

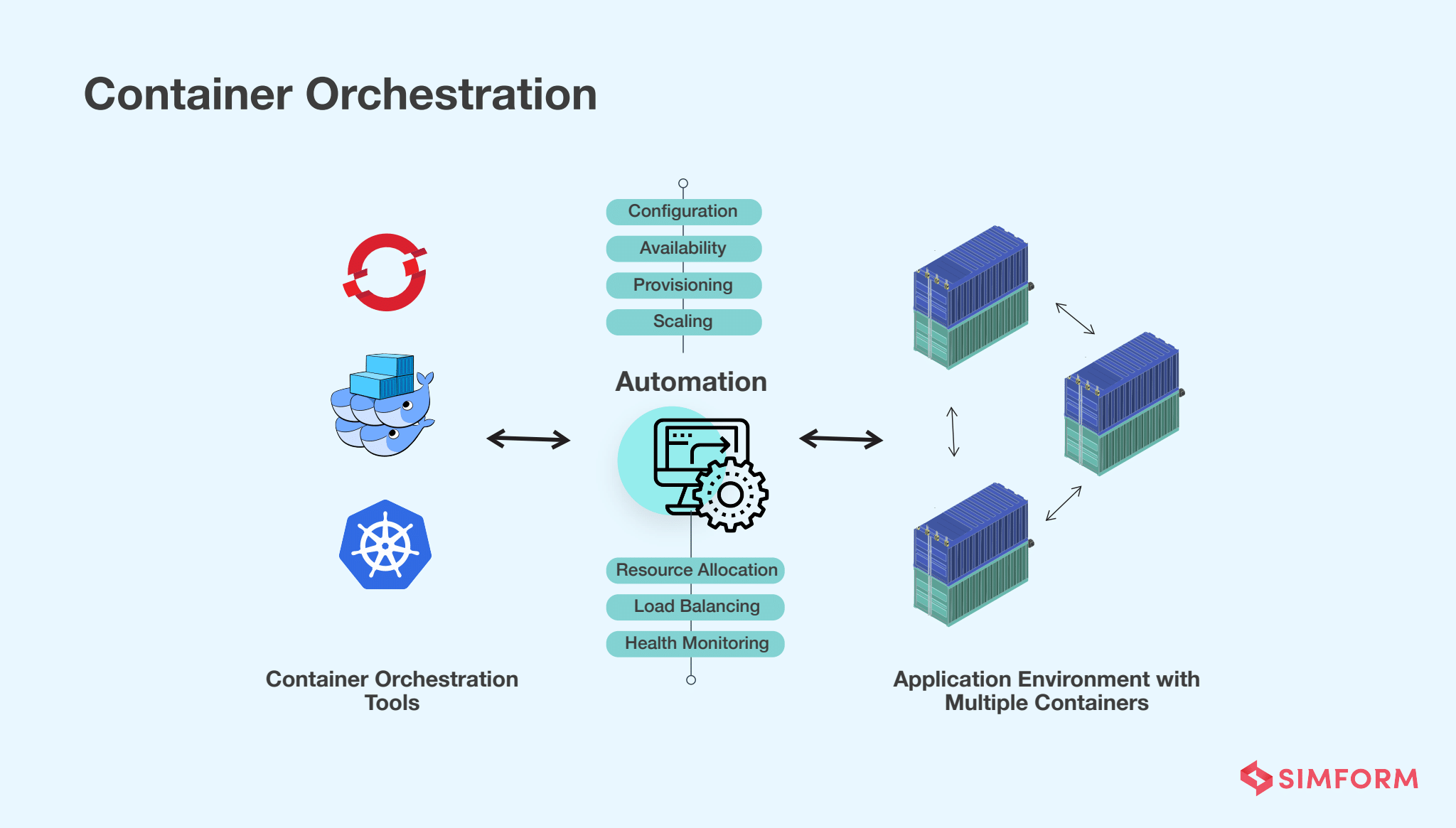

Container orchestration is the process of automating the operational effort required to run containerized workloads and services. It automates various aspects of the containers’ lifecycle, including provisioning, deployment, scaling, networking, load balancing, traffic routing, and more.

By automating these tasks, container orchestration platforms simplify the management of containers at scale and ensure their efficient operation within a distributed environment. These platforms can also automatically spin containers up, suspend them, or shut them down when needed.
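The core idea behind this automation is a control loop: the orchestrator keeps comparing the desired state (for example, “run three copies of the web app”) with what is actually running, and starts or stops containers to close the gap. Below is a minimal Python sketch of that loop. The `Cluster` class and its methods are hypothetical, in-memory stand-ins for a real container runtime; real platforms such as Kubernetes run many such loops (controllers) with far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical in-memory stand-in for a container runtime."""
    running: dict = field(default_factory=dict)  # app name -> list of container IDs
    next_id: int = 0

    def start_container(self, app: str) -> str:
        container_id = f"{app}-{self.next_id}"
        self.next_id += 1
        self.running.setdefault(app, []).append(container_id)
        return container_id

    def stop_container(self, app: str) -> str:
        # Stop the most recently started container of this app.
        return self.running[app].pop()


def reconcile(cluster: Cluster, desired: dict) -> list:
    """One pass of the control loop: move actual state toward desired state."""
    actions = []
    for app, want in desired.items():
        have = len(cluster.running.get(app, []))
        for _ in range(want - have):  # too few running: spin containers up
            actions.append(("start", cluster.start_container(app)))
        for _ in range(have - want):  # too many running: shut containers down
            actions.append(("stop", cluster.stop_container(app)))
    return actions


cluster = Cluster()
print(reconcile(cluster, {"web": 3}))  # starts web-0, web-1, web-2
print(reconcile(cluster, {"web": 1}))  # stops web-2, then web-1
print(reconcile(cluster, {"web": 1}))  # already converged: []
```

Running `reconcile` again with an unchanged desired state does nothing, which is why an orchestrator can safely repeat the loop continuously. Apps missing from `desired` are simply left alone in this sketch.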

Container orchestration plays a vital role in enabling automation and efficiency in various scenarios, including multi-cloud and microservices architectures.

Multi-cloud container orchestration

Growing business requirements are driving more companies to adopt a multi-cloud approach to take advantage of diverse services. That calls for a mechanism to deploy and move apps across different cloud platforms reliably, and containers provide exactly that portability while unlocking efficiencies. This new requirement, in turn, calls for more powerful resource orchestration mechanisms that can deal with the heterogeneity of the underlying cloud resources and services.

Container orchestration also enables containers to operate across multi-cloud infrastructure environments. In that sense, container orchestration complements a multi-cloud environment well and can accelerate digital transformation initiatives.

Container orchestration with microservices

A microservices architecture does not explicitly require containers. However, most organizations running microservices architectures find containers the most practical way to implement their applications.

Two characteristics of containers help reduce overhead if your organization runs microservices applications in cloud environments:

- Fine-grained execution environments

- The ability to run multiple application components on the same OS (operating system) instance

Microservices architecture and containers go hand in hand because:

- Microservices provide a structure for dividing a larger application into individual services.

- Containers, meanwhile, make it easy to package and release those microservices.

Why do we need container orchestration?

Suppose you are the administrator managing the deployment, scaling, and security of ten applications running on a single server. It might not be too difficult if all the applications are written in the same language and were originally developed on the same OS. But what if you had to scale to hundreds or thousands of deployments, and move them between local servers and cloud providers?

You wouldn’t know which hosts are overutilized, nor could you easily roll out updates or rollbacks across all of your applications. Without an orchestrator, you’d have to build your own load balancers and manage your own services and service discovery.

In the real world, even a single small application is likely to have dozens of containers, and an enterprise might deploy thousands of containers across its apps and services. The more containers you have, the more time and resources you must spend managing them. A container orchestrator can perform these critical lifecycle management tasks with little human intervention, in a fraction of the time.

Container orchestration mediates between the apps or services and the container runtimes, performing service management, resource management, and scheduling.

Here are some of the prime benefits.

Business benefits of container orchestration

Automation, health monitoring of containers, and container lifecycle management are the clear technical advantages of container orchestration that we’ve already seen. Now let’s look at the business benefits.

1) Efficient resource management

With container orchestration, systems can expand and contract as demand changes, so processing and memory resources are used more efficiently.

2) Cost savings

Container orchestration lets teams build and manage complex systems with less human capital and time, which saves costs.

3) Greater agility

Fast, straightforward management and deployment of applications enable organizations to respond quickly to changing conditions or requirements.

4) Simplified deployments

Modular design and easily repeatable building blocks of container-based systems enable rapid deployment, which is especially beneficial for larger organizations.

5) Interoperability

Containerized applications do not have to be rewritten when your environment changes significantly, for example, when you first deploy on the AWS (Amazon Web Services) public cloud and, down the road, run some services in a private cloud environment.

6) Easy scaling of applications and infrastructure

Because everything the application needs resides within its containers, installation is simple. So is scaling, since container orchestration makes it easy to set up new instances.

7) Ideal for microservices architecture

As discussed earlier, the combination of microservices with containers and orchestrators is like a match made in heaven. Container orchestration provides a perfect framework for managing large, dynamic environments comprising many microservices.

8) Improved governance and security

Containers let applications run in isolation from one another and from the host machine, which reduces application security risks and improves governance.

How does container orchestration work?

The ultimate aim of an orchestration tool is to automate every aspect of application management, from initial placement to deployment and from scaling to health monitoring. Although each tool has its own methodology and capabilities, container orchestration systems generally follow three basic steps.

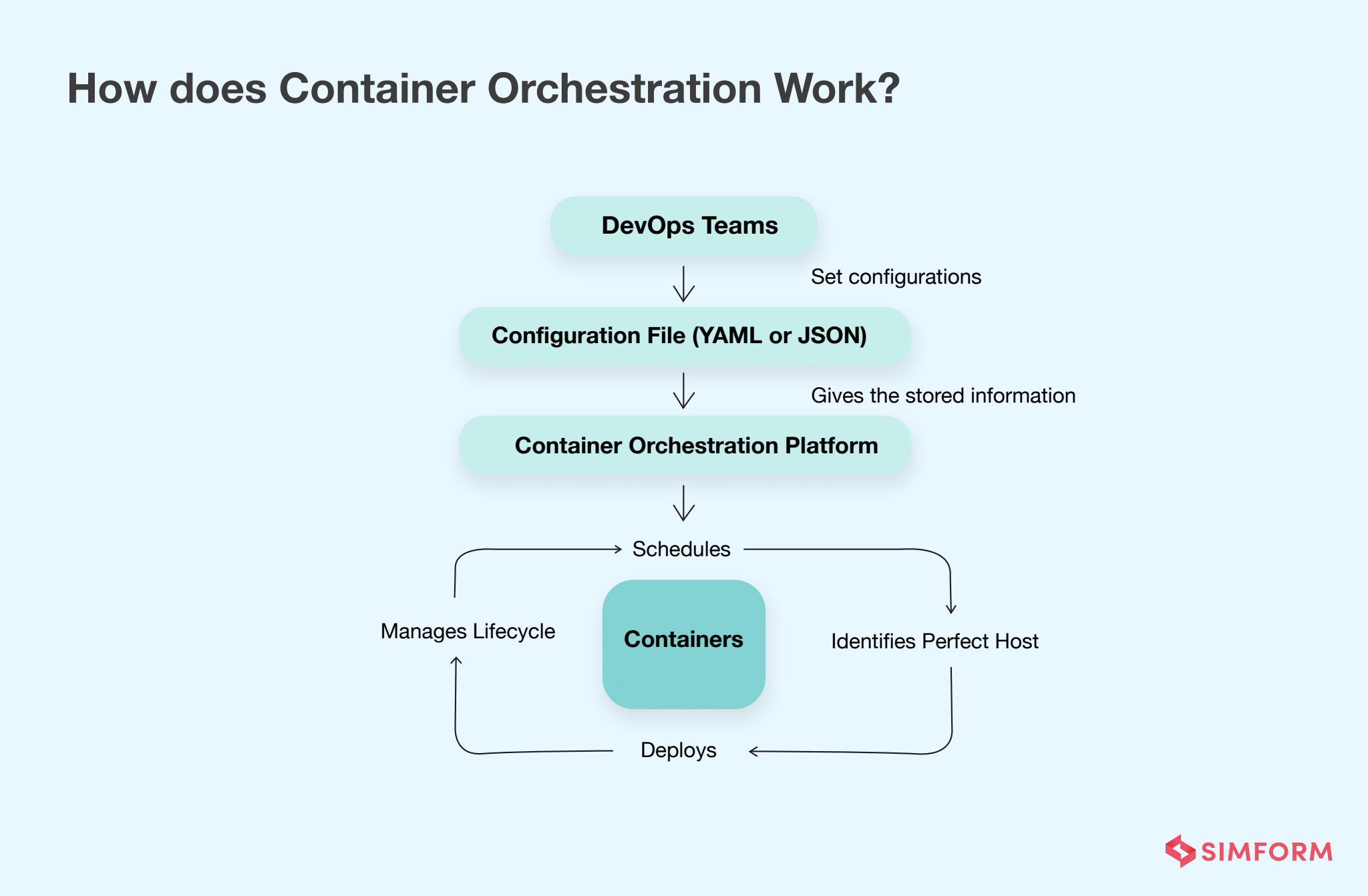

1) Set configurations

Most container orchestration platforms support a declarative configuration model. Among other things, the orchestrator needs to know exactly where the container images are located. DevOps teams declare the blueprint for an application’s configuration and workloads in a standard schema, typically written as a YAML or JSON file.

This configuration file generally carries essential information about how to mount storage volumes, set up container networking (ports), and handle the logs that support the workload. In short, this configuration file:

- Defines the desired configuration state

- Provides storage to the containers

- Defines which container images make up the application, and where they are located (that is, in which registry)

- Establishes secured network connections between containers

- Specifies versioning for phased and canary rollouts

The configuration files are kept under version control and branched as needed, so the orchestration tool can apply the exact same configuration multiple times and always get the same result on the target system.
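As a rough illustration, the sketch below builds a Kubernetes-style `Deployment` manifest as a Python dictionary and prints it as JSON (`kubectl apply -f` accepts JSON as well as YAML). It covers several items from the list above: the desired replica count, the image and its registry location, a container port, a mounted volume, and rolling-update settings for phased rollouts. The app name, image path `registry.example.com/shop/web:1.4.2`, port, and volume are hypothetical, and the `apply` function is a toy stand-in for an orchestrator's API server, included only to show that applying the same configuration twice yields the same state.

```python
import json


def web_deployment(image: str, replicas: int) -> dict:
    """Build a Kubernetes-style Deployment manifest (hypothetical app values)."""
    labels = {"app": "web"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web", "labels": labels},
        "spec": {
            "replicas": replicas,  # desired state: number of pod replicas
            "selector": {"matchLabels": labels},
            "strategy": {  # phased rollout: start one new pod before removing an old one
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "web",
                        "image": image,  # which image, and in which registry
                        "ports": [{"containerPort": 8080}],
                        "volumeMounts": [{"name": "cache", "mountPath": "/cache"}],
                    }],
                    "volumes": [{"name": "cache", "emptyDir": {}}],  # storage for the container
                },
            },
        },
    }


def apply(store: dict, manifest: dict) -> dict:
    """Toy 'apply': record the manifest as desired state, keyed by kind and name."""
    key = (manifest["kind"], manifest["metadata"]["name"])
    store[key] = json.loads(json.dumps(manifest))  # store an independent copy
    return store


manifest = web_deployment("registry.example.com/shop/web:1.4.2", replicas=3)
print(json.dumps(manifest, indent=2))  # could be saved to a version-controlled web.json

store = {}
apply(store, manifest)
snapshot = json.loads(json.dumps(store[("Deployment", "web")]))
apply(store, manifest)
print(store[("Deployment", "web")] == snapshot)  # True: same config, same result
```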

This step is also essential for enabling the teams to deploy the same application across different development and test environments before it goes into production.

2) Deploy containers

The next step is deploying the containers to their respective hosts. Take Kubernetes as an example: a pod is the smallest deployable unit in Kubernetes, and a set number of pod replicas run at any given time. This makes the application more resilient, so it can withstand inevitable failures and stay available to end users.

When a new container needs to be deployed to the cluster, the container orchestration platform schedules it and identifies an appropriate host based on the requirements or constraints specified in the configuration file. Many conditions can be predefined, such as placing containers based on memory availability, metadata, user-defined labels, or available CPU capacity.
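Placement is often described as two phases: filter out hosts that cannot run the container, then score the remaining hosts and pick the best one. The Kubernetes scheduler, for instance, follows this filtering-and-scoring pattern, though with many more plugins. The following Python sketch is a simplified, hypothetical version; the node names, labels, and the “most CPU headroom wins” scoring policy are assumptions for illustration only.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Node:
    name: str
    free_cpu: float      # unallocated CPU cores
    free_mem_gib: float  # unallocated memory
    labels: dict = field(default_factory=dict)


@dataclass
class ContainerRequest:
    cpu: float
    mem_gib: float
    node_selector: dict = field(default_factory=dict)  # labels the host must carry


def feasible(node: Node, req: ContainerRequest) -> bool:
    """Filter: enough free CPU and memory, and every required label matches."""
    labels_ok = all(node.labels.get(k) == v for k, v in req.node_selector.items())
    return labels_ok and node.free_cpu >= req.cpu and node.free_mem_gib >= req.mem_gib


def score(node: Node, req: ContainerRequest) -> Tuple[float, float]:
    """Score (one possible policy): most CPU left after placement, then most memory."""
    return (node.free_cpu - req.cpu, node.free_mem_gib - req.mem_gib)


def schedule(nodes: List[Node], req: ContainerRequest) -> Optional[str]:
    candidates = [n for n in nodes if feasible(n, req)]
    if not candidates:
        return None  # unschedulable: wait for capacity or add nodes
    best = max(candidates, key=lambda n: score(n, req))
    best.free_cpu -= req.cpu  # reserve resources on the chosen host
    best.free_mem_gib -= req.mem_gib
    return best.name


nodes = [
    Node("node-a", free_cpu=2.0, free_mem_gib=4.0, labels={"disk": "ssd"}),
    Node("node-b", free_cpu=8.0, free_mem_gib=16.0, labels={"disk": "hdd"}),
    Node("node-c", free_cpu=4.0, free_mem_gib=8.0, labels={"disk": "ssd"}),
]
print(schedule(nodes, ContainerRequest(cpu=1.0, mem_gib=2.0, node_selector={"disk": "ssd"})))
# → node-c
```

Here `node-a` and `node-c` pass the filter because they carry the `disk=ssd` label and have enough CPU and memory; `node-c` wins because it would have the most CPU left after placement. A bin-packing policy that prefers the fullest feasible node is an equally valid choice when the goal is to free up whole machines.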

3) Manage the lifecycle of containers

After your container is up and running, the container orchestration tool manages its lifecycle based on the specifications defined in the configuration file.

This includes:

- Scaling vertically or horizontally, spreading the application load across the host infrastructure, and allocating resources among containers. Load balancing is crucial for peak performance and traffic management, particularly for container-based applications built on a microservices architecture.

- Moving containers from one host to another if a host dies or there is an outage or shortage of resources. This ensures high availability and performance.

- Collecting and storing the log data and telemetry used to monitor the performance and health of the application.
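For horizontal scaling, the Kubernetes Horizontal Pod Autoscaler (HPA) documents a simple proportional rule: desired replicas = ceil(current replicas × current metric value / target metric value). The Python sketch below applies that rule with a tolerance band (the HPA's default tolerance is 10%), so small fluctuations don't trigger scaling, and clamps the result to minimum and maximum replica counts. The bounds and metric values in the examples are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula:
    desired = ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect configured bounds


# Metric values are, e.g., average CPU utilization (%) per replica vs. a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 4 * 1.5 -> 6
print(desired_replicas(4, current_metric=63, target_metric=60))   # within 10% -> stays 4
print(desired_replicas(6, current_metric=30, target_metric=60))   # 6 * 0.5 -> 3
print(desired_replicas(8, current_metric=300, target_metric=60))  # 40, capped at 10
```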

Best practices for optimizing container orchestration

Many organizations see containerization and container orchestration as the logical next steps after implementing DevOps. However, although containers are lightweight and portable, they are not always easy to use. Containerized workloads can be challenging to implement because of networking and security issues and the need for CI/CD pipelines that build container images.

Here are four best practices that should be part of your container management strategy to tackle the challenges of deploying containers in production environments.

1) Define a clear “development-to-production” path

Understanding the path from development to production is of utmost importance for any enterprise dealing with containers. When working with container orchestration, you should have a staging platform, a near replica of the production environment, to test code and updates and ensure quality before deployment.

Implementing DevOps best practices can help build monitoring and give developers:

- Access to pre-production environments

- The ability to run automated tests in build environments

Once the containers have proven stable, they can be promoted from staging to production. If the new deployment has issues, teams must be able to roll back, and in most cases that rollback should be automatic.
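The promotion-or-rollback decision can be automated by comparing the new release with the current one. The Python sketch below is one hypothetical policy, not a feature of any specific tool: it waits until the new version has served enough requests, rolls back if its error rate is clearly worse than the baseline, and otherwise promotes it. The 500-request minimum and the one-percentage-point error budget are illustrative assumptions you would tune for your own services.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def promotion_decision(baseline: ReleaseStats, candidate: ReleaseStats,
                       max_extra_error_rate: float = 0.01,
                       min_requests: int = 500) -> str:
    """Decide whether a new release moves on to production (hypothetical policy)."""
    if candidate.requests < min_requests:
        return "wait"      # not enough traffic yet to judge stability
    if candidate.error_rate > baseline.error_rate + max_extra_error_rate:
        return "rollback"  # clearly worse than the current version: revert automatically
    return "promote"


current = ReleaseStats(requests=10_000, errors=20)                    # 0.2% errors
print(promotion_decision(current, ReleaseStats(1_000, 3)))   # promote (0.3% errors)
print(promotion_decision(current, ReleaseStats(1_000, 40)))  # rollback (4% errors)
print(promotion_decision(current, ReleaseStats(100, 0)))     # wait (too little traffic)
```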

2) Have a proper monitoring solution

When development and operations are linked through DevOps practices, it is even more essential to have automatic reports of issues with containers going into production, so the technical team can resolve problems in time.

Several monitoring and management tools are available to monitor containers, whether on-premises or in the cloud. Insight into metrics, logs, and traces gives operators of containerized platforms many benefits. These tools enable businesses to collect detailed data and identify weak points or trends in the container management process. They can also take automatic actions based on their findings. For instance, in case of a network error, the tool can shut down the hub originating the errors and avoid a total outage.
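Automatic actions usually should not fire on a single failed check. The Python sketch below turns a stream of health-check results into actions, alerting on isolated failures and acting only after several consecutive ones. It mirrors the idea behind Kubernetes liveness probes, whose `failureThreshold` setting (3 by default) controls how many consecutive failures trigger a container restart; the `watch` function and its action labels are hypothetical.

```python
from typing import Iterable, List


def watch(probe_results: Iterable[bool], failure_threshold: int = 3) -> List[str]:
    """Turn a stream of health-check results into automatic actions.

    Isolated failures only raise an alert; `failure_threshold` consecutive
    failures trigger a corrective action, which avoids reacting to one-off blips.
    """
    actions = []
    consecutive_failures = 0
    for healthy in probe_results:
        if healthy:
            consecutive_failures = 0
            actions.append("ok")
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            actions.append("restart")  # e.g., restart or isolate the failing container
            consecutive_failures = 0
        else:
            actions.append("alert")    # report the issue and keep watching
    return actions


print(watch([True, False, True, False, False, False, True]))
# → ['ok', 'alert', 'ok', 'alert', 'alert', 'restart', 'ok']
```

The single failure in the second check only raises an alert; three failures in a row trigger the restart, after which the counter resets.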

3) Prioritize backup and recovery

Early on, data is often stored in the same container where the app runs. As the application’s user base grows, teams typically move to external databases, which may or may not be container-based. Regardless of where the data resides, it is crucial to keep copies in secondary, independent storage systems.

Even though most public clouds have built-in disaster recovery mechanisms, data can still be corrupted or accidentally deleted. So you need well-defined, workable, and adequately tested data recovery mechanisms. Security controls must also be established for appropriate access (based on the customer’s policies).

4) Create a roadmap for large-scale container deployments

Planning capacity requirements for production is a key practice for on-premises and public cloud-based systems. The development team needs to consider the following recommendations when planning for production capacity.

- Learn everything about the containers currently in production, and assess their growth potential over the next five years to anticipate capacity issues.

- Model capacity requirements for the required infrastructure, including servers, network, storage, and databases, for the present, the near future, and the long term.

- Understand how containers, container orchestration, and other supporting systems like databases are interrelated and their impact on capacity.
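The capacity recommendations above come down to some simple arithmetic: project the container count forward with an assumed growth rate, then size the cluster so that neither CPU nor memory exceeds a target utilization. In the Python sketch below, every input (400 containers today, 30% annual growth, 0.5 CPU and 1 GiB per container, 16-core/64 GiB nodes, 70% target utilization) is a hypothetical assumption; substitute measurements from your own environment.

```python
import math


def nodes_needed(containers: float, cpu_per_container: float, mem_per_container_gib: float,
                 node_cpu: float, node_mem_gib: float, target_utilization: float = 0.7) -> int:
    """Nodes required so that neither CPU nor memory exceeds the target utilization."""
    by_cpu = containers * cpu_per_container / (node_cpu * target_utilization)
    by_mem = containers * mem_per_container_gib / (node_mem_gib * target_utilization)
    return math.ceil(max(by_cpu, by_mem))  # the scarcer resource decides


def capacity_plan(current_containers: int, annual_growth: float, years: int, **sizing) -> list:
    """Project the container count year by year with compound growth and size the cluster."""
    plan = []
    for year in range(years + 1):
        containers = current_containers * (1 + annual_growth) ** year
        plan.append((year, math.ceil(containers), nodes_needed(containers, **sizing)))
    return plan


# Hypothetical inputs: 400 containers today, 30% yearly growth, 5-year horizon.
for year, containers, nodes in capacity_plan(
        400, annual_growth=0.30, years=5,
        cpu_per_container=0.5, mem_per_container_gib=1.0,
        node_cpu=16, node_mem_gib=64):
    print(f"year {year}: ~{containers} containers -> {nodes} nodes")
```

With these numbers, CPU rather than memory is the binding constraint, which is exactly the kind of insight the modeling step is meant to surface.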

Container orchestration platforms: Who are the market leaders?

A container orchestration platform provides the tools to orchestrate containers and reduce operational workload. These platforms also have a number of pluggable points where you can use key open-source technologies like Prometheus and Istio. This way, you can add capabilities such as logging and analytics and observe the entire service mesh to see how your services communicate with one another.

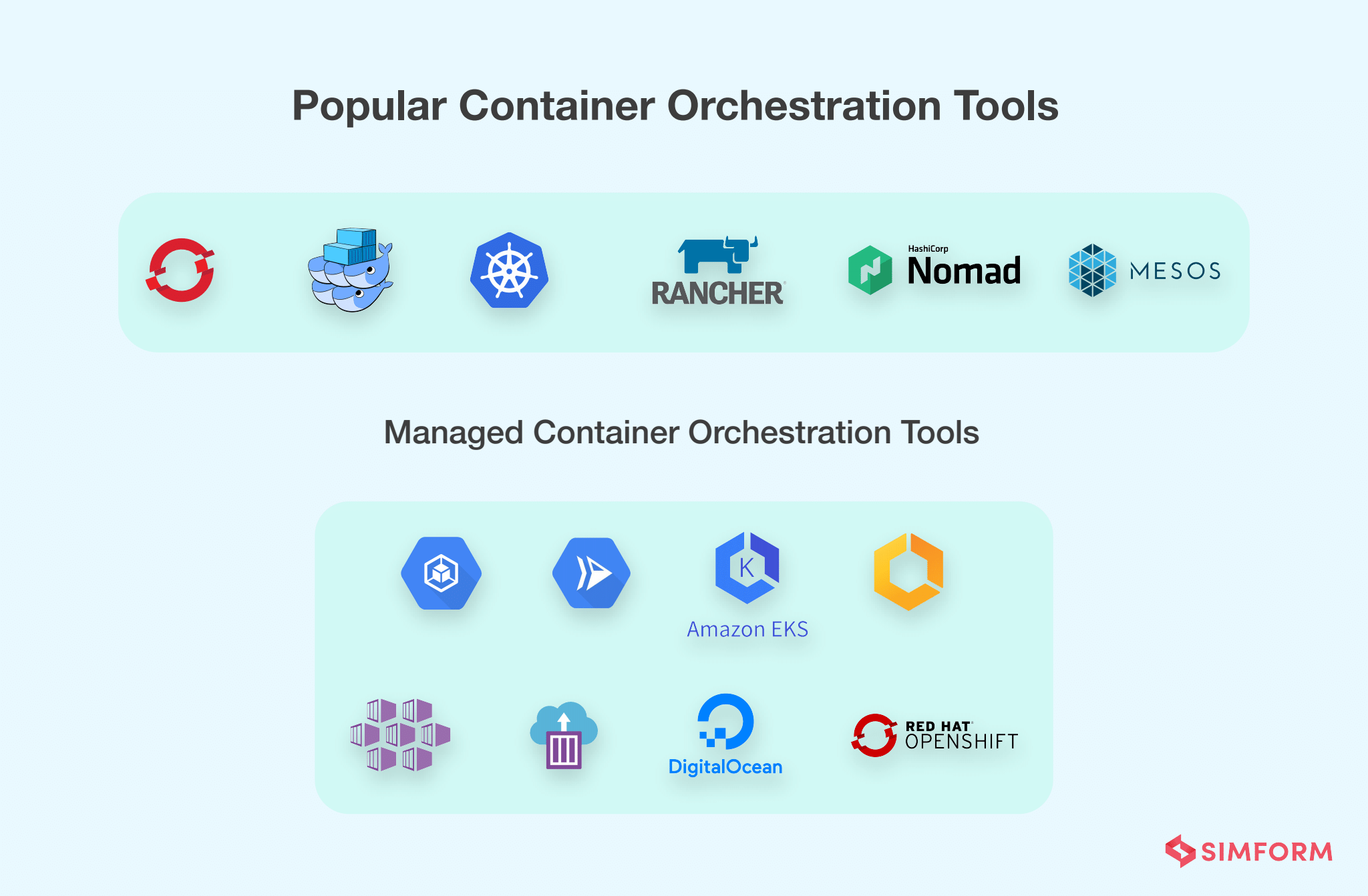

There are generally two options for container orchestration platforms.

The first option is self-built, where you set up and run the container orchestration platform entirely yourself. You can install and configure open-source platforms, enjoying complete control over the platform and customizing it to your specific needs.

The second option is a managed platform, where the cloud provider handles installation and operations while you use its capabilities to manage and run containers. Examples of platforms offering Kubernetes-as-a-Service include Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), Amazon Elastic Kubernetes Service (EKS), IBM Cloud Kubernetes Service, and Red Hat OpenShift Container Platform.

Each container orchestration platform has its own set of key features. Beyond selecting the platform, you may need a few additional components to build a complete infrastructure. For instance, you’d need an image registry to store your container images, and you may need to implement a load balancer if the platform does not manage one.

Many container orchestration tools let you simply state the desired outcome and have the platform fulfill it. Let’s look at three widely adopted toolchains that solve different problems and are rooted in very different contexts.

Kubernetes: Leader of the pack

Kubernetes, an open-source container orchestration system, offers a simple, declarative model for building application services with multiple containers, scheduling, scaling, and managing health checks. Originally developed at Google, it lets developers declare the desired state through YAML files, as mentioned earlier.

With Kubernetes, developers and operators can deliver cloud services either as Infrastructure-as-a-Service (IaaS) or Platform-as-a-Service (PaaS). Kubernetes is supported by major cloud providers like AWS, Microsoft, and IBM. Despite its complexity, Kubernetes is widely used for its flexibility, especially among large enterprises that emphasize a DevOps approach.

Advantages

- Reduced development and release timeframes

- Optimized IT costs

- Flexibility in multi-cloud and hybrid cloud environments

- Built-in self-healing and auto-scaling capabilities

Challenges

- Managing and scaling containers across cloud providers can be challenging.

- Other issues related to container security, storage, logging, and monitoring

Docker Swarm: Easy cluster creation with powerful container scheduling

Docker Swarm is an easy-to-use orchestration tool that is native to the Docker platform and built by the Docker team and community. Although it is less extensible than Kubernetes, it has been quite popular among developers who prefer simplicity and fast deployments. Docker also offers integration with Kubernetes, giving organizations access to Kubernetes’ more extensive features.

Advantages

- A low learning curve

- You do not need to re-architect your app to adapt to other container orchestrators. You can use Docker images and configurations with no changes and deploy them at scale.

- Ideal for smaller deployments

- Automated load balancing across the containers of a service

Challenges

- Limited options for connecting containers to storage, which makes storage-related issues less user-friendly to handle

- Less robust automation capabilities
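To illustrate the automated load balancing listed among Swarm’s advantages, here is a toy round-robin balancer in Python that hands each request to the next healthy replica. It shows the general idea only and is not how Swarm’s routing mesh is implemented; the replica names are hypothetical.

```python
class RoundRobinBalancer:
    """Toy load balancer: sends each request to the next healthy replica in turn."""

    def __init__(self, replicas):
        self.replicas = list(replicas)
        self.healthy = set(self.replicas)
        self._index = 0

    def mark_down(self, replica: str) -> None:
        self.healthy.discard(replica)

    def mark_up(self, replica: str) -> None:
        self.healthy.add(replica)

    def route(self) -> str:
        # Try each replica at most once per call, skipping unhealthy ones.
        for _ in range(len(self.replicas)):
            replica = self.replicas[self._index % len(self.replicas)]
            self._index += 1
            if replica in self.healthy:
                return replica
        raise RuntimeError("no healthy replicas available")


lb = RoundRobinBalancer(["web.1", "web.2", "web.3"])
print([lb.route() for _ in range(4)])  # ['web.1', 'web.2', 'web.3', 'web.1']
lb.mark_down("web.3")
print([lb.route() for _ in range(3)])  # ['web.2', 'web.1', 'web.2']
```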

Apache Mesos: Complex yet inherently flexible

Apache Mesos, which predates Kubernetes, is an open-source cluster manager. Its lightweight interface lets it scale to more than 10,000 nodes and allows the frameworks running on top of it to evolve independently. Mesos’ APIs support popular languages like C++, Java, and Python.

However, Mesos only manages the cluster; it is not actually a container orchestration system itself. That is why frameworks such as DC/OS and Marathon have been built on top of it.
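Mesos splits scheduling into two levels: the Mesos master offers available resources on its agents to frameworks, and each framework (such as Marathon) decides which offers to accept and which tasks to launch on them. The Python sketch below models only the framework side of that exchange, greatly simplified; the `Offer` and `Task` types, the agent names, and the first-fit matching strategy are hypothetical and do not reflect Mesos’ actual scheduler API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Offer:
    agent: str  # machine whose spare resources are being offered
    cpus: float
    mem_mb: int


@dataclass
class Task:
    name: str
    cpus: float
    mem_mb: int


def handle_offers(offers: List[Offer], pending: List[Task]) -> Tuple[list, List[Task]]:
    """Framework side of two-level scheduling, using first-fit matching.

    The cluster manager decides which resources to offer; the framework decides
    which tasks to launch on them. Unused resources are treated as declined here.
    """
    launched = []
    remaining = list(pending)
    for offer in offers:
        cpus, mem = offer.cpus, offer.mem_mb
        still_pending = []
        for task in remaining:
            if task.cpus <= cpus and task.mem_mb <= mem:
                launched.append((task.name, offer.agent))
                cpus -= task.cpus
                mem -= task.mem_mb
            else:
                still_pending.append(task)
        remaining = still_pending
    return launched, remaining


offers = [Offer("agent-1", cpus=2.0, mem_mb=4096), Offer("agent-2", cpus=1.0, mem_mb=1024)]
tasks = [Task("api", 1.5, 2048), Task("worker", 1.0, 1024), Task("cron", 0.5, 512)]
launched, waiting = handle_offers(offers, tasks)
print(launched)  # [('api', 'agent-1'), ('cron', 'agent-1'), ('worker', 'agent-2')]
print(waiting)   # []
```

Because the framework, not Mesos, chooses what runs where, different frameworks can apply very different placement logic on the same shared pool of resources.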

Advantages

- Lightweight interface

- Inherent flexibility and scalability

- Simplified resource allocation by combining abstracted resources (such as CPU, memory, and storage) into a single pool

- Marathon is designed for long-running apps and can handle persistent containers.

Challenges

- Mesos is a distributed systems kernel rather than a ready-made orchestrator, so you either build custom orchestrators on top of it or rely on a framework such as Marathon, which provides container orchestration as a workload running on Mesos like any other.

- Mesos has a steep learning curve, so organizations need to acquire technical expertise. It is better suited to large enterprises than to smaller organizations with limited technical resources.

Points to consider while evaluating container orchestration tools

The number of container orchestration tools and frameworks keeps growing, and so does the confusion about choosing the best one. Know the right questions to ask about your infrastructure, team, overall container management strategy, and growth plan, and you’re almost set! Here are some points to consider that will help you ask the right questions.

- Determine your application development speed and scaling requirements early on.

- Analyze your team’s level of expertise with containers

- Know what kind of code contributions the vendor makes.

- Find out if real customers are using that solution.

- Understand the potential vulnerabilities of the platform.

- Have a clear understanding of your cloud computing strategy. Know where the platform’s distribution runs and where you can use it.

Some suggestions

- There are alternatives to the major container orchestration platforms that might suit some teams. For example, people with a programming background could use shell scripting to meet their custom requirements. Teams can also subscribe to a Containers-as-a-Service (CaaS) offering to work efficiently and save significant operational capital with minimal maintenance. For instance, Google Kubernetes Engine (GKE) manages the Kubernetes control plane (master nodes) for you.

- Adopt Kubernetes when dealing with a large microservices environment, diverse needs across different parts of the system, multiple teams, or uncertain infrastructure needs.

- Go for Docker Swarm if your use cases are relatively simple and homogeneous.

- Use Marathon for long-running apps and handling persistent containers.

- Take a look at Cloud Foundry to build your own Platform-as-a-Service (PaaS).

Real-world success stories of container orchestration

With more than 90% of organizations worldwide expected to run containerized applications in production by 2027, the rise in container orchestration adoption is also a given. Let’s go through a few remarkable examples to understand its market penetration and see the technology in action.

1) Adidas

Surprised to see a shoe company here? Adidas has a large eCommerce business that demands fast and reliable infrastructure.

Although the Adidas team was content with its software choices from a technology perspective, accessing all the tools had been challenging.

Soon, they started looking for ways to reduce the time to get a project up and running. Finally, they found the solution with agile development, containerization, continuous delivery, and a cloud-native platform that included Kubernetes and Prometheus.

Six months into the project, 100% of the Adidas website was running on Kubernetes, and the site’s loading time had been cut in half. The team also went from releasing once every 4-6 weeks to releasing 3-4 times a day. With 200 nodes, 4,000 pods, and 80,000 builds per month, Adidas started running 40% of its critical, impactful systems on its cloud-native platform.

Other tech giants like Google, Pinterest, Reddit, Shopify, Tinder, Spotify, and many other companies use Kubernetes.

2) Apple (Siri)

Siri’s infrastructure is considered one of the largest Mesos clusters, spanning thousands of nodes. Here’s how it started: Apple acquired Siri in 2010, when it was running on the AWS cloud. The team completely rebuilt its infrastructure to run applications on Mesos, which got Siri running on Apple’s in-house architecture.

Many leading technology companies, like Oracle, PayPal, HubSpot, Uber, eBay, and Netflix, adopted Mesos to support everything from big data, microservices, and real-time analytics to elastic scaling.

3) BMW

Red Hat OpenShift drives IT innovation at the BMW Group, a world leader in automotive engineering.

OpenShift is an enterprise open-source container orchestration platform built on the main architecture components of Kubernetes, with added features for productivity and security.

Almost every car the BMW Group ships comes with the company’s digital product, BMW ConnectedDrive, which connects the driver and vehicle to various services and apps. ConnectedDrive required a highly advanced delivery system to deliver those applications and updates to more than 12 million vehicles and support nearly a billion weekly IT service requests.

In 2016, BMW started migrating its full application suite to OpenShift to support over 1,000 web-based apps for its customers, vehicles, dealerships, factories, and the complete production and sales process.

Smooth sailing ahead!

Despite its challenges and complexity, container orchestration significantly improves the agility, efficiency, and velocity with which today’s developers deploy applications on the cloud. The essential team skills for successful container orchestration include problem-solving, logic, effective communication, analytical thinking, and solid troubleshooting. Now, where would you find all that!?

We at Simform have engineers who love to stay on top of the latest trends regarding containerization, container orchestration, and other tech innovations transforming entire industries. Let us be your technology partner in solving your enterprise’s toughest challenges through disruptive technologies.