“Write once, deploy anywhere, anytime!” Every developer’s dream, isn’t it? Thanks to application containers like Docker, DevOps professionals are finally living that dream! These standalone software packages contain everything necessary to run an application, from code and dependencies to binaries and configuration files. And organizations couldn’t be happier: by following containerization best practices, they gain as much of a business advantage as a technical one.

Now that containerization technology and its ecosystem have matured, it is clearer which approaches and practices are worth standardizing and automating.

This article provides a comprehensive list of containerization best practices for CTOs and technology architects responsible for key business and technological decisions.

Keep in mind that not every practice is equally important. For instance, you may not need some of these approaches to run a successful production workload, while others are fundamental. In particular, how you implement the security-related practices depends on your environment and constraints. We also refer to Docker frequently, since it is widely considered the standard for container-based tooling, so many of these recommendations double as Docker best practices.

Containerization best practices for migrating applications into containers

With time, your application will mature and need to scale further. Accordingly, your development approach will have to shift from traditional, monolithic applications to microservices, which generally means you’d need containers. However, most applications were developed before modern, image-based containers came into the picture. So, even if you run a monolithic application in a container, your application will need some modifications.

Containerization of an existing application comes with a different set of considerations and recommendations than creating a containerized app from scratch. Here are the containerization best practices for migrating applications:

Have a long-term vision

Moving to containers for their own sake can introduce more technical challenges, depending on the applications and the teams running them. A clear, long-term vision, however, enables teams to focus on innovation and on adopting best practices for their applications.

It is more fruitful to containerize an app that is large or web-scale and has inherent statelessness in the architecture. Also, ensure that its business requirements include a high-quality user experience and a high frequency of releases and updates.

Importance: High

Select the right migration strategy

There are three main strategies for app migration: lift and shift, augment, and rewrite. Often, teams containerize a monolithic application to achieve a “lift and shift” from an on-premises environment to a public cloud. Still, it is worth checking that the strategy you pick actually fits the application before committing to it.

Refactoring an application is also a great choice. An application generally consists of smaller units. Packaging those units into something easily consumable makes the migration and containerized deployments faster and smoother. This, again, brings microservices into the picture.

Importance: High

Consider decomposing part of older applications first

Newer applications might be easier to maintain and update than older ones. Therefore, moving the newer ones first might seem like an easier option. However, there might be a more significant payoff in decomposing parts of older applications that are causing operational pain. Later, you can deploy these new services as containers without revamping the entire application.

Importance: Medium

Containerization best practices for building containers

Your first step while building containers would naturally be to build teams that design, build, innovate, and operate a container management platform. You should consider investing in a DevOps team and site reliability engineers (SREs) depending on your application’s size and complexity.

Here are the best practices for building containers.

A single application per container

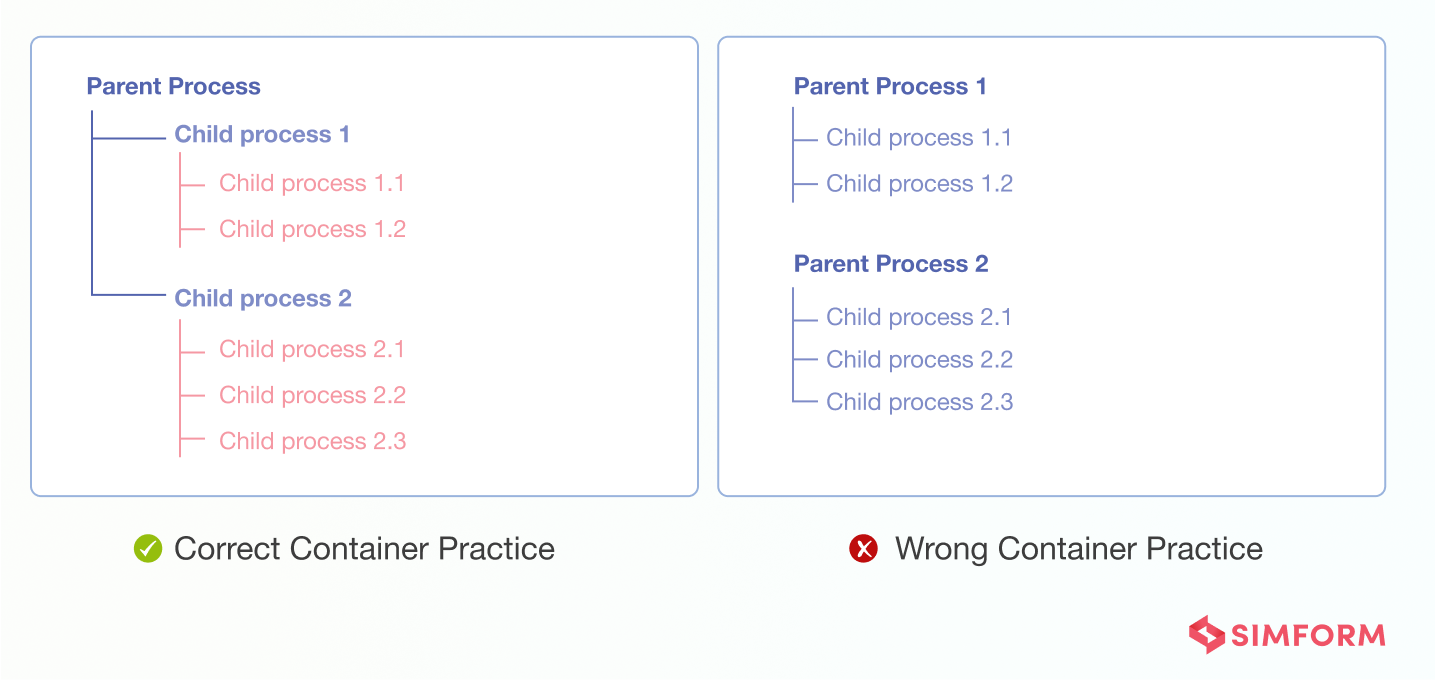

When starting to work with containers, it’s common to treat them as VMs that can run different things simultaneously. A container can work this way, but that won’t give all the advantages. Also, it is tempting to run every component in a single container.

However, because a container has the same lifecycle as the app it hosts, each container should contain a single app: the app should start when the container starts and stop when the container stops. Here, an “app” means a piece of software with a single parent process and, potentially, several child processes. The image above shows this best practice.
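One practical consequence of tying the container’s lifecycle to a single app is that the app’s parent process should shut down cleanly when the container is asked to stop. The sketch below shows one way this might look, assuming the container runtime sends SIGTERM on stop (Docker’s default stop signal). The `serve` and `main` functions and the `work` callback are hypothetical names used only for illustration.

```python
import signal
import threading


def serve(work, stop, poll_seconds=1.0):
    """Run `work` repeatedly until `stop` is set; return how many times it ran."""
    runs = 0
    while not stop.is_set():
        work()
        runs += 1
        # Sleep between iterations, but wake up at once if a stop is requested.
        stop.wait(poll_seconds)
    return runs


def main(work):
    """Entry point for the container's single parent process (hypothetical)."""
    stop = threading.Event()
    # Assumption: the container runtime signals the parent process with SIGTERM.
    signal.signal(signal.SIGTERM, lambda signum, frame: stop.set())
    signal.signal(signal.SIGINT, lambda signum, frame: stop.set())
    return serve(work, stop)
```

Because the loop exits as soon as the stop event is set, the app ends together with its container instead of being killed after the runtime’s grace period.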

Importance: High

Consider starting with stateless applications

Although stateless apps carry the overhead of calls to the database, they scale well horizontally, which is essential for modern apps. A stateless backend also avoids long-running connections and mutable in-process state, and it enables easy deployment of applications with zero downtime.
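To make the idea concrete, here is a minimal sketch of a stateless handler. It assumes that all state lives in an external store; `SharedStore` is an in-memory stand-in for a real database or cache, and `add_to_cart` is a hypothetical handler name.

```python
class SharedStore:
    """In-memory stand-in for an external database or cache (assumption)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def add_to_cart(store, user_id, item):
    """Stateless handler: all state is read from and written to `store`."""
    cart = list(store.get(user_id, []))
    cart.append(item)
    store.put(user_id, cart)
    return cart


# Either call could be served by a different replica, since neither keeps state.
store = SharedStore()
add_to_cart(store, "user-1", "book")           # handled by replica A
cart = add_to_cart(store, "user-1", "laptop")  # handled by replica B
print(cart)  # ['book', 'laptop']
```

The price is one round trip to the store per request, which is the database overhead mentioned above.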

Importance: Medium

Optimize your builds for the build cache

Container tools like Docker cache the results of the first build of a Dockerfile, saving individual image layers that can be reused in subsequent pipeline runs. The reduced build time also lowers production cost, which is particularly helpful when building multiple containers. Hence, Docker layer caching (DLC) is one of the Docker best practices for speeding up your workflows.
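The following toy model illustrates why instruction order matters for the cache. It is a deliberate simplification, not Docker’s actual implementation: it assumes each layer’s cache key depends on the previous layer, the instruction text, and the content of any copied files. The instructions, file contents, and function names are illustrative.

```python
import hashlib


def layer_keys(steps):
    """Return one cache key per step.

    Each step is (instruction, content): `content` is the bytes of any files
    the instruction copies into the image, or b"" when it copies nothing.
    """
    keys = []
    parent = ""
    for instruction, content in steps:
        payload = parent.encode() + instruction.encode() + content
        parent = hashlib.sha256(payload).hexdigest()
        keys.append(parent)
    return keys


def build(steps, cache):
    """Return the instructions that had to be rebuilt, updating `cache`."""
    rebuilt = []
    for (instruction, _), key in zip(steps, layer_keys(steps)):
        if key not in cache:
            cache.add(key)
            rebuilt.append(instruction)
    return rebuilt


def dockerfile(app_source):
    # Dependencies are installed before the frequently changing app source.
    return [
        ("FROM python:3.12-slim", b""),
        ("COPY requirements.txt .", b"flask\n"),
        ("RUN pip install -r requirements.txt", b""),
        ("COPY . .", app_source),
    ]


cache = set()
first = build(dockerfile(b"print('v1')"), cache)
second = build(dockerfile(b"print('v2')"), cache)
print(len(first), second)  # 4 ['COPY . .']
```

In this model, a change to the application source invalidates only the final step. If the source were copied before the dependency installation, every later step would be rebuilt as well.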

Importance: High

Plan for monitoring from the start

Because containers are ephemeral, they are difficult to monitor. However, monitoring is essential to ensure performance, availability, and security for containerized workloads. With a comprehensive approach to monitoring, you gain greater visibility into issues and events and can remediate problems before they impact users.
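One common starting point is a health report that an orchestrator or monitoring agent can poll. The sketch below is only an assumption about how such a report might be built. The check names and the `health_report` function are hypothetical, and the status codes follow the usual HTTP convention of 200 for healthy and 503 for unavailable.

```python
import json


def health_report(checks):
    """Run named checks and return (http_status, json_body).

    `checks` maps a check name to a zero-argument callable returning True/False.
    """
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            # A crashing check is reported as a failure, not as a crash.
            results[name] = False
    healthy = all(results.values())
    body = json.dumps({"healthy": healthy, "checks": results}, sort_keys=True)
    return (200 if healthy else 503), body


status, body = health_report({"database": lambda: True, "queue": lambda: False})
print(status, body)
```

Serving this result from an HTTP endpoint, and logging it to standard output, gives external tooling something stable to observe even though individual containers come and go.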

Importance: High

Containerization best practices for creating container images

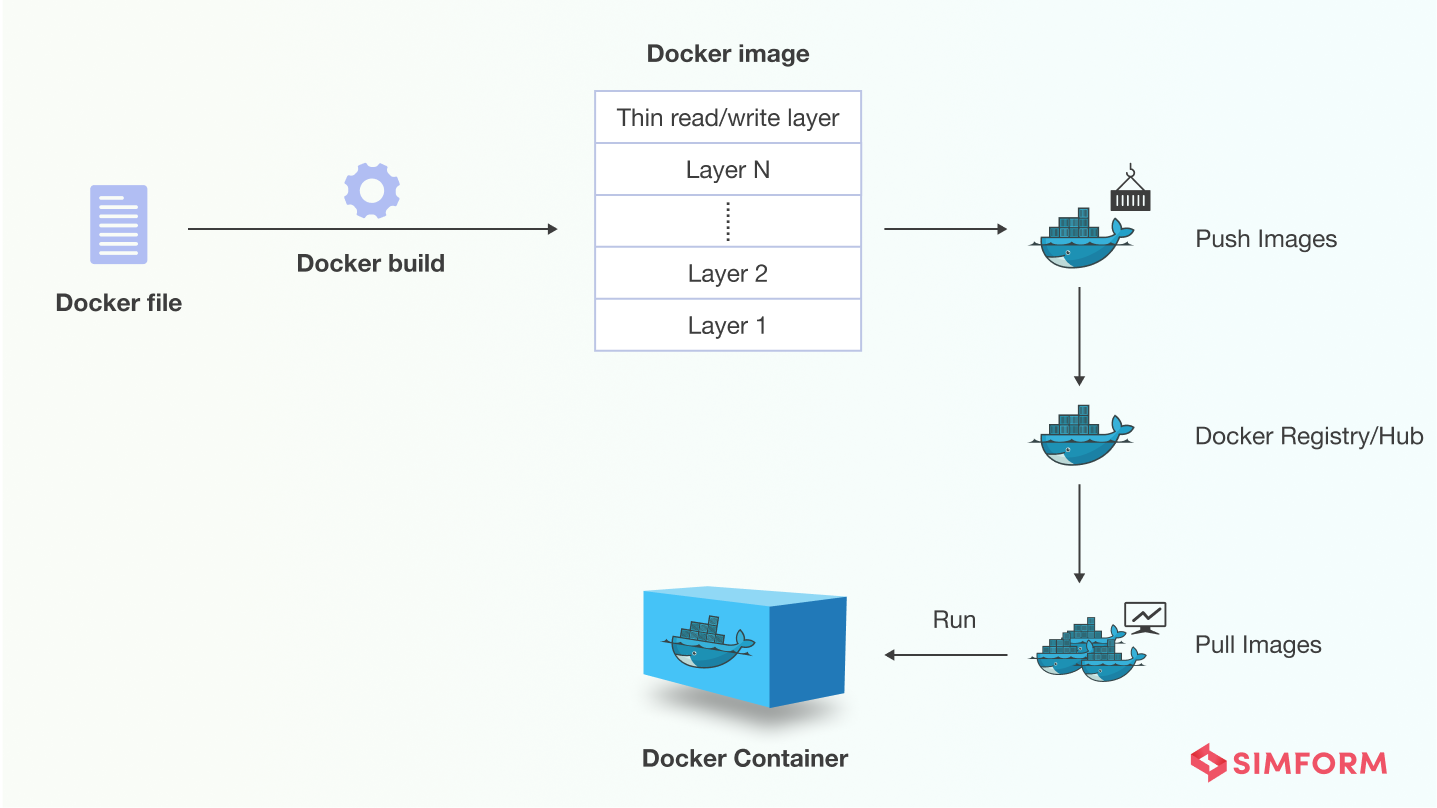

A container image is at the heart of containerized architecture. It is a static, immutable file with executable code, which can create a container on a computing system.

You can build Docker container images in three ways:

1. Commit

2. Dockerfile

3. Compose

In a Dockerfile, you describe what you want to put in the image. You can then create images that are used to run one or more containers, as shown in the image.

Here are the best practices for creating container images.

Keep container images smaller and simpler

Small images load into memory quickly when starting services or containers and pull rapidly over the network. To keep the image size small, you should:

- Begin with an appropriate base image.

- Use multistage builds so that your final image won’t include all the libraries and dependencies pulled in by the build but only the required artifacts and the environment.

- Minimize the number of separate RUN commands in your Dockerfile to decrease the number of layers in your image. A Docker image is made of reusable layers, i.e., different images might share some common layers.

Importance: High

Smartly tag your images

Docker tags convey essential information regarding a specific image version/variant, helping manage different versions of a Docker image. In addition, tags make it easy to pull and run images. There are two types of tags: stable and unique. Stable tags should be used for maintaining base images, while unique tags should be used for container deployments.
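As a rough illustration, the two kinds of tags could be generated as shown below. The naming scheme (short commit hash plus build number) is just one possible convention and an assumption of this sketch. The repository names, the commit hash, and the `unique_tag` and `stable_tag` helpers are hypothetical.

```python
import re


def unique_tag(repository, commit_sha, build_id):
    """Build an image reference that is never reused, e.g. for deployments."""
    if not re.fullmatch(r"[0-9a-f]{7,40}", commit_sha):
        raise ValueError("commit_sha must be a hexadecimal Git commit hash")
    return f"{repository}:{commit_sha[:7]}-build{build_id}"


def stable_tag(repository, version):
    """Build a reference that keeps pointing at the latest update of `version`."""
    return f"{repository}:{version}"


print(unique_tag("shop/api", "9f2c1e7a4b", 42))  # shop/api:9f2c1e7-build42
print(stable_tag("shop/base", "3.12"))           # shop/base:3.12
```

A unique tag identifies exactly one build, so a deployment can always be traced back to its image. A stable tag is expected to move as the base image receives updates.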

Importance: High

Take advantage of Docker Registry or Hub

Docker Registry is a highly scalable server-side application for the storage and distribution of Docker images. It allows users to pull images locally and store new ones in the registry. With the container registry, you can:

- Firmly control your images’ storage location

- Completely own your images’ distribution pipeline

- Integrate image storage and distribution soundly into your in-house development workflow

A Docker registry is organized into Docker repositories, which hold all the versions of a specific image. At the same time, Docker Hub provides a free hosted Registry with additional features for organization accounts, automated builds, etc.
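The relationship between registry, repository, and tag is visible in the image reference itself. The sketch below splits a reference into those three parts using a simplified version of Docker’s naming conventions. It assumes that references without an explicit registry resolve to Docker Hub, and it does not handle digests. The example image names and hostname are made up.

```python
def parse_image_reference(reference):
    """Split an image reference into (registry, repository, tag).

    Simplified: digests (name@sha256:...) are not handled.
    """
    first, slash, rest = reference.partition("/")
    # The first component is a registry host if it looks like a hostname.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", reference
    repository, colon, tag = remainder.partition(":")
    if not colon:
        tag = "latest"
    # Official Docker Hub images live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


print(parse_image_reference("nginx"))
print(parse_image_reference("registry.example.com:5000/team/api:1.4.2"))
```

The first call resolves to the `library/nginx` repository on Docker Hub with the `latest` tag, while the second points at a repository on a self-hosted registry.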

Importance: Medium

Containerization best practices for operating containers

Containers create unique challenges, which are the tradeoff for the high degree of agility and scalability that they enable. Here are the best practices that help resolve several challenges that may arise while operating containers.

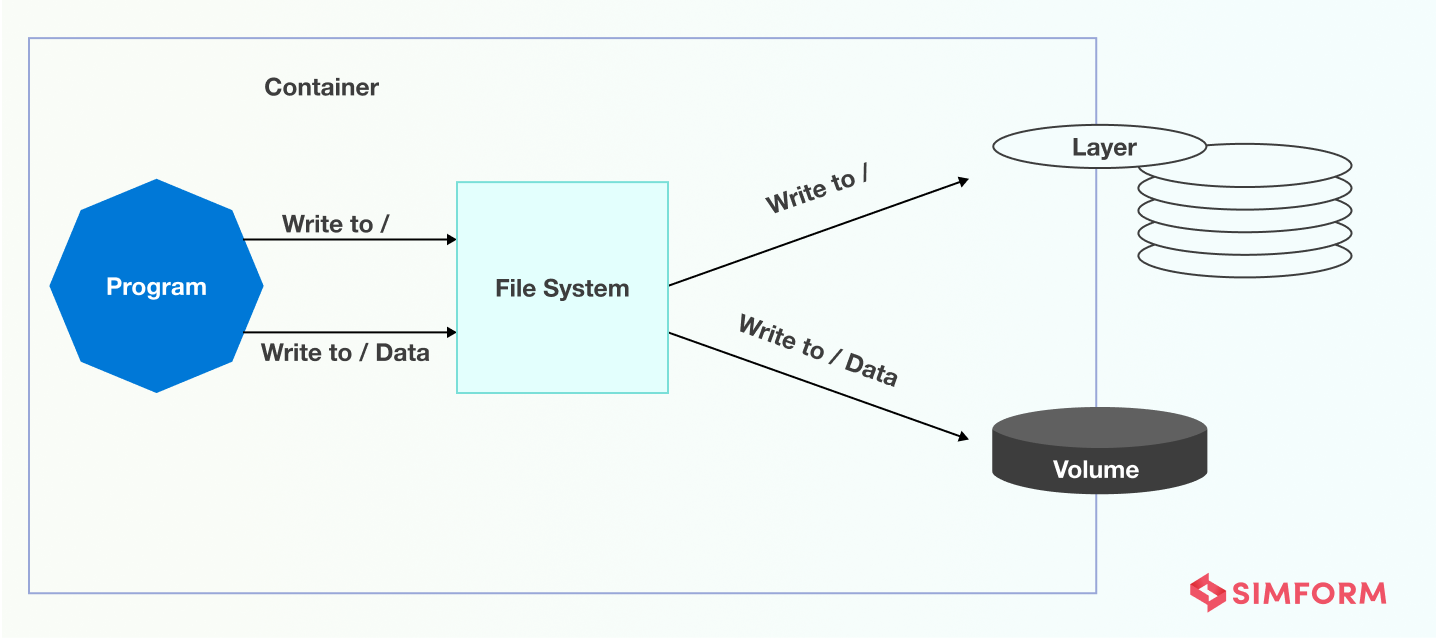

Use persistent data storage

Persistent storage, often referred to as nonvolatile storage, holds data (such as databases) that remains available beyond the life of individual containers. Storing data within a container’s storage layer makes the container grow as data accumulates and leaves the data unavailable when the container goes down. By storing your data in persistent volumes instead, you ensure that the size of the containers won’t grow with more data and that the stored data can be accessed by multiple containers.
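From the application’s point of view, using a persistent volume mostly means writing to a path that is mounted from outside the container. The sketch below assumes a hypothetical `DATA_DIR` environment variable and a `/data` mount point. Neither is a Docker convention, and the file name and helper functions are illustrative.

```python
import os
import tempfile
from pathlib import Path


def data_dir():
    """Directory where a persistent volume is assumed to be mounted."""
    # DATA_DIR is a hypothetical variable; /data is an assumed mount point.
    return Path(os.environ.get("DATA_DIR", "/data"))


def append_order(order_line):
    """Append a record to a file on the volume, not in the container layer."""
    path = data_dir() / "orders.log"
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as handle:
        handle.write(order_line + "\n")
    return path


def read_orders():
    """Return all stored records, or an empty list if none exist yet."""
    path = data_dir() / "orders.log"
    return path.read_text(encoding="utf-8").splitlines() if path.exists() else []


# Local demonstration: a temporary directory plays the role of the volume.
os.environ["DATA_DIR"] = tempfile.mkdtemp()
append_order("order-1")  # written by one container
append_order("order-2")  # written by its replacement
print(read_orders())  # ['order-1', 'order-2']
```

Because the data lives outside the container’s own storage layer, replacing the container does not lose the records.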

Importance: High

Employ CI/CD

Continuous integration/continuous delivery (CI/CD) is an essential DevOps practice that has entirely revamped traditional application development. Combined with containerization, CI/CD can bring even more flexibility and benefits to the entire process. Adopting the CI/CD approach frees you from manually testing and redeploying everything.

Importance: High

Empower with container orchestration tools

The complexity introduced by containers can quickly become uncontrollable without container orchestration to manage it. Container orchestration tools can boost resilience by automatically restarting or scaling a container or cluster. Automation is a critical characteristic of virtually every aspect of building a containerized application that you want to orchestrate. Container orchestration automates the scheduling, deployment, scaling, networking, health monitoring, and management of containers.
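At its core, this kind of automation compares the desired state with the observed state and acts on the difference. The toy reconciliation function below illustrates the idea only. It is not the API of Kubernetes or any other orchestrator, and the container names, statuses, and action tuples are invented for this sketch.

```python
def reconcile(desired_replicas, containers):
    """Return the actions needed to move `containers` toward the desired state.

    `containers` maps a container name to its status: "running" or "failed".
    """
    actions = []
    # Restart anything that has failed.
    for name, status in sorted(containers.items()):
        if status == "failed":
            actions.append(("restart", name))
    # Scale up or down to match the desired replica count.
    difference = desired_replicas - len(containers)
    if difference > 0:
        actions.extend(("start", f"new-{i}") for i in range(difference))
    elif difference < 0:
        surplus = sorted(containers)[difference:]
        actions.extend(("stop", name) for name in surplus)
    return actions


print(reconcile(3, {"web-1": "running", "web-2": "failed"}))
# [('restart', 'web-2'), ('start', 'new-0')]
```

An orchestrator runs a loop like this continuously, which is what allows it to restart or scale containers without manual intervention.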

Importance: High

Containerization best practices for ensuring container security

Traditional vulnerability management approaches fall short in securing containers. Limited visibility into containers, their immutable nature, and compromised open-source building blocks make matters worse. An exploitable vulnerability in a container, combined with misconfigured credentials and exposed metadata, can compromise the entire cloud infrastructure.

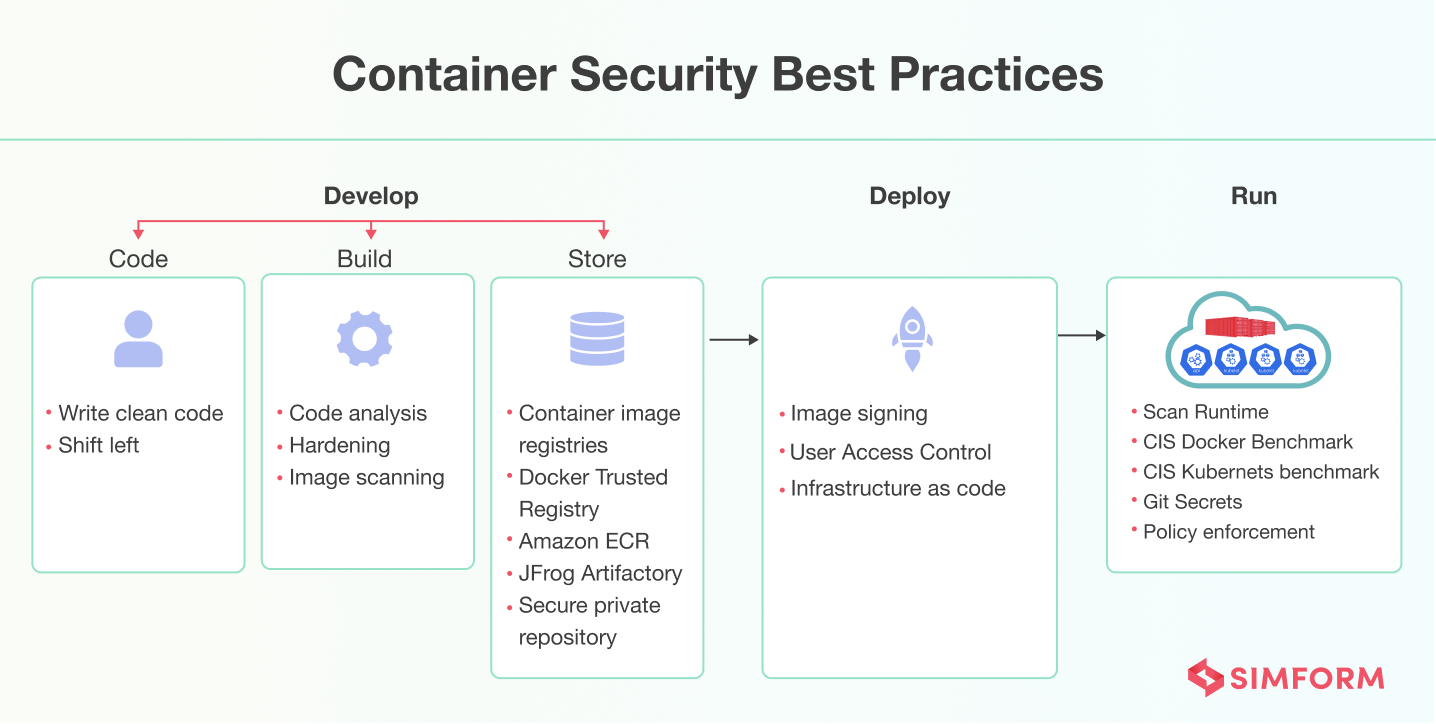

Here are the best container security practices that you should follow.

Protect container runtime

A container runtime can be challenging to secure. By scanning the container runtime, you can see which container images are running in production and view essential information about image changes, metadata, and runtime vulnerabilities. It is also advisable to establish behavioral baselines for the container environment while it is in a normal, secure state. Deviations from the baseline help you detect and prevent potential attacks and anomalies.
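A behavioral baseline can be as simple as the set of processes each container is expected to run. The sketch below compares observed behavior against such a baseline. It assumes that process names have already been collected by some runtime monitoring tool, and the data shapes and the `detect_anomalies` name are hypothetical.

```python
def detect_anomalies(baseline, observed):
    """Compare observed runtime behavior against a known-good baseline.

    Both arguments map a container name to the set of process names seen in it.
    """
    anomalies = {}
    for container, processes in observed.items():
        unexpected = set(processes) - set(baseline.get(container, set()))
        if unexpected:
            anomalies[container] = sorted(unexpected)
    return anomalies


baseline = {"web": {"nginx"}, "api": {"python"}}
observed = {"web": {"nginx", "sh", "curl"}, "api": {"python"}}
print(detect_anomalies(baseline, observed))  # {'web': ['curl', 'sh']}
```

A shell and a download tool appearing in a web container that normally runs only a web server is the kind of deviation worth alerting on.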

Importance: High

Shift processes “to the left”

Shifting left is a key DevOps principle that moves testing to the early stages of software development. The same mentality can be applied to security: testing for vulnerabilities works best when it starts early in the delivery pipeline. Containers can play a critical role in facilitating this approach by providing environment parity at every stage of the delivery pipeline, taking the operating system and many environment variables out of the picture, and enabling flexible timing for software delivery processes.

Importance: Medium

Create container security policies

Establish container security policies for different phases of the container lifecycle based on the presence of malware and overall risk scores. These policies should align with business objectives and goals. Also, developers should be instantly notified with layer-specific details when a particular container image exceeds the risk threshold so that they can take direct action for rectification. Policy violations can also trigger alert notifications or block specific images from being deployed, as per the organization’s preferences.
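A policy of this kind can be expressed as a small rule evaluated against scan results. The sketch below is a minimal illustration under stated assumptions: the 0 to 10 risk scale, the default threshold of 7.0, and the `LayerFinding` structure are invented here and do not correspond to any particular scanner’s output.

```python
from dataclasses import dataclass


@dataclass
class LayerFinding:
    layer: str          # identifier of the image layer
    risk_score: float   # 0 (no risk) to 10 (critical); the scale is an assumption
    malware: bool = False


def evaluate_image(findings, risk_threshold=7.0):
    """Return ("allow" or "block", offending_layers) for one image."""
    offending = [
        f.layer for f in findings
        if f.malware or f.risk_score > risk_threshold
    ]
    return ("block" if offending else "allow"), offending


findings = [
    LayerFinding("base-os", 3.1),
    LayerFinding("app-deps", 8.4),
    LayerFinding("app-code", 1.0, malware=True),
]
print(evaluate_image(findings))  # ('block', ['app-deps', 'app-code'])
```

Returning the offending layers alongside the decision gives developers the layer-specific detail they need to fix the image.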

You should also use tools and implement security practices to observe container engines, master nodes, and containerized middleware and networking.

Importance: High

In addition to the practices mentioned above, you should:

- Scan container images for vulnerabilities.

- Take advantage of industry best practices, like the CIS Docker Benchmark and CIS Kubernetes benchmark, which address configuration, permissions, access, patching, and sprawl.

- Use thoroughly documented APIs from Jenkins, Bamboo, and Travis CI to integrate security testing within your CI/CD build systems.

- Import and connect to container image registries like Docker Trusted Registry, Amazon ECR, and JFrog Artifactory to enable continuous protection of images.

- Avoid writing confidential information in a config file or into the code that is pushed into a repository. Tools like Git Secrets prevent you from entering passwords and other classified information into a Git repository.

- Pull images from trusted sources and store them in your secure private repository that provides the required control for proper access management.

- Use Infrastructure as Code (IaC) to ensure your app containers are secure when deployed.
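To illustrate the point about keeping confidential information out of repositories, here is a minimal sketch of a secret scan that could run before a commit. It does not reproduce how Git Secrets works internally. The two patterns are illustrative assumptions (the access key format follows the publicly documented AWS key ID prefix), and real scanners ship far larger rule sets.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(
        r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]+['\"]"
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) for every suspicious line in `text`."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((number, rule))
    return hits


config = 'debug = true\npassword = "hunter2"\nkey = AKIAIOSFODNN7EXAMPLE\n'
print(find_secrets(config))
# [(2, 'hardcoded_password'), (3, 'aws_access_key_id')]
```

A check like this is cheap enough to run on every commit or in the CI pipeline, which also fits the shift-left approach described earlier.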

Unlock flexibility, portability, and much more with containers!

The benefits of containers are plenty, from faster configuration to better resource utilization, and from application portability to more efficient delivery cycles. However, the road to containerization has its fair share of bumps and potholes, and it may not be the right answer for every business. If it is right for you, you’d need a reliable partner and relevant expertise to implement this revolutionary technology. Simform’s certified container experts can advise you on Kubernetes and Docker implementations and make the switch smoother. Let’s navigate to your success together!