LLMs have evolved from early neural networks and deep learning, through word embeddings and Recurrent Neural Networks (RNNs), to the transformer architecture. A recent step in this evolution is the Generative Pre-trained Transformer (GPT) family of models, a breakthrough that has given rise to an era of generative AI.

Since the launch of ChatGPT in November 2022, the tech industry has witnessed an explosion of new open-source LLMs. Businesses of any size can tap into the power of LLMs to create tailored solutions for their needs, whether it’s developing chatbots, generating content, or analyzing data. Read on to find out the 15 most powerful open-source LLMs of 2024, each representing a milestone in the progress of artificial intelligence.

What is an open-source LLM?

A Large Language Model (LLM) is a type of artificial intelligence model designed to understand and generate human-like text. These models are built using deep learning techniques and are trained on massive datasets containing vast amounts of text, mostly from the internet.

There are two main types of LLMs: open-source and proprietary. Unlike proprietary, closed-source models like GPT-3, open-source LLMs make their model architectures and weights publicly available, and in some cases their training datasets as well. This transparency promotes reproducibility and decentralization of AI research.

The world needs high performance open source LLM. The main obstacle today is the legal status of the training data. https://t.co/rn474jShRS

— Yann LeCun (@ylecun) April 19, 2023

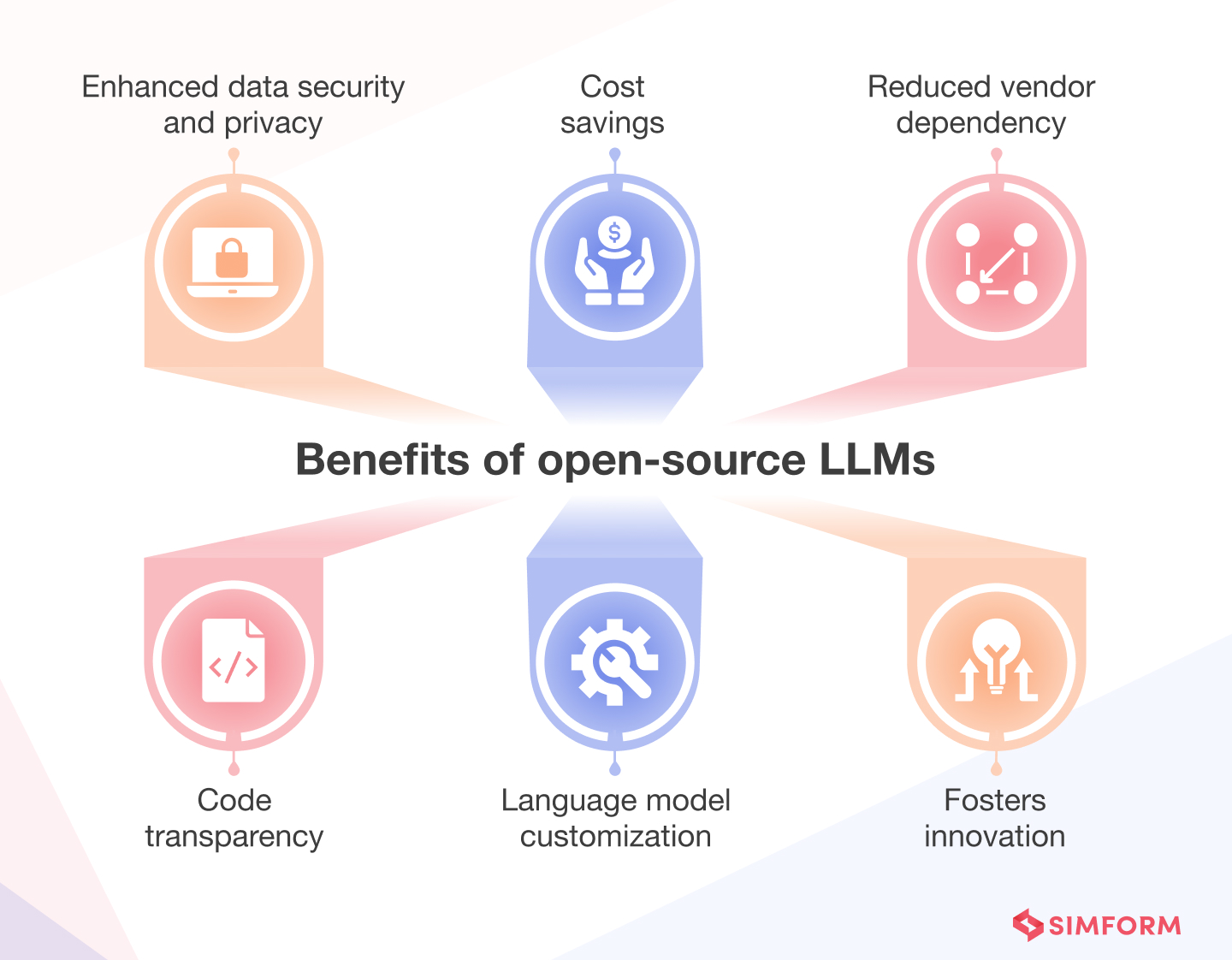

Benefits of open-source LLMs for enterprises and startups

Open-source LLM platforms offer businesses greater flexibility, transparency, and cost savings than closed-source options.

- Enhanced data security and privacy: With open-source LLMs, organizations can deploy the model on their own infrastructure and, thus, have more control over their data.

- Cost savings: Open-source LLMs eliminate licensing fees, which makes them a cost-effective solution for enterprises and startups with tight budgets.

- Reduced vendor dependency: Businesses can reduce reliance on a single vendor and have more control over their models.

- Code transparency: Open-source LLMs offer transparency into their underlying code so that organizations can inspect and validate the model’s functionality.

- Language model customization: Tailoring the model to specific industry or domain needs is more manageable with open-source LLMs. Organizations can fine-tune the model to suit their unique requirements.

- Active community support: Open-source projects often have thriving communities of developers and experts. This means quicker issue resolution, access to helpful resources, and a collaborative environment for problem-solving.

- Fosters innovation: Open-source LLMs encourage innovation by enabling organizations to experiment and build upon existing models. Startups, in particular, can leverage these models as a foundation for creative and unique applications.

While open-source options offer many advantages, they may also require more technical expertise and resources for implementation and maintenance. Thankfully, a few open-source LLMs make the job easier with their robust features. Let’s explore the top options and compare their features.

Open-source LLM: Which are the top models in 2024?

Open-source language models are abundant in 2024, but Hugging Face’s Open LLM Leaderboard makes sifting through popular choices easier. This leaderboard employs a composite LLM score drawn from several benchmarks: ARC for reasoning, HellaSwag for common-sense inference, MMLU for multitask language understanding, and TruthfulQA for answer veracity.
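As a rough illustration of how a composite score can be formed from several benchmarks, the sketch below takes an unweighted mean. The `composite_score` helper and the example numbers are hypothetical, and the plain average is an assumption made for illustration rather than a description of the leaderboard's exact method.

```python
from statistics import mean

def composite_score(benchmark_scores: dict[str, float]) -> float:
    """Return the unweighted mean of per-benchmark scores (0-100 scale)."""
    if not benchmark_scores:
        raise ValueError("at least one benchmark score is required")
    return round(mean(benchmark_scores.values()), 2)

# Hypothetical scores for an imaginary model, for illustration only.
example_scores = {
    "ARC": 60.0,
    "HellaSwag": 80.0,
    "MMLU": 50.0,
    "TruthfulQA": 40.0,
}

print(composite_score(example_scores))
```

A single averaged number is convenient for ranking, but it hides per-benchmark differences, so it is worth checking the individual scores for the task you care about.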

Using this foundation and our industry knowledge of AI and LLMs, we’ve meticulously curated the top 15 open-source LLMs, each with its key features.

1. GPT-NeoX-20B

With 20 billion parameters, GPT-NeoX-20B, developed by EleutherAI, is among the most prominent open-source large language models. It is trained on the Pile, an open-source 886-gigabyte language modeling dataset composed of 22 smaller datasets. The Pile contains diverse text sources such as books, Wikipedia, GitHub, and Reddit.

We've had great results with the GPT-NeoX tokenizer by @AiEleuther, which specifically has tokenization designed to better handle code. Creating your own tokenizer is a very tricky business, and we had a really tough time beating the NeoX tokenizer.

— Jonathan Frankle (@jefrankle) May 29, 2023

This model's architecture largely follows GPT-3, and its training setup uses techniques like synchronous data parallelism and gradient checkpointing. GPT-NeoX-20B uses autoregressive language modeling: it predicts the next token in a text from the tokens that precede it, which is how it generates coherent responses.
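To make the autoregressive idea concrete, here is a minimal sketch that uses a toy bigram table in place of a neural network. The `train_bigram` and `generate` helpers and the tiny corpus are hypothetical; a real model such as GPT-NeoX-20B conditions on the whole preceding context, not just the last token.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for each token, which tokens follow it in the training text."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_new_tokens):
    """Greedy autoregressive decoding: each new token is predicted from
    what has been generated so far (here, only the most recent token)."""
    output = [start]
    for _ in range(max_new_tokens):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this token
        next_token = candidates.most_common(1)[0][0]
        output.append(next_token)  # the prediction becomes new context
    return output

corpus = "the cat sat on the cat sat on a mat".split()
table = train_bigram(corpus)
print(" ".join(generate(table, "the", 3)))
```

The key point is the loop: every generated token is appended to the context and fed back in to predict the one after it.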

Key features of GPT-NeoX-20B

- Large-scale: GPT-NeoX-20B’s extensive parameter count contributes to its ability to produce coherent and contextually relevant content.

- Efficient multi-GPU training: GPT-NeoX-20B is optimized for multi-GPU training, resulting in faster training times and accelerated model convergence.

- Enhanced contextual understanding: The model can grasp the text’s intricate nuances and generate more contextually accurate and coherent responses.

- Language coverage: The Pile is predominantly English, so GPT-NeoX-20B performs best on English text. Its ability to comprehend and generate content in other languages is more limited.

- Fine-tuning flexibility: You can fine-tune the LLM on specific tasks, which leads to adaptability for various applications, ranging from language translation to question answering.

2. GPT-J

GPT-J is also an advanced language model created by EleutherAI. Trained on the Pile dataset, GPT-J is an autoregressive, decoder-only transformer model designed to solve natural language processing tasks.

GPT-J-6B might be “old” but it’s hardly slowing down. Month after month it’s among the most downloaded GPT-3 style models on @huggingface, and no billion+ param model has ever come close (“gpt2” is the 125M version, not the 1.3B version). pic.twitter.com/EZyrBApLka

— EleutherAI (@AiEleuther) March 26, 2023

It has 6 billion parameters, roughly 30 times fewer than GPT-3’s 175 billion. GPT-J follows a GPT-style decoder architecture with two notable differences: it uses rotary position embeddings, and each layer computes its attention and feed-forward sublayers in parallel rather than one after the other. Running the two sublayers in parallel reduces communication overhead, so GPT-J can make better use of distributed computing resources (like multiple GPUs or TPUs) during training.

Key features of GPT-J

- Powerful text generation: GPT-J is known for its ability to generate high-quality, coherent text across various domains, making it useful for tasks like content creation, story writing, and even code generation.

- Few-shot learning capability: It can understand and mimic abstract patterns in text. With few examples or instructions, it can perform well on natural language processing tasks without extensive training.

- Accessible and easy to use: GPT-J’s weights are publicly available and supported by common libraries such as Hugging Face Transformers, so developers can load the model and integrate it into their applications with little code.

- Availability and cost: GPT-J provides an accessible alternative to GPT-3 by enabling researchers and developers to use powerful language models without needing large-scale infrastructure or expensive licenses.

3. LLaMA 2

LLaMA 2 (the name derives from Large Language Model Meta AI) is a family of language models developed by Meta AI and released in partnership with Microsoft. It is a text-only model that comprehends and generates natural language. The model comes in three sizes, with 7, 13, and 70 billion parameters.

Llama 2 is a step forward for commercially available language models and open innovation in AI. These new models were pretrained on 2T tokens, and have double the context length when compared to the original release of Llama.

Download Llama 2 ➡️ https://t.co/rrwbZVUf4n pic.twitter.com/MLL4cSL5Ih

— AI at Meta (@AIatMeta) July 18, 2023

LLaMA 2 was pretrained on 2 trillion tokens of publicly available text, and its architecture builds upon that of its predecessor, LLaMA 1, while doubling the context length. You can use LLaMA 2 for tasks like generating detailed responses to prompts, facilitating interactive storytelling, answering questions, and more. It also has potential for content creation, research, and entertainment apps.

Key features of LLaMA 2

- Enhanced contextual understanding: LLaMA 2 excels at understanding conversational nuances, effortlessly grasping shifts in context to provide remarkably accurate responses.

- Expanded knowledge base: With access to diverse sources, LLaMA 2 offers well-rounded insights on various subjects and enriching interactions.

- Adaptive communication: LLaMA 2 adjusts its tone and style based on your preferences.

- Ethical and responsible AI: It promotes safe content generation by minimizing biased or harmful outputs, aligning with Meta’s commitment to user well-being.

- Text-focused design: LLaMA 2 accepts and produces text only. Describing or interpreting images and other media requires pairing it with separate models.

4. OPT-175B

The Open Pre-trained Transformer (OPT) model is a significant development by Meta AI, democratizing access to large-scale language models. Its most powerful version, OPT-175B, has 175 billion parameters. OPT was trained on unlabeled text data filtered to contain predominantly English sentences, enabling it to comprehend and generate human-like text across various domains.

Today Meta AI is sharing OPT-175B, the first 175-billion-parameter language model to be made available to the broader AI research community. OPT-175B can generate creative text on a vast range of topics. Learn more & request access: https://t.co/3rTMPms1vq pic.twitter.com/DzjAdbPDLx

— AI at Meta (@AIatMeta) May 3, 2022

Built upon the Transformer architecture, OPT is a decoder-only model that processes input text through multiple layers of self-attention, refining its representation of the context at each layer. It is a pretrained model without additional tuning on human feedback, so the coherence and relevance of its responses come from patterns learned during pretraining.

Key features of OPT-175B

- Gradient checkpointing: It reduces memory consumption by trading compute for memory, enabling the training of larger models without running out of memory.

- Few-shot learning: OPT excels at few-shot and zero-shot learning. It requires only a few examples to grasp new tasks or languages.

- Automatic mixed precision (AMP): OPT supports mixed precision training, which uses both single and half-precision to accelerate training and minimize memory usage.

- Reduced carbon footprint: According to Meta, OPT-175B was developed with roughly one-seventh the carbon footprint of GPT-3, a step toward more environmentally friendly AI development.
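The mixed precision point above can be illustrated with a small sketch of why a higher-precision copy of the weights is kept alongside half-precision compute. This is not OPT's training code: the `to_half` helper is hypothetical, it uses only Python's standard `struct` module, and ordinary Python floats stand in for the higher-precision master copy.

```python
import struct

def to_half(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]

weight = 1.0
update = 1e-4  # a small gradient step

# Applying the update purely in half precision loses it: representable
# half-precision values near 1.0 are about 0.001 apart, so the sum
# rounds straight back to 1.0.
half_only = to_half(to_half(weight) + to_half(update))

# Mixed precision keeps a higher-precision master copy for the update
# (a Python float stands in for it here) and casts down only for compute.
master = weight + update
compute_copy = to_half(master)

print("half-precision only:", half_only)
print("master copy kept the update:", master > weight)
```

Half precision roughly halves the memory used per value, which is where the speed and memory savings come from; the master copy is what keeps many small updates from being rounded away.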

5. BLOOM

BLOOM, developed by BigScience, is a large-scale, open-access, multilingual language model designed to foster scientific collaboration and breakthroughs. Built by a diverse global team, BLOOM is a decoder-only transformer model. With 176 billion parameters, it outstrips many existing models in scale.

So… the participants of BigScience have voted on the future name of the 176B language model currently being trained and it will be called

🌸 BLOOM 🌸

For the "BigScience Language Open-source Open-access Multilingual", a bit out-of-order but you get the idea 😉

— BigScience Large Model Training (@BigScienceLLM) May 11, 2022

Unlike models trained mainly on English, BLOOM was trained on text in 46 natural languages and 13 programming languages. It can also be fine-tuned for a wide array of downstream tasks.

BLOOM aims to support global scientific advancement by facilitating cross-domain research and enabling scholars to harness its capabilities for various applications, thereby democratizing AI-driven research and innovation. This pioneering model could reshape the landscape of scientific exploration and collaboration.

Key features of BLOOM

- Inclusive language: BLOOM actively ensures inclusivity by avoiding biased or offensive language.

- Multilingual competence: It excels in multiple languages and facilitates seamless communication and content generation for global audiences.

- Dynamic contextual understanding: With advanced contextual comprehension, BLOOM can grasp nuanced meanings in text to generate more accurate and relevant responses.

- Ethical communication: BLOOM is programmed to prioritize ethical considerations, fostering responsible AI use and discouraging harmful content.

- Cultural sensitivity: Researchers prioritized respecting cultural norms while training this AI model. As a result, it has low potential for generating culturally insensitive content.

6. Baichuan-13B

Baichuan Inc., a Chinese AI company, has unveiled an open-source large language model named Baichuan-13B, aiming to compete with OpenAI. With a model size of 13 billion parameters, it seeks to empower businesses and researchers with advanced English and Chinese language processing and generation capabilities.

Baichuan-13B: China’s Open Source Large Language Model to Rival OpenAI. Both Chinese and English and its open-source nature means developers can freely use and modify it. This makes it an exciting candidate for running on Bittensor.

In an industry dominated by a few tech…

— Andy ττ (@bittingthembits) July 23, 2023

The model’s pre-training dataset involves 1.3 trillion tokens. Baichuan-13B enables text generation, summarization, translation, and more tasks. This initiative comes after Baichuan’s success with Baichuan-7B and aligns with the company’s mission to democratize generative AI for broader practical use.

Key features of Baichuan-13B

- Chinese language proficiency: Tailored for excellence in understanding and generating Chinese content, Baichuan-13B empowers applications spanning sentiment analysis to Mandarin content creation.

- Simplified data interaction: You can streamline your interaction with vast text data effortlessly using Baichuan-13B. It enables research, trend analysis, and information extraction to support informed decision-making.

- Vast linguistic capacity: Baichuan-13B boasts a staggering 13 billion parameters. It excels in comprehending and generating intricate language patterns, thereby facilitating nuanced communication.

- Industry-grade performance: Baichuan-13B targets results competitive with leading language models of similar size across applications such as text generation, summarization, and sentiment analysis.

7. CodeGen

CodeGen, created by researchers at Salesforce AI Research, is an autoregressive transformer model for generating code. It comes in a range of sizes, including roughly 350 million, 2 billion, 6 billion, and 16 billion parameters.

We're not hopping on the artificial intelligence bandwagon, we're driving it!

With our open source CodeGen library, we're empowering developers worldwide to create intelligent, efficient, and scalable AI applications.

Try it out: https://t.co/QdWo1ROjlX pic.twitter.com/Enu9KRy7zU

— Salesforce (@salesforce) May 19, 2023

CodeGen’s training dataset includes a diverse set of programming languages and frameworks, along with code snippets from GitHub and Stack Overflow. This dataset helps CodeGen learn programming concepts and the relationships between code and natural language. It also enables the model to generate code solutions when given simple English prompts as input. CodeGen has garnered attention for its potential to streamline software development and enhance developer productivity.

Key features of CodeGen

- Code generation: CodeGen uses its vast training dataset and understanding of programming concepts to generate accurate and reliable code solutions when given simple English prompts as input.

- Language flexibility: CodeGen has been trained in diverse programming languages and frameworks, enabling it to understand and generate code in multiple languages.

- Error handling: CodeGen can identify and handle potential errors and mistakes in the generated code, improving code quality and minimizing potential issues during execution.

8. BERT

BERT (Bidirectional Encoder Representations from Transformers) was created by researchers at Google AI. With a model size of up to 340 million parameters, BERT has been trained on a diverse dataset comprising 3.3 billion words, including BookCorpus and Wikipedia.

Everyone is amazed at OpenAI and ChatGPT, but don’t forget: the transformer was invented by Google, the first LLM was Google’s BERT, and Google made an apparently impressive chatbot (LaMDA) before ChatGPT but didn’t release publicly since they didn’t feel it was safe to do so.

— Noah Giansiracusa (@ProfNoahGian) January 14, 2023

BERT’s innovation lies in its bidirectional context understanding. Unlike previous models that process text sequentially, BERT reads sentences in both directions simultaneously, capturing intricate contextual relationships. During training, BERT masks some words and learns to predict them, thereby developing a deep contextual understanding. It revolutionized various NLP tasks, achieving state-of-the-art results across tasks like question answering, sentiment analysis, etc.

Key features of BERT

- Bidirectional context: BERT comprehends context from both directions in a sentence, enhancing its grasp of nuanced relationships and improving understanding.

- Attention mechanism: It employs attention mechanisms focusing on relevant words, capturing intricate dependencies, and enabling the model to give context-aware responses.

- Masked language model: During training, BERT masks certain words and predicts them using surrounding context, enhancing its ability to infer relationships and meaning.

- Next sentence prediction: BERT also learns to predict whether a sentence follows another in a given text. It enhances BERT’s understanding of sentence relationships, which is beneficial for tasks like question answering and summarization.

- Task agnostic: BERT’s pretraining and fine-tuning approach enables easy adaptation to different tasks. It can achieve remarkable results even with limited task-specific data by fine-tuning the pre-trained model on specific tasks.
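The masked language model objective described above can be sketched in a few lines. This is a simplified illustration, not BERT's actual preprocessing: the `mask_tokens` helper is hypothetical, it works on whole words rather than subword tokens, and it always substitutes `[MASK]`, whereas BERT sometimes keeps the original token or swaps in a random one.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens and record what the model must predict.

    Returns (masked_tokens, labels); labels hold the original token at
    masked positions and None elsewhere (positions that are not scored).
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)  # training target for this position
        else:
            masked.append(token)
            labels.append(None)   # visible context, not a target
    return masked, labels

sentence = "the quick brown fox jumps over the lazy dog".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

Because the visible context sits on both sides of each masked position, predicting the hidden token forces the model to use information from the left and the right at once.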

9. T5

T5, or Text-To-Text Transfer Transformer, is a versatile pre-trained language model developed by researchers at Google AI. It’s based on the Transformer architecture and designed to handle a wide range of natural language processing tasks through a unified “text-to-text” framework. T5 was released in five sizes, from small to extra-large, with the largest having 11 billion parameters.

Announcing T5, a new model that reframes all #NLP tasks as text-to-text, enabling the use of the same model, loss function and hyperparameters on any NLP task. Included is the Colossal Clean Crawled Corpus, a new and extensive NLP pre-training dataset. https://t.co/QE5d0GSedb

— Google AI (@GoogleAI) February 24, 2020

The model was pre-trained on the Colossal Clean Crawled Corpus (C4), a dataset of cleaned English web text, and its translation tasks cover English to German, French, and Romanian. T5 casts every task into a text-to-text format, handling translation, summarization, classification, and more by treating each one as a text-generation problem.

Key features of T5

- Encoder-decoder architecture: T5 (Text-To-Text Transfer Transformer) employs an encoder-decoder architecture, treating almost all NLP tasks as a text-to-text problem. This results in enhanced consistency in model design.

- Pre-training for diverse tasks: In T5’s pre-training process, the model generates target text from the source text, which includes various tasks like translation, summarization, classification, and more. This approach results in a versatile and unified model.

- Flexible input-output paradigm: It operates in a “text as input, text as output” paradigm. Framing tasks in this manner reduces complexity and allows fine-tuning for specific objectives.

- Task prefixes: Rather than adding task-specific layers, T5 identifies the task through a short text prefix on the input, so the same model and parameters serve every task.

- Contextual consistency: T5 maintains coherence in lengthy interactions and produces natural-flowing conversations.
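The text-to-text framing above can be sketched as plain string formatting: every task becomes an input string with a task prefix and an output string. The `to_text_to_text` helper and its task keys are hypothetical; the two prefixes follow the style used in the T5 paper.

```python
# Task prefixes in the style used by T5; the dictionary keys are
# hypothetical names chosen for this sketch.
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
}

def to_text_to_text(task: str, text: str) -> str:
    """Turn a (task, input) pair into a single model input string."""
    if task not in TASK_PREFIXES:
        raise KeyError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text

print(to_text_to_text("translate_en_de", "That is good."))
print(to_text_to_text("summarize", "A long article about language models."))
```

Since both the input and the expected output are text, the same model, loss function, and decoding procedure can be reused across tasks.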

10. Falcon-40B

Falcon-40B is a 40-billion-parameter language model released by the Technology Innovation Institute (TII) as open source for research and commercial use.

Falcon 40B – our game-changing AI model is now open source for research and commercial use.

We are also providing access to the model’s weights to give researchers and developers a chance to use it to bring their innovative ideas to life. pic.twitter.com/fApOKYjIPU

— Technology Innovation Institute (@TIIuae) May 25, 2023

Falcon-40B functions by predicting the next word in a sequence. With its remarkable scale and capabilities, Falcon-40B holds promise for revolutionizing various natural language processing tasks, elevating the potential for language generation and understanding.

Key features of Falcon-40B

- High-quality data pipeline: It has a robust data pipeline that ensures the utilization of diverse and reliable training data, enhancing the model’s overall performance.

- Autoregressive decoder-only model: Falcon-40B captures dependencies between elements in a sequence effectively. So, it excels in tasks where the order of elements matters, like text generation and machine translation.

- Advanced language comprehension: It deeply understands language nuances, allowing it to comprehend intricate prompts and produce coherent responses that align more closely with human-like interaction.

- Open availability: Falcon-40B is open source for research and commercial use, and its weights are publicly available to researchers and developers.

11. Vicuna-33B

Vicuna-33B was developed by the Large Model Systems Organization (LMSYS), a prominent AI research organization. With a model size of 33 billion parameters, Vicuna-33B was trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT.com. It keeps LLaMA's decoder-only transformer architecture rather than introducing a new one.

Thrilled to see Vicuna-33B top on the AlpacaEval leaderboard!

Nonetheless, it's crucial to recognize that open models are still lagging behind in some areas, such as math, coding, and extraction as per our latest MT-bench study [2, 3]. Plus, GPT-4 may occasionally misjudge,… pic.twitter.com/L0vGjWGIPW

— lmarena.ai (formerly lmsys.org) (@lmarena_ai) July 11, 2023

Because it is a fine-tune rather than a new design, Vicuna-33B’s gains come from its training data: multi-turn conversations teach the base model to follow a chat format and respond helpfully to user requests. This approach leads to comprehensive and contextually rich text generation, making Vicuna-33B a powerful tool for natural language processing.

Key features of Vicuna-33B

- Fine-grained contextual understanding: The model captures intricate nuances of context and can produce more accurate and contextually relevant responses to user queries.

- Cross-domain versatility: Vicuna-33B has been trained on diverse text sources, making it proficient in understanding and generating text across various domains.

- Rapid inference speed: Despite its size, Vicuna-33B is optimized for efficiency. It can deliver fast responses to user queries without compromising accuracy.

- Long-term context retention: The model can retain context over longer stretches of text, allowing it to handle complex and multi-turn conversations easily.

- Low-resource adaptability: Vicuna-33B can adapt well to low-resource languages, making it a valuable resource for language-related tasks in regions with limited linguistic resources.

12. MPT-30B

MPT-30B is an open-source language model developed by MosaicML. With 30 billion parameters, it is a decoder-only transformer in the GPT style, refined for efficient training and inference. It was pretrained on a mix of data totaling 1 trillion tokens of English text and code.

🔥@MosaicML Destroy GPT-3 with MPT-30B🔥

Smallest model ever to beat GPT-3:

– Trillion tokens.

– 8K Ctx window.

– The best architecture today (my opinion)

– Open source (for real!)

– Try it (chat): https://t.co/17gCgewJx6

– Model: https://t.co/J1Mc5Hw9FR

– Instruct model:… pic.twitter.com/fBkdT4hVdg

— Yam Peleg (@Yampeleg) June 22, 2023

MPT-30B’s commercial applications span content creation, code generation, and more. MosaicML’s commitment to open-source innovation empowers developers and enterprises to harness MPT-30B’s capabilities for diverse linguistic tasks.

Key features of MPT-30B

- 8k token context window: MPT-30B excels in processing long-range dependencies with its expansive 8k token context window. This enables a deeper understanding of context and enhances the quality of the generated text.

- Support for attention with linear biases (ALiBi): Instead of positional embeddings, ALiBi subtracts a penalty from each attention score that grows linearly with the distance between the query and key tokens. This helps the model handle inputs longer than those seen during training.

- Efficient inference: MPT-30B optimizes inference speed and delivers rapid and accurate results. It’s ideal for real-time applications that demand swift responses without compromising quality.

- Ease of training and deployment: Its user-friendly design simplifies the training and deployment process, enabling developers to harness the power of advanced language models effectively.

- Coding capabilities: The capabilities of MPT-30B go beyond text generation, demonstrating a remarkable aptitude for code-related tasks.
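The ALiBi feature listed above can be sketched in plain Python. This is a minimal illustration of the bias computation only, not MosaicML's implementation: the helper names are hypothetical, and the slope schedule shown is the geometric sequence commonly used when the number of heads is a power of two.

```python
def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head slopes as a geometric sequence: 2^(-8/n), 2^(-16/n), ..."""
    return [2 ** (-8 * (h + 1) / num_heads) for h in range(num_heads)]

def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """Bias added to the attention scores of one head.

    Entry [i][j] is -slope * (i - j) for keys at or before the query
    position i. Future positions (j > i) are left at 0.0 here; a causal
    mask would hide them anyway.
    """
    return [
        [-slope * (i - j) if j <= i else 0.0 for j in range(seq_len)]
        for i in range(seq_len)
    ]

slopes = alibi_slopes(8)
bias = alibi_bias(4, slopes[0])
for row in bias:
    print(row)
```

Keys that are further from the query receive a larger penalty, so each head is nudged toward recent context, with different heads using different slopes.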

13. Dolly 2.0

Dolly 2.0 is an open-source LLM alternative to commercial offerings like ChatGPT. Databricks, a prominent AI player, created Dolly 2.0, representing a significant step in open language generation technology. Dolly 2.0 has 12 billion parameters and was fine-tuned on databricks-dolly-15k, a dataset of 15,000 original, human-generated prompt and response pairs written by Databricks employees.

Open-source ML is at it again!

Databricks just released Dolly 2.0!

Here's what you need to know:

– This model is a 12B parameter language model based on EleutherAI Pythia model family.

– It's fine-tuned on 15K high-quality human-generated prompt/response pairs (crowdsourced…

— elvis (@omarsar0) April 12, 2023

Built on EleutherAI’s Pythia model family, Dolly 2.0 gets its functionality from a two-step training process: the base model is first pre-trained on extensive text corpora and then fine-tuned on instruction and response pairs, an approach known as “instruction tuning.” Dolly 2.0’s release signals a new era for open-source LLMs, providing a commercially viable alternative to proprietary models.

Key features of Dolly 2.0

- Instruction tuning: Dolly 2.0 is trained with a technique called “instruction tuning.” This approach fine-tunes the model on examples of instructions paired with the desired responses, resulting in more controlled and accurate text generation across various contexts.

- Commercial viability: It’s commercially viable for various applications. The open-source nature and high-quality performance make it attractive for businesses seeking cost-effective language generation solutions.

- Coding assistant: Dolly 2.0 can be prompted for code suggestions and completions, although it is a general instruction-following model rather than a dedicated coding tool.

- Code review: It can be asked to analyze code and point out potential errors, bugs, and inefficiencies.

- Project management: Through applications built on top of it, Dolly 2.0 can support task tracking, progress monitoring, and collaboration among team members.

14. Platypus 2

Platypus 2 has emerged as a significant player among large language models (LLMs). Crafted by Cole Hunter & Ariel Lee, Platypus 2 has a model size of 70 billion parameters, and it reached the top of Hugging Face’s Open LLM Leaderboard around its release. The developers fine-tuned Platypus 2 on the Open-Platypus dataset, a curated collection of questions with similar and duplicate entries removed, and then merged the resulting LoRA modules.

Platypus, a new open-source LLM at the top of the leaderboard: https://t.co/fRKUDPmWkY

Key points are

1) a curated dataset: removing similar & duplicate questions

2) finetuning and merging Low Rank Approximation (LoRA) modules: focusing on the non-attention modules pic.twitter.com/PQJ4Gv6ddZ— Sebastian Raschka (@rasbt) August 17, 2023

Built upon the LLaMA and LLaMA 2 transformer architectures, Platypus 2 combines the efficiency of QLoRA with LLaMA 2. It generates coherent and contextually rich content across various domains, and its text quality and substantial parameter count suit it to AI-driven applications spanning natural language understanding and content creation.

Key features of Platypus 2

- Preventing data leaks: The developers checked the training data for contamination, so that benchmark test questions do not leak into the fine-tuning set and inflate evaluation scores.

- Clearing biases: Platypus 2 uses LoRA (Low-Rank Adaptation) and PEFT (Parameter-Efficient Fine-Tuning) to mitigate biases in the learned representations, aiming for more neutral and balanced outcomes.

- Minimizing data redundancy: The model strategically selects a diverse subset of training data, ensuring optimal representation and reducing overfitting, enhancing efficiency and generalization capabilities.

- Fast and cost-effective: Platypus 2 integrates QLoRA and LLaMA 2. QLoRA enables quick model adaptation by training only small low-rank adapters, which reduces the cost of fine-tuning. Starting from pretrained LLaMA 2 weights reduces training time and associated costs. This combination allows Platypus 2 to provide rapid and budget-friendly solutions.

- Enhanced contextual understanding: With its 70 billion parameters, Platypus 2 crafts more coherent and contextually relevant content, catering to various applications such as content creation, summarization, and nuanced language understanding.
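The low-rank adaptation (LoRA) idea mentioned in the list above can be sketched with small matrices. This is an illustration of the general technique, not the Platypus training code: the helper names, the layer size, and the rank are hypothetical, and plain Python lists stand in for tensors.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors B (d_out x r) and A (r x d_in)."""
    return rank * (d_in + d_out)

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(W, B, A, alpha, rank):
    """Frozen weight plus the scaled low-rank update: W + (alpha / rank) * B A."""
    scale = alpha / rank
    delta = matmul(B, A)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(W, delta)
    ]

# Hypothetical 4096 x 4096 layer adapted with rank 8.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, 8)
print(f"full fine-tuning: {full} params, LoRA: {lora} params")

# Tiny worked example: 2 x 2 frozen weight, rank-1 update.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[3.0, 4.0]]
print(lora_effective_weight(W, B, A, alpha=2.0, rank=1))
```

Only the two small factors are trained while the original weight stays frozen, which is why adapters of this kind are cheap to train and easy to merge back into the base model.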

15. Stable Beluga 2

Stable Beluga 2 is an auto-regressive LLM derived from the LLaMA 2 model developed by Meta AI. Created by Stability AI, Stable Beluga 2 can tackle complex language tasks with a higher level of accuracy and understanding.

For an unknown limited time (probably until some crashing bug), I am hosting Stable Beluga 2 70B (a tune of Llama 2 70B) which is a little more "guide-able" than Llama 2 via prompting.

It's a streaming-chat Gradio app hosted on @LambdaAPI cloud.

http://209.20.159.223:7860/ pic.twitter.com/Ecf2SvzorL

— Harrison Kinsley (@Sentdex) August 3, 2023

Trained on a diverse and internal Orca-style dataset, Stable Beluga 2 leverages Supervised Fine Tuning (SFT) to refine its performance. This process involves exposing the model to a large corpus of carefully curated examples and guiding it toward better predictions, increasing its precision and versatility. It also enables the model to comprehend context, generate coherent text, and provide valuable insights across numerous apps, including text generation, summarization, and more.

Key features of Stable Beluga 2

- Remarkable reasoning abilities: Stable Beluga 2 exhibits exceptional reasoning skills, enabling you to receive insightful and contextually accurate responses.

- High-class performance: Through supervised fine-tuning, Stable Beluga 2 enhances its performance on specific tasks by learning from labeled data. It can provide more precise outputs for your targeted requirements.

- Harmlessness: One of Stable Beluga 2’s distinctive qualities is its commitment to producing content that is free from harm. It prioritizes generating safe, non-offensive, and respectful responses, promoting a positive and inclusive user experience.

- Responsible sourcing: With its emphasis on responsible AI practices, Stable Beluga 2 draws from ethical and reputable sources for its information. This feature fosters trustworthy interactions and prevents the dissemination of misinformation.

- Privacy assurance: Stable Beluga 2 operates within stringent privacy guidelines, safeguarding user information and ensuring confidential interactions.

Now that you’re familiar with the workings and features of the top open-source LLMs, let’s do a comparative analysis to understand their strengths, weaknesses, and unique attributes.

Comparative analysis of top open-source LLMs

In this comparative analysis, we’ll look at essential details such as the creators, parameter counts, architecture types, and training datasets of these open-source LLMs. We use Hugging Face’s Open LLM Leaderboard as the basis for the scores.

| LLM | Created By | Parameters | Architecture Type | Dataset Used for Training | Overall Open LLM Score (Out of 100) |
|---|---|---|---|---|---|
| GPT-NeoX-20B | EleutherAI | 20 Billion | Autoregressive transformer decoder model | Pile dataset | 43.95 |
| GPT-J | EleutherAI | 6 Billion | Decoder-only transformer model | Pile dataset | 42.88 |
| LLaMA 2 | Meta AI and Microsoft | 70 Billion | Generative pretrained transformer model | English CommonCrawl, C4 dataset, GitHub repositories, Wikipedia dumps, Books3 corpora, arXiv scientific data, and Stack Exchange | 66.8 |
| OPT | Meta AI | 125 Million to 175 Billion | Decoder-only transformer model | Unlabeled text data that has been filtered to contain predominantly English sentences | 46.25 |
| BLOOM | BigScience | 176 Billion | Decoder-only transformer model | ROOTS Corpus | 42.07 |
| Baichuan | Baichuan Intelligence | 13 Billion | Transformer model | Chinese and English language data | 36.23 |
| CodeGen | Salesforce | 16 Billion | Autoregressive language model | The Pile, BigQuery, and BigPython | 46.23 |
| BERT | Google AI | 110 Million and 340 Million | Transformer model | BookCorpus and English Wikipedia | NA |
| T5 | Google AI | 11 Billion | Transformer model | Colossal Clean Crawled Corpus (C4) | NA |
| Falcon-40B | Technology Innovation Institute (TII) | 40 Billion | Decoder-only model | 1,000B tokens of RefinedWeb | 61.48 |
| Vicuna-33B | LMSys.org | 33 Billion | Autoregressive language model | 125K conversations collected from ShareGPT.com | 65.12 |
| MPT-30B | MosaicML | 30 Billion | Modified transformer architecture | 1 trillion tokens of English text and code | 56.15 |
| Dolly 2.0 | Databricks | 12 Billion | EleutherAI pythia model | databricks-dolly-15k | 43.67 |
| Platypus 2 | Cole Hunter and Ariel Lee | 70 Billion | Autoregressive language model | Open-Platypus | 73.13 |
| Stable Beluga 2 | Stability AI | 70 Billion | Autoregressive language model | Orca-style dataset | 71.42 |

If you further break down the overall LLM score into Reasoning Ability, Common Sense, Multi-Tasking, and Truthfulness, you can see which LLM is strongest in each area.

| LLM | Reasoning Ability (Out of 100) | Common Sense (Out of 100) | Multi-Tasking (Out of 100) | Truthfulness (Out of 100) |
|---|---|---|---|---|
| GPT-NeoX-20B | 45.73 | 73.45 | 25.0 | 31.61 |
| GPT-J | 41.38 | 67.56 | 26.61 | 35.96 |
| LLaMA 2 | 64.59 | 85.88 | 63.91 | 52.8 |
| OPT | 46.33 | 76.25 | 26.99 | 35.43 |
| BLOOM | 41.13 | 62 | 26.25 | 38.9 |
| Baichuan | 47.38 | 40.7 | 69.02 | 43.59 |
| CodeGen | 46.76 | 71.87 | 32.35 | 33.95 |
| BERT | 79.4 | 67.75 | NA | NA |
| T5 | NA | NA | NA | NA |
| Falcon-40B | 61.95 | 85.28 | 56.98 | 41.72 |
| Vicuna-33B | 62.12 | 83 | 59.22 | 56.16 |
| MPT-30B | 55.89 | 82.41 | 47.93 | 38.38 |
| Dolly 2.0 | 42.41 | 72.53 | 25.92 | 33.83 |
| Platypus 2 | 71.84 | 87.94 | 70.48 | 62.26 |
| Stable Beluga 2 | 71.08 | 86.37 | 68.79 | 59.44 |

Observations:

- If you compare the open-source LLMs based on the average score, Platypus 2 leads the chart, followed by Stable Beluga 2 and LLaMA 2.

- For reasoning ability, BERT is at the top, followed by Platypus 2 and Stable Beluga 2.

- If you analyze the LLMs based on their ability to answer common sense questions, Platypus 2 leads the way, followed by Stable Beluga 2 and LLaMA 2.

- When you evaluate LLMs based on multitasking, Platypus 2 is the winner, followed by Baichuan and Stable Beluga 2.

- Lastly, if you value getting most of the queries factually correct, Platypus 2 is the leader, followed by Stable Beluga 2 and Vicuna-33B.
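The rankings above can be reproduced with a few lines of Python. The sketch below copies the four sub-scores for five of the models from the table and assumes the overall score is their plain arithmetic mean, which agrees with the Overall column for these rows to within rounding. Because it covers only a subset of models, it is meant to show the calculation rather than replace the full comparison; the helper names are illustrative.

```python
# Sub-scores copied from the table above, in column order:
# (reasoning, common sense, multi-tasking, truthfulness).
scores = {
    "Platypus 2": (71.84, 87.94, 70.48, 62.26),
    "Stable Beluga 2": (71.08, 86.37, 68.79, 59.44),
    "LLaMA 2": (64.59, 85.88, 63.91, 52.8),
    "Vicuna-33B": (62.12, 83.0, 59.22, 56.16),
    "Falcon-40B": (61.95, 85.28, 56.98, 41.72),
}
TRUTHFULNESS = 3  # column index of the truthfulness score


def average(values):
    """Arithmetic mean of a model's sub-scores."""
    return sum(values) / len(values)


def top_n(category_index, n=3):
    """Names of the n models with the highest score in one category."""
    ranked = sorted(scores, key=lambda m: scores[m][category_index], reverse=True)
    return ranked[:n]


overall = {model: average(vals) for model, vals in scores.items()}
by_overall = sorted(overall, key=overall.get, reverse=True)

for model in by_overall:
    print(f"{model:<16} {overall[model]:.2f}")
print("Top 3 for truthfulness:", ", ".join(top_n(TRUTHFULNESS)))
```

Swapping in a different column index ranks the same models by any of the other three abilities.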

Make the most of open-source LLMs with Simform’s expertise

Open-source LLMs undoubtedly hold the key to shaping the future of AI-driven enterprises and startups. Their versatility and adaptability offer many benefits, from enabling innovative solutions in natural language processing to reducing development costs and fostering collaborative innovation.

However, businesses can’t ignore the potential risks, such as privacy concerns, data security, and data leaks that come with LLM implementation. To overcome these challenges, organizations with limited AI expertise need a reliable AI tech partner.

At Simform, we are at the forefront of AI and ML development, offering tailored solutions that align with your business goals. With our expertise, you can confidently make the most of open-source LLMs, leverage their advantages, and stay ahead of the curve. Embrace the future with open-source LLMs, and let Simform be your trusted partner on this transformative journey.