Since you’ve landed on this blog, it’s likely that you’re either using a serverless architecture or exploring the serverless cloud computing model. In either case, application security remains a top concern.

While many cloud providers offer powerful serverless services accompanied by robust security features, serverless applications are not immune to the security risks and challenges that come with any web or software application.

In this article, we will delve into the world of serverless security, covering what it is, the risks and challenges involved, and most importantly, best practices and expert tips for securing your serverless functions.

What is Serverless Security?

It refers to the practice of securing applications and services that utilize serverless computing architectures.

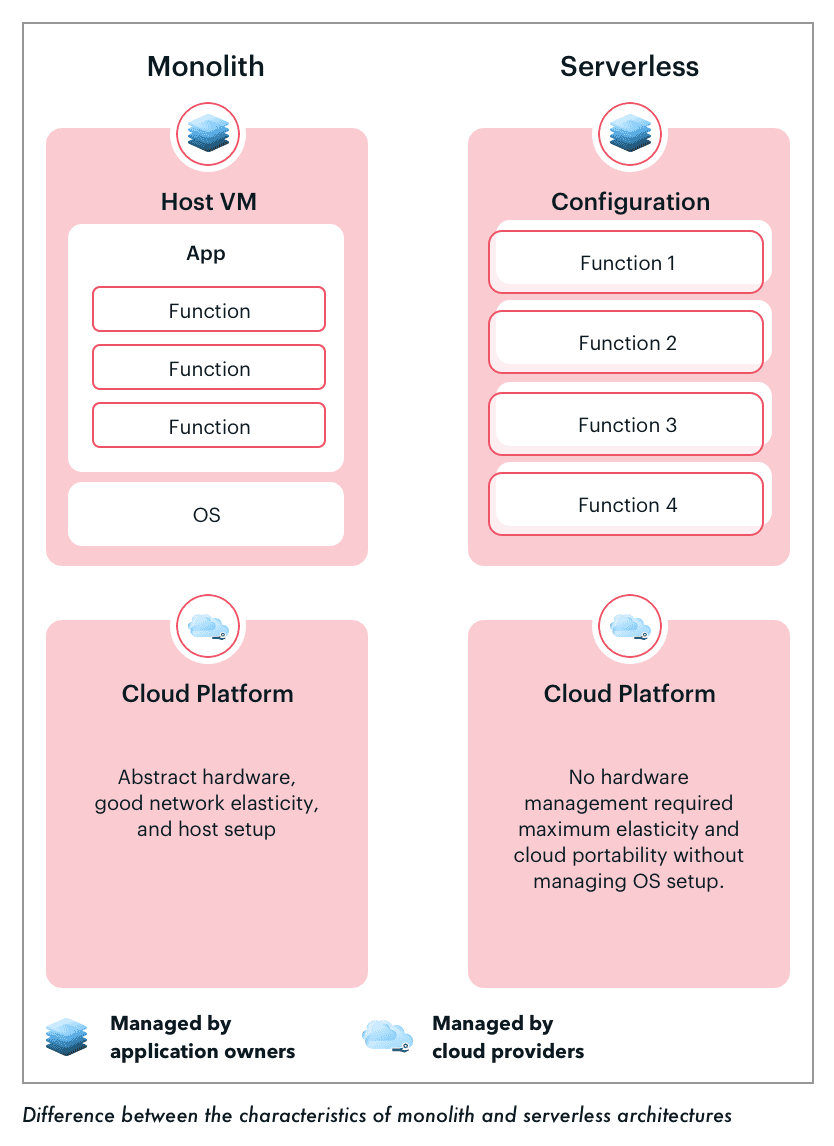

In a serverless computing model, applications are developed and deployed as individual functions or microservices, which are executed in response to specific events or triggers, without the need for managing infrastructure or underlying servers. Though organizations don’t need to worry about infrastructure-related operations in this model, specific security concerns still need to be addressed. Here’s why:

- The serverless model doesn’t use firewalls, intrusion detection and prevention software (IDS/IPS tools), or any kind of server-based protection methods.

- The architecture doesn’t have instrumentation agents or protection methods like file transfer protocols or key authentication.

Hence, serverless function security focuses on protecting applications from potential threats, assessing vulnerability, and ensuring data and resources’ confidentiality, integrity, and availability. It involves implementing security practices and measures at various levels, including the application code, the serverless platform, and the associated services and APIs.

In this architecture, the data requested by a user is stored on the client side. Take Twitter, for example: when you load more tweets, the page is refreshed on the client side, and the tweets are cached on the device. This means that the focus is on permissions, behavioral protection, data security, and strong code that shields applications from coding and library vulnerabilities.

Moreover, serverless architecture takes an even more granular approach than microservices. It uses independent, miniature pieces of software that interact through multiple APIs, and these APIs become public when they interact with serverless cloud providers. This mechanism creates a severe loophole where attackers could access the APIs and pose security challenges for complex enterprise software that uses serverless technology.

Here’s the graphical representation of how the serverless model works in comparison with traditional virtual machines:


You might’ve considered adopting a serverless model and wanted to know more about its impacts and security concerns. For instance,

- How does the serverless model protect an application without a physical server?

- Who provides this function as a service?

- What are some common security risks?

- What are the security best practices one could follow?

Before we explore these points, it is also essential to understand that cloud providers offer serverless models in the form of two distinct services: (i) Functions as a Service (FaaS) and (ii) Backend as a Service (BaaS).

FaaS involves executing business logic through cloud computing services such as AWS Lambda Functions, Microsoft Azure Functions, Google Cloud Functions, IBM Cloud Functions, etc. A BaaS model, by contrast, involves outsourcing all backend services of mobile and web applications to BaaS vendors.

Benefits of serverless architecture

Serverless architecture eliminates the need to manage servers, which allows developers to focus on writing code. Plus, the on-demand nature of serverless provides more efficient resource usage and built-in high availability.

But these are not the only benefits of a serverless architecture. There are a few more:

1. Reduced operational costs

The biggest benefit of serverless is that it eliminates the need to manage or scale the servers manually. The cloud provider handles all of that. Developers don’t have to care about infrastructure at all. This frees them up to focus on writing code.

2. Flexibility

You can mix and match various serverless services and compose them in different combinations to build solutions, rather than being constrained to monolithic architectures. This composable approach makes it easier to experiment with and evolve your system architecture as requirements change.

3. Cost efficiency

Serverless architectures follow a pay-as-you-go pricing model, ensuring that organizations only incur charges for the actual compute resources consumed during function execution. Eliminating idle resources and the efficient scaling mechanism contribute to cost efficiency, especially for applications with varying workloads.

4. Auto scaling

Serverless platforms automatically scale resources according to the demand, ensuring better performance and no manual intervention. Because of this, applications can easily handle fluctuations in user traffic and successfully respond to real-time drops and spikes in demand.

How and where to deploy serverless security?

Securing serverless architectures presents unique security challenges compared to traditional server-based architectures. Serverless architectures rely on third-party services and infrastructure, which increases the attack surface area.

Additionally, the event-driven nature of serverless functions makes it hard to track and monitor what is happening across a complex system. Since code execution is hidden within a cloud infrastructure, traditional security approaches like firewalls provide limited visibility.

Securing serverless architectures involves a combination of practices and measures at various layers of the application stack. Here are key areas and strategies for deploying serverless security:

1. Access control and authentication

- Establish robust access controls by adhering to the principle of least privilege. Assign only the essential permissions to serverless functions.

- Utilize the Identity and Access Management (IAM) features offered by your cloud service provider to articulate and uphold access policies.

- Implement authentication mechanisms for both user and function identities.

2. Data encryption

- Secure sensitive data by encrypting it while at rest and in transit. Make use of the encryption features provided by the cloud service provider, such as Key Management Services (KMS), for effective management of encryption keys.

- Deploy encryption protocols for data residing in databases, caches, and other services.

3. Secure configuration

- Update the configuration settings of your serverless functions and associated services from time to time to ensure they align with security best practices.

- Disable unnecessary features and services to reduce the attack surface.

4. API security

- Ensure the security of APIs through the implementation of authentication and authorization mechanisms.

- Utilize API Gateway security features to manage access, monitor usage, and safeguard against common API-related threats, such as injection attacks.

5. Incident response planning

- Create and regularly test an incident response plan designed explicitly for serverless architectures.

- Establish protocols for identifying, containing, and mitigating security incidents within a serverless environment.

6. Third-party security

- Thoroughly assess and monitor the security practices of third-party services and APIs on which your serverless functions depend.

- Opt for services with robust security postures and consider implementing service-level agreements (SLAs) to define security expectations.

Serverless Security Risks & Challenges

1) Insecure configurations, Function permissions, & Event Injections

- Insecure configuration:

Cloud service providers offer multiple out-of-the-box settings and features. These settings should be reviewed so that they provide reliable, authenticated access. If configurations are left unattended, they may result in serious security threats.

- Function permissions:

The serverless ecosystem has many independent functions, and each function has its own services and responsibilities for a particular task. The interaction among functions is extensive, and it may sometimes create a situation where functions become overprivileged. For example, a function that only sends messages might also be granted access to the inventory database.

- Event-data Injection:

Different event sources deliver different types of input, each with its own message format and encoding. Multiple parts of these messages may contain untrusted input that needs careful assessment.

Here’s how you can avoid this security risk:

- Keep your data separate from commands and queries.

- Make sure that the code is running with minimum permissions required for successful execution.

- Use a safe API to invoke your function, which avoids the use of the interpreter entirely.

If your function works with a SQL database, use SELECT with LIMIT and other SQL controls to prevent mass disclosure of records in case of SQL injection.

    SELECT expressions
    FROM tables
    [WHERE conditions]
    [ORDER BY expression [ ASC | DESC ]]
    LIMIT number_rows [ OFFSET offset_value ];
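
To make the advice above concrete, here is a minimal sketch of a parameterized query that also caps the number of rows returned. It uses Python's built-in `sqlite3` module with an in-memory database; the `orders` table, its columns, and the `find_orders` helper are hypothetical names chosen for illustration, not part of any real application.

```python
import sqlite3


def find_orders(conn, customer_name, max_rows=10):
    """Return at most max_rows orders for one customer."""
    # The ? placeholders keep user input separate from the SQL command,
    # so the input is always treated as data, never as SQL.
    query = (
        "SELECT id, customer, item FROM orders "
        "WHERE customer = ? ORDER BY id ASC LIMIT ?"
    )
    return conn.execute(query, (customer_name, max_rows)).fetchall()


def make_demo_db():
    """Build a throwaway in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, item TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders (customer, item) VALUES (?, ?)",
        [("alice", "book"), ("alice", "pen"), ("bob", "lamp")],
    )
    return conn
```

With this shape, a classic injection string such as `' OR '1'='1` is simply compared against the `customer` column and matches nothing, and even a successful query can never return more than `max_rows` records.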

2) Insecure storage, Function monitoring & logging

- Insecure Storage:

Sometimes, developers keep application secrets in plain-text configuration files, which makes the storage environment insecure. Small flaws like this can grow into serious threats in serverless hosting.

- Function monitoring & logging:

Cloud providers may not provide adequate logging and monitoring facilities for applications. This can leave risks at the application layer undetected.

Here are some examples of reports that you should generate on a regular basis for effective monitoring and logging. They are recommended in the SANS Essential Categories of Log Reports. You can also set alarms in Amazon CloudWatch so that you are notified of suspicious activity in any of the reports below:

- A report containing all login failures and successes by user, system and business unit

- Login attempts (success & failures) to disabled/service/non-existing/default/suspended accounts

- All logins after office hours or “off” hours

- User authentication failures by the count of unique attempted systems

- VPN authentication and other remote access logins

- Privileged account access

- Multiple login failures followed by success via the same account
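
As an illustration of the last report in the list, the sketch below flags accounts that log in successfully right after a run of failures. The event format (a time-ordered list of `(account, outcome)` pairs) and the `threshold` default are assumptions made for this example; real log records will need to be mapped into this shape first.

```python
from collections import defaultdict


def failures_then_success(events, threshold=3):
    """Return accounts that logged in successfully right after
    at least `threshold` consecutive failures."""
    streak = defaultdict(int)  # consecutive failures per account
    flagged = []
    for account, outcome in events:  # events must be in time order
        if outcome == "failure":
            streak[account] += 1
        else:
            if streak[account] >= threshold and account not in flagged:
                flagged.append(account)
            streak[account] = 0  # a success resets the streak
    return flagged
```

A report like this could feed an alarm: an account that appears in the result is a candidate for a brute-force or credential-stuffing investigation.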

3) Broken authentication

Unlike traditional applications, your serverless application is accessible to everyone once it’s published on the cloud. It also promotes an even more granular design than microservices and can therefore contain thousands of functions. So, you must apply robust authentication for end-user access and take care with how functions are invoked.

Below is how you can make sure that every endpoint is authenticated:

Incorporating an extensive authentication system that exercises control and authentication over these APIs is highly critical.

- Store each user’s active tokens in the database and authenticate them against every request. This lets you enforce token authentication, an invocation count, and an expiration time limit. If you’re just starting with serverless architecture, Amazon Cognito is an easy solution that will suffice.

- If user authentication is not an option, developers are advised to use secure API keys or SAML assertions.
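
The token approach described above can be sketched roughly as follows. This is an in-memory stand-in for the database, and the `TokenStore` class, its method names, and its default limits are all hypothetical; a production setup would persist tokens in a real datastore or delegate to a managed service.

```python
import secrets
import time


class TokenStore:
    """In-memory stand-in for a token table (hypothetical design)."""

    def __init__(self, ttl_seconds=900, max_invocations=100):
        self.ttl_seconds = ttl_seconds
        self.max_invocations = max_invocations
        self._tokens = {}  # token -> [user_id, expires_at, invocation_count]

    def issue(self, user_id, now=None):
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)  # unguessable random token
        self._tokens[token] = [user_id, now + self.ttl_seconds, 0]
        return token

    def authenticate(self, token, now=None):
        """Return the user id for a valid token, or None."""
        now = time.time() if now is None else now
        record = self._tokens.get(token)
        if record is None:
            return None
        user_id, expires_at, count = record
        if now >= expires_at or count >= self.max_invocations:
            del self._tokens[token]  # expired or used up: revoke it
            return None
        record[2] = count + 1  # count this invocation
        return user_id
```

Each function entry point would call `authenticate` before doing any work, so an expired, exhausted, or unknown token is rejected on every request rather than only at login.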

4) Automatic resource allocation

A serverless application doesn’t become blocked or unavailable when it is bombarded with fake requests as part of a cyber attack. Instead, the cloud provider automatically scales its resources under the “autoscaling” and “pay for what you use” model. This is unlike traditional architecture, where applications stop serving requests and become unavailable during such attacks. The application stays up, but you pay for every malicious request.

This may be difficult to tackle, but here are some proactive measures you can take to avoid the above risk:

- Set up automated monitoring tools and security analytics to continuously monitor for abnormal patterns of requests, unusual behavior, or signs of malicious activity.

- Set alerts to notify security teams promptly so that they can take necessary actions to mitigate the attack.

- Implement WAF (Web Application Firewall) to protect against common web-based attacks, such as SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). WAF can be configured to filter and block malicious requests, ensuring that only legitimate requests are processed by the serverless application.

- Implement Intrusion Detection and Prevention System (IDPS) as an additional layer of network security and network inspection to continuously monitor traffic and system behavior for signs of cyber attacks. IDPS can detect and block suspicious or malicious traffic, helping to prevent attacks from affecting the serverless app.

- Implement DDoS protection.

- Keep Function code secure with best practices.
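
One way to picture the monitoring measure above is a sliding-window counter that notices when a single client sends an abnormal number of requests. The sketch below is a simplified, in-process illustration; the `RequestMonitor` class and its limits are hypothetical, and in practice this job is usually handled by API gateway throttling or a WAF rather than by function code.

```python
from collections import defaultdict, deque


class RequestMonitor:
    """Sliding-window request counter per client (illustrative only)."""

    def __init__(self, max_requests=100, window_seconds=60):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._seen = defaultdict(deque)  # client id -> recent timestamps

    def allow(self, client_id, timestamp):
        """Record one request; return False once the client exceeds the limit."""
        recent = self._seen[client_id]
        # Drop timestamps that have fallen out of the window.
        while recent and recent[0] <= timestamp - self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False
        recent.append(timestamp)
        return True
```

A `False` result is the point at which you would reject the request and raise an alert, which limits how far autoscaling can be driven by one abusive client.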

5) Poor execution of verbose error messages

Sometimes, developers forget to clean up the code when moving applications to production and leave verbose error messages in place. If the function code is written in an insecure manner, these messages can leak secret information and open the door to other attacks.

Here’s what you can do to address this challenge:

Verbose error details must be kept inside the system as confidential data to protect the apps from attackers. Additionally, it is essential to have a proactive approach to code hygiene, logging, and error handling, and to continuously monitor for potential exposures of sensitive information to ensure the security of serverless applications. Also,

- Use automated tools and scripts to scan and identify sensitive information.

- Conduct regular code reviews and audits.

- Implement proper data sanitization techniques when new input or data is received such as data masking, data redaction, or data anonymization.

- Implement Role-Based Access Control (RBAC)
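
A minimal sketch of this idea: wrap each handler so that callers only ever receive a generic message plus an error id, while the redacted detail goes to the logs. The `safe_handler` decorator, the `redact` helper, and the secret-matching pattern are assumptions made for illustration and will not catch every kind of sensitive value.

```python
import logging
import re
import uuid

logger = logging.getLogger("handler")

# Hypothetical pattern: key=value pairs whose key looks like a secret.
SECRET_PATTERN = re.compile(r"(password|token|api_key)=\S+", re.IGNORECASE)


def redact(message):
    """Mask the values of secret-looking key=value pairs before logging."""
    return SECRET_PATTERN.sub(lambda m: m.group(1) + "=***", message)


def safe_handler(func):
    """Wrap a handler so callers never see internal error details."""
    def wrapper(event):
        try:
            return {"status": 200, "body": func(event)}
        except Exception as exc:
            error_id = str(uuid.uuid4())
            # The redacted detail stays in the logs, keyed by error_id.
            logger.error("error_id=%s detail=%s", error_id, redact(str(exc)))
            return {"status": 500, "body": "Internal error", "error_id": error_id}
    return wrapper
```

The error id gives support staff a way to find the full log entry without exposing stack traces, connection strings, or tokens in the response.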

6) System Complexity & lack of security testing

- System complexity:

Compared to traditional infrastructure, the system’s overall complexity increases when it handles multiple functions and deals with third-party dependencies. This makes malicious packages difficult to detect because behavioral security controls cannot be applied.

- Complex attack surface:

A serverless application has an expanded attack surface because of the vast range of event sources and the many small parts of the application. Its functions are triggered through multiple kinds of interaction, such as API gateway commands, cloud storage events, and many others. This makes it difficult to eliminate the risk of malicious event-data injection.

- Lack of security testing

Standard apps are easier to test because they lack the added complexity of serverless architectures. Serverless apps depend on third-party integrations such as database services, back-end cloud services, and other dependencies. As a result, security testing often falls short.

Here’s what you can do about dependencies in serverless applications:

- Remove unnecessary dependencies, unused features, components, files, and documentation. Continuously monitor versions of frameworks, libraries and their dependencies on both client and server-side.

- Components should be obtained from official sources with signed packages to reduce the chance of malicious data, components, or functions.

- Apply security patches to older versions of libraries and their components.

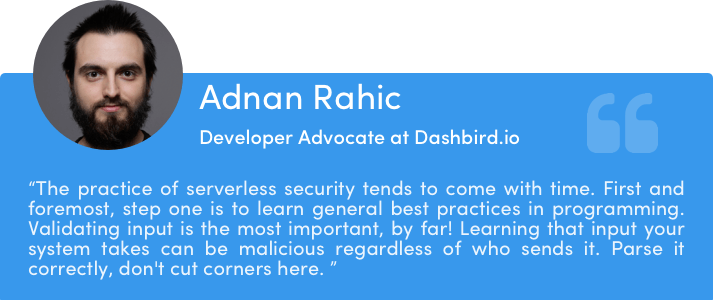

Adnan Rahic, developer advocate at Dashbird, says that the practice of serverless security tends to come with time. When you’re young and eager to build new software, security takes a back-seat ride. You build the app and hope you don’t get hacked. As a developer matures, this mindset changes.

The first step is to learn the standard best practices in programming. Validating input is, by far, the most important. Learn that any input to your system can be malicious, regardless of who sends it. Parse it correctly, and don’t cut corners here.

Once this is done, the following crucial steps are permissions and secrets. Using AWS serverless services such as AWS KMS is a must for efficient secrets and key management while permissions can be managed with ease through IAM Users and Roles. Bear in mind, this is most crucial for S3 buckets. Secure your buckets immediately because they are the most vulnerable part of the whole system.

Once you have these key steps taken care of, the rest is just keeping it all working flawlessly. This is where monitoring tools come into play. You also need to learn how to utilize Dashbird, CloudWatch, IOPipe, Thundra, or any other similar tool.


How to better secure serverless functions

When you go serverless, you don’t need to think about securing a server, unlike with a server-hosted application. However, you need to adopt practices that protect the whole serverless environment rather than just detecting intrusions at the firewall, as in server-hosted apps.

There are several best practices you can undertake to secure your serverless apps, which we will discuss below. First, let’s look at some best practices to secure serverless functions.

1. Limit permissions access

The serverless ecosystem is made up of various functions. Organizations may mistakenly grant functions too many permissions by giving them access to multiple parts of the application. Organizations also need to limit function access to the employees working closely on the projects.

So, they’d be setting permissions and limiting access for two entities:

i) Give employees limited rights to access functions:

You have to limit the permissions to specific roles. For example, if you are using AWS or Microsoft Azure as your cloud-service vendor, you can set limits or use a role-based setup for accessing features of functions. Organizations have to decide on the roles for their functions and distribute them among employees based on their responsibilities.

ii) Give functions limited access to confidential information:

This is about restricting resources per function. Much like limiting access to a team member, you could also regulate what part of your application a serverless function can access.

This sets a barrier around functions that are involved in a security breach. It especially helps when attackers succeed in compromising one function but then fail to access the rest of the system.

When enterprise systems are in danger, this segmentation of functions becomes a safety net that keeps a single breach from turning into a disaster. The permissions granted to a particular function determine what it can do at runtime; thus, you should limit them.

How to create roles and segregate functions to avoid these pitfalls?

- Create custom roles as per the needs and apply them to functions and on individual accounts or to a group.

- Set limits for one account or multiple accounts.

- Create identity-based roles and separate permissions for one user or apply a set of permissions for different roles.
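
To show what checking a role for excess privilege might look like, here is a small sketch that scans a policy document for wildcard grants. It assumes the policy follows the AWS IAM JSON layout (`Statement`, `Effect`, `Action`, `Resource`); the `find_broad_statements` helper is a hypothetical name, and the check is deliberately simple rather than a full policy analyzer.

```python
def _as_list(value):
    """IAM allows a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def find_broad_statements(policy):
    """Return the Allow statements that use a wildcard action or resource."""
    broad = []
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action", []))
        resources = _as_list(statement.get("Resource", []))
        # Flag "*" and service-wide grants such as "s3:*".
        if any(a == "*" or a.endswith(":*") for a in actions) or "*" in resources:
            broad.append(statement)
    return broad
```

Running a check like this over every function role before deployment makes overprivileged functions visible early, when they are cheapest to fix.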

Listed below are some resources that can come in handy:

- Serverless “Least Privilege” Plugin by PureSec

- AWS Identity & Access Management Best Practices Guide

- Microsoft Azure Identity Management and Access Control Best Practices Guide

- Leverage principle of least privilege

2. Monitor serverless functions

You should regularly assess all functions. Tracing them end-to-end improves your visibility into functions, helps you detect problems quickly, and keeps the focus on actionable insights. Security teams should also review audit and network logs at regular intervals.

These are some ways you can manage security logs:

- Keep track of the number of failing executions.

- Track the number of functions executed.

- Assess the performance of functions based on the time taken for code execution.

- Measure concurrency based on how many instances of a particular function run at the same time.

- Measure the amount of provisioned concurrency in use.

- Centralize logs from multiple accounts for real-time analysis.
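
The metrics in this list can be derived from plain invocation records. The sketch below assumes each record is a dict with `function`, `start`, `end`, and `ok` fields, which is a hypothetical format; your provider's logs or metrics service will expose the same information under different names.

```python
def summarize(invocations):
    """Summarize invocation records: dicts with 'function', 'start', 'end', 'ok'."""
    total = len(invocations)
    failures = sum(1 for i in invocations if not i["ok"])
    durations = [i["end"] - i["start"] for i in invocations]
    # Peak concurrency: sweep over start (+1) and end (-1) events in time order.
    # Tuples sort so that an end at time t is processed before a start at t.
    points = sorted(
        [(i["start"], 1) for i in invocations]
        + [(i["end"], -1) for i in invocations]
    )
    running = peak = 0
    for _, delta in points:
        running += delta
        peak = max(peak, running)
    return {
        "invocations": total,
        "failures": failures,
        "avg_duration": sum(durations) / total if total else 0.0,
        "peak_concurrency": peak,
    }
```

Tracking these numbers over time gives you a baseline, so a sudden jump in failures, duration, or concurrency stands out as something to investigate.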

3. Third-party dependencies

Developers often derive components from various third-party platforms. However, using unprotected application functions from public repositories like GitHub can lead to Denial-of-Wallet (DoW) attacks, data leakage, or sensitive data exposure.

It’s a best practice to check the reliability of sources, and ensure that the links are secure. What’s more, remember to check the latest versions of components used from open-source platforms to stay ahead of potential vulnerabilities and zero-day attacks.

How to do it?

- Regularly check for updates on development forums.

- Avoid using third-party software with too many dependencies.

- Use automated dependency scanner tools.
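
Dedicated scanners do far more than this, but a tiny example helps show the kind of check they automate. The sketch below flags entries in a pip-style requirements list that are not pinned to an exact version; the `unpinned_requirements` helper and its regular expression are simplifications written for this article, not a complete parser of the requirements format.

```python
import re

# name, optional [extras], then an exact "==version" pin
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[^\]]+\])?==[^=\s]+$")


def unpinned_requirements(lines):
    """Return requirement lines that are not pinned to an exact version."""
    flagged = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            flagged.append(line)
    return flagged
```

Pinned versions make it clear exactly which code runs inside each function, which is a precondition for checking those versions against known vulnerabilities.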

4. Isolate functions

Although it’s common to have serverless functions trigger each other to execute workflows that require multiple functions, teams should strive to isolate each function to the extent possible. Isolation between functions means applying a “zero trust” approach to function configuration. By default, no function should blindly trust another function or consider data received from it to be secure. How to achieve this isolation?

- Configure tight perimeters around each function by strictly limiting which resources functions can access.

- Avoid having functions invoke each other directly wherever possible. It can open doors to issues like financial exhaustion attacks if hackers compromise one function.

- Manage function execution using an external control plane instead of relying on the logic that is baked into individual functions.

- Minimize privileges in independent functions by separating functions from one another and limit their interactions by provisioning IAM roles on their rights.

- Ensure that the code runs with the least permissions required to perform an event successfully.
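
In code, the “zero trust” idea means a function validates every event it receives, even when the sender is another of your own functions. The sketch below checks a hypothetical order event with `order_id` and `quantity` fields; the field names and limits are assumptions for illustration only.

```python
def validate_order_event(event):
    """Return a cleaned copy of the event, or raise ValueError."""
    if not isinstance(event, dict):
        raise ValueError("event must be an object")
    allowed = {"order_id", "quantity"}
    unexpected = set(event) - allowed
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    order_id = event.get("order_id")
    quantity = event.get("quantity")
    if not isinstance(order_id, str) or not order_id.isalnum() or len(order_id) > 32:
        raise ValueError("order_id must be a short alphanumeric string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if (
        isinstance(quantity, bool)
        or not isinstance(quantity, int)
        or not 1 <= quantity <= 1000
    ):
        raise ValueError("quantity must be an integer from 1 to 1000")
    return {"order_id": order_id, "quantity": quantity}
```

Rejecting unknown fields as well as malformed ones keeps a compromised upstream function from smuggling extra instructions into the next step of the workflow.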

5. Timeout your functions

Most developers set the function timeout to the maximum allowed, as the unused time doesn’t create an additional expense. But this approach may create an enormous security risk: if attackers succeed with a code injection, they have more time to do damage. With a shorter timeout, they have to repeat the attack more often, which makes it easier to notice. So, here are some tips your teams can keep in mind:

- Consider the configured timeout versus the actual timeout.

- Have a tight runtime profile. The maximum duration of a function must be specific to that function.
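
One possible way to arrive at a function-specific timeout is to derive it from observed run times. The sketch below takes the 99th percentile of past durations and adds some headroom; the `suggest_timeout` helper, the 1.5x headroom, and the 900-second platform cap are assumptions to adjust for your own provider and workload.

```python
import math


def suggest_timeout(durations_seconds, headroom=1.5, platform_max=900):
    """Suggest a per-function timeout (whole seconds) from observed durations."""
    if not durations_seconds:
        raise ValueError("need at least one observed duration")
    ordered = sorted(durations_seconds)
    # Nearest-rank 99th percentile of the observed durations.
    rank = max(1, math.ceil(0.99 * len(ordered)))
    p99 = ordered[rank - 1]
    # Add headroom, round up, and never exceed the platform limit.
    return min(platform_max, math.ceil(p99 * headroom))
```

For example, a function whose slowest observed run took 2 seconds would get a 3-second timeout with the defaults, instead of the platform maximum.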

Serverless Security Best Practices

1. Automate security controls

Security teams should automate processes for configuration and test-driven checks. Automating these checks helps you cope with the complexity and larger attack surface of serverless architecture. You can integrate tools for continuous monitoring and access management. For example, there are several dependency scanners like snyk.io. Development teams can also write code that automates scanning for confidential information and checking of permissions.

Refer to this potential checklist to automate security controls:

- Check whether function permissions are broader than necessary and could be abused by attackers.

- Involve security analysis at the development stage as well as the pre-build or build stage in the CI/CD pipeline; automate continuous checking for vulnerabilities.

- Educate development teams on moving to infrastructure as code.

- Implement audit-logging policy and network configuration to detect compromised functions.
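
As a rough illustration of automated scanning for confidential information, the sketch below looks for two kinds of hardcoded secrets in source text. The patterns are examples only (an AWS-style access key id and simple `password = "..."` assignments) and the `scan_source` helper is hypothetical; purpose-built secret scanners cover many more formats.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_secret": re.compile(
        r"(?i)\b(password|secret|api_key)\s*=\s*['\"][^'\"]+['\"]"
    ),
}


def scan_source(text):
    """Return (line_number, pattern_name) for each suspicious line."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings
```

A check like this can run in the pre-build stage of a CI/CD pipeline and fail the build whenever it returns any findings.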

2. Handle credentials

It’s recommended to store sensitive credentials, such as database credentials, in a secure place and keep access to them tightly limited. Furthermore, be extra careful with critical credentials like API keys. Prefer evaluating environment variables at run time rather than setting them at deployment time in configuration files. It could be a nightmare if the same configuration file is used in multiple functions and you have to redeploy services because the variables are set at deployment time.

What are some ideal practices?

- First and foremost, rotate the keys on a regular basis. Even if you’re hacked, this ensures that the attackers’ access is cut off.

- Use separate keys for every developer, project, and component to prevent unauthorized access.

- Encrypt environment variables and sensitive data using encryption helpers.
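
Regular rotation is easier to enforce when something checks key ages for you. The sketch below lists keys older than a chosen limit; the `keys_due_for_rotation` helper, the 90-day default, and the key names in the usage note are assumptions for illustration, and the creation dates would come from your provider's key management or IAM APIs.

```python
from datetime import datetime, timedelta, timezone


def keys_due_for_rotation(keys, max_age_days=90, now=None):
    """keys: mapping of key name -> creation datetime (timezone-aware).
    Return the sorted names of keys older than max_age_days."""
    now = now or datetime.now(timezone.utc)
    limit = timedelta(days=max_age_days)
    return sorted(name for name, created in keys.items() if now - created > limit)
```

Given one key per developer, project, and component (for instance hypothetical names like `ci` and `dev-alice`), a scheduled job could run this check and open a ticket for each key it returns.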

3. Deploy at a specific time

You should identify the best time to deploy an application module so that users get a bug-free platform. Deploy modules when users are not actively engaging with the platform and traffic is minimal. To protect applications against attackers, you could restrict deployment to certain time intervals.

What should be an ideal deployment practice?

- Deploy at idle times.

- Avoid rush hours like Black Friday.

- Avoid huge system updates in real-time; set specific time slots for upgrades.

4. Consider geography

While deploying an app, developers should keep in mind certain geographic considerations that could create code-related issues. For example, if a developer located in New York deploys to the us-east-1 region while a developer in Asia uses a different region or time zone in their deployment settings, it can lead to unexpected problems in development. To avoid such issues:

- Use a single region or a suitable time zone for deployment.

- Use safeguard measures while working with the serverless framework to avoid unexpected problems in continuous development, and manage work dependencies.

5. Be minimalistic

Serverless functions are designed to facilitate running small, discrete pieces of code seamlessly on an on-demand basis. Nevertheless, one can easily forget this principle and treat these functions as a means of deploying any application type. So here are some practices to keep in mind:

- Have a minimalist approach to serverless computing.

- Strive to reduce the code inside each function to the bare minimum. The more code you run inside a function, the higher the risk of misconfiguring something or introducing insecure dependencies.

What to do Next?

Serverless architectures require extra care because the focus shifts from infrastructure operations to developing quality code. And a lot of responsibilities, including security aspects, are given to the cloud providers to handle your application infrastructure.

Thus, it is critical to consider security as an integral part of your serverless application development and deployment process. Remember to:

- Adopt a proactive and comprehensive approach to identify, mitigate, and manage security risks.

- Prioritize serverless security as an ongoing process and stay informed about evolving threats and new attack vectors to keep your applications safe and secure.

What’s more, with companies increasingly going serverless, cloud providers are making sure to offer robust cloud services and strong security features from their end.

Do you have experience with serverless security and security best practices about this architecture? Share your insights in the comments section or join me on Twitter @RohitAkiwatkar to take the discussion further.