Data Design: A Smart Data Reporting Solution That Empowers Schools

Category: Education

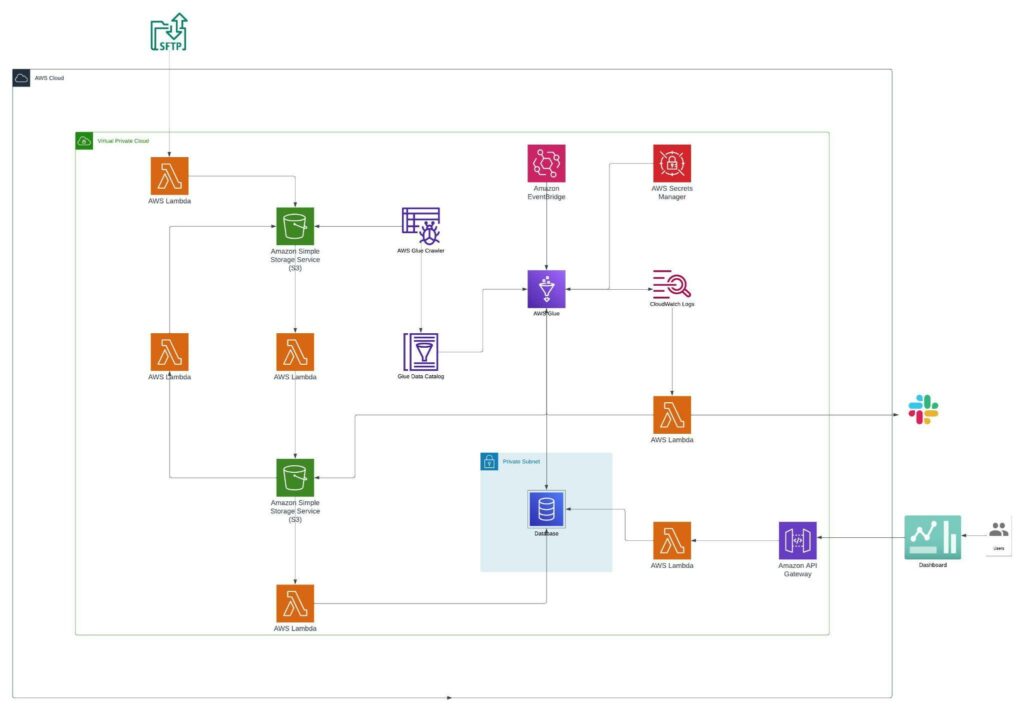

Services: Managed Engineering Teams, Cloud Architecture Design and Review, Data Processing, Data Ingestion, and Data Governance

DataDesign.io provides data management and reporting solutions for educational institutions. It streamlines communication between students, parents, and teachers, saving valuable time.