Say your company faced major database corruption and moved to cloud services like AWS for reliability, fault tolerance, and scalability. Eventually, you switched to a serverless architecture, and AWS Lambda became a core part of your ecosystem, handling many critical functions.

Now, just when you thought you’d solved the problem, you run into cold starts that add startup latency. This is a common issue with AWS Lambda: the initial setup time slows the function down and disrupts the user experience. This blog discusses how you can prevent this scenario, along with solutions based on experiments carried out by serverless experts.

Reasons for AWS Lambda Cold Starts

A cold start commonly occurs when a function is called for the first time after deployment, because its container takes time to spin up. This can cause a delay of up to a few seconds, hurting the application’s responsiveness. The choice of programming language and the number of dependencies a function carries also affect cold starts. For instance, when you call a function, all its dependencies are loaded into the container, which adds further latency.

Ways to Reduce AWS Lambda Cold Start

1. Avoid HTTP/HTTPS Calls Inside Lambda

HTTPS calls lengthen cold starts in serverless applications and increase a function’s invocation time. Simply put, when an HTTPS call happens, the SSL (Secure Sockets Layer) handshake and other security-related steps must also complete. These tasks are CPU-bound, so the limited CPU available to the function makes the cold start even slower.

The AWS SDK ships with several HTTP client libraries from which you can make an SDK call. All of these libraries support connection pooling; however, although Lambda can handle many requests simultaneously across containers, each container serves only one request at a time. This makes most pooled HTTP connections useless. Therefore, you should use only the HTTP client available in the AWS SDK.
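Since each container serves one request at a time, a single connection that is created once and reused across invocations is usually enough. The sketch below illustrates that idea with Python's standard `http.client` rather than an SDK client; the host name `api.example.com`, the `/health` path, and the function names are hypothetical and only there for illustration.

```python
import http.client

# Hypothetical endpoint, used only for illustration.
API_HOST = "api.example.com"

# Module-level state survives between invocations while the container is warm.
_connection = None


def get_connection():
    """Return one shared HTTPS connection, creating it on first use."""
    global _connection
    if _connection is None:
        # Constructing the object does not open a socket yet; the TLS
        # handshake happens on the first request and can then be reused.
        _connection = http.client.HTTPSConnection(API_HOST, timeout=5)
    return _connection


def handler(event, context):
    conn = get_connection()
    conn.request("GET", "/health")
    response = conn.getresponse()
    body = response.read()  # drain the body so the connection can be reused
    return {"statusCode": response.status, "body": body.decode("utf-8")}
```

The handshake cost is then paid once per container instead of once per request. A production version would also need to reconnect when the server closes an idle connection.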

2. Preload All Dependencies

Most of us have had that one instance when we imported dependencies after invoking a function and waited longer than we bargained for. Preloading dependencies is an effective way to dodge this situation and reduce the impact of cold starts. Fret not if it doesn’t work out the first time: use handlers between the invoking service and the function to load the dependencies before the invocation.
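In Python, one common way to preload is to do the expensive work at module scope, which runs once when the container is initialized, and keep the handler itself thin. The following is a minimal sketch under that assumption; `_load_settings`, `SETTINGS`, and the sample values are hypothetical stand-ins for real dependencies.

```python
import json

INIT_COUNT = 0  # only here to show how often initialization runs


def _load_settings():
    """Stand-in for expensive setup such as parsing config or building clients."""
    global INIT_COUNT
    INIT_COUNT += 1
    return json.loads('{"table": "orders", "region": "us-east-1"}')


# Executed once per container, before the first invocation is handled.
SETTINGS = _load_settings()


def handler(event, context):
    # Every invocation reuses the preloaded settings instead of rebuilding them.
    return {"table": SETTINGS["table"], "init_count": INIT_COUNT}
```

Calling `handler` repeatedly in the same container keeps `init_count` at 1, because the setup cost is paid during initialization rather than on each request.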

3. Avoid Colossal Functions

In serverless, large functions simply mean more set-up time required by the vendor. Although the serverless ecosystem breaks the monolithic structure into granular services, you still have to analyze the architecture and find out how to separate the concerns.

Since each serverless architecture is different and has its own requirements, you’d have to monitor it continuously to identify whether the functions/services must be kept together or separated. Software architects approach this with the ‘high cohesion, loose coupling’ principle.

4. Reducing Setup Variables

Setting up static variables plays a big part in preparing a serverless container. Initializing these variables and their associated components takes time, so the more static variables you have, the higher the cold start latency. Removing unnecessary static variables may shorten a cold start, but the impact will depend on the initial function size and the complexity of the architecture.

So far we’ve seen best practices to reduce/avoid a cold start. However, these aren’t the only techniques. What we’re going to see next are some techniques backed by experiments conducted by serverless experts.

Experimentation-based Techniques to Prevent Cold Start

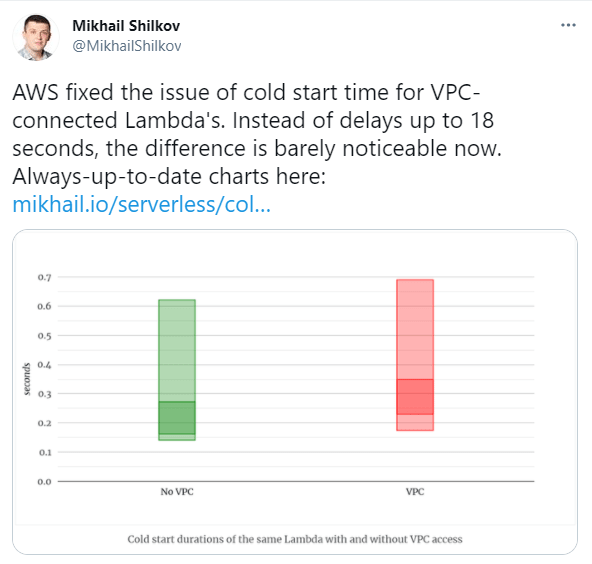

1. Run Lambdas Out of VPC

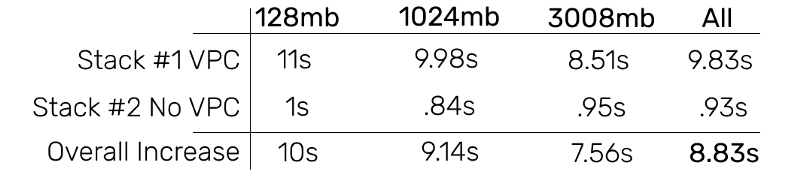

Nathan Malishev, a software engineer, ran two tests, one with and the other without a VPC, to determine the time taken to cold start a single Lambda.

He created two identical stacks with CloudFormation, an AWS infrastructure-as-code service. One stack ran inside a VPC and the other outside. He then deployed and ran the Lambda functions with three different memory sizes: 128 MB, 1536 MB, and 3008 MB.

Source: Freecodecamp.org

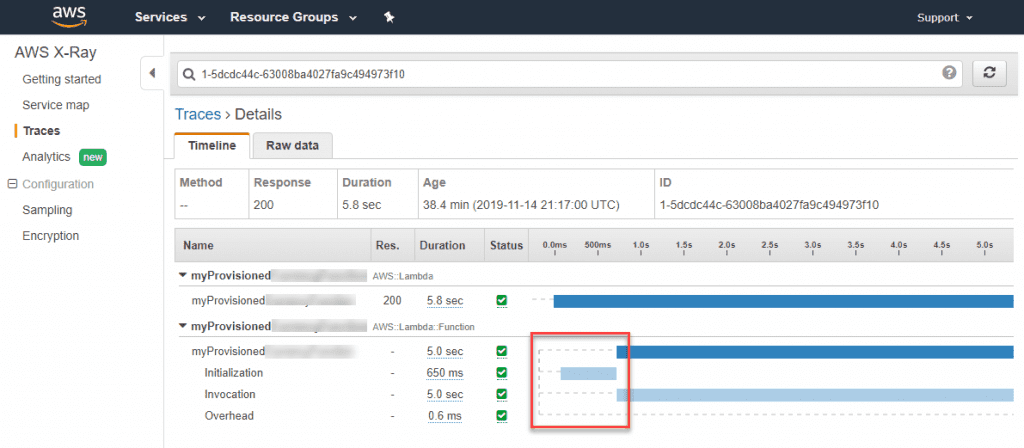

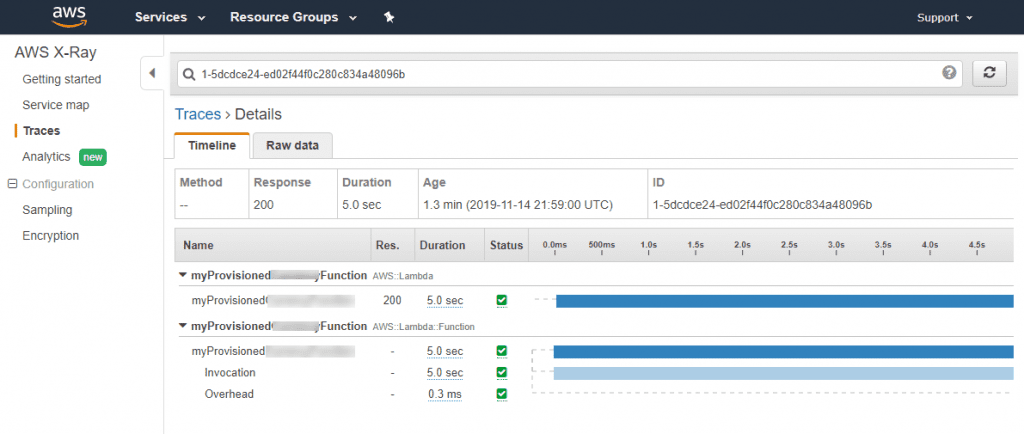

2. Provisioned Concurrency

Provisioned Concurrency is another way to bring function start-up latency into predictable territory. James Beswick, Principal Developer Advocate for AWS Serverless, suggests that any cold start consists of two major parts:

(i) execution environment controlled by AWS

(ii) initialization duration controlled by a developer

He further compared the results of Lambda on-demand and Provisioned Concurrency:

Source: Amazon.com

In the on-demand Lambda function, the slowest execution added 650 ms of latency to the initialization time.

Source: Amazon.com
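For readers who want to try Provisioned Concurrency, here is a hedged sketch of enabling it with the `boto3` Lambda client's `put_provisioned_concurrency_config` call. The function name `checkout`, the alias `live`, and the helper names are hypothetical; `boto3` and valid AWS credentials are assumed to be available when `apply_provisioned_concurrency` is called.

```python
def build_request(function_name, alias, executions):
    """Build the arguments for put_provisioned_concurrency_config."""
    if executions < 1:
        raise ValueError("executions must be at least 1")
    return {
        "FunctionName": function_name,
        "Qualifier": alias,  # a published version or alias, not $LATEST
        "ProvisionedConcurrentExecutions": executions,
    }


def apply_provisioned_concurrency(function_name, alias, executions):
    """Send the request to AWS (assumes boto3 and credentials are set up)."""
    import boto3  # imported here so build_request works without the SDK

    client = boto3.client("lambda")
    return client.put_provisioned_concurrency_config(
        **build_request(function_name, alias, executions)
    )


# Example with hypothetical names:
# apply_provisioned_concurrency("checkout", "live", 5)
```

Provisioned environments are billed while they are kept ready, so the count is worth sizing against observed traffic.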

3. Keeping Your Lambda Functions Warm

When building a payment solution using AWS Lambda, Gonçalo Neves, Product Engineer at Fidel, encountered a series of cold starts. To solve this, he created serverless-plugin-warmup, which invokes the Lambda functions at specific intervals to keep their containers active. You can install the plugin via npm (Node Package Manager).
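On the function side, a warmed function usually returns early when it recognizes a warm-up invocation so that no business logic runs. The sketch below assumes the warm-up event carries a `source` field set to `serverless-plugin-warmup`; that payload shape is an assumption to check against your plugin configuration, and the response bodies are hypothetical.

```python
# Assumed marker for warm-up events; verify it against your plugin settings.
WARMUP_SOURCE = "serverless-plugin-warmup"


def is_warmup(event):
    """Return True when the invocation looks like a scheduled warm-up ping."""
    return isinstance(event, dict) and event.get("source") == WARMUP_SOURCE


def handler(event, context):
    if is_warmup(event):
        # Exit immediately: the goal is only to keep the container alive.
        return {"warmed": True}
    # Real work happens only for genuine requests.
    return {"statusCode": 200, "body": "processed"}
```

Returning early keeps warm-up invocations short, which limits what they add to the bill.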

4. Choosing the Right Programming Language

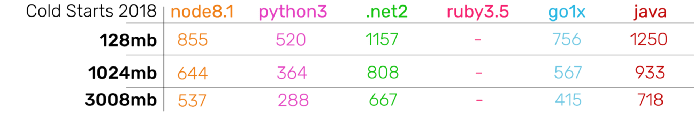

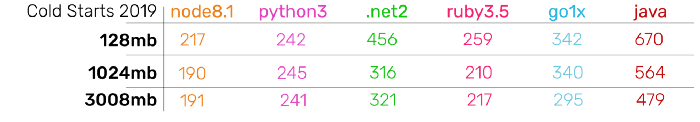

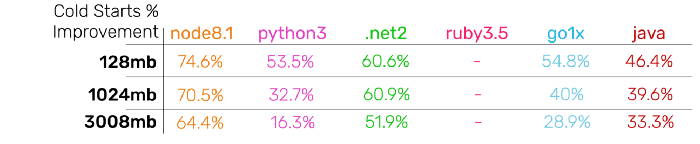

Nathan Malishev, an AWS and serverless expert, measured cold start durations for six different programming languages and three different RAM sizes.

He created three functions for each language, namely Go 1.x, Node.js 8.10, Java 8, Ruby 2.5, Python 3.6, and .NET Core 2.1, and allocated 128 MB, 1024 MB, and 3008 MB of memory to them. The results for 2018 and 2019 were:

Source: Gitconnected.com

Nathan observed:

- Node.js and Python worked well with Lambda functions, whereas .NET, Go, and Java had room for improvement.

- In 2019, cold start times improved significantly for all the programming languages compared to 2018.

- Node.js showed a whopping 74.6% improvement in cold start time, the largest gain of any language tested.

5. Continuous Monitoring Using Log Data

Every second wasted in a cold start increases cost, because you pay for that compute time even though it does no productive work. But here’s the thing: if you feed your log data into serverless monitoring applications, you’re more likely to dodge that bullet.

You can measure the performance of your Lambda functions using tools like Gatling and AWS X-Ray. They track performance and highlight areas for improvement, which eventually helps minimize cold starts. However, monitoring Lambda functions has its own set of challenges. You can address them by using CloudWatch’s invocation, performance, and concurrency metrics alongside third-party log management tools.
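As a small illustration of using log data, the sketch below scans Lambda `REPORT` log lines and counts cold starts by looking for the `Init Duration` field, which Lambda includes only for invocations that went through initialization. The function name `summarize` and the sample lines are made up for the example.

```python
import re

# "Init Duration" appears in a REPORT line only when the invocation was a cold start.
INIT_RE = re.compile(r"Init Duration: ([\d.]+) ms")


def summarize(lines):
    """Count invocations and cold starts from Lambda REPORT log lines."""
    invocations = 0
    init_durations = []
    for line in lines:
        if not line.startswith("REPORT"):
            continue  # skip START, END, and application log lines
        invocations += 1
        match = INIT_RE.search(line)
        if match:
            init_durations.append(float(match.group(1)))
    return {
        "invocations": invocations,
        "cold_starts": len(init_durations),
        "max_init_ms": max(init_durations, default=0.0),
    }


# Made-up sample lines in the REPORT format.
sample = [
    "START RequestId: abc Version: $LATEST",
    "REPORT RequestId: abc\tDuration: 12.50 ms\tBilled Duration: 13 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 50 MB\tInit Duration: 210.40 ms",
    "REPORT RequestId: def\tDuration: 3.10 ms\tBilled Duration: 4 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 50 MB",
]
print(summarize(sample))
```

Tracking the share of cold starts and the largest init duration over time shows whether the other techniques in this article are paying off.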

6. Reducing Function Dependencies

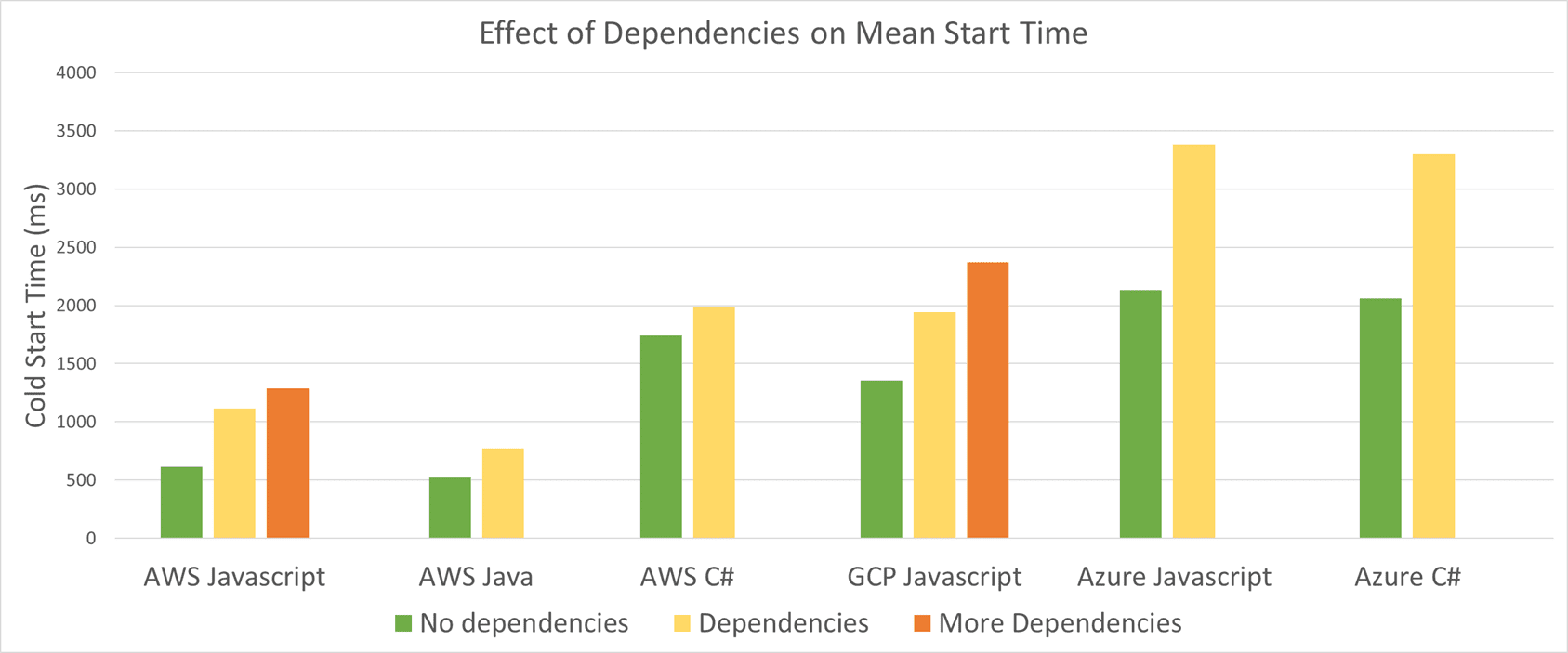

Mikhail Shilkov, a cloud developer and Microsoft Azure MVP, measured cold starts for four different functions with additional dependencies on AWS, Google Cloud Platform (GCP), and Microsoft Azure:

- JavaScript referencing 3 npm packages (5 MB zipped)

- JavaScript referencing 38 npm packages (35 MB zipped)

- C# function referencing 5 NuGet packages (2 MB zipped)

- Java function referencing 5 Maven packages (15 MB zipped)

Source: @MikhailShilkov

7. Optimize Memory and Code Size

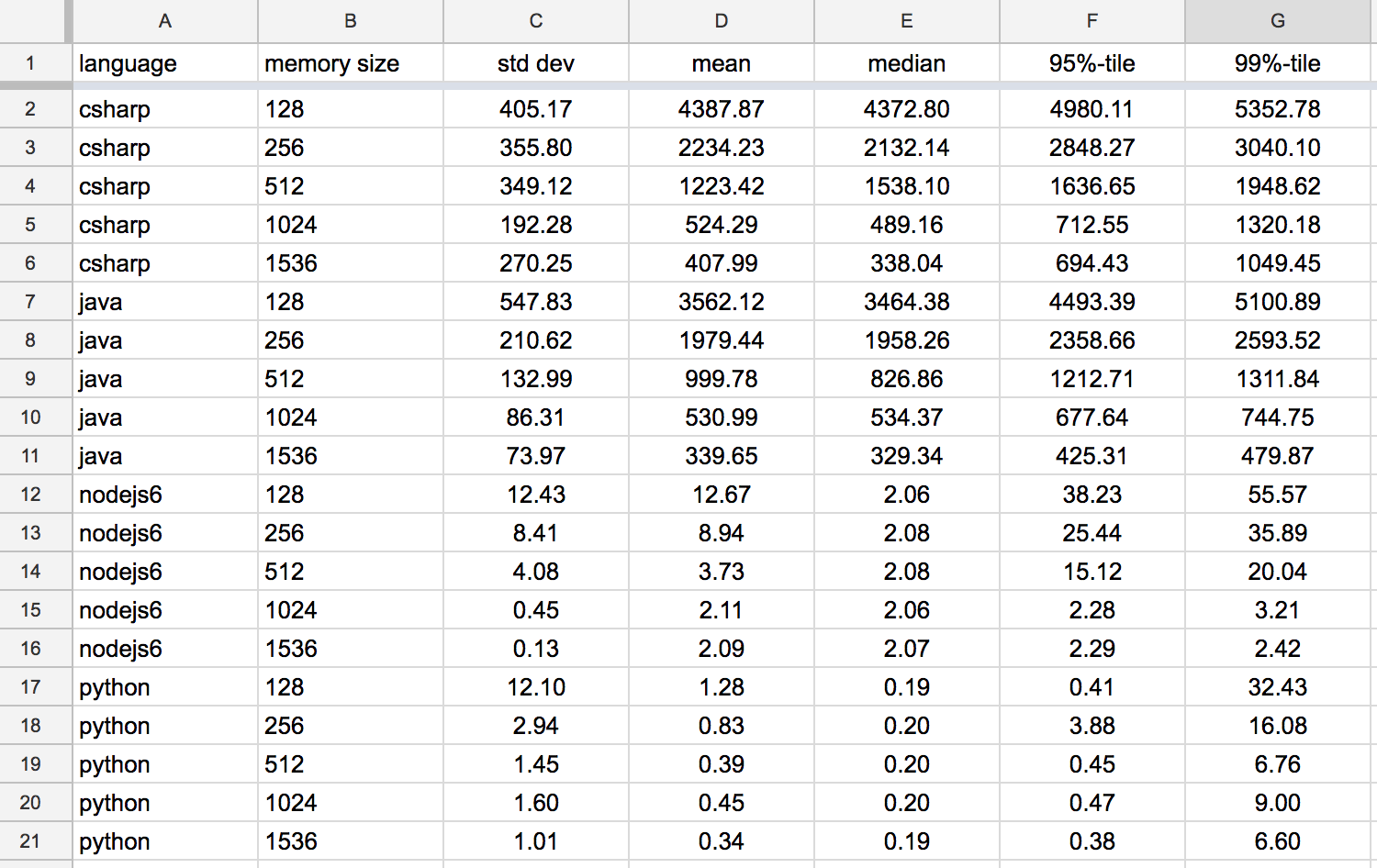

Yan Cui, the Principal Consultant at The Burning Monk, created 20 different functions using C#, Java, Node.js, and Python to determine the effects of memory and code size on cold starts. The tests ran over a span of 24 hours on five different memory sizes: 128 MB, 256 MB, 512 MB, 1024 MB, and 1536 MB.

Source: Acloudguru.com

The test revealed that the higher the memory, the lower the mean cold start time, especially for C# and Java.

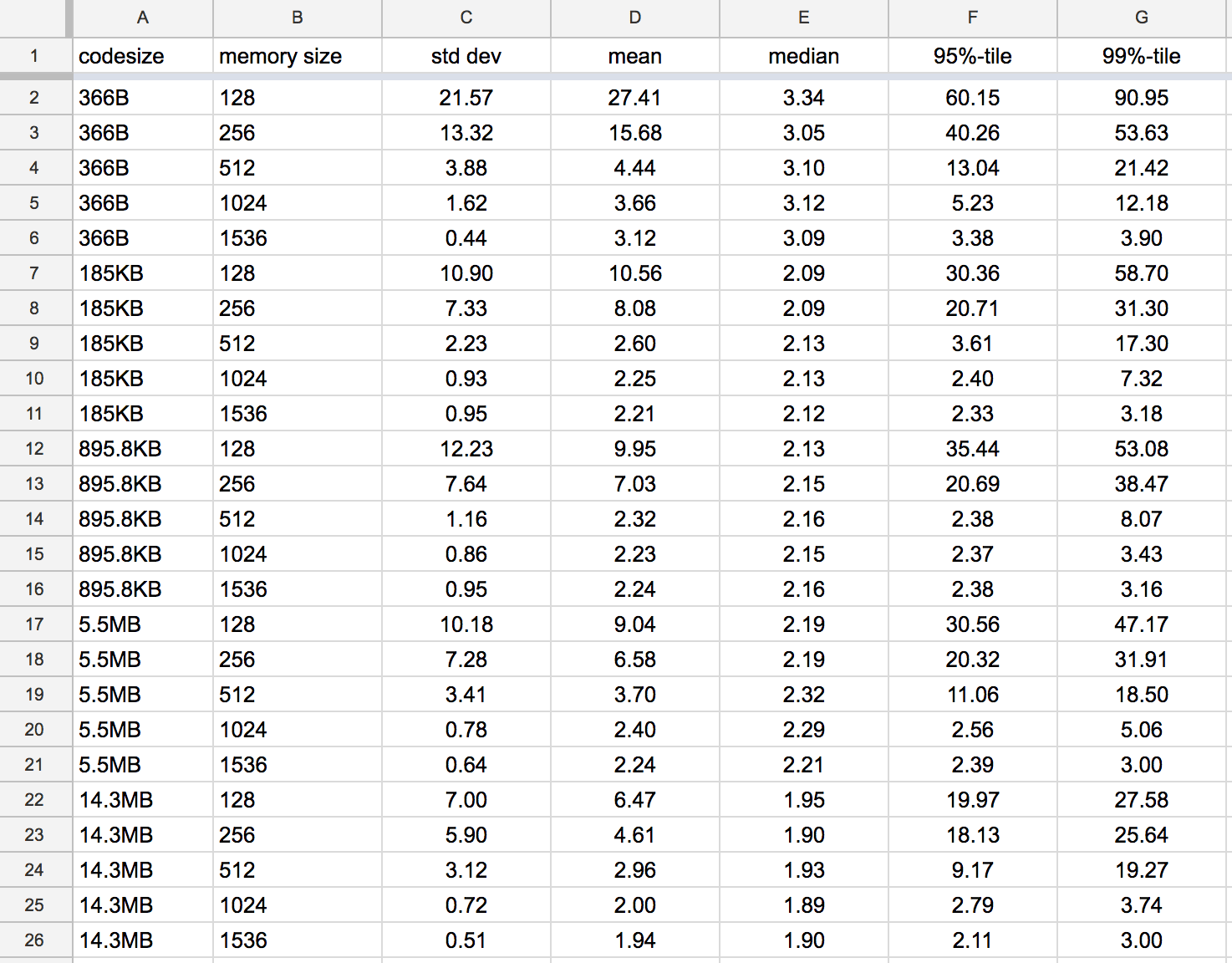

Furthermore, Yan created 25 functions of various code sizes and memory settings to measure the impact of code size on cold start time. Node.js was used as the runtime for each function.

Source: Acloudguru.com


8. Load Fewer Resources

Felipe Rhode, an AWS Advocate and Node.js Developer, conducted an experiment to shrink the cold start duration by focusing on ‘Loading Less Resources at Memory’. This meant running less code up front and loading the rest only when needed. Once the code was loaded, it was cached for future calls.

This technique is used to lazy load database modules and schemas. With it, modules that a given invocation doesn’t need are never loaded during the AWS Lambda lifecycle. When fewer modules are loaded, the function takes less time to start, which reduces the impact of a cold start.

9. Deployment Artifact Size Matters

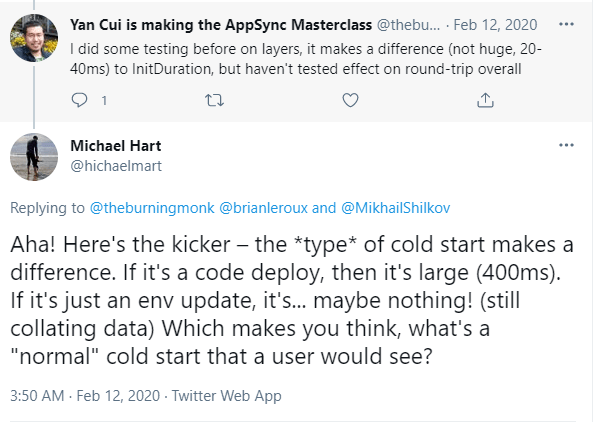

Replying to Yan Cui’s tweet about cold start time, Michael Hart, VP Research Engineering at Bustle, underlined the striking difference between the two major types of cold start:

- Type 1: Cold start that happens after a code change

- Type 2: Cold start that happens when Lambda needs to scale up the number of workers to match traffic demand

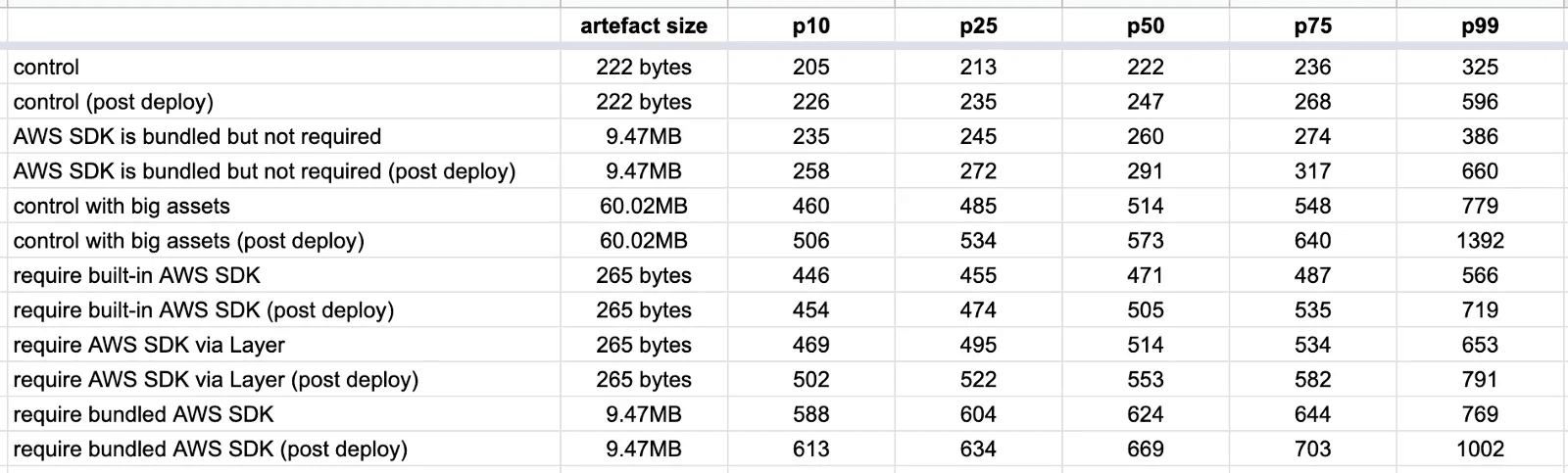

Yan then performed another test that measured the roundtrip duration for various functions. He collected 200 data points for type 1 cold start and 1000 data points for type 2, which produced the following results:

Source: Lumigo.io

He found out that:

- The type 1 cold start was slower than type 2, which meant the deployment artifact size significantly impacted the cold start time.

- The cold start timing for the control function with an artifact size of 222 bytes was 325 ms and 596 ms for p99. However, when the artifact size shot to 9.47 MB, the cold start timing increased by 60 ms. The real difference appeared when the deployment artifact size was 60.02 MB; here, the cold start timing increased by 450 ms.

- The cold start time grows as the deployment artifact gets bigger. To reduce this effect, you can use the webpack bundler to resolve all dependencies before the functions are invoked.

Conclusion

If you’re working with a serverless ecosystem, you have to deal with AWS Lambda day in and day out. Lambda functions involve cold starts, and you can’t eliminate them, but you can reduce or manage them effectively with meticulous planning and execution.

Many serverless experts have been able to find some unique solutions to avoid cold start based on their experiments. However, there is no one-size-fits-all kind of solution for a cold start. You need to analyze your requirements and then, carefully pick the one that suits you the best.

If you’re facing a cold start for your serverless application, connect with us. We’re a leading serverless app development firm that provides consultation and offers customized solutions related to the serverless niche.