What is Microservices Testing Strategy?

A microservices testing strategy involves breaking down the application’s functionalities into smaller, independent services and testing each one individually and as part of the larger system.

This approach emphasizes testing the APIs and communication between microservices to ensure proper integration while also focusing on unit testing, contract testing, and end-to-end testing.

Microservices testing strategy helps ensure microservices-based applications’ reliability, scalability, and maintainability by employing techniques like mocking, stubbing, and using specialized tools.

Benefits of Microservices Testing Strategy

Testing microservices differs from testing traditional monolithic applications due to their distributed nature and autonomy. A clear testing strategy is vital for the following reasons:

- Isolation and Independence: Microservices testing strategies ensure thorough individual testing of microservices without reliance on other services. This speeds up testing and eases bug identification.

- Complex Interactions: Microservices communicate via networks, often with intricate protocols (e.g., HTTP, messaging). A testing strategy detects data inconsistency, communication failures, and integration problems.

- Continuous Deployment: Microservices often adopt continuous integration and continuous deployment (CI/CD) practices. A testing strategy supports these practices with structured, automated testing, enabling the confident deployment of changes.

- Tooling and Automation: An effective testing strategy outlines appropriate testing tools and frameworks for automating various tests, such as unit tests, integration tests, performance tests, and more.

- Documentation and Communication: Acting as a guide for the testing team, the strategy documents testing approaches, methodologies, and processes. This enhances communication and alignment among team members.

How Do Microservices Interact with Each Other?

Toby Clemson, CTO at B-Social, perfectly summarizes microservices interaction and testing, “By breaking a system up into small well-defined services, additional boundaries are exposed that were previously hidden. These boundaries provide opportunities and flexibility in terms of the level and type of microservices testing strategies that can be employed.”

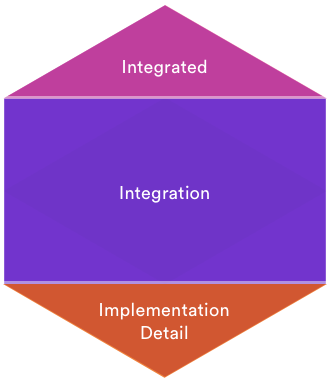

As we move towards understanding how testing works with microservices, keep in mind that to make your strategy exhaustive, you should aim to cover each layer of the service, and the interactions between layers, while keeping the test suite lightweight.

I am not going to go into much detail about the architecture itself; however, a quick reminder of the core concepts won’t hurt.

If you want to read more, experts like Robert Martin and Simon Brown (and many others) have covered it extremely well.

Microservices testing

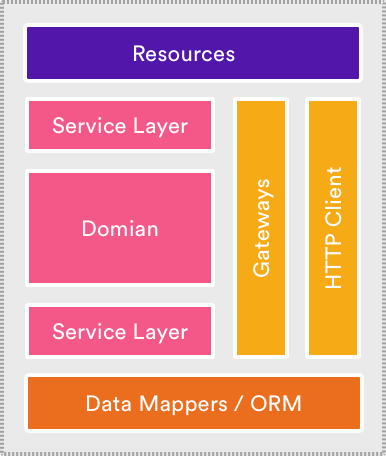

With reference to the above image, resources act as mappers between the application protocol exposed by the service and messages to objects representing the domain.

Service logic, which includes the service layer, domain, and repositories, represents the business domain.

When a microservice needs to persist domain objects between requests, an object-relational mapper (ORM) or a more lightweight data mapper comes into play.

Whichever microservices testing strategy you opt for, it should provide coverage for each of these communications at the finest granularity possible.

Microservices Testing Types

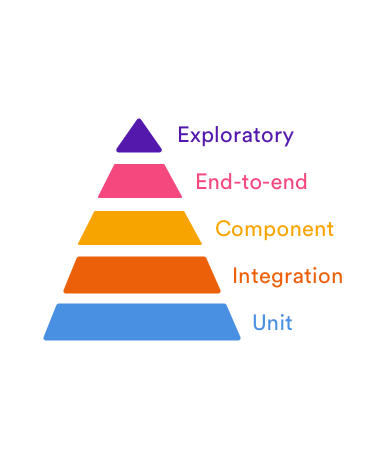

When it comes to microservices testing, the more coarse-grained a test is, the more brittle and time-consuming it becomes to write, execute, and maintain. This helps us understand the relative number of tests that should be written at each granularity, which is exactly what Mike Cohn’s test pyramid explains.

Microservice Testing Pyramid

As we move towards the top layers of the pyramid, the scope of the tests increases and the number of tests that must be written decreases.

Let’s explore microservices testing types.

Unit Testing for Microservices

Microservices are themselves built on the notion of splitting business logic into its smallest units. These services then communicate with each other over a network. Unit testing is therefore all the more important in this context, to validate each piece of business logic, that is, each microservice, separately.

Do not confuse unit testing with functional testing. They are very different beasts.

Although the size of the unit under test is not defined anywhere, we at Simform write unit tests at the level of a class or a group of related classes. Keep the units under test as small as possible: a small test makes it easier to express the behavior, since the branching complexity of the unit is lower.

But it’s easier said than done!

The difficulty in writing unit tests surfaces when a module has to be broken down into independent, more coherent pieces and tested individually. On the other hand, this is also what makes unit testing a powerful design tool when combined with test-driven development (TDD).

Toby Clemson divides unit testing for microservices into two subgroups:

- Sociable unit testing focuses on testing the behavior of a module by observing changes in its state.

- Solitary unit testing looks at the interactions and collaborations between an object and its dependencies, which are replaced by test doubles.
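To make the difference concrete, here is a minimal sketch in Python. The `Cart` and `PriceCalculator` classes are hypothetical names invented for this illustration; the only real API used is `unittest.mock.Mock` from the standard library.

```python
from unittest.mock import Mock


class PriceCalculator:
    """Real collaborator, used as-is by the sociable test."""

    def total(self, items):
        return sum(price * qty for price, qty in items)


class Cart:
    """Unit under test: collects items and delegates pricing."""

    def __init__(self, calculator):
        self.calculator = calculator
        self.items = []

    def add(self, price, qty):
        self.items.append((price, qty))

    def checkout(self):
        return self.calculator.total(self.items)


def test_sociable():
    # Sociable: keep the real collaborator and assert on observable state.
    cart = Cart(PriceCalculator())
    cart.add(10, 2)
    cart.add(5, 1)
    assert cart.items == [(10, 2), (5, 1)]
    assert cart.checkout() == 25


def test_solitary():
    # Solitary: replace the collaborator with a test double and
    # assert on the interaction between the unit and its dependency.
    calculator = Mock()
    calculator.total.return_value = 99
    cart = Cart(calculator)
    cart.add(10, 2)
    assert cart.checkout() == 99
    calculator.total.assert_called_once_with([(10, 2)])
```

Both functions follow the `test_` naming convention, so a runner such as pytest should pick them up, or they can simply be called directly.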

In some situations, unit tests don’t pay off much. There is an inverse relationship between the size of a microservice and the complexity of its test suite: as the size of the service decreases, the complexity increases. In such a scenario, component testing can provide value. I will talk about this in detail in a later section.

It’s always advisable to keep the test suite small, focused, and high-value. But how do you do that?

In general, the intention of unit tests is to constrain the behavior of the unit under test. Unfortunately, tests also constrain the implementation. Therefore, I constantly question the value a unit test provides versus the cost of maintaining it.

At Simform, to improve the quality of testing, I try to avoid false positives by keeping unit tests narrowly scoped to individual microservices. Limiting the scope of tests also makes them run faster. With the dual benefits of focus and speed, unit tests are indispensable to microservices.

So, do unit tests provide a guarantee about the system? Not by themselves: they provide coverage of each module in the system in isolation.

But what about when modules work together? Perform more coarse-grained testing to verify that each module correctly interacts with its collaborators. It works every time for us!

Microservice Integration testing

Integration testing verifies the communication paths and interactions between components to detect interface defects. How is it done?

An integration test brings microservices together to verify that they collaborate as intended to achieve some larger piece of business logic. It also tests the communication paths through a subsystem to check for any incorrect assumptions each microservice has about how to interact with its peers.

Integration tests can be written at any granularity, but for microservices, the granularity is inferred from usage.

While writing an integration suite, it’s important to remember the end goal. I have come across many CTOs who confuse integration tests with acceptance tests of the external components. The only goal is to cover the basic success and error paths through the integration module.
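As a rough illustration of "basic success and error paths", here is a small sketch of an integration test for a persistence module. The `UserRepository` class is a hypothetical example, and I am assuming an in-memory SQLite database (Python's built-in `sqlite3` module) as a stand-in for the real data store.

```python
import sqlite3


class UserRepository:
    """Hypothetical integration module that talks to a real database engine."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, name TEXT)"
        )

    def add(self, email, name):
        try:
            with self.conn:  # commits on success, rolls back on error
                self.conn.execute(
                    "INSERT INTO users (email, name) VALUES (?, ?)", (email, name)
                )
            return True
        except sqlite3.IntegrityError:
            return False  # duplicate email rejected by the database

    def find(self, email):
        row = self.conn.execute(
            "SELECT name FROM users WHERE email = ?", (email,)
        ).fetchone()
        return row[0] if row else None


def test_success_path():
    repo = UserRepository(sqlite3.connect(":memory:"))
    assert repo.add("ada@example.com", "Ada") is True
    assert repo.find("ada@example.com") == "Ada"


def test_error_path():
    repo = UserRepository(sqlite3.connect(":memory:"))
    repo.add("ada@example.com", "Ada")
    # The second insert violates the primary key; the module must handle it.
    assert repo.add("ada@example.com", "Someone Else") is False
    assert repo.find("ada@example.com") == "Ada"
```

The tests deliberately stop at one success path and one error path. Anything more detailed about the data store's own behavior belongs to the data store's tests, not to this suite.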

According to Martin Fowler, an integration test “exercises communication paths through the subsystem to check for any incorrect assumptions each module has about how to interact with its peers.”

One of the most important aspects of service-to-service testing is tracing. In an integration test, each request may touch multiple services before it circles back to the user with a response. It therefore becomes imperative to have observability and monitoring of requests across services. Tools like Jaeger can help you with tracing.

Some CTOs are more inclined towards integration testing than unit testing, especially for user-facing features.
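The core idea behind tracing can be sketched in a few lines: every service reuses the trace ID it receives and passes it on, so all log entries for one request can be joined later. This is not Jaeger's API; the `X-Trace-Id` header name and the `handle` function are assumptions made purely for illustration.

```python
import uuid

TRACE_HEADER = "X-Trace-Id"  # hypothetical header name, chosen for this sketch


def handle(service_name, headers, log, downstream=None):
    """Record the request under its trace ID and forward the ID downstream."""
    # Reuse the caller's trace ID, or start a new trace at the edge.
    trace_id = headers.get(TRACE_HEADER) or uuid.uuid4().hex
    log.append((trace_id, service_name))
    if downstream is not None:
        downstream({TRACE_HEADER: trace_id})
    return trace_id


def trace_request():
    """Send one request through A -> B -> C and return (trace_id, log)."""
    log = []
    service_c = lambda headers: handle("service-c", headers, log)
    service_b = lambda headers: handle("service-b", headers, log, downstream=service_c)
    trace_id = handle("service-a", {}, log, downstream=service_b)
    return trace_id, log
```

In an integration test, asserting that every hop logged the same trace ID is a cheap way to confirm that no service in the chain drops the header.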

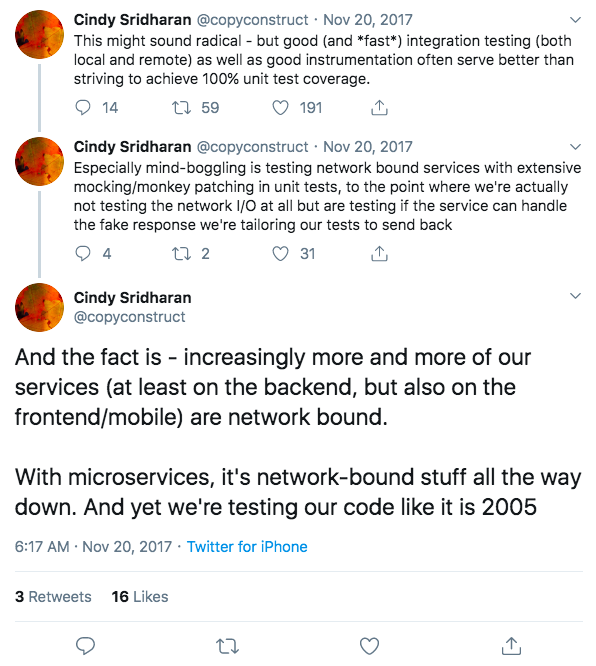

This is what Cindy Sridharan has to say about it.

These views are not contradictory, and they are not mutually exclusive. In some scenarios, when a service talks to a single data store, the unit under test must involve the attendant I/O. At other times, and this is the usual case, transactions are distributed, and it becomes a lot trickier to decide what the single unit under test should be.

Uber is one of the companies that has worked with a highly distributed system, and testing has always remained a challenge for them.

There is a lot of talk about architectural patterns such as Event Sourcing and Command Query Responsibility Segregation (CQRS), but the missing part is their testing strategy.

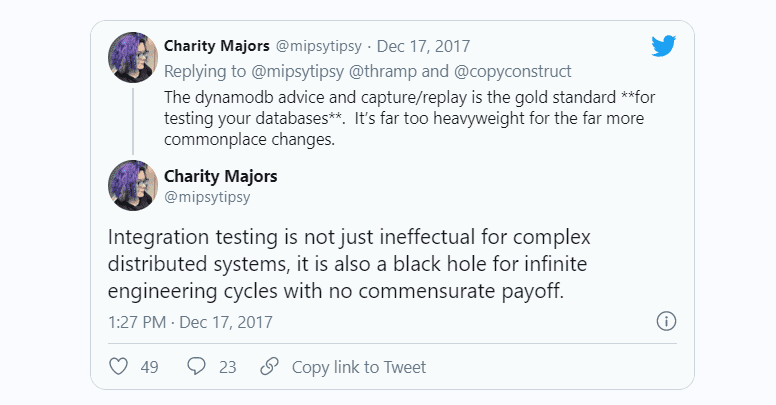

What I usually see in such cases is an overreliance on integration testing. This approach not only slows down the feedback cycle but also increases the complexity of the test suite.

In the words of Charity Majors, “Integration testing is not just ineffectual for a complex distributed system, it is also a black hole for infinite engineering cycles with no commensurate payoff.”

With all these constraints and pitfalls, “The Step Up Rule”, a term coined by Cindy, comes to the rescue. She advocates testing at one layer above what’s generally advocated. In this model, a unit test looks like an integration test, an integration test looks like testing against real production, and testing in production looks more like monitoring and exploration.

The diagram looks like this:

Component Testing in Microservices

A component, or microservice, is a well-defined, coherent, and independently replaceable part of a larger system. Once we have executed unit tests for all the functions within a microservice, it’s time to test the microservice itself in isolation.

Component tests should be implemented within each microservice’s code repository. A typical application has a number of microservices, so to test a single microservice in isolation, we need to mock the others. Isolating the microservice in this way using test doubles avoids any complex behavior the other services might otherwise introduce during execution.

By writing tests at the granularity of the microservice, the API behavior is driven through tests from the consumer’s perspective. At the same time, component tests exercise the interaction of the microservice with its database, all as one unit.
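Here is a minimal sketch of what such a component test could look like. `OrderService` and `StubPaymentClient` are hypothetical names, and I am assuming an in-memory SQLite database in place of the service's real one. The neighbouring payment service is replaced by a test double, while the service and its database are tested together as one unit.

```python
import sqlite3


class OrderService:
    """Hypothetical microservice: stores orders and asks a payment service to charge."""

    def __init__(self, conn, payment_client):
        self.conn = conn
        self.payment_client = payment_client
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, amount INTEGER, status TEXT)"
        )

    def place_order(self, amount):
        """Entry point a consumer would reach through the service API."""
        status = "PAID" if self.payment_client.charge(amount) else "REJECTED"
        with self.conn:
            cursor = self.conn.execute(
                "INSERT INTO orders (amount, status) VALUES (?, ?)", (amount, status)
            )
        return {"id": cursor.lastrowid, "status": status}

    def get_order(self, order_id):
        row = self.conn.execute(
            "SELECT id, amount, status FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return dict(zip(("id", "amount", "status"), row)) if row else None


class StubPaymentClient:
    """Test double standing in for the external payment microservice."""

    def __init__(self, approve):
        self.approve = approve
        self.charged = []

    def charge(self, amount):
        self.charged.append(amount)
        return self.approve


def test_order_is_paid_and_persisted():
    payments = StubPaymentClient(approve=True)
    service = OrderService(sqlite3.connect(":memory:"), payments)
    order = service.place_order(500)
    assert order["status"] == "PAID"
    assert service.get_order(order["id"])["amount"] == 500
    assert payments.charged == [500]


def test_rejected_payment_is_recorded():
    service = OrderService(sqlite3.connect(":memory:"), StubPaymentClient(approve=False))
    order = service.place_order(500)
    assert service.get_order(order["id"])["status"] == "REJECTED"
```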

However, component tests come with a challenge: do the interfaces between microservices behave the same way in the testing environment as they do in production?

We at Simform have suffered a lot from this problem, and you will too if your microservices are handled by disparate developers or teams. To overcome this challenge, we started implementing a schema-based interface that both microservices incorporate into their interface implementations. The schemas should be defined in a separate code repository, away from all the microservices, providing a single source of truth for cross-microservice interactions.
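A stripped-down sketch of the idea follows. The schema format, the `ORDER_CREATED_SCHEMA` name, and the two service functions are all assumptions for illustration; a real project would more likely use a dedicated schema language. What matters is that producer and consumer validate against the same shared definition.

```python
# Shared definition, imagined to live in its own repository.
ORDER_CREATED_SCHEMA = {"order_id": int, "amount_cents": int, "currency": str}


def validate(message, schema):
    """Return a list of human-readable schema violations (empty if valid)."""
    errors = []
    for field, expected_type in schema.items():
        if field not in message:
            errors.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            errors.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(message[field]).__name__}"
            )
    return errors


def build_order_created(order_id, amount_cents, currency):
    """Producer side: refuse to emit a message that violates the schema."""
    message = {"order_id": order_id, "amount_cents": amount_cents, "currency": currency}
    errors = validate(message, ORDER_CREATED_SCHEMA)
    if errors:
        raise ValueError("; ".join(errors))
    return message


def handle_order_created(message):
    """Consumer side: reject malformed input using the same schema."""
    errors = validate(message, ORDER_CREATED_SCHEMA)
    if errors:
        raise ValueError("; ".join(errors))
    return f"{message['amount_cents'] / 100:.2f} {message['currency']}"
```

With this in place, the test doubles used in each service's component tests can be checked against the same schema, which narrows the gap between the test environment and production.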

The major benefit of component testing is that it lets you test microservices in isolation, which is easier, faster, more reliable, and cheaper. The major drawback is that microservices may pass their tests while the application still fails in production. It also leaves us with a big question: how do we make sure that our test doubles always replicate the behavior of the services they stand in for?

Contract Testing in Microservices

When a consumer couples to the interface of a component to make use of its behavior, a contract is formed between them.

If it’s a contract, there must be some exchange involved. What do the consumer and the component exchange? Input and output data structures, side effects, and performance and concurrency characteristics.

In this case, the component is a microservice, so the interface is the API that the service exposes to its consumers.

One of the basic properties of a microservice architecture is that a microservice represents a unit of encapsulation. There is a contract, through the API, between each service, and contract testing holds across the different languages, stacks, and patterns used by each service.

As a tester, you write an independent test suite that verifies only those aspects of the producing service that are in use.

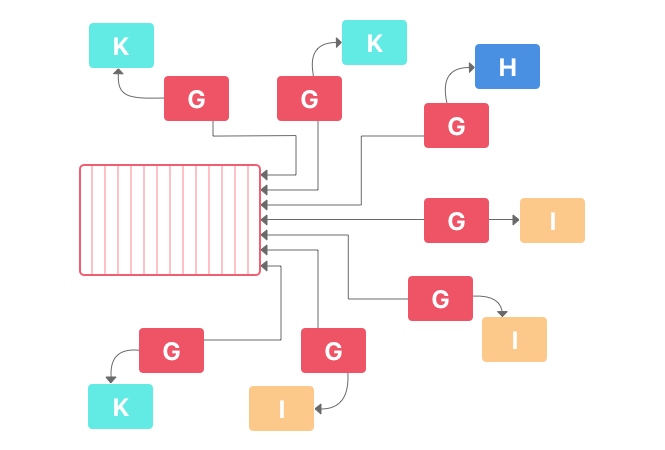

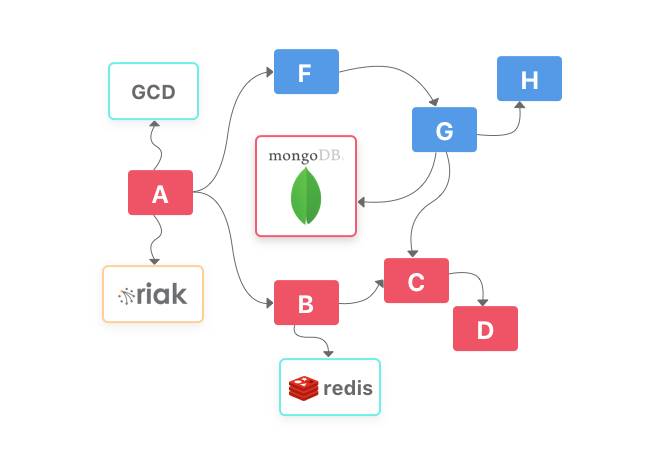

Consider this topology as an example of a microservice architecture.

Here, service A talks to service B, and service B in turn talks to Redis and service C.

In a contract test, we only test the smaller unit, that is, service A’s interaction with service B.

We do this by creating a fake for service B and testing A’s interaction with the fake.

Service A also talks to Riak. In that case, the smallest unit to be tested is the communication between service A and Riak.

SoundCloud has more than 300 services and uses contract tests for them.

According to Maarten Groeneweg, contract testing consists of three steps.

- “As a consumer of an API, you write a “contract”. This contract states what you expect from the provider (of an API). Based on this contract generates a mock of the provider.

- As a consumer, you can test your own application against the mock of the provider.

- The contract can be sent over to the provider who can validate if the actual implementation matches the expectations in the contract.”
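The three steps can be sketched in plain Python as follows. This is not the API of any contract testing tool; the contract format, `make_mock_provider`, `verify_provider`, and both services are hypothetical and only meant to show the flow.

```python
# Step 1: the consumer writes down what it expects from the provider.
CONTRACT = {
    "request": {"method": "GET", "path": "/users/42"},
    "response": {"status": 200, "body": {"id": 42, "name": "Ada"}},
}


def make_mock_provider(contract):
    """Generate a mock provider that answers exactly as the contract states."""
    def mock(method, path):
        request = contract["request"]
        if (method, path) == (request["method"], request["path"]):
            response = contract["response"]
            return response["status"], dict(response["body"])
        return 404, {}
    return mock


# Step 2: the consumer tests its own code against the mock.
def fetch_user_name(provider, user_id):
    status, body = provider("GET", f"/users/{user_id}")
    return body["name"] if status == 200 else None


# Step 3: the provider replays the contract against its real implementation.
def real_provider(method, path):
    users = {42: {"id": 42, "name": "Ada", "email": "ada@example.com"}}
    user_id = path.rsplit("/", 1)[-1]
    if method == "GET" and path.startswith("/users/") and user_id.isdigit():
        user = users.get(int(user_id))
        if user is not None:
            return 200, user
    return 404, {}


def verify_provider(provider, contract):
    """Check only the fields the consumer asked for; extra fields are allowed."""
    request, expected = contract["request"], contract["response"]
    status, body = provider(request["method"], request["path"])
    if status != expected["status"]:
        return False
    return all(body.get(key) == value for key, value in expected["body"].items())
```

Note that `real_provider` returns an extra `email` field and still satisfies the contract, because the verification only looks at what the consumer actually uses.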

He says that contract testing fits well into a microservices workflow, and that’s why it’s awesome.

Groeneweg also talks about some anti-patterns in contract testing.

These are:

- Contract testing is meant to fit the consumer’s wishes, but sometimes it moves from consumer-driven to consumer-dictated. More on this below.

- Writing a contract test before having a good face-to-face discussion is a bad idea. This also applies to making a feature request before writing a contract test.

- Typical scenarios such as zero results or failed authentication are easily overlooked. Avoid this through proper coverage.

- Only create contract tests for what you need. This gives the provider more freedom to change and optimize their service.

Suppose a consumer demands a specific combination of fields in a response. For this to work, the provider team has to query across databases, which makes it hard to implement. Even if they implement it, the solution will be very slow and everyone will be frustrated. This is what is called consumer-dictated contract testing.

The best solution is to cooperate on the contract and find the solution that works best for everyone. I also recommend looking into dedicated resources on contract testing to learn more.

End-to-End Testing in Microservices

We usually treat the system as a black box while performing end-to-end tests. The intention differs from other tests: an end-to-end test verifies that the system as a whole meets business goals, irrespective of the component architecture in use.

That’s why I try, as much as possible, to keep the test boundary at the fully deployed system, manipulating it through public interfaces such as GUIs and service APIs.

How do end-to-end tests provide value in a microservice architecture? As a microservice architecture includes more moving parts for the same behavior, end-to-end tests provide coverage of the gaps between the services. In addition, you can test the correctness of message passing between the services.

At Simform, we have been creating microservices for 10 years now, and what we have discovered is that although testing them is hard, end-to-end testing is even harder.

I worked on a project which had seven microservices and had to go through a heavy workaround to test each one of them.

It involved:

- Ensuring I was on the correct code branch

- Pulling down the latest code from that branch

- Ensuring dependencies were up to date

- Running new database migrations

- And finally, starting the service

And whenever I forgot to perform any of these steps, it would take 20 minutes to debug the issue.
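One way to stop losing those 20 minutes is to script the checklist so that it fails fast and names the step that went wrong. Below is a minimal sketch; `run_preflight` is a hypothetical helper, and each step is just a callable that returns `True` on success. In practice, I would expect each callable to wrap a command-line call, which I have left out because the exact commands depend on the project.

```python
def run_preflight(steps):
    """Run named steps in order and stop at the first one that fails.

    `steps` is a list of (name, action) pairs, where action() returns True
    on success. Returns (completed_step_names, error_message_or_None).
    """
    completed = []
    for name, action in steps:
        try:
            succeeded = action()
        except Exception as exc:  # report the failing step instead of crashing
            return completed, f"{name}: {exc}"
        if not succeeded:
            return completed, f"{name}: step reported failure"
        completed.append(name)
    return completed, None


def example_run():
    """Illustrative run with fake steps; the migration step fails on purpose."""
    steps = [
        ("check branch", lambda: True),
        ("pull latest code", lambda: True),
        ("update dependencies", lambda: True),
        ("run migrations", lambda: False),
        ("start service", lambda: True),
    ]
    return run_preflight(steps)
```

The returned error message points straight at the skipped or broken step, which is exactly the information I was missing when debugging by hand.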

If not maintained, these factors can result in “flakiness, excessive test runtime and additional cost of maintenance of the test suite.”

What’s the solution then?

These are the go-to guidelines I follow before writing an end-to-end test suite:

- Write as few end-to-end tests as possible.

- Focus on personas and user journeys.

- Choose your ends wisely.

- Rely on infrastructure as code for repeatability.

- Make tests data-independent.

Microservice Performance Testing

Performance testing is more complex than any other type of microservices testing, because of the high number of moving parts and supporting services or resources.

I consider a microservice application to be a dynamic environment in which change occurs constantly, and I have seen many live examples of this throughout my career.

At Simform, back in 2012, while testing a microservice architecture for a TransTMS application, I experienced the most unusual behavior. The application was two weeks into production, and load testing results varied by a huge margin on every attempt.

After a thorough analysis, I found that a caching layer was present in the application topology. At an early stage, not much data had been cached yet, so requests fell through to the much slower database and ran slowly. Later, once the data was cached, the same requests became fast.
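The effect is easy to reproduce in miniature. The sketch below uses a hypothetical `CachedStore` class and counts trips to the "database" instead of measuring time, so the result is deterministic. The same set of requests is replayed twice, against a cold cache and then a warm one.

```python
class CachedStore:
    """Read-through cache in front of a slow lookup (hypothetical example)."""

    def __init__(self, db_lookup):
        self.db_lookup = db_lookup
        self.cache = {}
        self.db_calls = 0

    def get(self, key):
        if key not in self.cache:
            # Cache miss: fall through to the (slow) database.
            self.db_calls += 1
            self.cache[key] = self.db_lookup(key)
        return self.cache[key]


def replay(store, keys):
    """Replay a batch of requests and return how many reached the database."""
    before = store.db_calls
    for key in keys:
        store.get(key)
    return store.db_calls - before


def cold_versus_warm():
    store = CachedStore(db_lookup=lambda key: key * 2)
    keys = [i % 50 for i in range(200)]  # 200 requests over 50 distinct keys
    cold = replay(store, keys)   # first run: every distinct key hits the database
    warm = replay(store, keys)   # second run: everything is served from cache
    return cold, warm
```

Two identical load test runs therefore exercise very different code paths, which is why it helps to decide up front whether a run is meant to measure cold or warm behavior.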

At that stage, we were saved by the load test, because it exposed parts of the application that were not designed to scale.

Therefore, I always recommend performance load testing in addition to functional testing.

Now for the problem part!

Configuring a load test is NOT easy.

In reality, your application may scale to millions of users. But how will you simulate this reality perfectly? Even trying can be hugely expensive and time-consuming, and the load tests may still not give the desired results.

What’s the solution then?

The Pareto Principle. The Pareto Principle, or the 80/20 rule, states that 80% of the effects derive from 20% of the causes.

In simple terms: don’t try to simulate reality perfectly, and your configuration will be a lot simpler.
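One possible way to apply this, and it is only my reading of the rule, is to load test the few endpoints that carry most of the traffic first. The sketch below uses made-up traffic numbers and a hypothetical helper `endpoints_covering` that picks the busiest endpoints until they account for at least 80% of all calls.

```python
# Made-up call counts per endpoint, for illustration only.
TRAFFIC = {
    "/login": 500,
    "/transfer": 300,
    "/balance": 100,
    "/profile": 50,
    "/settings": 30,
    "/help": 20,
}


def endpoints_covering(traffic, share_percent=80):
    """Return the busiest endpoints that together reach the given traffic share."""
    total = sum(traffic.values())
    selected, covered = [], 0
    busiest_first = sorted(traffic.items(), key=lambda item: item[1], reverse=True)
    for endpoint, calls in busiest_first:
        # Integer arithmetic avoids floating-point surprises at the boundary.
        if covered * 100 >= share_percent * total:
            break
        selected.append(endpoint)
        covered += calls
    return selected
```

With the sample numbers, two of the six endpoints (`/login` and `/transfer`) already account for 800 of the 1,000 calls, so they would be the first candidates for a load test.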

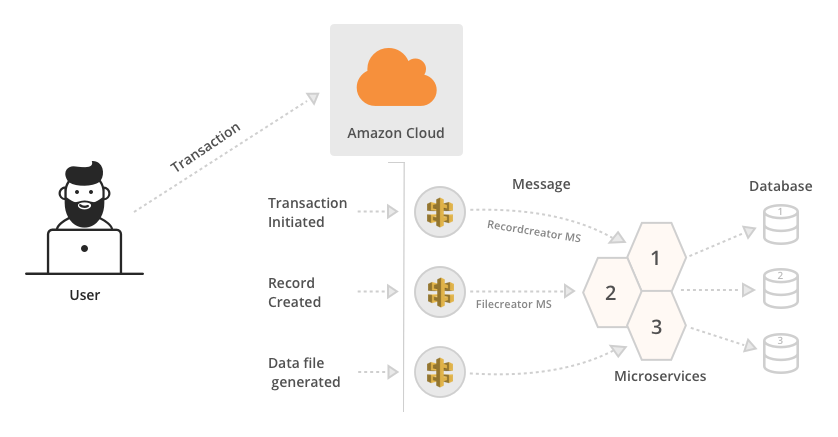

I will show you how we load tested the RECORD microservice in one of our recent fintech application projects.

A brief flow of the money transfer operation:

- The user logs into a web application and performs a transaction.

- A message is initiated and sent to the TRANSACTION microservice.

- The RECORD microservice, listening to the queue, analyzes the message.

- A transaction is created and a message is sent to the RECORD microservice.

- The FILE microservice picks up the message and generates a data file.

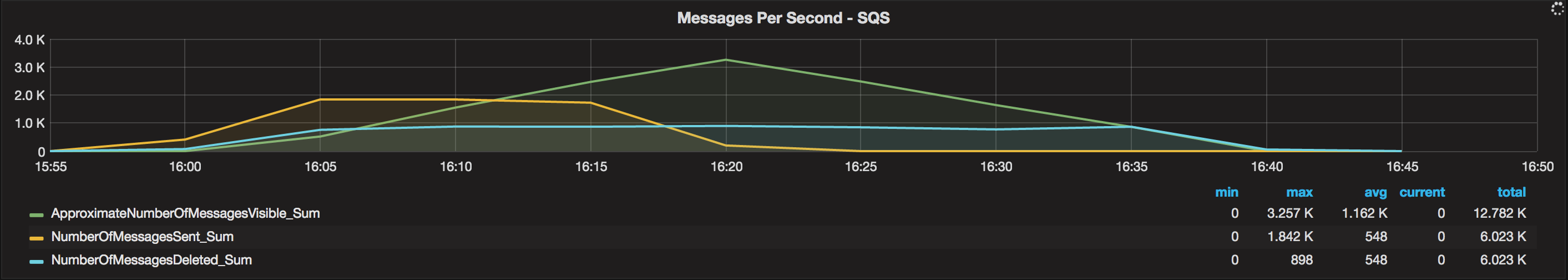

To load test functional behavior and measure performance, we used JMeter. To visualize the final test data, we used Grafana, an open-source metrics analytics and visualization tool. We also employed AWS cloud resources and used Amazon CloudWatch to monitor them.

We sent numerous messages and monitored them through Amazon CloudWatch.

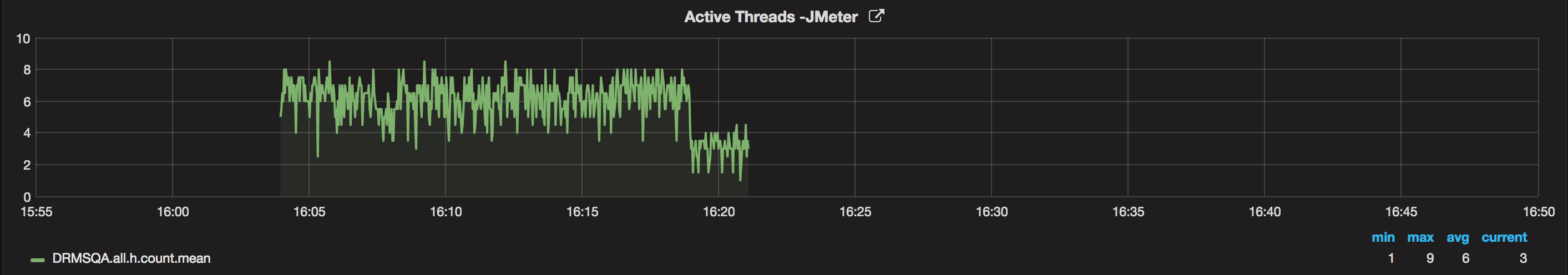

Now, this is the final Grafana panel load test result we got.

We have learned a few lessons on our journey so far.

- When testing an API-driven application, say a mobile app, do not worry about the user flow; instead, exercise the API endpoints.

- Remember the 80/20 rule! Take 1,000 concurrent users as a reference point. If you know how many calls per second an API endpoint receives at 1,000 concurrent users, run a load test that generates that number of requests per second and verify that your backend can handle it.

- Repeat the same for each endpoint.

- To keep the load test simple, or should I say absolutely simple, hammer a single API endpoint at a time. This is easy to configure.
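The arithmetic and the "hammer one endpoint" idea can be sketched as follows. This is not how JMeter works internally; `target_rps` and `hammer` are hypothetical helpers, the per-user call rate is an assumed input you would take from your own analytics, and the endpoint is any Python callable standing in for a real API call.

```python
from concurrent.futures import ThreadPoolExecutor


def target_rps(concurrent_users, calls_per_user_per_minute):
    """Requests per second the endpoint receives at the given user count."""
    return concurrent_users * calls_per_user_per_minute / 60


def hammer(endpoint, total_requests, workers=8):
    """Call a single endpoint repeatedly from a thread pool and count outcomes.

    This sketch does not pace requests to a fixed rate; it only shows the
    shape of a single-endpoint load run.
    """
    def call(request_number):
        try:
            endpoint(request_number)
            return True
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=workers) as pool:
        outcomes = list(pool.map(call, range(total_requests)))
    succeeded = sum(outcomes)
    return {"ok": succeeded, "failed": total_requests - succeeded}


def flaky_endpoint(request_number):
    """Stand-in endpoint that fails on every tenth request."""
    if request_number % 10 == 0:
        raise RuntimeError("simulated backend error")
```

For example, if each of 1,000 concurrent users makes 6 calls per minute to an endpoint, `target_rps(1000, 6)` gives 100 requests per second as the rate to generate for that endpoint.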

Microservices Testing Strategies

Microservices testing strategies have their own advantages and disadvantages, and the right choice depends on the objectives and needs of an enterprise. To integrate them successfully, you can tweak them as per your needs. But before that, you need to know them better:

1. Stubbed service strategy

The stubbed service strategy entails developing lightweight, emulated versions of certain microservices for testing purposes. This dummy version functions identically to the production microservice but without any dependencies.

2. Full stack in-a-box strategy

The full stack in-a-box strategy separates your local machine from the test environment. In this approach, you replicate a cloud-based environment on your local system, allowing you to test all components within a virtual environment comprehensively. This enables more authentic system testing, albeit with a longer setup duration.

3. Shared test instance strategy

The shared test instance strategy allows developers to work on their local devices while still accessing a separate, shared microservice instance as a point of reference for their local environment. As a result, testing becomes more efficient and precise.

4. Documentation-first strategy

This strategy includes creating and evaluating your API documentation and test cases for each microservice before testing. This can aid in verifying that the proposed changes meet the criteria and comply with the API standards, ensuring that nothing is damaged.
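Coming back to the first of these, the stubbed service strategy, here is a minimal sketch of what such a stub can look like using only Python's standard library. The `/accounts/1` route, the canned payload, and the `get_balance` client are hypothetical; the stub listens on a free local port and returns canned JSON.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Canned responses the stub serves instead of the real account service.
CANNED = {"/accounts/1": {"id": 1, "balance": 100}}


class StubHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = CANNED.get(self.path)
        status = 200 if body is not None else 404
        payload = json.dumps(body if body is not None else {"error": "not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):
        pass  # keep test output quiet


def start_stub():
    """Start the stub on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), StubHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_balance(host, port, account_id):
    """Client code under test: asks the account service for a balance."""
    connection = http.client.HTTPConnection(host, port, timeout=5)
    try:
        connection.request("GET", f"/accounts/{account_id}")
        response = connection.getresponse()
        data = json.loads(response.read())
        return data["balance"] if response.status == 200 else None
    finally:
        connection.close()
```

A test would call `start_stub()`, point the client at `server.server_address`, and call `server.shutdown()` when done. The stub answers like the production service for the routes it knows, without needing any of that service's dependencies.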

Microservices Testing Best Practices

The successful implementation of microservices testing strategies requires an architectural shift and a strategic approach to development. Below, we’ll explore some recommended approaches for effectively testing microservices:

1. Implement automated testing

Incorporate automated testing within your microservices architecture to save valuable time and ensure the consistency and reliability of your tests. Use the continuous integration and delivery (CI/CD) workflow to automate the testing procedures.

2. Conduct individual testing for microservices

Test each service independently to validate its individual behavior. This involves executing unit tests on every component.

3. Integrate diverse test types

Recognize that different types of tests serve distinct purposes and no single test can provide an entirely dependable output. Consequently, consider amalgamating unit, integration, and end-to-end tests to understand the system comprehensively.

Microservices Testing Example: How Spotify Tests Their Microservices

Spotify prefers integration tests over other tests. These verify the correctness of their services in a more isolated fashion while focusing on the interaction points and making them very explicit. They spin up the database, populate it, start the service, and query the actual API in the test. Many services don’t even have unit tests, which they claim are not required at all. They save unit tests for parts of the code that are naturally isolated and have internal complexity of their own. As a result, their test pyramid differs from the traditional one.

This strategy helps them achieve three goals they aim for:

- Confidence, that the code does what it should.

- Feedback that is fast and reliable. They fall a bit short on this, but it’s fast enough.

- Easy maintenance. Since they perform edge testing they can do maintenance really fast.

Microservices Testing Tools

- Cypress is a powerful testing tool with features like real-time reloading, debugging, and snapshotting. Its unique testing architecture runs tests in the web app’s environment, making it easy to replicate production conditions, especially beneficial for microservices.

- Jest is an open-source JavaScript testing tool originally developed at Facebook, known for simplicity and user-friendliness. It also handles microservices integration testing. Jest offers features like snapshot testing, code coverage reporting, and parallel execution support.

- InfluxDB is an open-source time-series database, originally written in Go, that is commonly used to store and query monitoring and load-test metrics. It integrates with Grafana and provides both free and usage-based plans.

- Jaeger is an open-source and end-to-end distributed tracing tool that monitors microservices, identifies performance areas, and offers a user-friendly UI for visualizing distributed tracing data.

- Hoverfly is a lightweight, open-source API simulation tool for integration testing. It replaces unreliable systems with API sandboxes and simulates microservice communication to validate expected behavior. It can be driven with Java or Python knowledge and also scales to performance testing.

- Amazon CloudWatch serves as a monitoring solution, tracking resource utilization for applications or microservices hosted on Amazon Web Services, making it valuable for conducting load tests on microservices.

- Mocha is a flexible, easy-to-use JavaScript testing framework, ideal for unit and integration testing. Its simple syntax and support for various assertion libraries make it great for both beginners and experienced developers.

Conclusion

My goal was not to make an argument for one form of testing over others but to showcase the lessons our team has learnt while implementing microservices testing strategies. Some things may not resonate with you, because every scenario differs.

In a nutshell, contract testing is one you need to incorporate in your comprehensive release process. Beyond that, combine it with other tests such as automated unit testing, integration testing, and load testing, along with manual testing. This will give you overall quality assurance in the process.

Today, most of the big players such as Netflix, Amazon, and Uber have moved towards microservices, which makes microservice testing all the more imperative. If you have any suggestions or questions, or if you are struggling to build a successful microservices testing pipeline, we can surely help you out!