Unify your data landscape for better business outcomes

We design and implement custom data integration solutions that unify data from disparate systems, streamline data flow, and handle diverse data types, enhancing your ability to analyze and act on data effectively.

Simform’s capabilities

ETL & ELT solutions

Seamlessly move and transform data across systems to ensure data readiness for complex analytics, reporting, and faster decision-making.

We focus on optimizing data pipelines for speed and accuracy with automated data extraction, robust transformation logic, and efficient loading processes using tools like AWS Glue, Azure Data Factory, and Talend.

Data ingestion to data stores

Build robust pipelines to ingest and centralize data from multiple sources into warehouses or lakes, enabling smooth processing and analysis of large datasets.

We implement data pipelines using tools like Azure Data Explorer, Apache Kafka, AWS Glue, and Google Cloud Dataflow to ensure continuous, reliable data ingestion. We also set up real-time connectors to optimize data flow for speed and accuracy, seamlessly integrating data into your data stores.

Custom integration solutions

Eliminate data silos and enable smooth, cross-platform communication with custom-built integrations that connect legacy systems, ERPs, CRMs, and modern applications.

We develop solutions tailored to your specific needs: custom API connectors, data integration across cloud environments (AWS, Azure, Google Cloud) and on-premises systems, or a dedicated platform that supports all your integration needs.

Advanced integrations with AI/ML

Streamline data preparation for ML models with automated data integration, preparation, and feature engineering, optimizing your ML workflows for faster, more accurate results.

We implement MLOps for data pipelines with automated cleaning, dataset management, and versioning. Using tools like Azure Data Factory, Amazon SageMaker, Kubeflow, and Apache Airflow, we create scalable pipelines that prepare high-quality datasets for continuous ML model training and deployment.

Faster data ROI with business-driven engineering

At Simform, we specialize in transforming complex data environments into streamlined, cohesive systems that empower your organization to thrive. Here’s why we’re the ideal partner to elevate your data integration strategy:

Azure Data & AI Solution Partner

As a recognized Azure Data & AI Solution Partner, we draw on our extensive knowledge of Microsoft’s cloud technologies to deliver seamless data integration, transformation, and analysis.

Databricks partner

Our partnership with Databricks helps us deliver powerful data pipelines and advanced analytics that accelerate innovation and enable you to harness ML for impactful insights.

Next-gen data engineering

Our expertise comes from working with tech and product companies that manage massive datasets. We specialize in high-velocity data solutions that go beyond traditional enterprise platforms.

Integration & streaming data experience

Our rich background in application development allows us to handle complex, real-time data flows for applications that require constant, live updates.

AI/ML readiness

We ensure your data is primed for AI and ML initiatives through automated preparation, cleaning, and feature engineering, supported by our comprehensive MLOps toolkit for efficient model training and deployment.

Expertise in FOSS data toolchain

We use cost-efficient, open-source technologies such as Airbyte, DataHub, Dagster, Apache Airflow, ClickHouse, Metabase, and Apache Superset to deliver powerful, scalable, and flexible architectures without breaking your budget.

Case Studies

Discover the many ways in which our clients have embraced the benefits of the Simform way of engineering.

Let’s talk

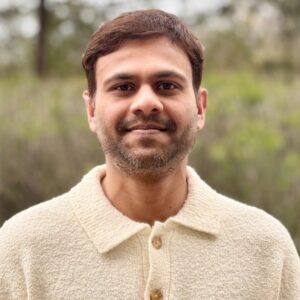

Hiren Dhaduk

Creating a tech product roadmap and building scalable apps for your organization.