
SolvPath: Scalable Gen AI-Powered Platform for E-Commerce Query Resolution

Category: E-commerce

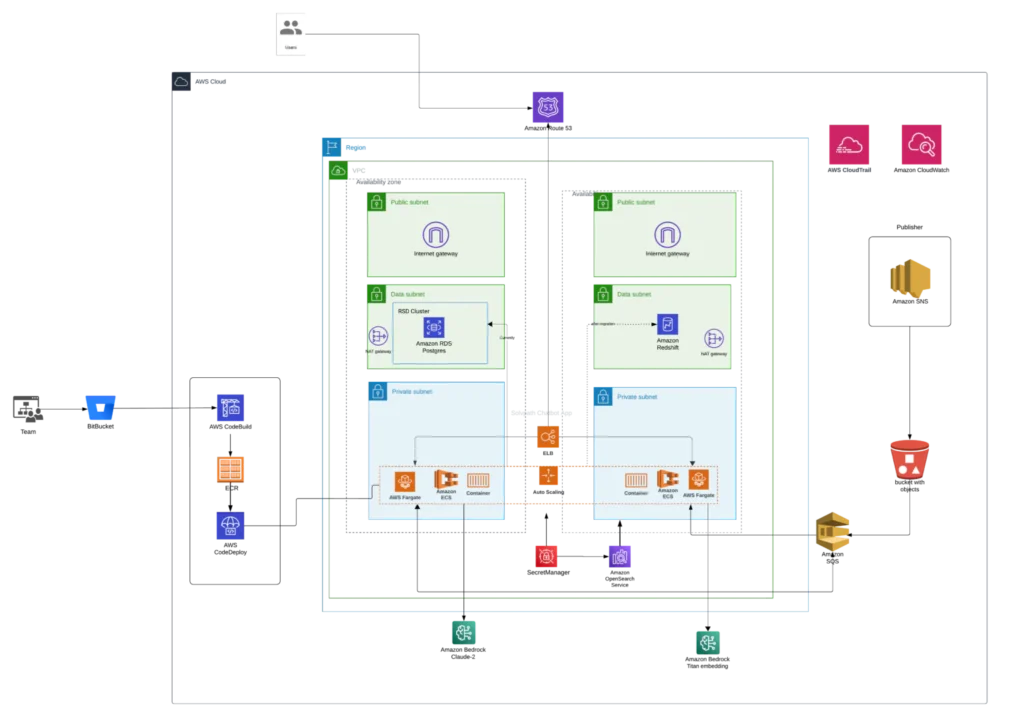

Services: Gen AI Development, Cloud Architecture Design and Review, Managed Engineering Teams

accuracy and contextually relevant responses to user queries using RAG

SolvPath is an AI-driven customer support interface that helps E-commerce platforms answer customer queries accurately and in real time. It uses an LLM for natural language understanding, so each response stays relevant to the context of the query and improves the customer experience.

Hiren Dhaduk

Creating a tech product roadmap and building scalable apps for your organization.