Whether you’re running a busy marketplace, a growing social network, or a quirky dating app, you’re dealing with a flood of user-generated content. And it’s not just about quantity—threats are getting smarter by the day in this AI-first era.

For example, spammers now have sophisticated tactics to hide affiliate links in seemingly honest product reviews. Misinformation spreads through innocent-looking memes faster than ever. Some users even coordinate campaigns that barely stay within your current policy lines.

To build truly intelligent moderation engines that can combat these issues, you need to implement advanced moderation solutions using AI and machine learning. And the good news is AWS offers powerful services that make it less complex and resource-intensive for businesses to create scalable and efficient content moderation systems.

In this blog post, we will explore how an AI-powered content moderation engine works and how you can build one for your platform using AWS’s robust AI tech stack.

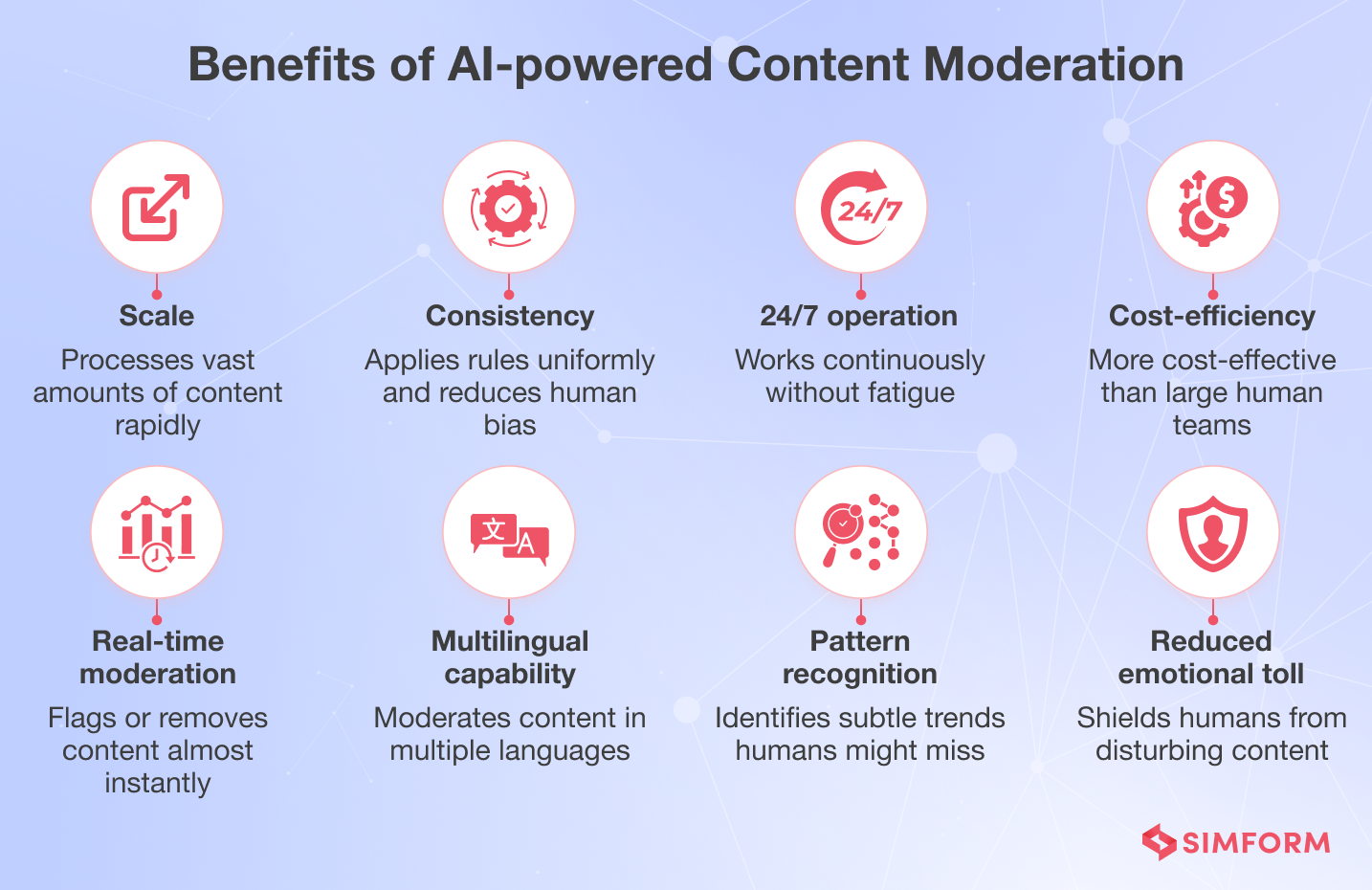

Why use AI for building content moderation engines?

AI-powered moderation engines are a powerful solution to handle the increasing volume and complexity of online content at scale. These systems can rapidly process vast amounts of data, adapt to emerging patterns of harmful content, and work alongside human moderators to create a more efficient and consistent approach to maintaining a healthy online environment.

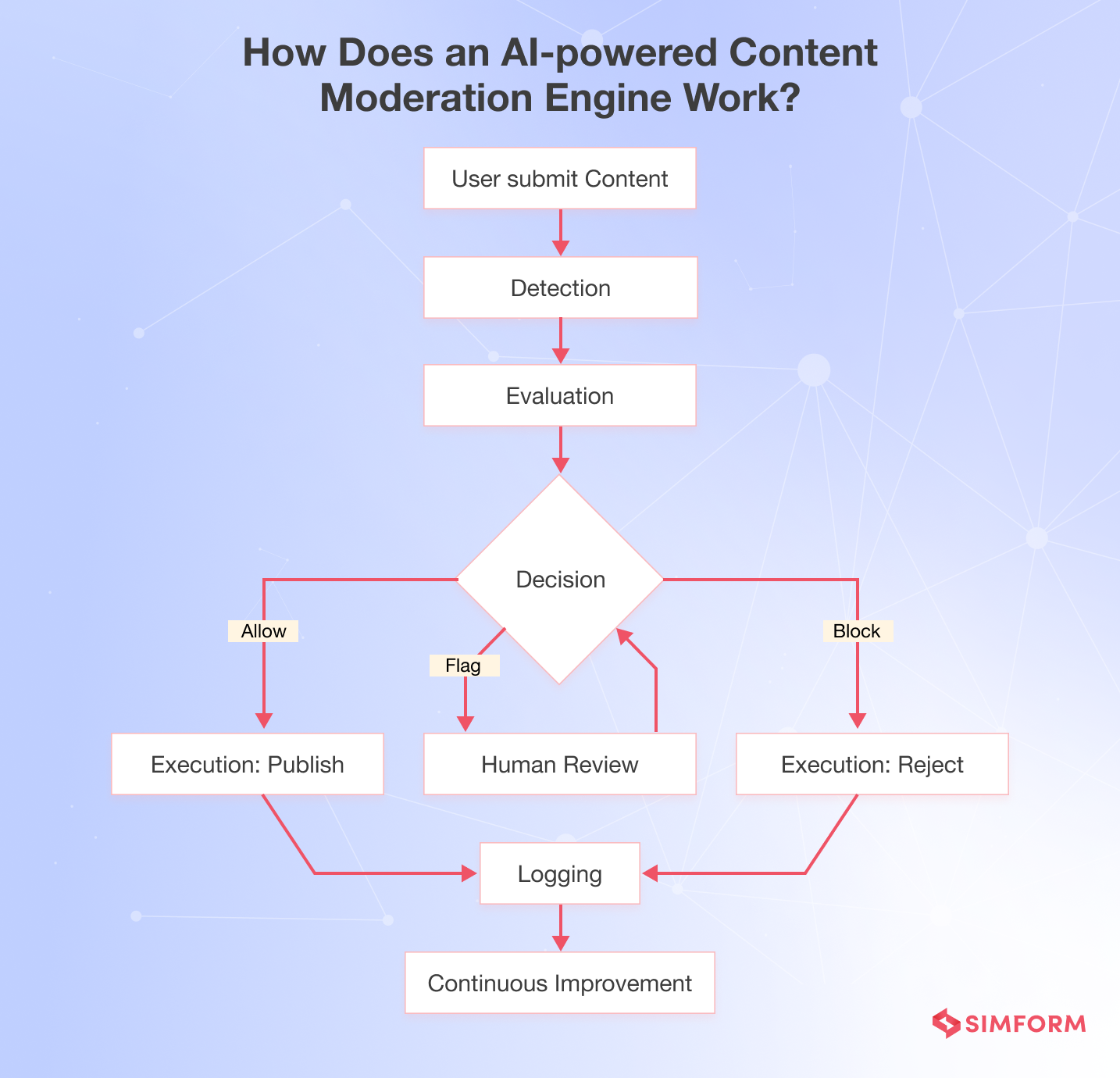

How does an AI-powered content moderation engine work?

An AI-powered content moderation engine processes user-generated content on a platform through a structured workflow to ensure adherence to community guidelines before publishing. Here’s a breakdown of how this process works:

- Detection

The engine scans the submitted content, a product review for example, using natural language processing (NLP) and machine learning algorithms to identify potentially problematic content. This includes detecting offensive language, hate speech, spam, or other rule violations.

- Evaluation

Detected issues are then evaluated, combining automated analysis and, in some cases, human review. The evaluation considers context, severity, and intent to decide whether the content breaches guidelines.

- Decision

Based on the evaluation, the AI engine determines the appropriate action. It could allow the review to be published, flag it for further review, or block it if it violates the rules.

- Execution

The decided action is implemented in real time. If the review passes the checks, it’s published on the platform. If not, the system may reject it or send it for human moderation.

- Logging

Every step is logged, documenting the actions taken for each review. This record-keeping is important for transparency, accountability, and continuous improvement of the moderation system.
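
The five stages above can be sketched in a few lines of Python. This is a minimal illustration only: the regex patterns stand in for real NLP models, and the names `BLOCKED_PATTERNS`, `moderate`, and `audit_log` are hypothetical, not part of any AWS service.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns; a real engine would use trained NLP models instead.
BLOCKED_PATTERNS = [
    re.compile(r"\bbuy now\b", re.IGNORECASE),
    re.compile(r"https?://\S+", re.IGNORECASE),
]

@dataclass
class ModerationResult:
    content: str
    flags: list = field(default_factory=list)
    action: str = "publish"

audit_log = []  # Logging: in-memory stand-in for a durable log store

def detect(content):
    """Detection: return the patterns that matched the content."""
    return [p.pattern for p in BLOCKED_PATTERNS if p.search(content)]

def evaluate(flags):
    """Evaluation: a toy severity score, here just the number of matches."""
    return len(flags)

def decide(severity):
    """Decision: map severity to an action."""
    if severity == 0:
        return "publish"
    if severity == 1:
        return "human_review"
    return "block"

def moderate(content):
    """Run detection, evaluation, and decision, then log the outcome."""
    flags = detect(content)
    action = decide(evaluate(flags))
    # Execution: the caller publishes, queues, or rejects based on `action`.
    audit_log.append({"content": content, "flags": flags, "action": action})
    return ModerationResult(content, flags, action)
```

Calling `moderate("Great product, works well")` returns a result whose `action` is `"publish"`, and each call appends one entry to `audit_log`.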

This moderation process relies on various technologies working in concert—from machine learning models to data processing pipelines. Cloud providers like AWS make it easy to piece these components together with their suite of services. Let’s walk through the essential steps for building your own AI-powered content moderation engine using various AWS AI services.

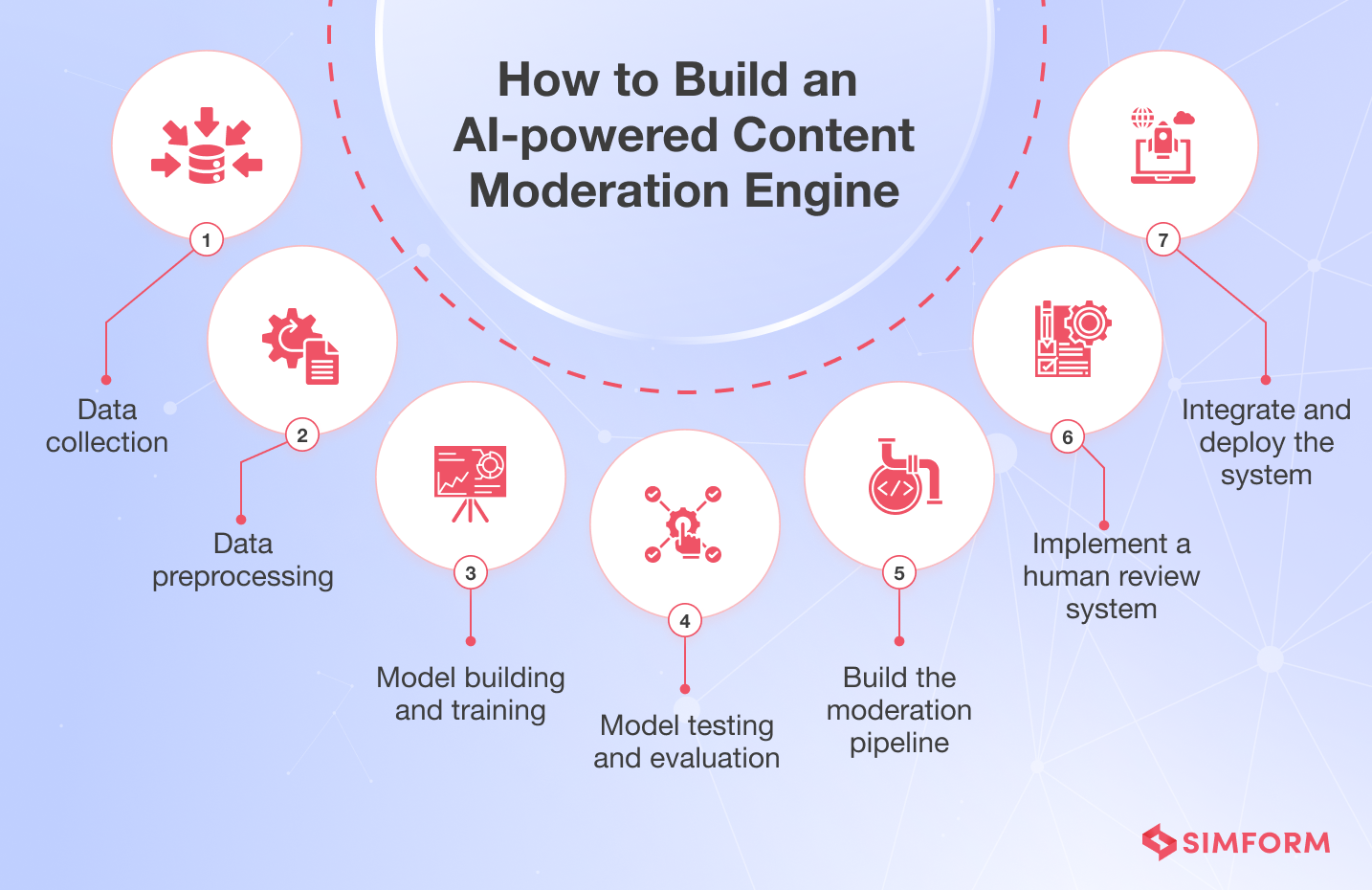

How to build an AI-powered content moderation engine using AWS

Step 1: Data collection

For an AI content moderation engine focused on maintaining trust and safety, collect user-generated content (UGC) that aligns with your platform’s specific moderation needs.

This includes text posts, comments, images, and videos that may contain policy violations such as hate speech, explicit content, harassment, or misinformation. Gathering guideline-violating and acceptable content from your platform is important to ensure balanced training and reduce false positives.

Once collected, you can securely store this UGC data in Amazon S3. Organize your storage by creating separate buckets for different content types (text, images, videos) and implement appropriate access controls. This structured approach in S3 will streamline subsequent data processing and model training stages.
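
As a rough sketch of that layout, the helper below maps each item to a bucket and object key. The bucket names and the label/date key scheme are assumptions chosen for illustration; adapt them to your own naming and access-control conventions.

```python
from datetime import date

# Hypothetical bucket names, one per content type as described above.
BUCKETS = {
    "text": "ugc-moderation-text",
    "image": "ugc-moderation-images",
    "video": "ugc-moderation-videos",
}

def storage_location(content_type, content_id, label, created):
    """Return (bucket, key) for one piece of user-generated content."""
    if content_type not in BUCKETS:
        raise ValueError(f"unsupported content type: {content_type}")
    # Assumed key scheme: label, then date, then the content identifier.
    key = f"{label}/{created:%Y/%m/%d}/{content_id}"
    return BUCKETS[content_type], key

bucket, key = storage_location("image", "img-001.png", "acceptable", date(2024, 5, 17))
# With boto3 installed and credentials configured, the upload could then be:
#   boto3.client("s3").upload_file("img-001.png", bucket, key)
```

Grouping keys by label and date keeps violating and acceptable examples easy to list separately when you assemble training sets later.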

Step 2: Data preprocessing

Cleaning is the first step in preprocessing the collected data. Data cleaning involves removing noise, correcting errors, and standardizing formats for consistency across the dataset. Since the data is in various formats, different preprocessing techniques are required for each.

Text preprocessing techniques

| Technique name | Meaning | Example |
| --- | --- | --- |
| Tokenization | Splitting text into individual words or tokens | “This is great!” → [“This”, “is”, “great”, “!”] |
| Lowercasing | Converting all text to lowercase | “Hello World” → “hello world” |
| Stopword removal | Removing common words that add little meaning | “This is a test” → [“test”] |
| Lemmatization | Reducing words to their base or root form | “running” → “run” |
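
Here is a minimal standard-library sketch of the first three techniques. The stopword list is a small illustrative sample, and lemmatization is left out because it usually relies on a library such as NLTK or spaCy.

```python
import re

# Small illustrative stopword list; NLP libraries ship fuller ones.
STOPWORDS = {"a", "an", "the", "is", "are", "this", "that", "of", "to", "and"}

def tokenize(text):
    """Split text into word tokens and standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def preprocess(text):
    """Tokenize, lowercase, then drop stopwords and punctuation."""
    tokens = [t.lower() for t in tokenize(text)]
    return [t for t in tokens if t.isalnum() and t not in STOPWORDS]

print(tokenize("This is great!"))    # ['This', 'is', 'great', '!']
print(preprocess("This is a test"))  # ['test']
```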

Image preprocessing techniques

| Technique name | Meaning | Example |
| --- | --- | --- |
| Resizing | Adjusting image dimensions to a standard size | 1920×1080 → 256×256 |
| Normalization | Scaling pixel values to a specific range | Pixel values from [0, 255] to [0, 1] |
| Augmentation | Creating variations of images to enhance training | Flipping, rotating images |
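
To make normalization and augmentation concrete, the sketch below treats a grayscale image as a nested list of 8-bit values. This is a simplification: in practice you would typically use an image library, which is also what resizing requires.

```python
def normalize(pixels):
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[value / 255 for value in row] for row in pixels]

def flip_horizontal(pixels):
    """Augmentation: mirror each row left to right."""
    return [row[::-1] for row in pixels]

# A tiny 2x3 grayscale "image" used only for illustration.
image = [[0, 51, 255],
         [255, 102, 0]]

normalized = normalize(image)      # every value now lies in [0.0, 1.0]
flipped = flip_horizontal(image)   # [[255, 51, 0], [0, 102, 255]]
```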

Video preprocessing techniques

| Technique name | Meaning | Example |
| --- | --- | --- |
| Frame extraction | Breaking video into individual frames | Extracting every 30th frame |
| Compression | Reducing video file size while maintaining quality | Using codecs like H.264 |
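
Frame extraction comes down to choosing which frame indices to decode. A small sketch, assuming sampling starts at frame 0 and that a video library handles the actual decoding:

```python
def frames_to_sample(total_frames, step=30):
    """Return the indices of every `step`-th frame, starting at frame 0."""
    if step < 1:
        raise ValueError("step must be at least 1")
    return list(range(0, total_frames, step))

print(frames_to_sample(100))  # [0, 30, 60, 90]
```

For a 30 fps video, a step of 30 samples roughly one frame per second.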

After preprocessing:

- Label each piece of content with the appropriate moderation category, ensuring label quality through multiple reviewers or expert validation.

- Use Amazon SageMaker Ground Truth for efficient labeling.

- Prepare the dataset by splitting it into training, validation, and test sets, with test sets reserved for later model testing.
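
The split in the last step might look like the sketch below. The 80/10/10 ratio and the fixed seed are assumptions for illustration; choose proportions that suit your dataset size.

```python
import random

def split_dataset(items, train=0.8, val=0.1, seed=42):
    """Shuffle items and split them into train, validation, and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # remainder is the held-out test set
    )

train_set, val_set, test_set = split_dataset(range(100))
```

For heavily imbalanced moderation data, a stratified split that preserves the ratio of violating to acceptable content in each set is usually a better choice than a plain shuffle.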

Step 3: Model building and training

When building an AI-powered content moderation engine using AWS, the core of your system will be machine learning models designed to classify and filter content. These models include text classifiers to detect inappropriate language, hate speech, or sensitive information, and image classifiers to identify explicit visual content.

For model training, you have two main options using AWS services:

- Custom model training: Using Amazon SageMaker, you can train custom models on your specific dataset. This approach is ideal when you have unique content policies or specialized moderation needs. You will need a large, diverse, and accurately labeled dataset of both acceptable and prohibited content. SageMaker Ground Truth can assist with data labeling. It’s important to train multi-label classifiers that categorize content into classes such as “safe,” “violent,” “adult,” or “hate speech.”

- Fine-tuning existing models: Alternatively, Amazon Bedrock offers pre-trained foundation models that can be fine-tuned for content moderation tasks. This approach can be more efficient, especially when working with limited datasets. You can adapt these models to understand the context and nuances specific to your content moderation requirements.
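
Whichever option you choose, a multi-label classifier returns one score per class, and a piece of content can receive several labels at once. The sketch below shows one way to turn those scores into labels. The thresholds are hypothetical, and treating “safe” as the absence of any other label is an assumption of this example.

```python
# Hypothetical per-label thresholds; tune them on your validation set.
THRESHOLDS = {"violent": 0.5, "adult": 0.5, "hate speech": 0.4}

def assign_labels(scores):
    """Return every label whose score meets its threshold, or ["safe"] if none do."""
    labels = [
        label
        for label, cutoff in THRESHOLDS.items()
        if scores.get(label, 0.0) >= cutoff
    ]
    return labels or ["safe"]

print(assign_labels({"violent": 0.7, "adult": 0.1, "hate speech": 0.45}))
# ['violent', 'hate speech']
```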

To enhance the model’s moderation capabilities, you can integrate Amazon Comprehend for text analysis and Amazon Rekognition for image and video analysis. Comprehend offers powerful natural language processing to detect sentiment, extract key phrases, and identify potentially inappropriate language. Rekognition provides pre-built capabilities to detect objects, scenes, and inappropriate visual content.

The model training process also involves iterative refinement, continuously improving the model’s accuracy and reducing false positives/negatives.

Step 4: Model testing and evaluation

With your model trained, it’s time to put it to the test. This step is important to understand your model’s real-world performance and identify areas for improvement in your trust and safety system. We recommend using your held-out test set to evaluate the model’s performance on unseen data.

While testing the model, focus on these key metrics:

- Accuracy: Overall correctness of predictions

- Precision: Proportion of true positives among all positive predictions

- Recall: Proportion of actual positives that the model correctly identifies

- F1 score: Harmonic mean of precision and recall

- Response time: How quickly the model makes predictions

Pay special attention to false positives (acceptable content flagged as violating) and false negatives (violating content not caught by the model). These errors can significantly impact user experience and platform safety.
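
As a worked example, the sketch below computes these metrics from binary labels, where 1 means violating and 0 means acceptable. The sample labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = violating)."""
    pairs = list(zip(y_true, y_pred))
    if not pairs:
        raise ValueError("no predictions to evaluate")
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # acceptable content flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # violating content missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positives": fp,
        "false_negatives": fn,
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
```

In this sample there are three true positives, one false positive, and one false negative, so precision and recall are both 3/4.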

In addition to these metrics, test the model’s filters thoroughly:

- Test keyword and regex filters with various inputs to ensure they’re not overly broad or narrow.

- Evaluate semantic filters on edge cases and subtle policy violations.

- To identify potential weaknesses, perform adversarial testing to “trick” the model with content designed to evade detection.
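
A table of inputs and expected outcomes is a simple way to test a regex filter for both missed matches and overly broad ones. The pattern below is a hypothetical affiliate-link filter written for this example, not a production rule.

```python
import re

# Hypothetical filter: flags URLs that carry an affiliate-style query parameter.
AFFILIATE_LINK = re.compile(
    r"https?://\S+[?&](?:ref|aff|affiliate_id)=\w+", re.IGNORECASE
)

def is_flagged(text):
    """Return True if the text contains a link matching the affiliate pattern."""
    return AFFILIATE_LINK.search(text) is not None

# (input, expected) pairs, including cases that probe for overly broad matching.
CASES = [
    ("Love it! https://shop.example/item?ref=abc123", True),
    ("Details at HTTPS://shop.example/p?id=9&aff=xy", True),
    ("I would refer a friend to this shop", False),
    ("Docs: https://shop.example/help?page=2", False),
]

failures = [(text, expected) for text, expected in CASES if is_flagged(text) != expected]
```

An empty `failures` list means every case behaved as expected. Each evasion attempt you discover during adversarial testing can be added to `CASES` as a regression check.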

Once the model is deployed, you can use Amazon SageMaker Model Monitor to track its data and prediction quality over time. This helps you identify when retraining might be necessary.

Based on these results, you may need to iterate on your model, adjust architectures and hyperparameters, or even revisit your data collection and processing steps.

Step 5: Moderation pipeline development

After training and evaluating your models, the next step is to build a moderation pipeline that can process content in real-time. This pipeline will integrate your trained models into a system that can efficiently handle incoming content, make moderation decisions, and take appropriate actions.

- Content ingestion

The moderation pipeline begins with a content ingestion system. Set up endpoints to receive incoming content from your platform, such as text, images, and videos. To manage traffic spikes and decouple components, you can use Amazon Kinesis or Amazon MSK (Managed Streaming for Apache Kafka). Both services are designed for the high-throughput, low-latency requirements of a real-time content moderation system.

- Pre-processing steps

Once content is in the queue, it moves to preprocessing. Apply the same techniques used during model training to ensure consistency. This might include tokenization for text or resizing for images. This stage prepares the content for accurate classification by your model.

- Model integration

Next comes model integration for content classification. Set up a system that can quickly pass preprocessed content through your trained model and receive predictions. Using Amazon SageMaker endpoints can facilitate low-latency, high-throughput real-time inference. We also recommend configuring auto-scaling to handle varying loads efficiently.

- Post-processing logic

After getting predictions from your model, implement post-processing logic. This stage refines the raw model outputs into actionable decisions. Create threshold-based decision rules—for example, content with a toxicity score above 0.8 might be automatically removed, while scores between 0.5 and 0.8 could be flagged for human review.

- Pipeline orchestration

To tie all these components together, use AWS Step Functions. This service allows you to define your workflow as a series of steps so it can easily manage the progression from content ingestion through to final action. Step Functions can handle error cases, retries, and parallel processing, ensuring your pipeline operates reliably and efficiently.
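
The threshold rules from the post-processing stage can be sketched as a small function. The 0.8 and 0.5 cutoffs come from the example above. The action names and the choice to send a score of exactly 0.8 to human review are assumptions.

```python
REMOVE_THRESHOLD = 0.8  # scores above this are removed automatically
REVIEW_THRESHOLD = 0.5  # scores from here up to 0.8 go to human review

def decide_action(toxicity_score):
    """Map a model toxicity score in [0, 1] to a moderation action."""
    if not 0.0 <= toxicity_score <= 1.0:
        raise ValueError("toxicity score must be between 0 and 1")
    if toxicity_score > REMOVE_THRESHOLD:
        return "remove"
    if toxicity_score >= REVIEW_THRESHOLD:
        return "human_review"
    return "publish"

for score in (0.95, 0.65, 0.2):
    print(score, decide_action(score))
```

Keeping the thresholds as named constants makes them easy to tune as you learn how many items each tier sends to human reviewers.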

Step 6: Human review system implementation

AI excels at handling the bulk of moderation tasks, but it still can’t eliminate the need for human oversight, especially for managing edge cases. To implement a human review system in your AI content moderation engine, start by designing an intuitive interface for human moderators using AWS Amplify to review flagged content efficiently.

Additionally, develop a workflow that addresses edge cases and potential false positives, including an escalation system for particularly challenging situations. Then, integrate Amazon Augmented AI (A2I) into your system to seamlessly blend human review with AI workflows.

This allows efficient human review of machine learning predictions wherever necessary. Notably, it establishes a feedback loop where human decisions continuously inform and improve your AI model, improving its accuracy over time.
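
One way to picture the feedback loop is as a function that turns reviewer decisions into new training examples. This is a simplified sketch with hypothetical data structures, not the Amazon A2I output format.

```python
def build_retraining_examples(predictions, human_reviews):
    """Pair each reviewed item with its human label and note model disagreements.

    predictions:   {content_id: model_label}
    human_reviews: {content_id: human_label}
    """
    examples = []
    for content_id, human_label in human_reviews.items():
        model_label = predictions.get(content_id)
        examples.append({
            "content_id": content_id,
            "label": human_label,  # the human decision is treated as ground truth
            "model_was_wrong": model_label != human_label,
        })
    return examples

predictions = {"r1": "spam", "r2": "safe", "r3": "hate speech"}
human_reviews = {"r1": "safe", "r3": "hate speech"}
examples = build_retraining_examples(predictions, human_reviews)
```

Items where `model_was_wrong` is true are often the most valuable additions to the next training run, since they mark the cases the model currently misjudges.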

Step 7: Integration and deployment

The final step is to integrate your moderation engine into your existing platform, deploy it, and set up systems for ongoing monitoring and improvement.

You can first use Amazon API Gateway to create, publish, and manage APIs for your moderation service. Then, for easy scalability and management, deploy your solution using AWS Elastic Beanstalk or Amazon ECS.

Comprehensive logging and monitoring using Amazon CloudWatch will help you track key metrics like moderation accuracy, response times, and throughput and set up alerts for anomalies or performance issues.
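
As an illustration, the helper below assembles custom metrics in the shape that CloudWatch's `PutMetricData` API accepts. The namespace and metric names are hypothetical, and the actual call is shown only as a comment so the sketch runs without AWS credentials.

```python
def build_metric_payload(latencies_ms, flagged, total):
    """Build keyword arguments shaped for CloudWatch's PutMetricData API."""
    if total <= 0 or not latencies_ms:
        raise ValueError("need at least one processed item and one latency sample")
    return {
        "Namespace": "ContentModeration",  # hypothetical namespace
        "MetricData": [
            {
                "MetricName": "AverageLatency",
                "Value": sum(latencies_ms) / len(latencies_ms),
                "Unit": "Milliseconds",
            },
            {"MetricName": "ItemsProcessed", "Value": total, "Unit": "Count"},
            {
                "MetricName": "FlaggedRate",
                "Value": 100 * flagged / total,
                "Unit": "Percent",
            },
        ],
    }

payload = build_metric_payload([120, 80, 100], flagged=5, total=200)
# With boto3 installed and credentials configured:
#   boto3.client("cloudwatch").put_metric_data(**payload)
```

A CloudWatch alarm on a metric such as `FlaggedRate` could then alert you when the share of flagged content shifts unexpectedly.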

Even after deployment, moderation decisions and user feedback should be reviewed regularly to identify areas for improvement. So, continuously update your training data with new examples, focusing on edge cases and evolving content trends. Retrain your model periodically to maintain high performance as content patterns evolve.

Note: Amazon API Gateway is primarily designed for managing HTTP/HTTPS APIs. For event-driven, serverless architectures, AWS Lambda and Amazon EventBridge could be more suitable options for triggering moderation workflows and integrating with other services.

You can also use AWS Lambda and AWS Fargate for serverless and managed container deployments, respectively, which can simplify the deployment process and reduce operational overhead.

While the steps outlined above offer a solid framework for building an AI-powered content moderation engine, implementing such a system in the real world presents challenges.

From handling nuanced content to keeping up with new standards, each barrier requires careful consideration and practical solutions. Let’s explore some of these challenges and what you can do to address them effectively.

Challenges and solutions in AI content moderation

1. Handling nuanced cases

AI models often struggle with subtleties. Sarcasm, cultural references, and context-dependent content can slip through or trigger false alarms.

Solution:

- Create a hybrid approach. Though AI can handle most moderation tasks, you can use human moderators for tricky, nuanced cases.

- Use Amazon Augmented AI (A2I) to integrate human review into your AI workflows seamlessly.

This way, you get both: AI efficiency and human discernment.

2. Keeping up with evolving standards

Online community norms are as dynamic as the internet itself. What’s acceptable today might be taboo tomorrow.

Solution:

- Implement a continuous learning pipeline.

- Use Amazon SageMaker to retrain your models with fresh data regularly.

- Set up a feedback loop where human moderators can flag new trends or shifting norms, ensuring your AI stays culturally aware and up-to-date.

3. Tackling multimodal content

Users don’t just post text; they share images, videos, and audio too. Moderating this mix of content types is like juggling while riding a unicycle.

Solution: Build a multimodal moderation powerhouse. Use Amazon Rekognition for image and video analysis, Amazon Transcribe for speech-to-text conversion, and Amazon Comprehend for text analysis. Combine these services to create a comprehensive moderation system that can handle whatever users throw at it.
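
Once each service has scored its part of a post, you need a rule for combining the results. The sketch below takes the highest score across modalities, which is one conservative choice among several. The modality names and scores are made up for illustration.

```python
def combine_modalities(scores):
    """Return the highest risk score and the modality that produced it."""
    if not scores:
        raise ValueError("at least one modality score is required")
    worst = max(scores, key=scores.get)
    return scores[worst], worst

# Hypothetical per-modality risk scores for a single post.
post_scores = {"text": 0.2, "image": 0.9, "audio_transcript": 0.4}
overall, source = combine_modalities(post_scores)
print(overall, source)  # 0.9 image
```

Taking the maximum means a single risky image is enough to flag a post with harmless text. A weighted average is an alternative if that proves too strict for your platform.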

4. Balancing accuracy and scale

You need to moderate millions of posts with high accuracy. AI can help automate the process, but the models must be highly efficient and parallelizable to keep up with the influx of content.

Solution: Use Amazon SageMaker to fine-tune your models for accuracy and speed. Implement a tiered system where clear-cut cases are handled automatically while borderline cases are escalated for human review. You can use AWS’s scalable infrastructure to handle traffic spikes.

5. Protecting moderator well-being

Reviewing disturbing content is stressful for human moderators and poses a risk to their psychological well-being.

Solution: Train your models to handle the most disturbing content, reserving human review for less traumatic edge cases. Implement rotation systems and provide mental health support for your moderation team.

Build an intelligent content moderation engine with Simform

Developing an AI-powered content moderation engine on AWS requires expertise and experience. Simform uses AWS services to create robust, scalable moderation solutions tailored to your needs.

Our team of AWS-certified architects and ML experts can help you:

- Optimize your AWS architecture for content moderation using services like Amazon S3, SageMaker, Comprehend, and Rekognition.

- Develop and fine-tune custom AI models for your specific content types and policy guidelines.

- Implement scalable moderation pipelines that integrate seamlessly with your existing systems.

- Set up human-in-the-loop workflows using Amazon Augmented AI (A2I) for handling complex cases.

We make sure your moderation system evolves to meet your needs through continuous improvement processes and stays aligned with content moderation regulations. Contact us today for a free consultation and discover how we can protect your brand with an AWS-powered moderation solution.