Despite intense competition in today’s financial market, many banks still rely on slow mainframes and legacy technologies. Capital One stands out from the rest: it has evolved into a fully digital banking organization while remaining a tech company at heart. A modern tech infrastructure has enabled the company to develop apps and websites that respond to the evolving needs of its users.

Founded in 1988, Capital One still considers itself a start-up in the banking community, even after all these years! Right from the start, the executive team has been committed to running the bank more like a tech company than a typical financial institution. Down the line, the company adopted a DevOps strategy to combat inconsistent releases, multiple handoffs, and manual errors in development that took ages to resolve.

Enterprises that want to deliver quality software faster can learn valuable lessons from the Capital One DevOps case study. Read on to discover how Capital One became an early adopter of DevOps practices and made a name for itself in the DevOps world.

The DevOps journey of Capital One: the when and the why

In 2010, Capital One started recognizing their customers’ inclination towards online and mobile options to carry out their banking activities. Given that, the senior management decided to develop the company’s technical infrastructure and set up a culture to attract and retain talented people.

Capital One focused on hiring engineers and aligning them with the appropriate business-centric decision-makers in the team. Soon, the company adopted agile software development, laying the foundation of the DevOps implementation.

Responding quickly to customer feedback has always been a top priority at Capital One. DevOps was therefore a natural choice for the teams involved to achieve faster development and deployment cycles.

From 2012 to 2020, Capital One underwent the following changes:

- Adoption of agile practices

- Building automated test cases

- Automating deployments and tests

- Migration to the public cloud

All the above changes led the bank to become an open source-first organization. In 2020, Capital One became the first U.S. bank to announce the migration of its legacy on-premises data centers to the public cloud.

The success story: the how

Though Capital One had a small team in their early days of adopting DevOps, they wanted to implement an enterprise-wide strategy. Eventually, the company approached its DevOps initiatives in three phases, as shown in the image below.

Creating cross-functional SWAT teams

Capital One initiated the transition towards DevOps by assigning dedicated, cross-functional “SWAT” teams to two of its legacy applications. These teams implemented configuration management, automated critical processes, and improved the workflow of each app. After that, each app’s own team retained ownership of its software delivery process. The process was repeated for four more applications before other teams were allowed to use the best practices identified by the SWAT teams.

Having a cross-functional SWAT team from the early stages helped Capital One create shared goals. It also helped development and operations teams learn the necessary DevOps disciplines.

Leveraging microservices architecture

Capital One, like many other companies, started with a monolithic architecture. Gradually, however, its projects grew large enough to justify the overhead that microservices require. And so, the bank started investing more time and effort in studying the microservices architecture and its relevance to the company.

The main goal at Capital One was to increase the speed of delivery without compromising quality. The development team decided to leverage automated deployments compliant with their general quality standards. They created rigorous and explicit guidelines for software delivery and changes in production.

The Capital One team introduced immutable stages in their pipeline execution, such as:

- Source control mechanisms

- Secure storage of application binary

- Access-controlled application environment

- Quality and security checks

Each application team had to meet these imperatives before transferring their code to production.
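A gate like the one described above can be sketched in a few lines of Python. This is only an illustration: the `PipelineRun` fields and the `production_gate` function are hypothetical names, and the source does not describe how Capital One actually implemented its checks.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineRun:
    """Facts recorded about one pipeline execution (hypothetical fields)."""

    source_in_version_control: bool
    binary_in_secure_storage: bool
    environment_access_controlled: bool
    quality_and_security_checks_passed: bool


def production_gate(run: PipelineRun) -> list[str]:
    """Return the unmet requirements; an empty list means the run may be promoted."""
    requirements = {
        "source control": run.source_in_version_control,
        "secure binary storage": run.binary_in_secure_storage,
        "access-controlled environment": run.environment_access_controlled,
        "quality and security checks": run.quality_and_security_checks_passed,
    }
    # Keep the names of requirements that were not met, in declaration order.
    return [name for name, met in requirements.items() if not met]


if __name__ == "__main__":
    run = PipelineRun(True, False, True, True)
    missing = production_gate(run)
    print("promote" if not missing else f"blocked: {missing}")
```

Returning the list of unmet requirements, rather than a single boolean, lets a team see exactly which imperative blocked the release.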

Ultimately, the implementation of microservices provided Capital One with benefits such as:

- Asymmetric service scaling

- Intelligent deployment

- Zero downtime

- Separation of logic and responsibilities

- Error handling

- Resiliency design patterns

Building an on-demand infrastructure on AWS

Based on user feedback at this point, product managers at Capital One focused on upgrading the banking and financial services to a top-notch experience. That’s precisely why the company had a cloud-first policy and architects deployed the new applications on the cloud.


The following AWS services enabled Capital One’s development team to obtain valuable user insights and respond faster:

- Amazon Virtual Private Cloud (Amazon VPC)

- Amazon Simple Storage Service (Amazon S3)

- Amazon Elastic Compute Cloud (Amazon EC2)

- Amazon Relational Database Service (Amazon RDS)

Automating delivery pipelines using Jenkins

Capital One uses a variety of pipelines to scan and test its code, both to maintain a consistent quality bar across the company and to deliver faster. Every code update undergoes a series of rigorous automated tests, including unit tests, integration tests, security scanning, and quality checks. The pipeline automatically deploys a release once the code passes all the tests. This way, users do not experience downtime, and teams can release updates without any disruption.

The development team leveraged Jenkins, a widely used open-source automation server for building continuous integration and delivery pipelines. This way, Capital One did not have to reinvent the wheel in-house. The Jenkins-based pipeline helped break down the entire process into stages like “application build,” “integration testing,” and “deployment,” which are further divided into additional steps. Capital One uses code generation tools to generate Jenkinsfiles for different applications.
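To make the idea of generated Jenkinsfiles concrete, here is a minimal Python sketch that renders a declarative Jenkinsfile from a mapping of stage names to shell commands. The `render_jenkinsfile` function and the `make` commands are assumptions made for illustration; Capital One's actual generation tooling is not described in the source.

```python
from __future__ import annotations


def render_jenkinsfile(stages: dict[str, list[str]]) -> str:
    """Render a declarative Jenkinsfile with one stage per entry and one `sh` step per command."""
    lines = ["pipeline {", "    agent any", "    stages {"]
    for stage_name, commands in stages.items():
        # This sketch does no escaping, so it rejects text that would break the quoting.
        for text in (stage_name, *commands):
            if "'" in text:
                raise ValueError(f"single quotes are not supported: {text!r}")
        lines.append(f"        stage('{stage_name}') {{")
        lines.append("            steps {")
        for command in commands:
            lines.append(f"                sh '{command}'")
        lines.append("            }")
        lines.append("        }")
    lines += ["    }", "}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Stage names follow the article; the commands are placeholders.
    print(render_jenkinsfile({
        "Application build": ["make build"],
        "Integration testing": ["make integration-test"],
        "Deployment": ["make deploy"],
    }))
```

Generating the file from data keeps every application's pipeline structurally identical, so a change to the shared template reaches all teams at once.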

Jenkins enabled Capital One to automate its software delivery, increase operational stability and improve the developer experience as well.


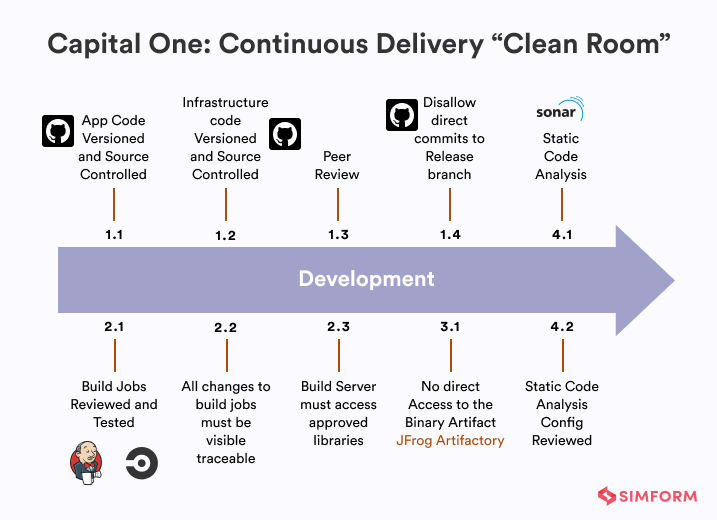

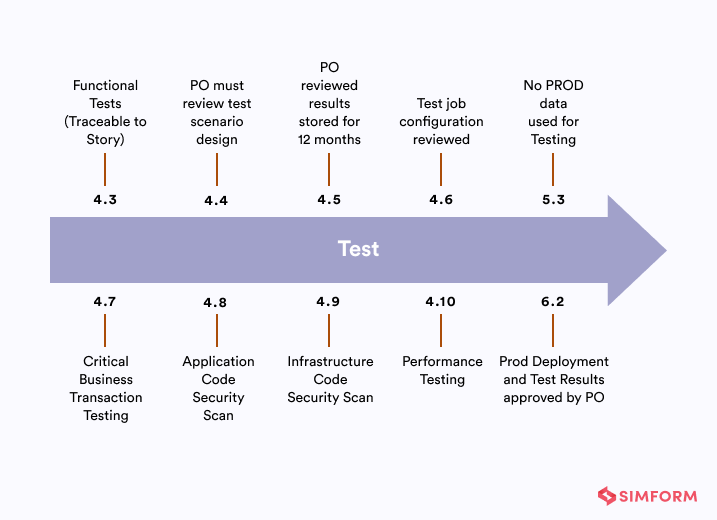

Governance with “Clean Rooms”

Capital One aspired to achieve “no fear releases” to support innovation. However, this also required a “you build it, you own it” approach, so that everyone is accountable for their actions and their role in software delivery. Tapabrata “Topo” Pal, a well-known strategist and DevOps evangelist, and his team borrowed the “clean room” concept and adapted it to the software development lifecycle to support this combination of fearlessness and accountability.

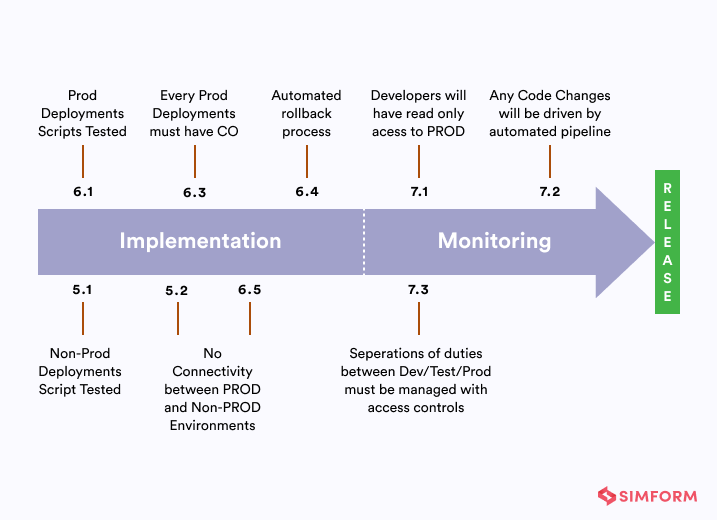

The company’s virtual development “clean room” is defined by a set of explicit guidelines that ensure code quality before release. These guidelines cover processes such as identifying and registering all product pipelines, testing and scanning every code change, and controlling access to production servers.

In simple words, “clean room” focuses on defect prevention rather than defect removal.

Ultimately, the “clean room” model enabled Capital One to identify issues across its product pipelines and build in quality from the start.

Introducing chaos engineering into DevOps practices

Despite access controls and safeguards, software deployments can still go wrong. Cloud failures are unpredictable, unavoidable, and, in cases such as zone outages, risky. It wouldn’t be wrong to say that “continuous delivery” also introduces the probability of “continuous chaos.” Capital One has a team that works on solving that very problem.

Traditional approaches cannot predict all failure modes due to complex request patterns, volatile data conditions, etc. In 2017, Capital One followed the example of Netflix and implemented its own version of chaos engineering.

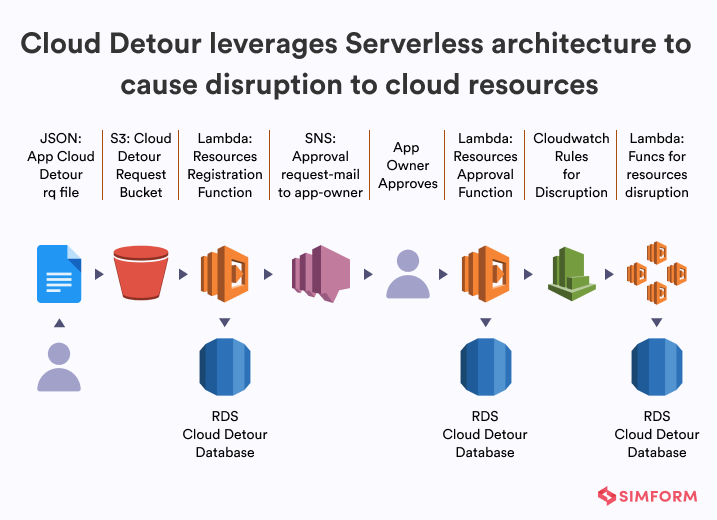

The company introduced a disruption tool called “Cloud Detour” to test the resilience of the applications they build. Here, mission-critical applications are tested against different failure scenarios. This helps build solutions that ensure adequate resiliency and serve as a robust disaster recovery strategy.
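The general technique of failure injection can be illustrated with a small Python sketch. It is not how Cloud Detour works internally, which the source does not describe; `with_failure_injection`, `call_with_fallback`, and `InjectedFailure` are hypothetical names. The sketch wraps a dependency call so that it fails at a configurable rate, then checks that the caller's fallback path still returns a result.

```python
import random
from typing import Callable


class InjectedFailure(Exception):
    """Raised in place of a real dependency call during an experiment."""


def with_failure_injection(
    call: Callable[[], str], failure_rate: float, rng: random.Random
) -> Callable[[], str]:
    """Wrap `call` so that it fails with probability `failure_rate`."""

    def wrapped() -> str:
        # rng.random() is in [0.0, 1.0), so a rate of 1.0 always fails and 0.0 never does.
        if rng.random() < failure_rate:
            raise InjectedFailure("simulated dependency outage")
        return call()

    return wrapped


def call_with_fallback(
    primary: Callable[[], str], fallback: Callable[[], str], attempts: int = 3
) -> str:
    """Try `primary` up to `attempts` times, then fall back."""
    for _ in range(attempts):
        try:
            return primary()
        except InjectedFailure:
            continue
    return fallback()


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so an experiment can be replayed
    flaky = with_failure_injection(lambda: "live data", 0.5, rng)
    results = [call_with_fallback(flaky, lambda: "cached data") for _ in range(10)]
    print(results)
```

Seeding the random generator makes an experiment repeatable, which helps when a failure scenario exposes a bug that needs to be reproduced.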

“Cloud detour addresses the need for a chaos engineer automation tool by providing failure-as-a-service for applications. You discover so many things.”

– Sathiya Shunmugasundaram, Former Lead Software Engineer, Capital One

Enforcing security in DevOps

Initially, Capital One followed a manual and lengthy security certification process. However, the company soon realized the importance of securing its container environments and strengthening encryption across all its services.

As a result, Capital One embedded automated security checks into its DevOps pipeline. This helped the company accelerate the assessment of misconfigurations and vulnerabilities in its containers and virtual machine images.

The DevOps team soon had API access to vulnerability management and policy compliance tools. It enabled them to run necessary tests, obtain reports, and start rectifications without involving the security team.
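As a rough illustration of what a self-service check might look like once scan results are available to the pipeline, the Python sketch below filters findings against a severity policy. The `Finding` record, the severity scale, and `blocking_findings` are assumptions; the source does not name the tools or report formats Capital One used.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical severity scale, ordered from least to most severe.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    """One vulnerability or misconfiguration reported for an image (hypothetical shape)."""

    identifier: str
    severity: str  # must be a key of SEVERITY_ORDER


def blocking_findings(findings: list[Finding], max_allowed: str = "medium") -> list[Finding]:
    """Return the findings whose severity exceeds the policy's `max_allowed` level."""
    threshold = SEVERITY_ORDER[max_allowed]
    return [f for f in findings if SEVERITY_ORDER[f.severity] > threshold]


if __name__ == "__main__":
    report = [
        Finding("outdated-base-image", "medium"),
        Finding("open-admin-port", "high"),
    ]
    blockers = blocking_findings(report)
    print("pass" if not blockers else f"fail: {[f.identifier for f in blockers]}")
```

A pipeline step could run a check of this kind on every image build and stop the release when the returned list is not empty.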

What can organizations learn from Capital One’s DevOps strategy?

- Speed is the new currency essential for responding to evolving user demands. Collaboration between internal teams and automation of various processes help you achieve just that.

- DevOps practices and team collaborations encourage you to keep trying new ideas. So, embrace a fail-fast mindset, and you’ll soon find a solution that works.

- Adopting continuous monitoring practices leads your organization to obtain quality results and achieve scalability even if your processes had a slow-paced start.

- The centralization of delivery tooling eliminates the need to develop and manage each team’s tech-stack in different silos. In turn, it reduces duplicate efforts and increases reuse of resources.

- Cloud infrastructure enables flexible use of resources. As a result, you can avoid resource shortages and scale with ease when the need arises.

- Analyze all the existing development processes, and then set a quality bar to achieve maximum results. Then, automate quality-control processes to minimize human error and simplify DevOps compliance.

Simform’s solution

Yes, DevOps implementation can undoubtedly boost your business by enhancing the productivity of your development and operations teams. However, DevOps is also one of the most challenging fields today, given the depth of collaboration it requires. And the desired results may not be achieved when an organization rushes into the implementation without knowing how DevOps works.

You’ll need a reliable partner who can successfully drive your organization through all the required transformations. Our extended engineering team can help your organization automate tasks, collaborate better, and ship product updates rapidly. We understand that every business is unique. And so are its challenges! So let us join hands to find your organization-specific solutions so that you can sail any troubled water smoothly.