With over 80% of companies struggling to control cloud costs, according to The State of the Cloud Report, optimizing your cloud spend should be a priority.

And no wonder – the same report shows that 60% of organizations cite cost optimization as their primary cloud objective. However, most lack the expertise to make this goal a reality.

Migrating to AWS provides an ideal opportunity to architect cost-efficient systems from the start and correct expensive legacy practices. The AWS Well-Architected Framework outlines cost optimization as one of the six pillars driving cloud success.

Luckily, with the right guidance, your business can avoid becoming part of the 80% struggling with runaway public cloud bills. As an AWS Premier Consulting Partner with the AWS Migration Competency, Simform has helped clients save massive amounts through optimized migrations designed to maximize ROI.

Based on our first-hand experience of successfully migrating workloads for various industries, we’ve shared our proven cost optimization blueprint to help your organization.

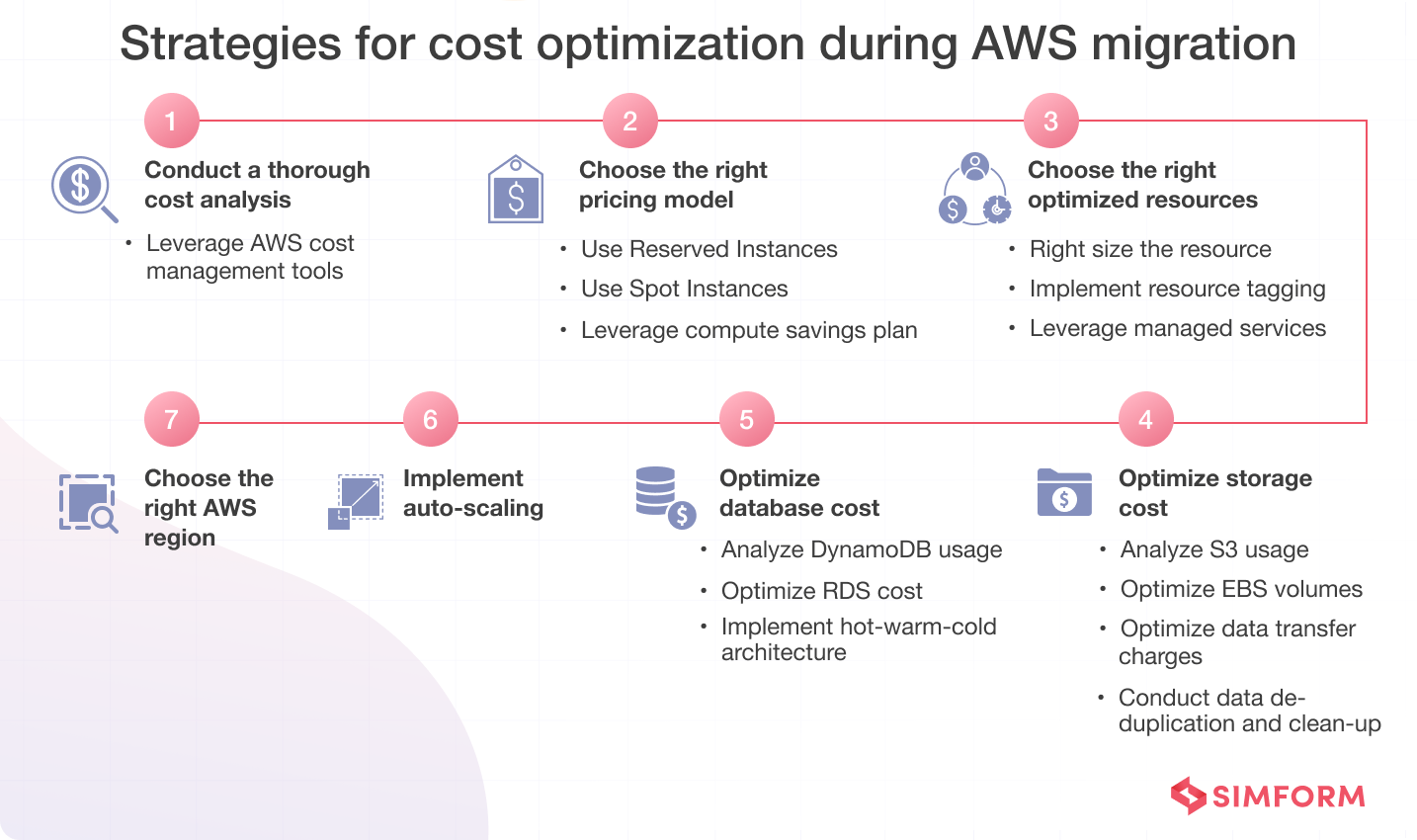

Best practices and strategies for cost optimization during AWS migration

Keeping tight control of spending from the start is key to setting yourself up for long-term savings. Strategies such as assessing resource utilization, right-sizing EC2 instances, taking advantage of AWS pricing models, and implementing automation are reliable ways to get there.

#1. Conduct a thorough cost analysis

When it comes to cloud computing, cost optimization directly affects the efficiency and ROI of your cloud investment.

To thoroughly analyze your enterprise’s cloud spending:

- Examine infrastructure expenses related to instance types, storage, databases, networking, and other resources to identify areas of overprovisioning and potential savings.

- Audit software costs across purchased applications, licenses, and platform services.

- Review resource utilization to rightsize workloads using autoscaling, reservations, and serverless options.

- Consider technical debt and migrating legacy systems that incur unnecessary hosting fees.

- Track efficiency, waste, and consumption metrics to get insight into your spending.

In addition to all these, calculate the total cost of ownership (TCO) by considering your direct (physical machines, servers, labor, storage, software licenses, and data centers) and indirect (training, integration, and migration) costs. This will give you a comprehensive understanding of the true costs of operating in the cloud over time.
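
The TCO comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a costing model: the `CostItem` class, the cost categories, and every dollar figure are made-up placeholders that you would replace with your own numbers.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    name: str
    annual_cost: float          # recurring cost per year, in USD
    one_time_cost: float = 0.0  # paid once, e.g. migration or training


def total_cost_of_ownership(items, years):
    """Sum one-time costs plus recurring costs over the given horizon."""
    return sum(item.one_time_cost + item.annual_cost * years for item in items)


# All figures below are hypothetical placeholders, not benchmarks.
on_prem = [
    CostItem("servers and storage", annual_cost=120_000),
    CostItem("data center and power", annual_cost=40_000),
    CostItem("operations labor", annual_cost=90_000),
]
cloud = [
    CostItem("AWS usage", annual_cost=150_000),
    CostItem("operations labor", annual_cost=60_000),
    CostItem("migration project", annual_cost=0, one_time_cost=80_000),
    CostItem("team training", annual_cost=0, one_time_cost=15_000),
]

on_prem_tco = total_cost_of_ownership(on_prem, years=3)
cloud_tco = total_cost_of_ownership(cloud, years=3)
print(f"3-year on-premises TCO: ${on_prem_tco:,.0f}")
print(f"3-year cloud TCO:       ${cloud_tco:,.0f}")
```

Keeping indirect costs such as migration and training as explicit line items makes it harder to overlook them when comparing the two totals.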

With this visibility, you can then use AWS tools to monitor, control, and optimize your cloud spending.

a. Leverage AWS cost management tools

You can use AWS Budgets to set cost or usage thresholds and get custom alerts when actual or forecasted values exceed them or, for reservation and Savings Plans budgets, when utilization or coverage falls below the target you define.
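
As a rough sketch of what such a budget looks like in code, the helper below builds the request payload for the AWS Budgets `CreateBudget` API. The function name `build_budget_request`, the account ID, the budget name, and the email address are all hypothetical; the code only assembles a dictionary and does not call AWS.

```python
def build_budget_request(account_id, name, monthly_limit_usd, alert_percent, email):
    """Return keyword arguments shaped for the AWS Budgets CreateBudget API."""
    if alert_percent <= 0:
        raise ValueError("alert_percent must be positive")
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": name,
            # The API expects the amount as a string.
            "BudgetLimit": {"Amount": f"{monthly_limit_usd:.2f}", "Unit": "USD"},
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": float(alert_percent),
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
        ],
    }


# Hypothetical values for illustration only.
request = build_budget_request(
    "123456789012", "migration-monthly", 5000, 80, "finops@example.com"
)
print(request["Budget"]["BudgetLimit"])
```

With boto3 installed and credentials configured, the payload could be sent with `boto3.client("budgets").create_budget(**request)`.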

Other popular AWS cost management tools to calculate your enterprise’s cloud spending include:

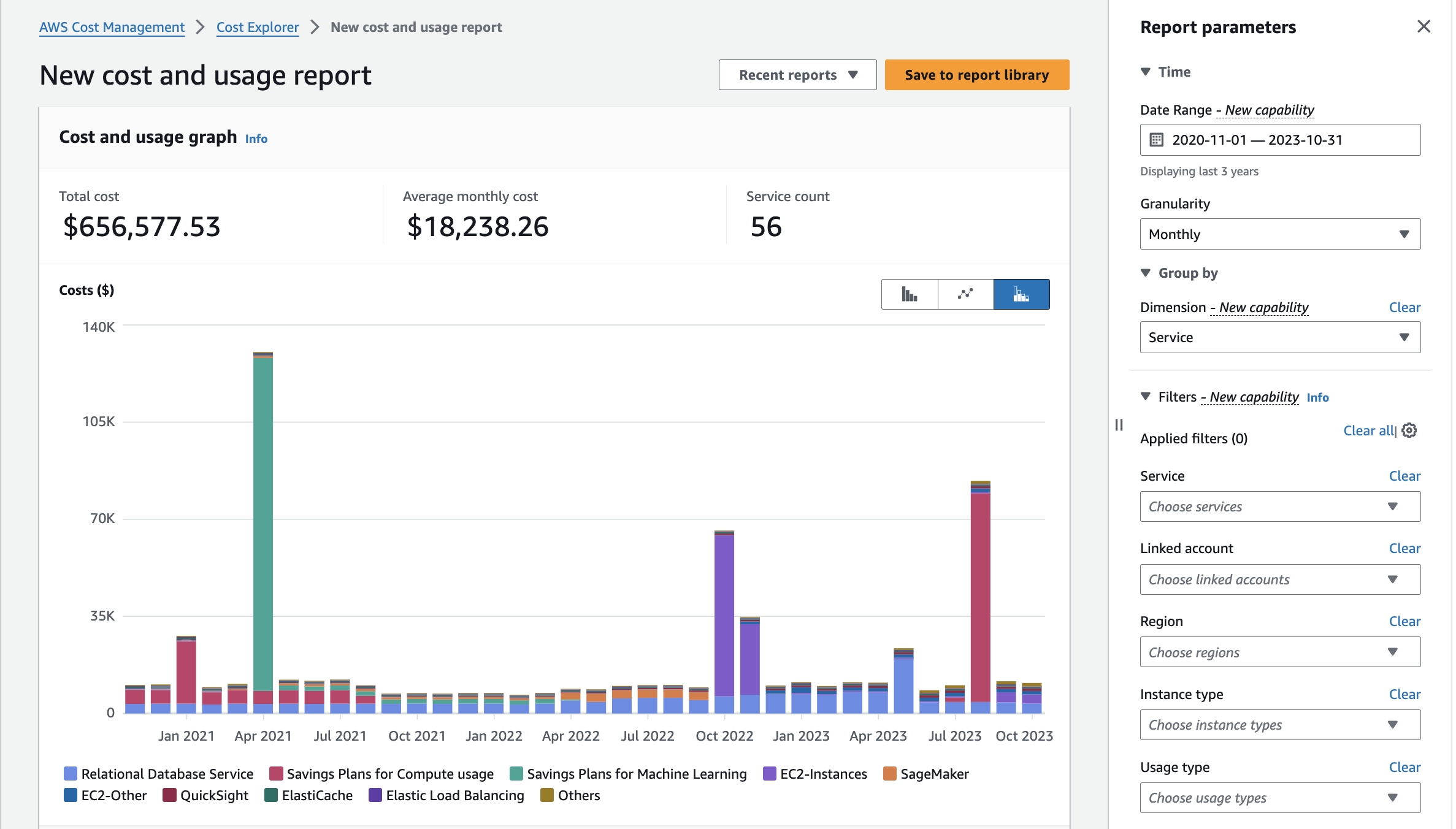

1. AWS Cost Explorer

Source: Cloud Financial Management – AWS Cost Explorer – Amazon Web Services

AWS Cost Explorer provides comprehensive insights into your past and forecasted costs. You can decode the spending patterns, detect the resources that cost most, and predict future spending, so as to make better decisions regarding resource optimization and reduce unnecessary expenses.
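
To illustrate how you might find the costliest resources programmatically, the sketch below ranks services from a response shaped like the Cost Explorer `GetCostAndUsage` API output (grouped by the `SERVICE` dimension with the `UnblendedCost` metric). The `top_services` helper and the sample amounts are assumptions made for illustration; the sample is hand-written rather than real billing data.

```python
from collections import defaultdict


def top_services(response, n=3):
    """Rank services by total UnblendedCost across all returned periods."""
    totals = defaultdict(float)
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            service = group["Keys"][0]
            totals[service] += float(group["Metrics"]["UnblendedCost"]["Amount"])
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


def _group(service, amount):
    return {"Keys": [service], "Metrics": {"UnblendedCost": {"Amount": amount, "Unit": "USD"}}}


# Hand-written sample in the shape returned by get_cost_and_usage(
#   Granularity="MONTHLY", Metrics=["UnblendedCost"],
#   GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}])
sample_response = {
    "ResultsByTime": [
        {"Groups": [
            _group("Amazon Elastic Compute Cloud - Compute", "1200.50"),
            _group("Amazon Simple Storage Service", "300.25"),
            _group("Amazon Relational Database Service", "800.00"),
        ]},
        {"Groups": [
            _group("Amazon Elastic Compute Cloud - Compute", "1100.00"),
            _group("Amazon Simple Storage Service", "310.75"),
            _group("Amazon Relational Database Service", "900.00"),
        ]},
    ]
}

for service, cost in top_services(sample_response, n=2):
    print(f"{service}: ${cost:,.2f}")
```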

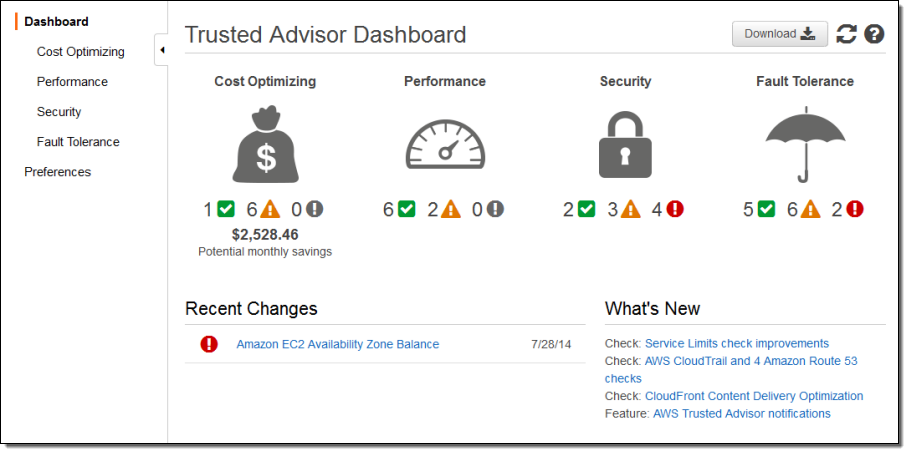

2. AWS Trusted Advisor

Source: AWS Trusted Advisor For Everyone

For a more personalized approach to cost optimization, turn to AWS Trusted Advisor. This tool checks for idle or underutilized EC2 instances, idle load balancers, and underutilized EBS volumes so you can right-size and reduce waste. It also flags security gaps such as overly permissive security groups and weak IAM settings, and warns you when usage approaches service limits.

Trusted Advisor’s automated recommendations make it easier for you to enforce cloud governance, ensure reliability, and substantially cut costs.

Simform reduced maintenance costs by 25% with AWS Trusted Advisor

At Simform, we partnered with a client operating in the codeless AI infrastructure space that faced scalability, maintenance, and flexibility challenges with its on-premises infrastructure. Our team assessed their current infrastructure and designed an efficient architecture using AWS services like EC2, Auto Scaling, and Elastic Load Balancing (ELB).

A key aspect of our migration strategy was extensive use of AWS Trusted Advisor. Our experts used Trusted Advisor throughout the migration to optimize provisioning and strengthen security. It helped the client scale seamlessly, with a 50% improvement in release time for new features and a 25% reduction in maintenance costs.

#2. Choose the right pricing model

When migrating to AWS infrastructure, selecting the pricing model is essential for cost visibility, utilization efficiency, opportunity costs, and competitive advantage. So, decide which of the three options (Reserved Instances, Spot Instances, or Compute Savings Plans) is appropriate for your business needs.

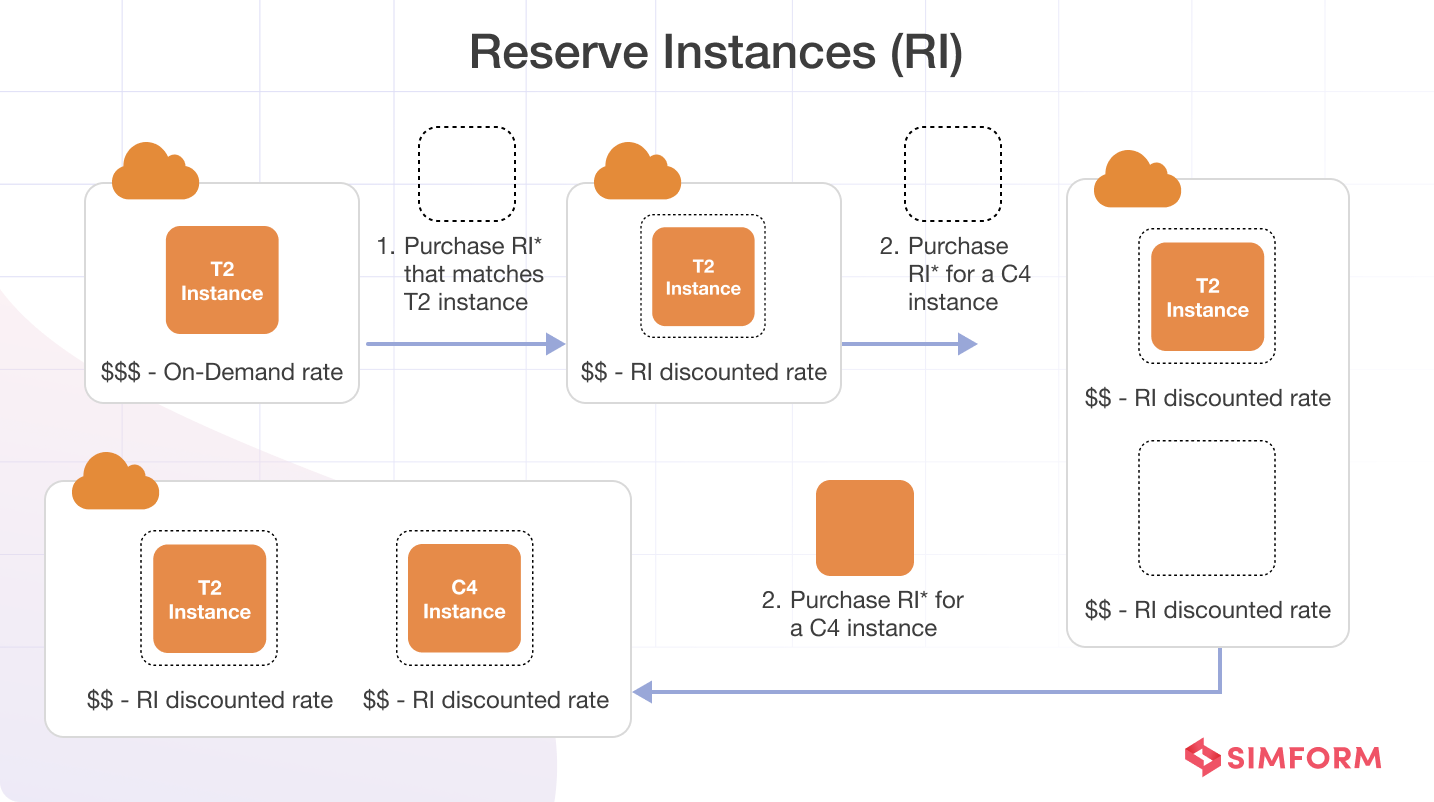

a. Use Reserved Instances (RI)

Reserved Instances (RIs) let you commit to Amazon EC2 and Amazon RDS capacity over 1- or 3-year terms in exchange for discounts of up to 72% compared to on-demand pricing, depending on instance type, region, and tenancy.

Use this pricing model for workloads that require steady-state usage of specific instance types over a 1 or 3-year term. Good candidates are databases, web servers, business applications, and other core systems where resource requirements are predictable and unlikely to change dramatically.

Some key AWS services where RI can yield significant savings include Amazon EC2, Amazon RDS, Amazon Redshift, Amazon ElastiCache, and Amazon DynamoDB.

There are three payment options for RIs:

- All up-front (AURI) – where you pay the entire cost at the beginning,

- Partial up-front (PURI) – where you pay part of the cost at the start and a discounted hourly rate for the rest of the term, and

- No up-front (NURI) – where you pay only a discounted hourly charge.
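
One way to compare the three payment options is to convert each into an effective hourly rate and a break-even utilization against on-demand pricing. The sketch below does that with invented prices; the function names and every rate shown are hypothetical, so substitute the figures from the AWS pricing pages for your instance type and region.

```python
HOURS_PER_YEAR = 8760


def effective_hourly_cost(upfront, hourly, term_years):
    """Spread the upfront payment over the term and add the hourly charge."""
    return upfront / (HOURS_PER_YEAR * term_years) + hourly


def break_even_utilization(ri_effective_hourly, on_demand_hourly):
    """Fraction of the term the instance must run for the RI to beat on-demand."""
    return ri_effective_hourly / on_demand_hourly


# Hypothetical prices for a 1-year term; not real AWS rates.
ON_DEMAND_HOURLY = 0.10
options = {
    "all_upfront": effective_hourly_cost(upfront=525.60, hourly=0.0, term_years=1),
    "partial_upfront": effective_hourly_cost(upfront=262.80, hourly=0.032, term_years=1),
    "no_upfront": effective_hourly_cost(upfront=0.0, hourly=0.065, term_years=1),
}

for name, rate in options.items():
    utilization = break_even_utilization(rate, ON_DEMAND_HOURLY)
    print(f"{name}: ${rate:.3f}/hour, breaks even at {utilization:.0%} utilization")
```

If a workload is expected to run for less than the break-even share of the term, on-demand or Spot capacity is likely the cheaper choice.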

b. Use Spot Instances

Spot Instances allow you to request unused EC2 capacity at up to 90% off the on-demand prices without a term commitment. The trade-off is that AWS can reclaim the capacity with a two-minute warning, so workloads must tolerate interruption. As you migrate apps with flexible start/stop times, Spot Instances can significantly lower compute expenses.

So, choose this model when you’re:

- Optimizing for cost-effective workloads with flexible start and end times.

- Handling fault-tolerant and loosely coupled applications.

- Managing batch processing, data analysis, and testing environments.

- Running CI/CD pipelines and containerized workloads.

- Handling time-flexible tasks like rendering, encoding, and HPC workloads.
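
Workloads like these typically stay fault-tolerant on Spot by watching for the interruption notice that EC2 publishes about two minutes before reclaiming an instance. The sketch below parses a notice in the JSON shape served by the instance metadata path `/latest/meta-data/spot/instance-action`; the helper name and the sample payload are illustrative assumptions, and the code deliberately avoids any network call.

```python
import json
from datetime import datetime, timezone


def seconds_until_interruption(notice_json, now):
    """Return (action, seconds remaining) from a Spot instance-action notice."""
    notice = json.loads(notice_json)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    deadline = deadline.replace(tzinfo=timezone.utc)
    return notice["action"], (deadline - now).total_seconds()


# Hand-written sample notice; on a real instance this would be fetched
# from the instance metadata service.
sample_notice = '{"action": "terminate", "time": "2024-01-01T12:02:00Z"}'
current_time = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)

action, remaining = seconds_until_interruption(sample_notice, current_time)
print(f"Instance will {action} in {remaining:.0f} seconds; checkpoint work now.")
```

A batch job could poll for the notice between work items and save a checkpoint once one appears.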

Simform reduced the cost of the development environment by 50% using Spot Instances

When collaborating with a client on an advertising, marketing, and customer analytics platform for eCommerce stores, we encountered challenges with their existing DigitalOcean infrastructure, including scalability, availability, integration, and safety problems.

Our developers efficiently addressed these concerns by migrating the infrastructure to AWS and strategically utilizing Spot Instances for the development environment.

The enhanced scalability and security resulted in an impressive cost reduction of over 50% for the development environment.

c. Leverage Compute Savings Plans

For a more flexible and predictable approach to cost optimization, consider Savings Plans. Compute Savings Plans offer up to 66% savings compared to on-demand pricing and apply across instance families, sizes, and regions, while EC2 Instance Savings Plans offer up to 72% in exchange for committing to an instance family in a region.

Rather than paying variable on-demand rates, Savings Plans let you commit to a consistent hourly spend. There is a catch, though: they require a 1- or 3-year commitment and careful capacity planning.

Continuous monitoring of your application’s usage patterns will help you regularly review your workload requirements and adjust your commitment without sacrificing performance.
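
To show why the size of the commitment matters, here is a simplified model that evaluates a few candidate hourly commitments against a usage history. It assumes a single flat discount and ignores how real Savings Plans apply rates per instance type; the function name, the 50% discount, and the usage numbers are all invented for easy arithmetic.

```python
def cost_with_commitment(hourly_on_demand_spend, commitment, discount):
    """Total cost when `commitment` dollars per hour are billed at plan rates.

    The commitment is paid every hour whether it is used or not; usage beyond
    what the commitment covers is billed at the on-demand rate.
    """
    covered_on_demand_value = commitment / (1 - discount)
    total = 0.0
    for spend in hourly_on_demand_spend:
        overflow = max(0.0, spend - covered_on_demand_value)
        total += commitment + overflow
    return total


# Hypothetical on-demand-equivalent spend per hour, and a made-up discount.
usage = [10.0, 10.0, 10.0, 20.0]
DISCOUNT = 0.5
candidates = [0.0, 5.0, 10.0]

costs = {c: cost_with_commitment(usage, c, DISCOUNT) for c in candidates}
best_commitment = min(costs, key=costs.get)
print(costs)
print(f"Cheapest commitment: ${best_commitment:.2f}/hour")
```

In this toy example, committing to the steady baseline beats both no commitment and a commitment sized for the peak, which is why reviewing usage patterns before committing pays off.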

#3. Choose the right optimized resources

Choosing the right resources ensures workloads run on ideal instance types and services to meet performance needs cost-efficiently. Proper resource selection helps minimize waste and prevents you from overspending on unused capacity. Here is how to approach it:

a. Right size the resources

Right-sizing resources is a valuable way to control cloud spending. It helps avoid both under-provisioning, which hurts performance, and over-provisioning, which wastes money.

To successfully right-size resources:

- Analyze current usage metrics and identify underutilized instances.

- Scale instance types based on workload requirements and use auto scaling to adapt to varying workloads dynamically.

- Use AWS cost management tools to monitor and optimize expenses continually.

- Consider RIs for stable workloads to benefit from cost savings.

- Regularly review and adjust resource allocations based on evolving needs.

To streamline the process of right-sizing instances, use AWS Compute Optimizer. It analyzes historical resource utilization patterns and recommends optimal instance types for your workloads. You can balance performance and cost by aligning your resource choices with actual usage.
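
As a simple illustration of the first step (spotting underutilized instances), the sketch below flags instances whose CPU usage stays low on both average and peak. This is not how AWS Compute Optimizer works internally; the thresholds, the function name, and the sample metrics are assumptions chosen for the example.

```python
from statistics import mean


def find_underutilized(cpu_by_instance, avg_threshold=10.0, peak_threshold=40.0):
    """Return instance IDs whose CPU stays below both thresholds (percent)."""
    flagged = []
    for instance_id, samples in cpu_by_instance.items():
        if not samples:
            continue  # no data: do not guess
        if mean(samples) < avg_threshold and max(samples) < peak_threshold:
            flagged.append(instance_id)
    return sorted(flagged)


# Hypothetical CPU utilization samples (percent) per instance.
cpu_samples = {
    "i-web": [35, 60, 55],
    "i-batch": [3, 5, 4],
    "i-spiky": [1, 1, 1, 1, 1, 45],  # low average, but a real peak
    "i-new": [],
}

print(find_underutilized(cpu_samples))
```

Checking the peak as well as the average avoids downsizing an instance that is mostly idle but needs headroom for occasional bursts.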

b. Implement resource tagging

By appropriately tagging resources, you can categorize and track expenses based on business units, projects, or environments. It enables granular cost reporting, facilitating informed decision-making and cost allocation.

Define standardized tag taxonomies upfront for consistency. Enforce comprehensive tagging policies across AWS deployments to maintain visibility. This simplifies tracking expenses associated with specific projects or departments.
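
A tagging policy is easier to enforce when it can be checked automatically. The sketch below audits a set of resources against a required tag list; the taxonomy in `REQUIRED_TAGS`, the helper names, and the resource IDs are hypothetical. Note that AWS tag keys are case-sensitive, so the lowercase comparison here is a simplification you may want to tighten.

```python
REQUIRED_TAGS = {"project", "environment", "cost-center"}  # hypothetical taxonomy


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return required tag keys that are absent or have an empty value."""
    present = {key.lower() for key, value in resource_tags.items() if value}
    return sorted(required - present)


def audit(resources):
    """Map each non-compliant resource ID to its missing tag keys."""
    report = {}
    for resource_id, tags in resources.items():
        gaps = missing_tags(tags)
        if gaps:
            report[resource_id] = gaps
    return report


# Hypothetical resources and tags.
inventory = {
    "i-0abc": {"Project": "checkout", "Environment": "prod", "Cost-Center": "1234"},
    "vol-0def": {"Project": "checkout"},
    "i-0ghi": {"project": "search", "environment": ""},
}

print(audit(inventory))
```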

c. Leverage managed services

AWS managed services, such as Amazon RDS, AWS Lambda, Amazon EKS, Amazon CloudFront, and AWS Fargate, reduce the operational burden by offloading tasks such as database management, machine learning, and analytics to AWS.

For example, AWS Lambda allows you to pay only for the compute time you use rather than for idle or underutilized servers. As your application scales up and down, AWS automatically scales the supporting resources as well.

Similarly, RDS handles the database infrastructure and optimizations so your team doesn’t have to patch or upgrade databases itself.

Still, when opting for managed services, evaluate the performance needs and data volumes of your workloads, and balance the cost savings and performance requirements. Though they simplify operations, some managed services may be more expensive depending on workload specifics.

Simform reduced 30% of data processing costs with AWS managed services

We collaborated with a client to deliver intelligent data solutions for schools. To efficiently process 7-8 GB of daily data, we used AWS Glue and its managed services. The implementation automated 80% of ETL workloads, which reduced data processing costs by 30%.

#4. Optimize storage cost

Efficient storage management ensures you only pay for the necessary resources, avoiding unnecessary expenses. By accurately sizing and selecting the appropriate storage solutions, you can optimize performance and achieve direct cost savings.

a. Analyze S3 usage

First, determine the data types you are storing, frequency of access, and required durability. Categorize data based on access patterns to identify opportunities for optimization.

Amazon S3 offers multiple storage classes designed to address specific use cases. Optimize your storage costs by matching data to the appropriate storage class based on access frequency. For example, consider the Standard storage class for frequently accessed data, Standard-IA (Infrequent Access) for infrequently accessed data, and Glacier for archival data.

Additionally, automate the transition of data between storage classes using S3 lifecycle policies. Identify data that becomes less critical over time and move it to more cost-effective storage classes accordingly. This ensures you only pay for the storage performance required at that time.
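
A lifecycle policy of this kind can be expressed as a small configuration document. The sketch below builds one in the shape accepted by the S3 `PutBucketLifecycleConfiguration` API; the helper name, the `logs/` prefix, and the day counts are illustrative assumptions, and the code only builds the dictionary without calling AWS.

```python
def build_lifecycle_configuration(prefix, ia_after_days, glacier_after_days,
                                  expire_after_days=None):
    """Build a rule that tiers objects to Standard-IA, then Glacier."""
    if ia_after_days < 30:
        # S3 requires objects to be at least 30 days old before moving to Standard-IA.
        raise ValueError("ia_after_days must be at least 30")
    if glacier_after_days <= ia_after_days:
        raise ValueError("glacier_after_days must be later than ia_after_days")
    rule = {
        "ID": f"tier-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_after_days is not None:
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}


# Hypothetical policy: logs move to Standard-IA after 30 days,
# to Glacier after 90, and are deleted after a year.
config = build_lifecycle_configuration("logs/", 30, 90, expire_after_days=365)
print(config)
```

With boto3 installed and credentials configured, the result could be applied with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=config)`.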

b. Optimize Elastic Block Store (EBS) volumes

When using EBS volumes, rightsize capacity to your applications’ performance requirements to avoid overprovisioning. Plus, take advantage of incremental EBS snapshots for backup and recovery.

Choose the EBS volume type based on your workloads’ needs:

| EBS Type | Description | When to Use |
| --- | --- | --- |
| General Purpose SSD | Default EBS volume type for EC2 | Workloads that need a balance of price and performance. |
| Provisioned IOPS SSD | Highest-performance and most expensive SSD option | High-performance workloads that need very low latency and high throughput. |
| Throughput Optimized HDD | HDD at a lower cost than SSD | Sequential workloads such as ETL, data warehouses, log processing, and data analytics. |
| Cold HDD | Lowest-cost HDD | Infrequently accessed workloads such as backups, disaster recovery, and long-term archival storage. |

c. Optimize data transfer charges

Reduce the data transfer charges by establishing a dedicated network connection from your on-premises data center to the AWS cloud with AWS Direct Connect. This reduces your dependence on the public internet connection, thereby lowering data transfer costs and improving network performance.

When migrating large datasets to AWS, choose the most cost-effective transfer method. For instance, you may use AWS Direct Connect for consistent, high-bandwidth throughput on large, regular data transfers. For petabyte-scale one-time migrations, AWS Snowball data transport appliances are cost-effective. AWS Storage Gateway is convenient for syncing smaller data sets and backups.

d. Conduct data de-duplication and clean-up

Identify and eliminate duplicate files or redundant data to avoid unnecessary storage costs. Conduct a thorough audit using AWS tools like Amazon S3 Inventory and AWS Config to spot duplicates. Then implement AWS Lambda functions to remove redundant data automatically.
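
The duplicate-detection step can be sketched as grouping objects by size and ETag from an inventory-style listing. Treat the output as candidates only: an S3 ETag matches the content's MD5 hash only for some uploads (not for multipart or certain encrypted objects), so verify before deleting anything. The function name and the sample listing are hypothetical.

```python
from collections import defaultdict


def find_duplicate_candidates(objects):
    """Group object keys that share the same size and ETag."""
    groups = defaultdict(list)
    for obj in objects:
        groups[(obj["Size"], obj["ETag"])].append(obj["Key"])
    return [sorted(keys) for keys in groups.values() if len(keys) > 1]


# Hand-written sample resembling rows from an S3 Inventory report.
listing = [
    {"Key": "a/report.csv", "Size": 2048, "ETag": "9b2cf535f27731c974343645a3985328"},
    {"Key": "b/report-copy.csv", "Size": 2048, "ETag": "9b2cf535f27731c974343645a3985328"},
    {"Key": "a/summary.csv", "Size": 512, "ETag": "e4da3b7fbbce2345d7772b0674a318d5"},
]

for group in find_duplicate_candidates(listing):
    print("Possible duplicates:", group)
```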

Optimize storage by implementing Amazon S3 Intelligent-Tiering or Glacier for files that are infrequently accessed. Regularly monitor and update your data management strategy to ensure ongoing efficiency.

In addition, focus on creating automated data lifecycle policies to automatically delete or archive outdated, unnecessary data. Regularly clean up obsolete files and datasets. This ensures that you’re paying only for the storage resources that provide value to your organization.

#5. Optimize database cost

Reduce data-related expenses by refining database configurations, indexing, or implementing cost-effective storage options. This minimizes unnecessary spending on high-tier services, while reducing data transfer costs and storage fees.

a. Analyze DynamoDB usage

Start by scrutinizing your current DynamoDB tables and their associated attributes. Identify any over or underutilized capacity or throughput based on the usage patterns to understand peak usage times and fluctuations in demand.

Leverage AWS tools such as CloudWatch and DynamoDB metrics to gain insights into your provisioned capacity usage versus peak demand. Implement auto-scaling for DynamoDB tables to right-size capacities based on fluctuations.
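
To make the comparison of provisioned capacity against peak demand concrete, the sketch below computes average utilization and a suggested provisioned value for a target utilization. It assumes the samples are already expressed as consumed capacity units per second (CloudWatch reports sums per period, which you would divide by the period length first); the function names, the 70% target, and the sample numbers are illustrative.

```python
import math


def average_utilization(consumed_per_second, provisioned):
    """Share of provisioned capacity actually consumed, averaged over samples."""
    return sum(consumed_per_second) / (len(consumed_per_second) * provisioned)


def recommend_provisioned_capacity(consumed_per_second, target_utilization_percent=70):
    """Capacity at which the observed peak sits at the target utilization."""
    peak = max(consumed_per_second)
    return math.ceil(peak * 100 / target_utilization_percent)


# Hypothetical consumed read capacity units per second for one table.
consumed = [40, 55, 63, 42]
PROVISIONED = 400

print(f"Average utilization: {average_utilization(consumed, PROVISIONED):.1%}")
print(f"Suggested capacity:  {recommend_provisioned_capacity(consumed)}")
```

A table running far below its provisioned capacity, as in this made-up sample, is a candidate for a lower baseline with auto-scaling handling the peaks.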

Additionally, optimize data models to reduce the number of read/write operations and minimize storage requirements. Removing unused indexes and optimizing queries can enhance DynamoDB efficiency, reducing costs without compromising performance.

b. Optimize RDS instances

Assess your current RDS instance types, sizes, and configurations in use. Determine if instances are over-provisioned or underutilized based on current workloads and right-size them to optimize expenses for your specific workload.

Consider using Amazon RDS Performance Insights to analyze database performance and identify areas for improvement.

Additionally, use RIs and Savings Plans to further reduce RDS costs by committing to a specific usage level in advance in return for significant discounts compared to on-demand pricing. Regularly review and adjust reserved instance coverage based on evolving usage patterns to maximize savings.

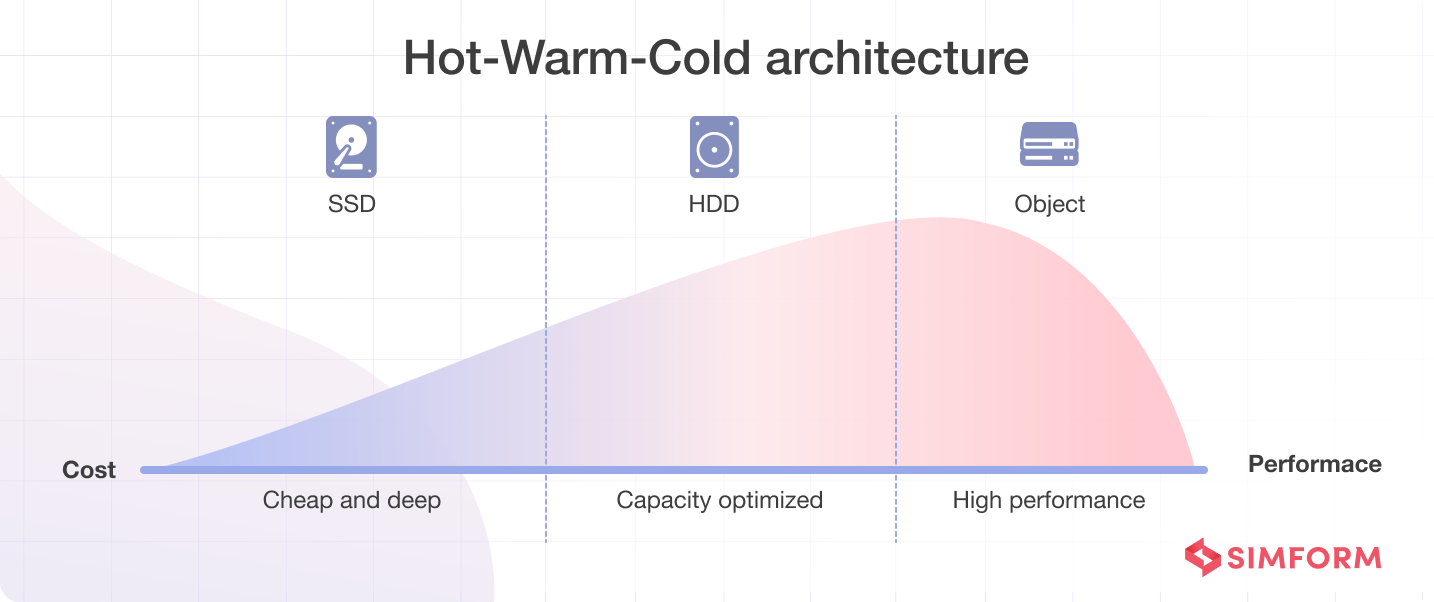

c. Implement hot-warm-cold architecture

The hot-warm-cold architecture strategy involves tiering your data based on usage patterns. This approach optimizes costs by storing data in different tiers with varying performance and cost characteristics.

Hot Tier:

- Place frequently accessed and critical data in the hot tier.

- Use high-performance services like DynamoDB for real-time access to this data.

- Ensure that provisioned capacities match the immediate demands to avoid over-provisioning.

Warm Tier:

- Data is accessed less frequently but requires relatively quick access.

- Use RDS with lower-cost configurations or provisioned instances to store this data.

- Adjust instance sizes based on periodic access patterns to optimize costs.

Cold Tier:

#6. Implement auto-scaling

Auto-scaling allows your infrastructure to automatically adjust the compute resources based on predefined conditions like traffic volume, application performance, or specific time schedules. In short, you can prevent service interruptions during traffic spikes by scaling your application to meet demand.

Here’s how to implement auto-scaling during your AWS migration:

- Configure Auto Scaling Groups (ASGs) based on your application’s requirements

- Use the AWS Management Console or command line interface (CLI) to create launch templates (the recommended successor to launch configurations) defining instance specifications

- Set up scaling policies to dynamically adjust capacity based on metrics like CPU utilization

- Enable health checks to replace unhealthy instances

- Integrate Amazon CloudWatch for monitoring and alarms

- Test the auto-scaling setup thoroughly to validate its responsiveness

- Monitor and adjust configurations as needed
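
The scaling-policy step above can be sketched as a target tracking policy on average CPU utilization. The helper below builds the payload in the shape accepted by the EC2 Auto Scaling `PutScalingPolicy` API; the function name, the group name, and the 50% target are assumptions for illustration, and no AWS call is made.

```python
def build_target_tracking_policy(group_name, target_cpu_percent):
    """Build a target tracking policy that holds average CPU near the target."""
    if not 0 < target_cpu_percent < 100:
        raise ValueError("target_cpu_percent must be between 0 and 100")
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target-{int(target_cpu_percent)}",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": float(target_cpu_percent),
        },
    }


# Hypothetical Auto Scaling group name and target.
policy = build_target_tracking_policy("web-asg", 50)
print(policy["PolicyName"])
```

With boto3 installed and credentials configured, the policy could be attached with `boto3.client("autoscaling").put_scaling_policy(**policy)`.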

#7. Choose the right AWS region

Firstly, consider the geographical location of your users and the AWS regions’ proximity to them. Opting for a region closer to your end-users reduces latency and improves performance and user satisfaction.

Furthermore, compare AWS regional pricing variances stemming from local demand, energy costs and taxes. Some regions may offer lower prices for specific services or instance types, allowing you to allocate resources wisely and reduce overall expenses.

Use the AWS Pricing Calculator to estimate the costs of running your workloads in different AWS regions by inputting details on your expected usage, such as computing, storage, and data transfer needs. This allows you to compare pricing and decide which region best fits your budget and performance needs at the lowest cost.
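
The same comparison can be roughed out in code once you have per-region rates. The sketch below totals compute, storage, and data transfer for two placeholder regions; the `RegionPrices` class, the region names, and all prices are invented, so take real rates from the AWS pricing pages before drawing conclusions.

```python
from dataclasses import dataclass


@dataclass
class RegionPrices:
    compute_per_hour: float      # USD per instance-hour
    storage_per_gb_month: float  # USD per GB-month
    egress_per_gb: float         # USD per GB transferred out


def monthly_cost(prices, instance_hours, storage_gb, egress_gb):
    """Estimate one month of spend for the given usage profile."""
    return (prices.compute_per_hour * instance_hours
            + prices.storage_per_gb_month * storage_gb
            + prices.egress_per_gb * egress_gb)


# Placeholder regions and prices, not real AWS rates.
regions = {
    "region-a": RegionPrices(0.10, 0.023, 0.09),
    "region-b": RegionPrices(0.12, 0.025, 0.08),
}

# Hypothetical usage: two instances running all month, 1 TB stored, 500 GB out.
estimates = {
    name: monthly_cost(p, instance_hours=1460, storage_gb=1000, egress_gb=500)
    for name, p in regions.items()
}
cheapest = min(estimates, key=estimates.get)
for name, cost in estimates.items():
    print(f"{name}: ${cost:,.2f}/month")
print("Cheapest:", cheapest)
```

Price is only one input: latency, service availability, and compliance requirements can outweigh a small monthly difference.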

Also, evaluate the availability of specific services in each region. New services and features often launch in certain regions first and reach others later, as was the case with Amazon Redshift RA3 nodes featuring managed storage. Check the AWS Regional Services list before you commit, because choosing a region with the desired services readily available can eliminate the need for complex workarounds or additional configurations.

Analyze the regulatory and compliance requirements of your business as well. Different regions may have varying data sovereignty laws that could introduce non-compliance risks if not addressed.

Lastly, analyze the demand for resources in each region. High-demand regions may see higher Spot prices and tighter capacity due to resource scarcity. By selecting a region with lower demand, you can take advantage of more stable pricing and avoid inflated costs.

To implement all these strategies, consult an AWS Premier Consulting Partner. With their expertise, they will help you tackle challenges ranging from intricate technicalities to regional complexities and potential cost escalations.

Optimize cloud cost during AWS migration with Simform

As an Advanced Consulting Partner and AWS Migration Competency holder, Simform has the AWS expertise required to assess your current infrastructure, design the right AWS architecture, migrate your workloads, and train your team for managing AWS long-term, while ensuring you achieve faster ROI on AWS.

That’s not all!

By partnering with us, you also gain access to a team of over 200 AWS-certified experts along with financial perks.

Through the AWS Migration Acceleration Program (MAP), we can offer you financial benefits like cash rewards and AWS credits at different phases—assess, mobilize, migrate, and modernize—to make your migration more cost-effective.

To discuss how Simform can execute a fast, seamless and cost-optimized migration, schedule a 30-minute free consultation with our AWS experts.