How do babies learn to walk? Through baby steps. That’s microproductivity: breaking down a task into smaller, manageable chunks. The microservice approach follows the same core concept.

In theory, microservices seem the right choice for most organizations, because they allow teams to break down apps into a suite of smaller services.

The monolith==bad thinking is simplistic, advanced by someone who doesn’t understand the pattern. OTOH, the notion that most monolith implementations in the wild are bad is just accurate observation. Many implementations of most architectures are bad, even microservices 🙂.

— Allen Holub (@allenholub) January 23, 2020

However, everything boils down to how you implement microservices. If you get it right, the results are excellent. If the implementation goes wrong, microservices become nothing more than a failed experiment.

So, how do you get your microservices implementation right?

A surefire way is to learn from peers! Early adopters and market leaders are already leveraging microservices for their development needs. Here, we have cherry-picked the top microservices examples to take inspiration from:

Top microservice examples and lessons learned

#1. Lyft introduced localization of development & automation for improved iteration speeds

Lyft moved to microservices with Python and Go in 2018 by decomposing its PHP monolith. Thanks to the separation of concerns, the migration allowed the company to deploy hundreds of services each day.

However, problems began when the organization grew to more than 1,000 engineers and hundreds of services. Lyft’s productivity took a hit, and it needed a solution that could deliver:

- Efficient localized development

- Isolated testing capabilities

- Testing automation

So, the Lyft engineering team decided to focus on critical touchpoints in the development process instead of relying on the environments. They identified three workflows that needed investment and maintenance to improve.

The first critical workflow was the dev loop.

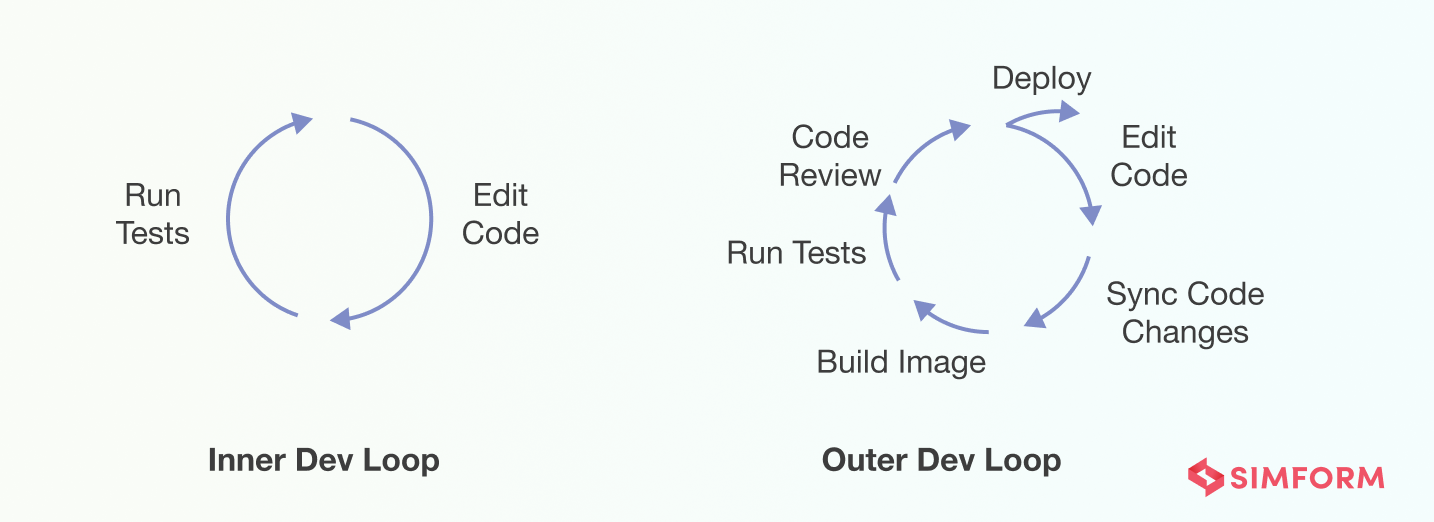

There are two types of dev loops:

The inner dev loop is a quick iteration cycle of making code changes and testing them. A developer repeats this cycle several times before arriving at a release-ready build.

The outer dev loop involves syncing code changes with a remote repository, running tests in CI, and getting the code reviewed before deployment.

Typically, the outer dev loop takes more time than the inner dev loop because developers have to address code review comments. So, for efficient iterative development, Lyft focused on improving the inner dev loop by running it in an isolated environment on the developer’s laptop.

The team used an in-house proxy app that lets developers compose a request through a Typecast code editor and send it to the locally running service. Lastly, Lyft automated end-to-end testing to ship code changes faster.

Lessons from Lyft’s microservice implementation

- Enable development localization and an efficient developer loop to improve iteration time.

- Enable testing automation to improve delivery time for code changes.

#2. Twitter leveraged a decoupled architecture for quicker API releases

Twitter ran its public APIs on the Monorail (a monolithic Ruby on Rails application), which became one of the largest codebases in the world. As a result, it became challenging for Twitter teams to ship updates, so the company migrated to 14 microservices running on Macaw (an internal Java Virtual Machine (JVM)-based framework).

Though the migration to microservices helped teams improve deployment times, it also left Twitter with a disjointed and scattered public API.

Because of the decoupled architecture, services were created individually by teams working on separate projects with little coordination. This led to fragmentation and lower productivity for the development teams.

Twitter needed a solution that would help it iterate quickly and cohesively. Therefore, in 2020, the company decided to release a new public API. It then built a new architecture that uses GraphQL-based internal APIs and scales them to a large number of endpoints.

Twitter also allocated dedicated infrastructure to core services and to endpoint business logic. So, when Twitter’s own clients request data from core services, it is used to render the UI, while a Twitter API query for the same data returns a JSON response. What makes the entire architecture efficient for Twitter is its pluggable platform components, such as resource fields and selections.

Resource fields are atomic pieces of data, such as tweets or users.

Selections are ways to find aggregated resource fields, such as finding the owner of a tweet through a user ID.

Developers at Twitter can plug in such components while the platform takes care of the HTTP needs of the APIs. As a result, they can quickly release new APIs without creating new HTTP services.
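To make the idea of pluggable components more concrete, here is a minimal Python sketch, not Twitter’s actual platform (which is GraphQL-based and far larger). Teams register resource fields and selections with a shared platform object, and a single generic handler takes care of routing, status codes, and JSON serialization for every endpoint. `ApiPlatform`, its decorators, and the in-memory data are all hypothetical.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical in-memory data standing in for core services.
USERS = {"u1": {"id": "u1", "name": "alice"}}
TWEETS = {"t1": {"id": "t1", "text": "hello", "author_id": "u1"}}


class ApiPlatform:
    """Hypothetical platform: teams plug in components, the platform owns HTTP concerns."""

    def __init__(self) -> None:
        self._resources: Dict[str, Callable[[str], Any]] = {}
        self._selections: Dict[str, Callable[[str], Any]] = {}

    def resource(self, name: str):
        # Register an atomic resource field fetcher, e.g. a tweet by ID.
        def register(fn: Callable[[str], Any]) -> Callable[[str], Any]:
            self._resources[name] = fn
            return fn
        return register

    def selection(self, name: str):
        # Register a way to find resources, e.g. the owner of a tweet.
        def register(fn: Callable[[str], Any]) -> Callable[[str], Any]:
            self._selections[name] = fn
            return fn
        return register

    def handle(self, path: str) -> Tuple[int, str]:
        # Shared request handling: routing, status codes, and JSON live here,
        # so no team has to build its own HTTP service.
        parts = path.strip("/").split("/")
        if len(parts) != 2:
            return 400, json.dumps({"error": "bad path"})
        kind, arg = parts
        fn = self._resources.get(kind) or self._selections.get(kind)
        if fn is None:
            return 404, json.dumps({"error": f"unknown endpoint {kind}"})
        result = fn(arg)
        if result is None:
            return 404, json.dumps({"error": "not found"})
        return 200, json.dumps({"data": result})


platform = ApiPlatform()


@platform.resource("tweets")
def get_tweet(tweet_id: str):
    return TWEETS.get(tweet_id)


@platform.selection("tweet_owner")
def tweet_owner(tweet_id: str):
    tweet = TWEETS.get(tweet_id)
    return USERS.get(tweet["author_id"]) if tweet else None
```

Adding a new endpoint in this sketch means writing one decorated function; no new HTTP service is created, which mirrors the benefit described above.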

Lessons from Twitter’s microservice implementation

- Manage microservice fragmentation with a common set of internal APIs that scales to a large number of endpoints.

- Give core services and endpoint business logic dedicated resources to keep the independent microservice approach efficient.

- Speed up API releases by using pluggable components.

#3. Capital One migrated to AWS and enabled containerization of services

Capital One is a leading financial services provider in the US that offers intelligent and seamless user experiences. However, despite being a cloud-first bank, Capital One needed a reliable cloud-native architecture to release apps faster and to integrate different services, including:

- Cloud-based call center

- Data analytics

- Machine learning algorithms

For its cloud migration, Capital One chose AWS. It not only migrated its infrastructure but also integrated several AWS services, such as:

- Amazon Connect for a cloud-based call center

- Amazon S3 to handle intensive workload needs for machine learning integrations

- Amazon ECS to manage Docker containers without hassle

Capital One reduced the time needed to build new application infrastructure by 99% with the migration to AWS. Similarly, containerizing its microservices helped Capital One meet its decoupling needs.

However, it was a complex route. Initially, the teams used open-source tools like Consul, Nginx, and Registrator for dynamic service discovery and context-based routing of services. Unfortunately, this added complexity instead of simplifying deployments.

Eventually, they used Docker and Amazon ECS to containerize the microservices. Docker helped them automate application packaging, which simplified containerization, while ECS provided a platform to manage all the containers.
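For readers unfamiliar with the terms, here is a toy Python sketch of what dynamic service discovery and context-based routing do. It is not how Consul, Nginx, Registrator, or ECS are implemented: `ServiceRegistry` and `PathRouter` are hypothetical names, and real tools add health checks, TLS, and persistence. Instances register and deregister as containers start and stop, and requests are routed by path prefix, round-robin across live instances.

```python
import itertools
from typing import Dict, Iterator, List, Optional, Tuple


class ServiceRegistry:
    """Hypothetical registry: container instances register on start, deregister on stop."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._cursors: Dict[str, Iterator[str]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)
        self._reset_cursor(service)

    def deregister(self, service: str, address: str) -> None:
        instances = self._instances.get(service, [])
        if address in instances:
            instances.remove(address)
            self._reset_cursor(service)

    def _reset_cursor(self, service: str) -> None:
        # Rebuild the round-robin iterator whenever the set of instances changes.
        self._cursors[service] = itertools.cycle(list(self._instances[service]))

    def next_instance(self, service: str) -> Optional[str]:
        # Round-robin over currently registered instances; None if there are none.
        if not self._instances.get(service):
            return None
        return next(self._cursors[service])


class PathRouter:
    """Context-based routing: choose a backend service from the request path."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self._registry = registry
        self._rules: List[Tuple[str, str]] = []  # (path prefix, service name)

    def add_rule(self, prefix: str, service: str) -> None:
        self._rules.append((prefix, service))
        # Check longer (more specific) prefixes first.
        self._rules.sort(key=lambda rule: len(rule[0]), reverse=True)

    def route(self, path: str) -> Optional[str]:
        for prefix, service in self._rules:
            if path.startswith(prefix):
                return self._registry.next_instance(service)
        return None
```

Keeping a registry and routing layer like this consistent by hand is the kind of operational burden that ECS, together with Application Load Balancers, takes over.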

Thanks to this new arrangement, Capital One teams delivered applications within 30 minutes with ECS and Application Load Balancers.

Lessons learned from Capital One’s microservice implementation

- Employ microservice containerization to improve time-to-market, flexibility, and portability.

- Utilize Docker to further manage containers and automate deployments.

- Leverage Amazon ECS as a platform to manage, scale, and schedule container deployments.

#4. Uber’s DOMA architecture helped improve productivity

Uber, one of the early adopters of microservices, wanted to decouple its architecture to support the scaling of services. The company scaled to 2,200 critical microservices with a decoupled architecture, improving the system’s flexibility. However, the decoupled architecture had its tradeoffs.

When Uber’s engineering organization grew to thousands of engineers, finding the sources of errors became difficult. Engineers had to work through 50 services and 12 engineering teams to find the root cause of a single problem, which slowed productivity. Even a simple feature required engineers to work across multiple teams and services.

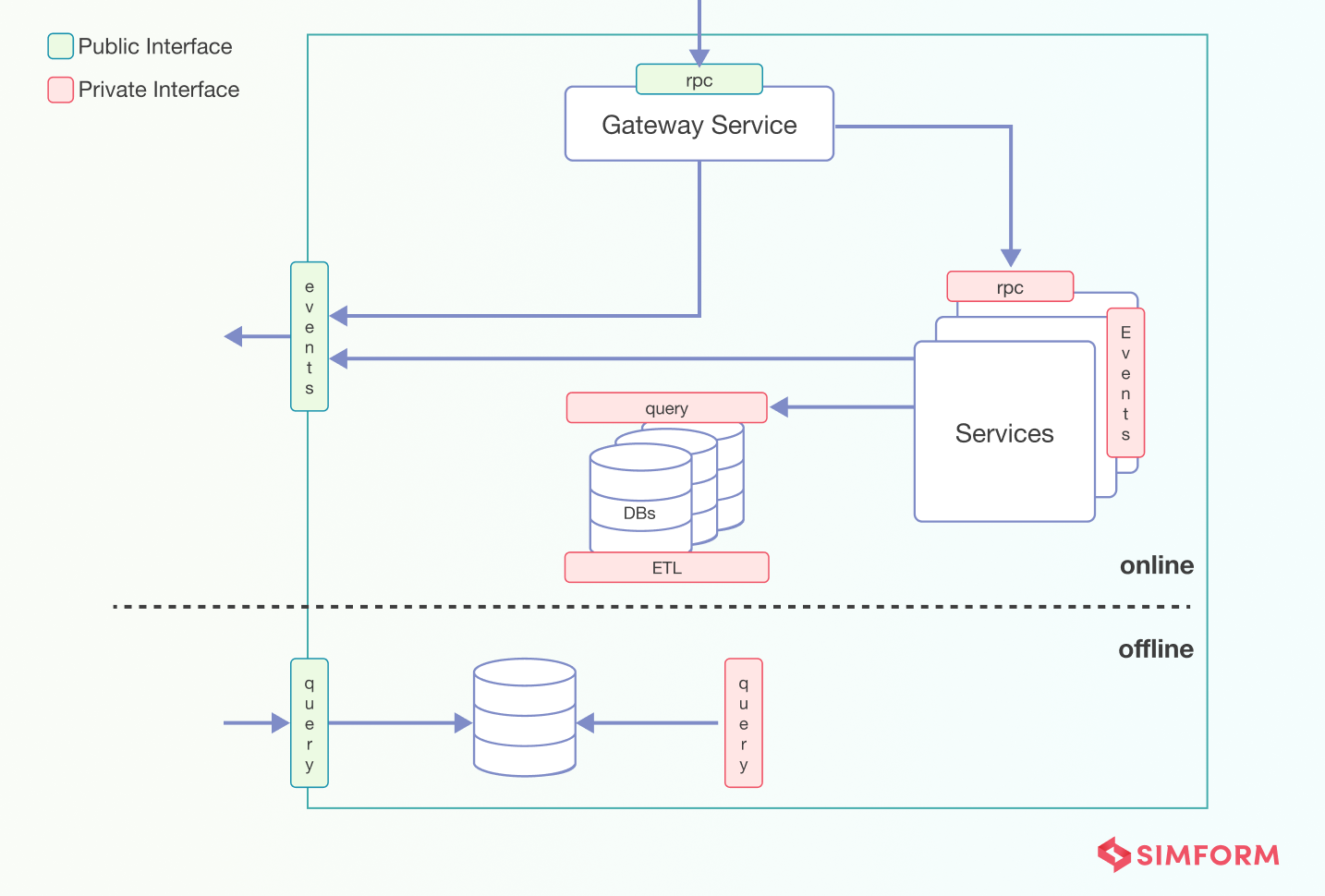

Therefore, Uber used Domain-Oriented Microservice Architecture (DOMA) to build a structured set of flexible and reusable layered components. The architecture has four main components:

- Domain is a collection of microservices with shared business logic. For example, Uber has a maps domain, a fare service domain, and a matching platform domain, each containing several microservices that share the same domain logic.

- Layer design is dependency management at scale. It allows Uber to decide which services can call which other services.

- Gateways act as a single point of entry into domains. Their effectiveness depends on the API design, and they provide significant benefits to upstream services: a gateway internalizes domain-specific details like ETL pipelines, data tables, and services, so other domains are exposed only to its APIs, messaging events, and queries.

- Extensions are a mechanism that extends the functionality of an underlying service without making any changes to its implementation.
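The layer-design and gateway rules can be expressed as a simple call-permission check. The Python sketch below is illustrative only: the layer names loosely follow Uber’s public DOMA write-up, while `Service`, `call_allowed`, and the example services are hypothetical. A service may depend only on its own layer or lower (more general) layers, and calls into another domain must go through that domain’s gateway.

```python
from dataclasses import dataclass

# Illustrative layer ordering, from most general (index 0) to most specific.
LAYERS = ["infrastructure", "business", "product", "presentation", "edge"]


@dataclass(frozen=True)
class Service:
    name: str
    domain: str
    layer: str
    is_gateway: bool = False


def call_allowed(caller: Service, callee: Service) -> bool:
    """Return True if `caller` may call `callee` under these hypothetical rules."""
    # Layer design: dependencies may only point to the same or a lower layer.
    if LAYERS.index(callee.layer) > LAYERS.index(caller.layer):
        return False
    # Gateways: calls into another domain must enter through its gateway.
    if caller.domain != callee.domain and not callee.is_gateway:
        return False
    return True
```

For example, a presentation-layer rider service may call the maps domain’s gateway but not an internal maps service directly, and the maps gateway may not call back up into the presentation layer.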

By implementing the DOMA architecture, Uber reduced the feature onboarding time by 25-30% and classified 2200 microservices into 70 domains.

Lessons learned from Uber’s microservice implementation

- DOMA can help reduce feature onboarding time by organizing microservices into domains based on features.

- Expose each domain’s shared business logic through a dedicated gateway.

#5. A two-layer API structure that helped Etsy improve rendering time

Etsy’s teams were struggling to reduce the time it takes for the user’s device screen to update. From improving the platform’s extensibility for mobile app features to reducing processing time, the company needed a solution that would provide a seamless user experience.

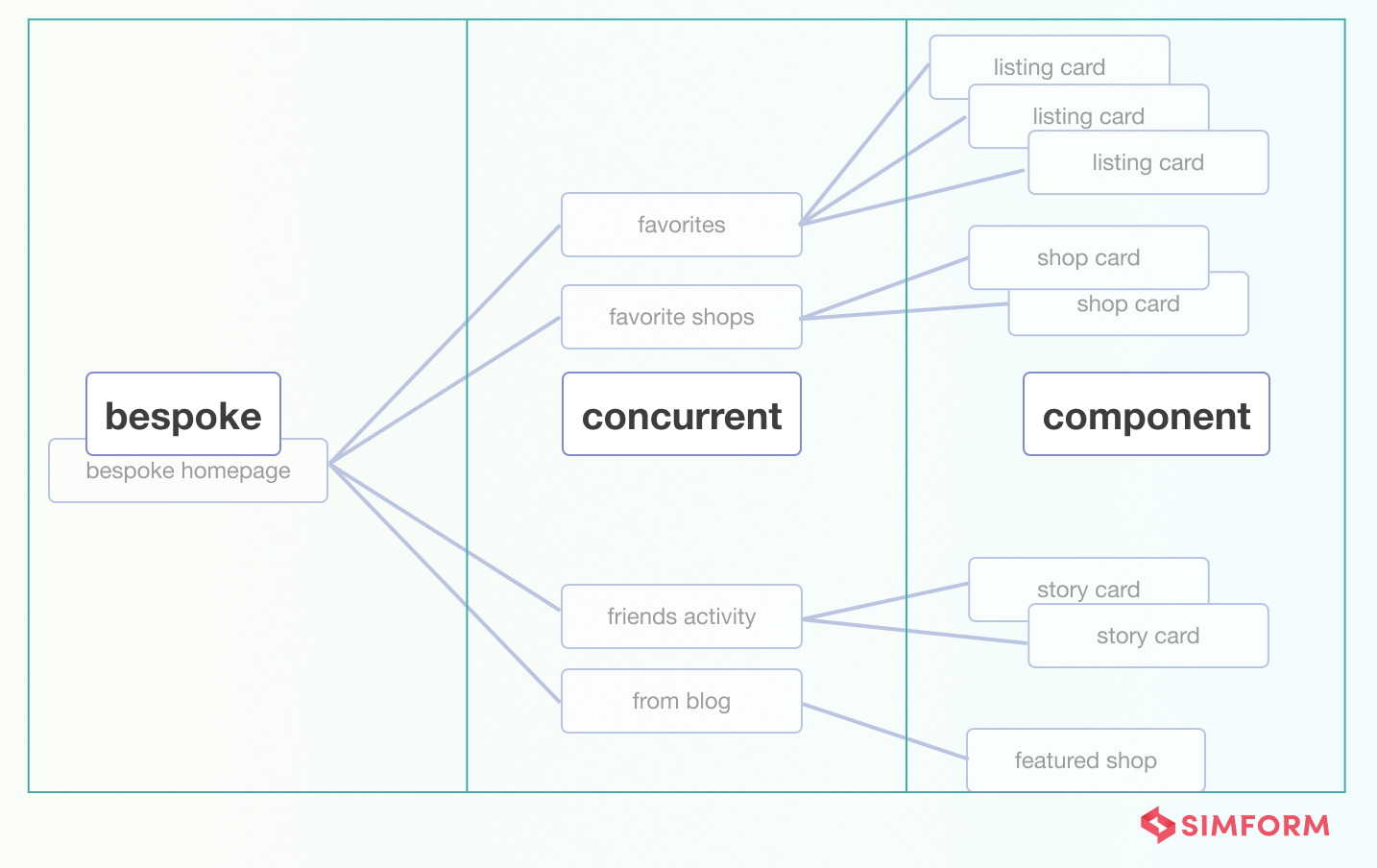

Etsy implemented a two-layer API with meta-endpoints for better concurrency and processing time.

The upper API layer handles server-side composition of view-specific resources, which enabled the creation of a multi-level tree architecture. Its concurrent meta-endpoints compose custom views for the website and mobile apps.

These meta-endpoints call the atomic component endpoints. Only the non-meta endpoints at the lowest level communicate with the database. However, this architecture alone was not enough, and Etsy’s concurrency problem remained unresolved.

So, Etsy issued parallel HTTPS requests with cURL, using a custom Etsy patch to libcurl, to build a hierarchy of request calls across the network.

The multi-level tree architecture has a microservices approach as its base, where several endpoints are aggregated into decoupled meta-endpoints. Some meta-endpoints handle the server-side components, and non-meta endpoints interact with the database to fetch or store data.
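The meta-endpoint idea boils down to fanning out to atomic endpoints concurrently and composing the results. Etsy used parallel cURL requests; the sketch below swaps in Python’s `asyncio` to show the same pattern, with simulated endpoints (`listing_endpoint`, `shop_endpoint`, `reviews_endpoint`) that are hypothetical stand-ins for real HTTP calls.

```python
import asyncio
import time
from typing import Any, Dict


# Hypothetical atomic component endpoints. In a real system each would be an
# HTTP call to a non-meta endpoint that talks to the database.
async def listing_endpoint(listing_id: int) -> Dict[str, Any]:
    await asyncio.sleep(0.1)  # simulated network + database latency
    return {"id": listing_id, "title": f"Listing {listing_id}"}


async def shop_endpoint(shop_id: int) -> Dict[str, Any]:
    await asyncio.sleep(0.1)
    return {"id": shop_id, "name": f"Shop {shop_id}"}


async def reviews_endpoint(listing_id: int) -> Dict[str, Any]:
    await asyncio.sleep(0.1)
    return {"listing_id": listing_id, "count": 3}


async def listing_page_meta_endpoint(listing_id: int, shop_id: int) -> Dict[str, Any]:
    """Meta-endpoint: compose a view-specific response from atomic endpoints.

    The component calls run concurrently, so total latency is close to the
    slowest call rather than the sum of all calls.
    """
    listing, shop, reviews = await asyncio.gather(
        listing_endpoint(listing_id),
        shop_endpoint(shop_id),
        reviews_endpoint(listing_id),
    )
    return {"listing": listing, "shop": shop, "reviews": reviews}


if __name__ == "__main__":
    start = time.perf_counter()
    page = asyncio.run(listing_page_meta_endpoint(42, 7))
    print(page, f"{time.perf_counter() - start:.2f}s")
```

Because the three simulated calls overlap, the meta-endpoint returns in roughly 0.1 seconds instead of 0.3. A meta-endpoint can itself be called by a higher-level meta-endpoint, which is how the multi-level tree forms.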

This solution helped Etsy achieve a 1000 ms time to glass. Simply put, Etsy’s pages render and become visible on the user’s screen within one second.

Lessons learned from Etsy’s microservice implementation

- API-first architecture improves processing time for user requests.

- Combine microservices with decoupled meta-endpoints to improve server-side performance.

#6. PayPal built an open-source framework to accelerate microservices adoption

In 2007, PayPal’s teams were facing massive issues with monolithic applications, which they could deploy only once a month.

Some of the issues they faced were:

- Releases were only possible during off-peak hours

- Debugging was difficult

- All applications had to be deployed at once

First, they started structuring the releases to optimize deployments and developed small apps that could be deployed faster. However, as the number of applications grew, they became difficult to manage, even though each one was small.

PayPal accelerated its microservices adoption by developing an open-source framework called “Kraken.” Built on the Express.js framework, it enabled teams to split up configurations and keep code organized.

Kraken.js helped PayPal develop microservices quickly, but the company needed a robust solution on the dependency front. So, it introduced “Altus,” which provided tools to push deployment-ready applications without the hassle of dependency management.

Finally, PayPal created a common platform for all of its services: PayPal as a Service (PPaaS).

Rather than using different sets of internal and external APIs, PPaaS used REST for all communication between microservices, both internal and external. With PPaaS, PayPal published more than 700 APIs and 2,500 microservices.

Lessons learned from Paypal’s microservice implementation

- Individual services and automation can help improve release times for services.

- Building in-house tools, such as a framework that splits configurations and keeps code organized, can accelerate microservice implementations.

#7. Goldman Sachs chose containerization for deployment automation and higher availability

While speed was the critical objective for Goldman Sachs, another essential aspect was monitoring containers and the data exchanged between different services. Also, with its software-centric business operations, Goldman Sachs required higher availability and performance from its systems.

Goldman Sachs leveraged containers as a lightweight alternative to virtual machines and enabled deployment automation. Containers give microservices a server-agnostic environment, which makes them highly available and horizontally scalable.

With containers, Goldman Sachs could rapidly make new software iterations and reduce the provisioning time from hours to seconds. It also helped them optimize infrastructure utilization, automate business continuity, improve DevOps efficiency, and manage infrastructure updates.

While containers were an excellent solution for higher performance, quicker releases, and higher availability, they needed a reliable tool for monitoring microservices. Therefore, they used a telemetry-type tool that helped monitor network connections across clouds, regions, data centers, and entities.

It also enabled Goldman Sachs to monitor and identify which containers interact with each other the most. This data helped them isolate applications and observe network connections. They were also able to identify any anomaly in the network or a rogue connection, troubleshoot them, and maintain availability.
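The “which containers talk to each other the most” analysis can be sketched with a few lines of Python over connection records. This is not Goldman Sachs’s telemetry tool; the `(source, destination)` record format, the allowlist, and the function names are assumptions made for illustration.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

# Hypothetical record format: (source container, destination container).
Connection = Tuple[str, str]


def top_talkers(connections: Iterable[Connection], n: int = 3) -> List[Tuple[Connection, int]]:
    """Count observed connections per container pair and return the busiest pairs."""
    return Counter(connections).most_common(n)


def rogue_connections(connections: Iterable[Connection], allowed: Set[Connection]) -> Set[Connection]:
    """Return connections that are not on the expected allowlist."""
    return {conn for conn in connections if conn not in allowed}
```

The busiest pairs point to applications that could be isolated together, while anything outside the allowlist is a candidate rogue connection to investigate.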

Lessons learned from Goldman Sachs’s microservice implementation

- Containerization of microservices for deployment automation and reduced downtime is a good practice.

- You can build a custom telemetry-like tool to monitor communications between containers for higher security.

#8. Reddit applied deduplication approach to solve its caching problems

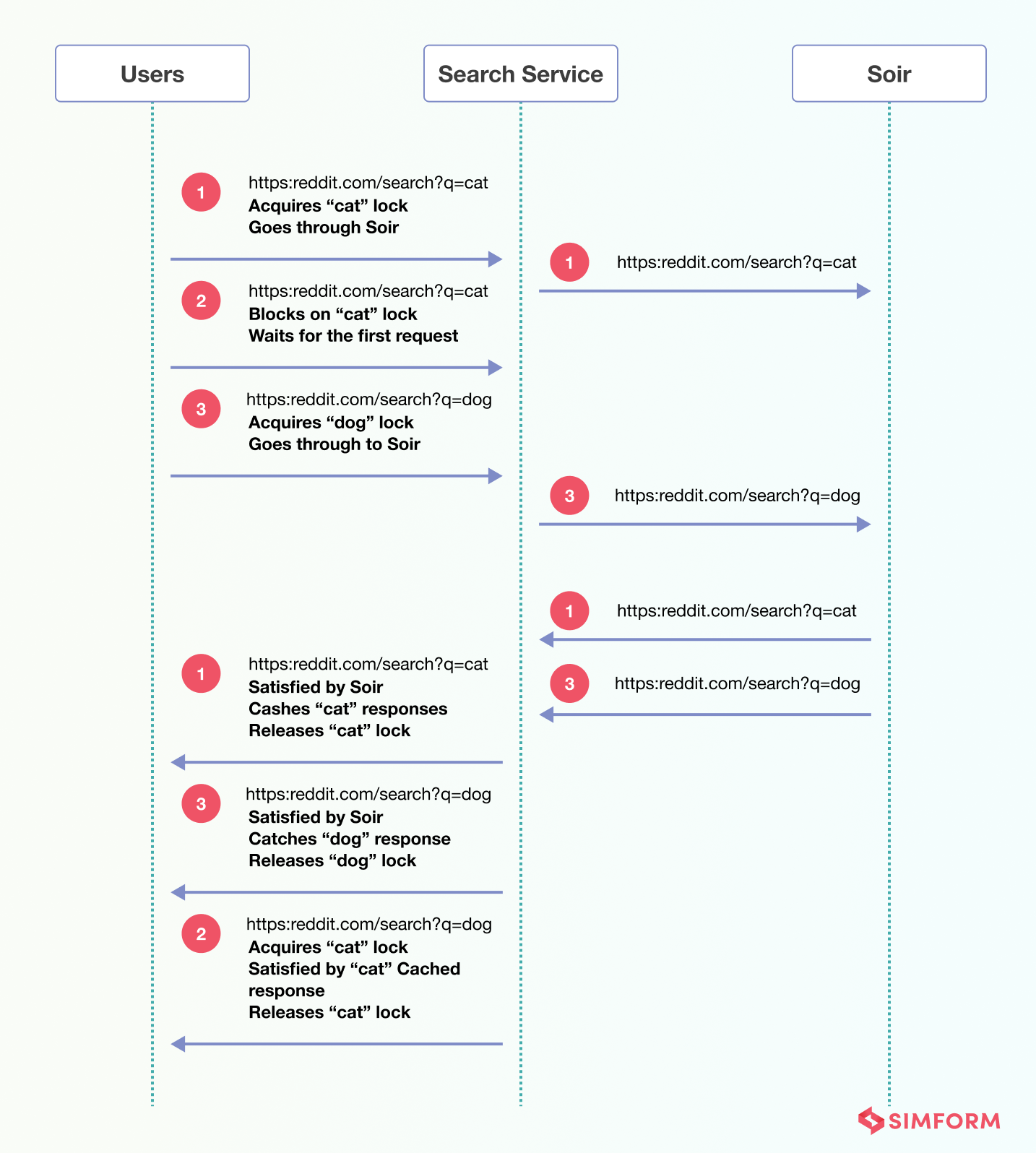

Reddit has a response cache at the gateway level with a TTL (time-to-live). Imagine Reddit is down for longer than the predefined TTL, so the response cache has been flushed. When the site recovers from this failure, it gets overwhelmed with duplicate requests because there is no cached response to serve them.

These requests hit the underlying databases, microservices, and search engines simultaneously, creating a “three stooges” problem (a form of thundering herd). As a result, the underlying architecture gets flooded with requests that would otherwise be served from the cache during normal operations.

The Reddit team deduplicated requests and cached responses at the microservice level. Deduplication means ensuring that identical requests are executed one at a time rather than concurrently. It is also known as request collapsing or coalescing.

This approach aimed to reduce concurrent request execution, which would otherwise overwhelm the underlying architecture. However, even with fewer concurrent executions, Reddit still needed a web stack that handles concurrency. Here, Reddit used Python 3, Baseplate, and gevent, a coroutine-based Python networking library.

The first step towards deduplication is creating a unique identity for each request, which Reddit achieved through hashing.

Next, Reddit built a decorator that ensures no two identical requests are executed concurrently. Finally, it used a caching decorator that uses the request hash as the cache key: on a cache hit, it returns the stored response; on a miss, it executes the request and stores the response for subsequent requests.

Lessons learned from Reddit’s microservice implementation

- Deduplicating requests and caching responses at the microservice level can reduce the load on the underlying architecture.

- Reduce concurrent processing of duplicate requests by giving each request a unique identity through hashing.

#9. Lego went serverless with a set-pieces approach to microservices

Everyone loves Lego, and just like its block-based toys, its backend needed a solution with two primary advantages:

- Value acceleration: Lego wanted to accelerate iterative development and scale its delivery of business value.

- Technology acceleration: Lego was looking for a resilient and fault-tolerant architecture to power its website.

Apart from this, Lego also wanted technical agility, which meant the architecture should provide higher extensibility, flexibility, and upgradability.

Lego decided to go serverless for both value and technology acceleration. However, adopting serverless was not a piece of cake, as Lego needed to make sure it brought technical agility, engineering clarity, and business visibility.

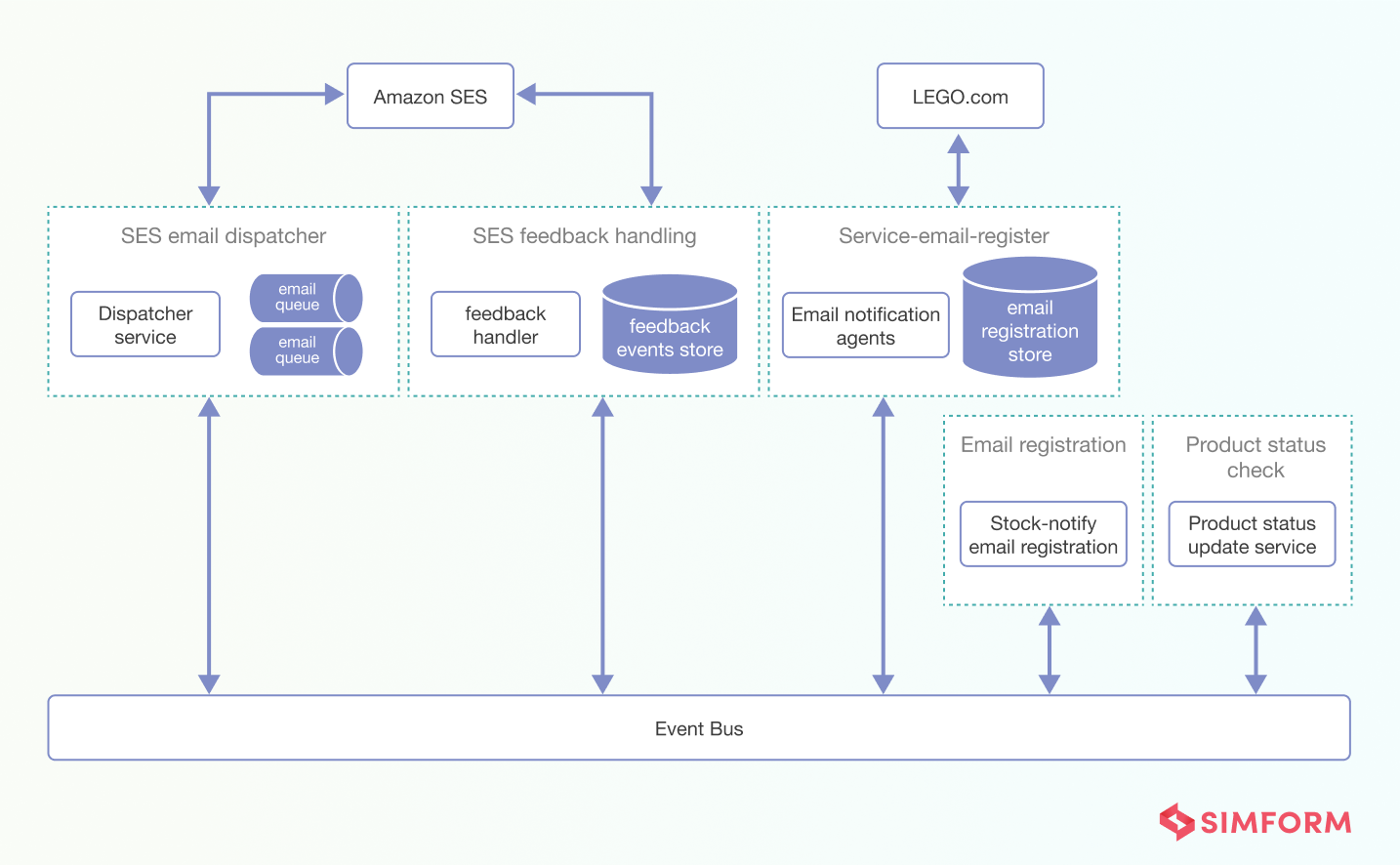

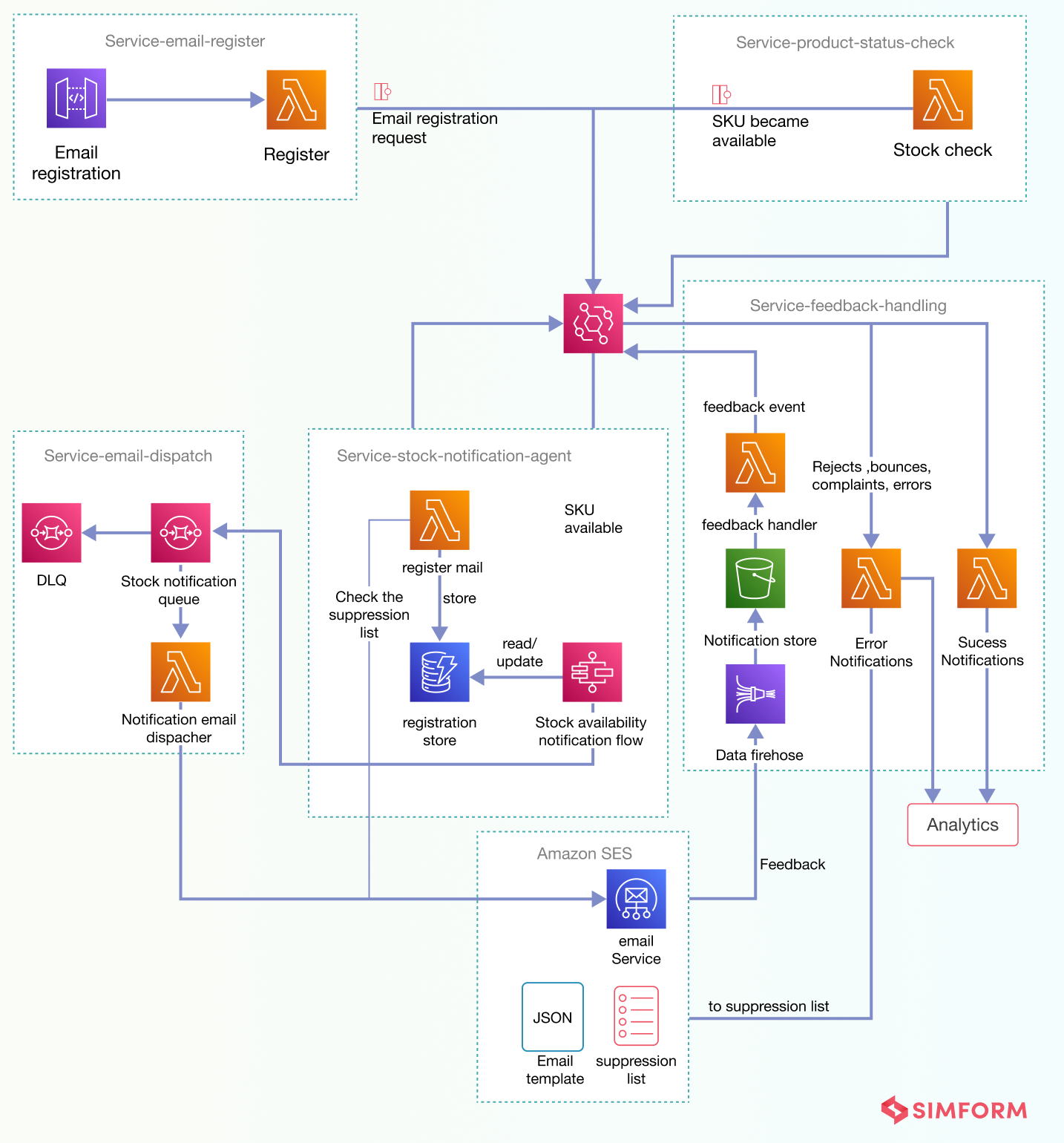

So, Lego used an approach known as “Solution Design,” which helps translate products into an architectural visualization of granular microservices. With this approach, Lego designed a serverless, event-driven application that uses Amazon EventBridge as its event bus.

In an event-driven architecture, applications are triggered by events managed through an event bus. The event bus evaluates each event against predefined rules, and if an event matches a rule’s criteria, the corresponding target is triggered.

The event bus allows Lego to route each type of event to the environment required by downstream analytics services.
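The rule-matching behavior of an event bus can be sketched in a few lines. The Python below is a simplified, in-memory imitation of EventBridge-style event patterns (each pattern leaf lists the allowed values, and nested dictionaries match nested fields); it does not call AWS, and `EventBus`, `matches`, and the example event sources are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Pattern = Dict[str, Any]


def matches(pattern: Pattern, event: Event) -> bool:
    """Simplified EventBridge-style matching.

    Each leaf in the pattern is a list of allowed values; nested dicts match
    nested fields. Event fields not mentioned in the pattern are ignored.
    """
    for field, expected in pattern.items():
        if field not in event:
            return False
        value = event[field]
        if isinstance(expected, dict):
            if not isinstance(value, dict) or not matches(expected, value):
                return False
        elif value not in expected:
            return False
    return True


class EventBus:
    """Hypothetical in-memory event bus: rules route matching events to targets."""

    def __init__(self) -> None:
        self._rules: List[Tuple[Pattern, Callable[[Event], None]]] = []

    def add_rule(self, pattern: Pattern, target: Callable[[Event], None]) -> None:
        self._rules.append((pattern, target))

    def put_event(self, event: Event) -> int:
        """Deliver the event to every target whose rule matches; return the count."""
        delivered = 0
        for pattern, target in self._rules:
            if matches(pattern, event):
                target(event)
                delivered += 1
        return delivered
```

One event can fan out to several targets, for example an order-processing function and an analytics consumer, without the producer knowing about either.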

Lessons learned from Lego’s microservice implementation

- Adopt serverless with Lego’s ‘set-pieces’ approach to build an agile system.

- Use the Solution Design approach to visualize granular microservices for improved implementation.

#10. Gilt mitigated overloaded database and long integration cycles with Java Virtual Machine (JVM)

Gilt is one of the major eCommerce platforms that follow the flash-sale business model. Its initial web app was built with Ruby on Rails, Postgres, and a load balancer.

In 2009, Gilt realized that it needed to solve several problems with its architecture, which could not cope with massive traffic. At the time, Gilt was dealing with thousands of Ruby processes, an overloaded Postgres database, 1,000 models/controllers, and a long integration cycle.

Coping with daily traffic peaks, development monoliths, and deployment delays was difficult for Gilt. As a result, the company chose to move towards microservices based on the JVM (Java Virtual Machine).

Gilt adopted the JVM because of its compatibility and because in-house developers were already familiar with the Java language.

Gilt used microservices along with Postgres and Voldemort within the JVM environment. It helped the company improve the stability and concurrency of the system, but development issues were still prevalent.

So, Gilt teams decided to double down on microservices adoption, growing from ten services to 400 for their web apps. JVM-based microservices also solved 90% of Gilt’s scaling problems during flash sales.

Lessons learned from Gilt’s microservice implementation

- Choose an environment that is familiar to your in-house teams to deploy microservices.

- Combine microservice deployments with an object-relational database system like Postgres; this helped Gilt solve 90% of its scaling problems.

#11. Nike’s configuration and code management issues

Nike had several problems with its architecture: it had to manage 400,000 lines of code and 1.5 million lines of test code. In addition, development cycles were delayed by 5-10 days, and the database suffered from configuration drift.

The company was also struggling with snowflake servers that needed manual configuration, which took more time and effort. Furthermore, even minor changes in the architecture or database had a high impact on operations.

Nike first switched to the phoenix server pattern and a microservice architecture to reduce development time. It used immutable deployment units with the phoenix server pattern to reduce configuration drift: the pattern allows Nike teams to create a new server from a common image rather than modifying the original server.
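The phoenix server pattern can be illustrated with immutable data types. In this Python sketch, which is an illustration rather than Nike’s tooling, a server is fully defined by a frozen, versioned `Image`, and a deployment builds a brand-new fleet from the new image instead of modifying existing servers. `Image`, `Server`, and `deploy` are hypothetical names.

```python
from dataclasses import FrozenInstanceError, dataclass, replace
from typing import List


@dataclass(frozen=True)
class Image:
    """An immutable, versioned server image: everything a server is built from."""
    version: str
    app_build: str
    db_config: str


@dataclass(frozen=True)
class Server:
    """A server is fully defined by the image it was created from."""
    server_id: str
    image: Image


def deploy(fleet: List[Server], new_image: Image) -> List[Server]:
    """Phoenix-style deploy: replace every server with a fresh one built from new_image.

    Existing servers are never patched in place, so every server in the new
    fleet matches the image exactly and manual configuration drift has
    nowhere to build up.
    """
    return [Server(f"{new_image.version}-{i}", new_image) for i in range(len(fleet))]


if __name__ == "__main__":
    v1 = Image(version="v1", app_build="build-101", db_config="pool=20")
    fleet = [Server(f"v1-{i}", v1) for i in range(3)]
    # A change means baking a new image, not editing a running server.
    v2 = replace(v1, version="v2", app_build="build-102")
    fleet = deploy(fleet, v2)
    print([s.server_id for s in fleet])  # ['v2-0', 'v2-1', 'v2-2']
    try:
        fleet[0].image = v1  # in-place modification is rejected
    except FrozenInstanceError:
        print("servers are immutable")
```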

Furthermore, Nike chose Cassandra to leverage its shared-nothing design and data clustering.

A shared-nothing architecture (SNA) suits distributed systems in which microservices have no dependencies on each other, so each service can keep operating even if another one fails.

This helped Nike create a fault-tolerant system where a single modification cannot affect the entire operation. Thanks to immutable deployment units, Nike reduced its 400,000 lines of code to 700-2,000 lines per project.

The data clustering approach with SNA-based microservices helped Nike avoid a single point of failure and create a fault-tolerant system.

Lessons learned from Nike’s microservice implementation

- Leverage a shared-nothing design to create a fault-tolerant system for your business.

- Build a distributed system with a data clustering approach and immutable units to reduce the codebase size.

#12. Groupon built a reactive microservices solution to cope with the peak traffic

Groupon’s outreach platform was a monolithic Ruby on Rails application that was later overhauled and rebuilt on Java. This step presented a new set of challenges for Groupon, such as slower updates, poor scalability, and error-prone systems.

Especially during the flash sales like Black Friday or Cyber Monday, such a platform could not cope with peak traffic.

Groupon teams decided to break their monoliths into reactive microservices, which offer an isolation and autonomy of services that a monolithic architecture cannot provide.

In addition, reactive microservices have a single responsibility and can be upgraded more frequently without disturbing the system’s operations.

The best part of reactive microservices is the ability to add resources or remove instances according to scaling needs. Further, Groupon leveraged the Akka and Play frameworks to achieve the following objectives:

- Handle millions of concurrent requests in a stateless manner.

- Use underlying microservice architecture with asynchronous application layer support for higher uptime and better scalability.

- Integration with other database technologies like NoSQL, messaging systems, and others.

- Reduced time to market with higher reliability.
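Akka builds on the actor model: each actor owns its state, receives messages through a mailbox, and processes them one at a time, and scaling can be as simple as running more actor instances. The Python sketch below imitates that idea with `asyncio` queues instead of Akka, so it shows the model, not Groupon’s implementation; `DealRequestActor` and `dispatch` are hypothetical.

```python
import asyncio
from typing import List, Optional


class DealRequestActor:
    """Minimal actor: private state, a mailbox, and one message handled at a time."""

    def __init__(self) -> None:
        self.mailbox: "asyncio.Queue[Optional[str]]" = asyncio.Queue()
        self.processed = 0  # state owned by this actor only; nothing is shared

    async def run(self) -> None:
        while True:
            message = await self.mailbox.get()
            if message is None:  # "poison pill" message: stop this actor
                return
            # Real work would be non-blocking I/O; sleep(0) just yields control.
            await asyncio.sleep(0)
            self.processed += 1


async def dispatch(requests: List[str], instances: int) -> List[int]:
    """Send requests round-robin to `instances` actors; return per-actor counts."""
    actors = [DealRequestActor() for _ in range(instances)]
    tasks = [asyncio.create_task(actor.run()) for actor in actors]
    for i, request in enumerate(requests):
        actors[i % instances].mailbox.put_nowait(request)  # fire-and-forget send
    for actor in actors:
        actor.mailbox.put_nowait(None)
    await asyncio.gather(*tasks)
    return [actor.processed for actor in actors]
```

Changing the `instances` argument scales the number of actors up or down without touching the handling logic, which is the property the Groupon section highlights.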

Groupon was able to regularly handle more than 600,000 requests per minute. Mission-critical marketing campaigns can now be delivered within hours, even during flash sales with 7-10x peak traffic.

Lessons learned from Groupon’s microservice implementation

- Use the single responsibility principle with reactive microservices for enhanced concurrency and scalability.

- Leverage the underlying microservice architecture with an asynchronous layer for higher app uptime.

Optimize & scale your microservices with Simform!

Every organization has a different set of engineering challenges. It’s not just about achieving higher availability or scaling resources as per peak traffic; your architecture should be agile and flexible to cope with the ever-changing market.

While these examples are a great inspiration, you need practical solutions to overcome your engineering challenges. Simform’s advanced engineering teams can help you:

- Break monolithic apps into microservices

- Enhance load balancing and orchestration of services

- Build autonomous services that can be deployed independently

- Upgrade flexibly without downtime

- Iterate quicker without the burden of dependency management

So, if you are looking to adopt a microservices architecture, get in touch with us for tailor-made solutions for your organization.