While on the journey to modernization, businesses can encounter various technical, cultural, and process-oriented challenges. Such projects also tend to require significant investments of resources and time, which is why many organizations hesitate to get started.

However, outdated infrastructure and legacy applications are susceptible to various security, compliance, and privacy issues, making modernization a crucial step forward.

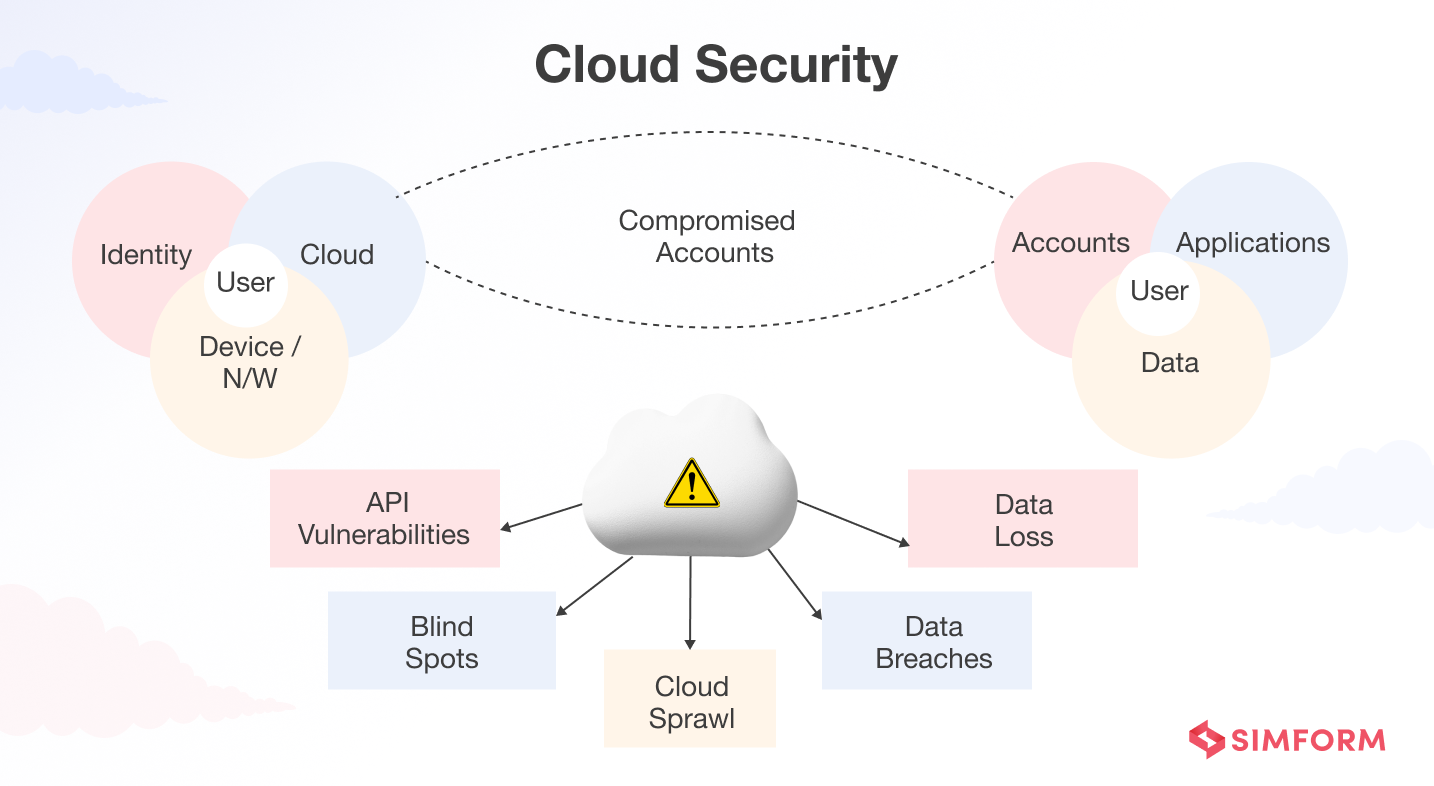

But the process of modernization can itself introduce new security issues, because it brings new frameworks, resources, cloud platforms, microservices, containers, and other technologies into play. For example, 60% of security professionals consider misconfiguration of the cloud platform (an incorrect setup) to be the biggest security threat in public clouds.

This article discusses these security constraints and their solutions so your business can become more vigilant and take a thoughtful, strategic approach to modernization.

The cloud has become a non-negotiable part of application modernization, but cloud users’ struggle with managing security aspects is real

On-premises servers can be expensive: the approximate upfront cost of launching two to three servers is around $25,000. And as the business grows, these physical servers limit its ability to upgrade or scale up.

Cloud migration provides an opportunity to upgrade outdated systems. However, the process requires careful planning, as the complexity and distributed nature of cloud architecture make it vulnerable to several kinds of attack.


Public clouds have their own unique security threats that can make the entire environment vulnerable. Here, organizations may not have full control over the security measures in place, or may be unable to audit the environment to detect and prevent malicious activity. The woes can be endless! Some of the common issues are –

- Moving some applications to the cloud while keeping others on on-premises legacy platforms leads to inconsistent security policies and practices

- Failing to update the underlying architecture after moving legacy applications to the cloud

- Aging hardware approaching its end of life becomes prone to malfunctions. Such hardware may also stop receiving OS upgrades or patches for the latest exploits.

Let’s understand other important security concerns in detail.

API vulnerabilities

Being a key part of cloud architecture, APIs allow applications to communicate with each other. However, without the proper security measures in place, attackers may be able to access sensitive information or manipulate the data within an application.

How can we forget the massive LinkedIn breach of 2021, where hackers used LinkedIn’s official API to compile a database of 700 million LinkedIn user accounts?

So, how do you secure APIs against these vulnerabilities?

- Ensure that all applications are correctly configured with encryption protocols, as well as authentication, authorization, and logging mechanisms.

- Implement a zero-trust strategy to remove any implicit trust for devices, services, users, or clients.

- Define authorization policies and authentication scopes declaratively, as code, by decoupling user identity from the API’s design through an abstraction layer.
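To make the last two points more concrete, here is a minimal Python sketch of an authorization policy kept as declarative data, separate from handler code, and checked on every request with no implicit trust. The routes, scope names, and `Request` shape are hypothetical; in practice a policy engine or API gateway usually plays this role.

```python
from dataclasses import dataclass, field

# Hypothetical policy: each (method, path) pair maps to the scopes a caller must hold.
# Because the policy is plain data, it can be reviewed, versioned, and tested like code.
POLICY: dict[tuple[str, str], set[str]] = {
    ("GET", "/accounts"): {"accounts:read"},
    ("POST", "/accounts"): {"accounts:write"},
    ("DELETE", "/accounts"): {"accounts:write", "admin"},
}


@dataclass
class Request:
    method: str
    path: str
    authenticated: bool                      # set by the identity layer, not by the API
    scopes: set[str] = field(default_factory=set)


def is_allowed(req: Request) -> bool:
    """Zero-trust check: every request is evaluated; nothing is trusted implicitly."""
    if not req.authenticated:
        return False
    required = POLICY.get((req.method, req.path))
    if required is None:
        return False                         # default deny for routes absent from the policy
    return required <= req.scopes            # caller must hold every required scope


print(is_allowed(Request("GET", "/accounts", True, {"accounts:read"})))     # True
print(is_allowed(Request("DELETE", "/accounts", True, {"accounts:write"})))  # False
```

Keeping identity (who the caller is) in the authentication layer and permissions in the policy table means API handlers never hard-code who may call them.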

Blind spots

Blind spots are likely to emerge when you rely on a collection of open-source components or cloud services, because updates released by those open-source projects often aren’t propagated to your system. This can also increase supply chain risk, as in the SolarWinds breach that affected thousands of organizations, including the U.S. government. There, hackers inserted malicious code into Orion software that SolarWinds then distributed to customers as a patch or update.
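One way to shrink this blind spot is to regularly compare the versions of open-source components you actually run against the versions known to contain fixes. The sketch below assumes you can export an inventory of installed components and a list of minimum patched versions (both dictionaries are hypothetical sample data). It uses simple dotted-number version parsing, which does not cover every versioning scheme.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Turn '2.10.3' into (2, 10, 3) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in v.split("."))


def find_unpatched(installed: dict[str, str], min_patched: dict[str, str]) -> list[str]:
    """Return components running a version older than the minimum patched release."""
    stale = []
    for name, fixed_in in min_patched.items():
        current = installed.get(name)
        if current is not None and parse_version(current) < parse_version(fixed_in):
            stale.append(f"{name} {current} < {fixed_in}")
    return sorted(stale)


# Hypothetical inventory and advisory data
installed = {"log-lib": "2.14.1", "http-client": "1.9.0", "json-parser": "3.2.0"}
min_patched = {"log-lib": "2.17.1", "json-parser": "3.1.0"}
print(find_unpatched(installed, min_patched))  # ['log-lib 2.14.1 < 2.17.1']
```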

The way to eliminate blind spots is to create a comprehensive security strategy that includes –

- Creating and enforcing least privilege policies for user accounts

- Protecting sensitive data with encryption

- Monitoring suspicious activity on the cloud using network monitoring software such as Nagios XI, Datadog, LogicMonitor

- Using multi-factor authentication for access control

Eliminating blind spots is also essential for keeping your company compliant with regulations such as GDPR or HIPAA.

Cloud sprawl – Unchecked growth of cloud resources

You can never be done with cloud migration in a single go; in fact, no one can. After migrating applications to the cloud, you might want to consume new cloud services or add more resources, and once applications are running in the cloud, it’s common to adopt additional SaaS applications. A recent study found that 89% of businesses use SaaS applications, and 66% of them agree that security is the biggest challenge.

It’s easy to fall into cloud sprawl when a business lacks visibility into, or control over, the spread of its cloud services, instances, or providers across the organization.

So, after identifying the cause of your organization’s cloud sprawl, you need to have a few things in check to minimize the security risks and unnecessary costs.

- Identify user controls, access, and migration policies specific to your business needs and use case.

- By partnering with a major cloud provider, you gain access to automation tools that can help keep cloud resources from proliferating across your organization.
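Visibility usually starts with an inventory. As a minimal sketch, assuming you have already exported your resources (for example from your provider’s asset inventory or billing tools) into simple records, the function below flags resources that lack required ownership tags or have been idle beyond a threshold. The field names, tag keys, and 30-day threshold are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Resource:
    resource_id: str
    kind: str
    last_used: datetime
    tags: dict[str, str] = field(default_factory=dict)


REQUIRED_TAGS = {"owner", "cost-center"}   # hypothetical tagging policy
IDLE_AFTER = timedelta(days=30)            # hypothetical idle threshold


def audit(resources: list[Resource], now: datetime) -> dict[str, list[str]]:
    """Group sprawl findings by reason so each can be routed to the right team."""
    findings: dict[str, list[str]] = {"missing_tags": [], "idle": []}
    for r in resources:
        if not REQUIRED_TAGS.issubset(r.tags):      # iterating a dict yields its keys
            findings["missing_tags"].append(r.resource_id)
        if now - r.last_used > IDLE_AFTER:
            findings["idle"].append(r.resource_id)
    return findings


now = datetime(2024, 1, 31)
inventory = [
    Resource("vm-1", "vm", now - timedelta(days=2), {"owner": "team-a", "cost-center": "42"}),
    Resource("bucket-7", "storage", now - timedelta(days=90), {"owner": "team-b"}),
]
print(audit(inventory, now))  # {'missing_tags': ['bucket-7'], 'idle': ['bucket-7']}
```

Running a check like this on a schedule turns sprawl from something discovered on the bill into something flagged while it is still small.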


Now, one of the biggest motivators behind cloud adoption is data storage. Therefore, we’ll address data-related security challenges separately.

A world of data security risks and challenges

No industry, from the healthcare industry to the financial sector, from the gaming industry to the academic sector, can claim to have remained safe from data risks. Even the most advanced and secure enterprises like Facebook, LinkedIn, Alibaba, Accenture, and more have borne the brunt of cloud security breaches.

Data breaches

A data breach in the cloud can have far-reaching consequences, resulting in severe financial, client, and reputational damage. Data leakage, also known as low-and-slow data theft, is a common hazard in the cloud. According to IBM Security, the average cost of a data breach in the U.S. in 2022 was $9.44M, the highest of any country, while the global average total cost of a data breach stood at $4.35M.

General targets of data breaches are – personally identifiable information (PII), personal health information (PHI), intellectual property, and trade secrets. The main reasons behind data theft are –

- Weak or easily guessable passwords.

- Lack of proper encryption protocols to protect data in transit and at rest.

- Unsecured wireless networks, such as those without a password or WPA2 encryption enabled.

- Poorly configured access controls that allow users to access privileged or sensitive data outside their role or responsibility level.

Digital Pix & Composites left a huge trove of extremely sensitive student data on exposed servers

Digital Pix & Composites, a U.S.–based photography firm specializing in graduation, fraternity, and sorority composites, misconfigured an Azure Blob Storage container, leaving it publicly accessible. As a result, 469 text files containing personal details for 43,000 students from 222 U.S. universities (including full names, home addresses, and the institutions they attended) were exposed to anyone on the internet.

This incident highlights how an unsecured Azure Blob can leak massive volumes of sensitive PII when default “public” settings aren’t locked down. Continuous auditing of storage account permissions and strict “private by default” configurations are essential to prevent such data exposures.
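A continuous audit of this kind can be as simple as a scheduled job that reads each container’s access level and raises an alert for anything that is not private. Azure Blob Storage containers have three access levels: private (no anonymous access), blob (anonymous read of blobs), and container (anonymous read and listing). The sketch below works on a hypothetical export of container settings; how you fetch them from your storage accounts is left out as an assumption.

```python
from typing import Optional

# Access levels that allow anonymous reads; None means the container is private.
PUBLIC_LEVELS = {"blob", "container"}


def find_public_containers(containers: list[dict]) -> list[str]:
    """Return 'account/container (level)' for every container allowing anonymous access.

    Each dict is a hypothetical export of one container's settings, e.g.
    {"account": "photos", "name": "composites", "public_access": "container"}.
    """
    exposed = []
    for c in containers:
        level: Optional[str] = c.get("public_access")
        if level in PUBLIC_LEVELS:
            exposed.append(f'{c["account"]}/{c["name"]} ({level})')
    return exposed


inventory = [
    {"account": "photos", "name": "composites", "public_access": "container"},
    {"account": "photos", "name": "thumbnails", "public_access": None},
]
for finding in find_public_containers(inventory):
    print("ALERT: publicly accessible container:", finding)
```

Pairing an audit like this with the storage-account setting that disallows anonymous blob access gives you a "private by default" baseline plus a detector for anything that drifts from it.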

Here’s how to avoid data breaches when migrating an application to the cloud –

- Start with a thorough discovery process to map out existing application resources, related data sources, and the overall system architecture. This will provide an in-depth understanding of potential risks associated with migrating the application to the cloud.

- Create multiple backups of the application and its data before transitioning them to the cloud, and secure these backups with strong encryption such as AES-256.

- Utilize cloud security best practices when designing your cloud migration plan. These include authentication and authorization measures for access control, using different isolation layers for segmenting sensitive data, and monitoring your systems for any suspicious behavior or activities.

- After migration, run regular scans and audits of your cloud environment to detect any changes or vulnerabilities that could put your data at risk. Use automated tools and services to get alerted to any unauthorized activity or malicious threats against your system.

Data loss

Unlike a data breach, data loss occurs when data is accidentally deleted or corrupted, whether through human error or technical issues such as faulty hardware or software.

When transferring data between an on-premise database and the cloud, if not enough time or resources are allocated to complete the transfer, some data may be left behind or corrupted during transit. Additionally, if permissions are not properly configured for the cloud application, some data may not be accessible after migration.
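A cheap guard against records silently left behind or corrupted in transit is to fingerprint the data on both sides and compare. The sketch below assumes rows can be read from the source and target as dictionaries keyed by primary key (the sample rows are hypothetical). It hashes each row with SHA-256 over a canonical JSON encoding and reports missing, unexpected, and mismatched rows.

```python
import hashlib
import json


def row_digest(row: dict) -> str:
    """SHA-256 of a canonical JSON encoding, so key order does not change the hash."""
    canonical = json.dumps(row, sort_keys=True, separators=(",", ":"), default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare(source: dict[int, dict], target: dict[int, dict]) -> dict[str, list[int]]:
    """Compare rows by primary key between the on-premises source and the cloud target."""
    missing = sorted(source.keys() - target.keys())      # never arrived
    unexpected = sorted(target.keys() - source.keys())   # appeared from nowhere
    corrupted = sorted(
        pk for pk in source.keys() & target.keys()
        if row_digest(source[pk]) != row_digest(target[pk])
    )
    return {"missing": missing, "unexpected": unexpected, "corrupted": corrupted}


source = {1: {"name": "Ada", "balance": 100}, 2: {"name": "Lin", "balance": 250},
          3: {"name": "Sam", "balance": 75}}
target = {1: {"balance": 100, "name": "Ada"}, 2: {"name": "Lin", "balance": 205}}
print(compare(source, target))  # {'missing': [3], 'unexpected': [], 'corrupted': [2]}
```

For very large tables, the same idea is usually applied to batches of rows rather than row by row, but the comparison logic stays the same.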

Meanwhile, Software-as-a-Service (SaaS) applications are also a potential source of massive mission-critical data loss. A report by Oracle and KPMG revealed that 49% of SaaS users have previously lost data. These apps hold and constantly update large data sets, so new information can overwrite old information and leave data sets partially overwritten in the process. Left unchecked, SaaS data protection can become one of the most overlooked threats in the enterprise.

So, how do you avoid losing critical data?

- Encrypt data before transferring it to the cloud. This prevents unauthorized access and keeps it safe from attackers.

- Design a sound backup plan to protect against any data loss during the migration process. Backup plans should include regular backups of important files, redundant storage systems, and disaster recovery plans that allow for a quick recovery if something goes wrong during the migration process.

- Monitor and audit your applications using specialized software or services.

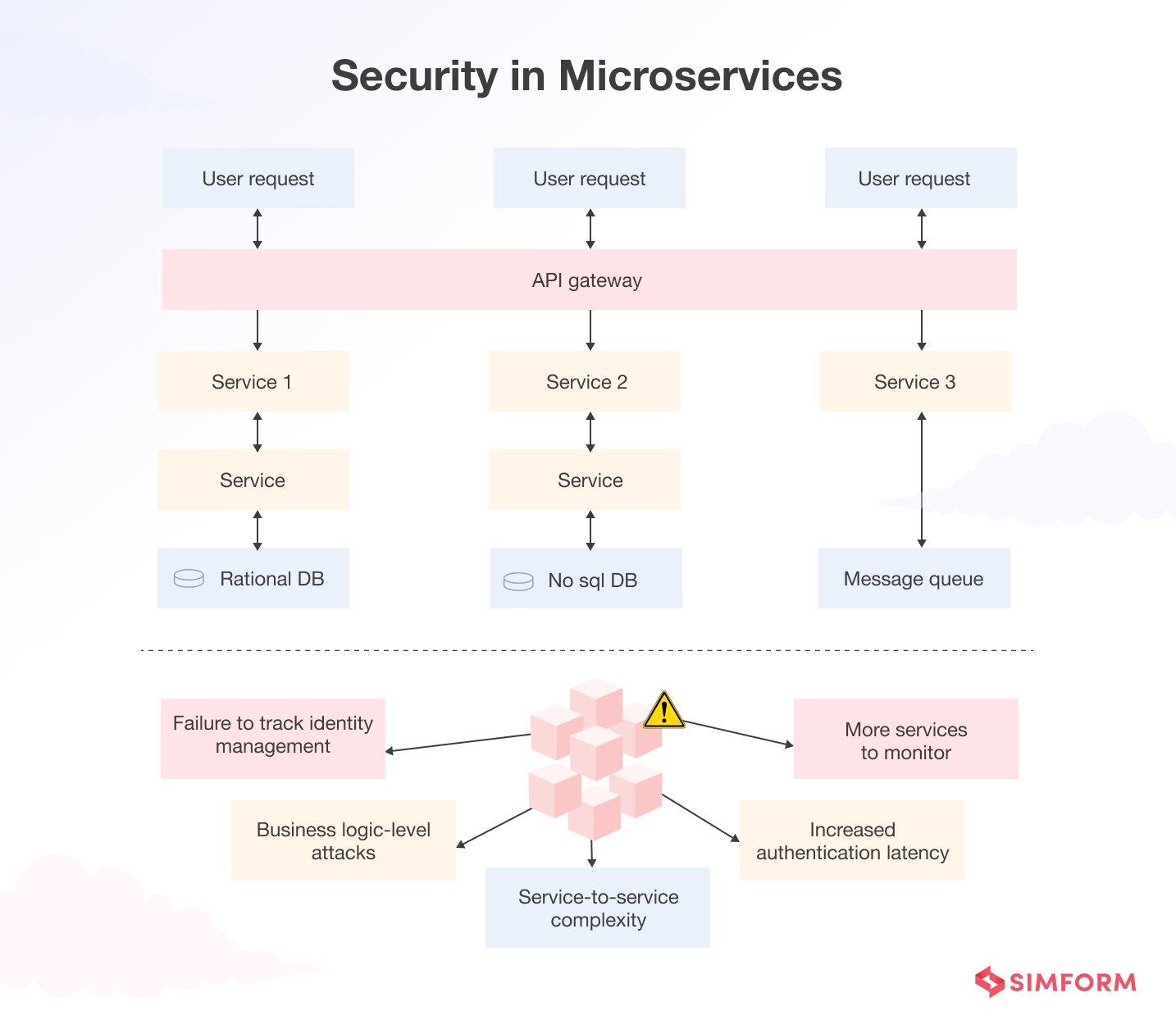

Monolith to Microservices is evolutionary yet equally demanding

Over time, your legacy application will be held back by slow deployments, scalability issues, poor performance, and an overall lack of agility. Naturally, a modern business needs to turn to the shining star of new-age IT infrastructure: microservices.


But you may still have concerns, as migrations to microservices don’t always go as planned. Knowing the essential differences between the two architectures helps ensure that new risks are not inadvertently introduced.

Failure to track identity management in microservices

Due to the distributed nature of microservices, it can be difficult to track who within the system has access to each specific component. As multiple services are interconnected, a lack of consistent identity management across these services can cause data and authorization leaks, presenting easy backdoor access to confidential data and system controls.

So, how do you achieve strong and frictionless identity management?

- Use an API gateway (placed behind a firewall) to provide a single point of entry for traffic.

- Take a token-based approach to user authentication to determine which microservices the user can access and to what degree. Tokens such as JSON Web Tokens (JWTs) carry claims about the user’s session, such as the issuer, expiry time, and access levels; a separate refresh token is typically issued alongside them to obtain new access tokens when they expire.

- Implement streamlined authentication tools like Single Sign-On (SSO) to let the users log in just once to access any service they might need without remembering multiple passwords.

- Then add a layer of security with Adaptive Multi-Factor Authentication to evaluate the risk and context of the access request, including time, location, device, IP address, anomalies in customer behavior, and more.

- Alongside centralized access and control, have an administrative interface to manage applications, groups, users, devices, and APIs from one central location to know what’s happening in your environment.
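To illustrate the token-based approach, here is a minimal, standard-library-only sketch of issuing and verifying an HS256-signed JWT and using a scope claim to decide which service a caller may reach. The secret, claim values, and `service:read` scope naming are hypothetical. A shared-secret HMAC is only one option: production systems typically rely on a vetted JWT library and often use asymmetric signatures (such as RS256 or ES256) so services can verify tokens without holding the signing key.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(claims: dict, secret: bytes, ttl_seconds: int = 300) -> str:
    """Sign header.payload with HMAC-SHA256 (HS256), adding an 'exp' expiry claim."""
    header = {"alg": "HS256", "typ": "JWT"}
    payload = dict(claims, exp=int(time.time()) + ttl_seconds)
    signing_input = f"{_b64url(json.dumps(header).encode())}.{_b64url(json.dumps(payload).encode())}"
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(sig)}"


def verify_token(token: str, secret: bytes) -> dict:
    """Return the claims if signature and expiry are valid; raise ValueError otherwise."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")   # reject anything else, including 'none'
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):   # constant-time comparison
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    if claims.get("exp", 0) < time.time():         # tokens without 'exp' are treated as expired
        raise ValueError("token expired")
    return claims


def can_access(claims: dict, service: str) -> bool:
    """Scopes are a space-separated string, e.g. 'orders:read payments:read'."""
    return f"{service}:read" in claims.get("scope", "").split()


secret = b"demo-secret-change-me"   # hypothetical; load from a secrets manager in practice
token = issue_token({"sub": "user-42", "iss": "auth.example.com", "scope": "orders:read"}, secret)
claims = verify_token(token, secret)
print(can_access(claims, "orders"), can_access(claims, "payments"))  # True False
```

The short `ttl_seconds` default reflects the usual design choice of short-lived access tokens, with the refresh token handling renewal.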

Business logic-level attacks

Web application firewalls provide a first line of defense, inspecting what is requested and verifying credentials. Monolithic applications add an extra layer of security through a servlet that runs logical checks before passing requests to their respective components. Because the servlet monitors incoming calls and rejects abnormal requests, the monolith’s components can operate with confidence that every request has already been validated at the API level.

In contrast, microservices are designed to operate independently and know little about one another, which makes business logic-level attacks easier to carry out.

To manage business logic security, the development process should follow guidelines that cover secure coding practices, data validation rules for each request, input validation and output encoding, and secure network access control lists.
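Because no upstream servlet vouches for the request, each microservice has to enforce its own business rules. Below is a minimal sketch for a hypothetical funds-transfer service; the field names, limit, and ownership lookup are assumptions, while the pattern (check the shape, then types, then business invariants, and reject on the first failure) is the point.

```python
from decimal import Decimal, InvalidOperation

MAX_TRANSFER = Decimal("10000")   # hypothetical per-request business limit


def validate_transfer(payload: dict, caller_id: str, owner_of: dict[str, str]) -> Decimal:
    """Return the validated amount or raise ValueError naming the rule that failed."""
    # 1. Shape: accept exactly the expected fields, nothing more.
    if set(payload) != {"from_account", "to_account", "amount"}:
        raise ValueError("unexpected or missing fields")
    # 2. Types: parse the amount as an exact decimal, never a float.
    try:
        amount = Decimal(str(payload["amount"]))
    except InvalidOperation:
        raise ValueError("amount is not a number") from None
    # 3. Business invariants, enforced here rather than assumed from another service.
    if not amount.is_finite() or amount <= 0:
        raise ValueError("amount must be a positive number")
    if amount > MAX_TRANSFER:
        raise ValueError("amount exceeds transfer limit")
    if payload["from_account"] == payload["to_account"]:
        raise ValueError("source and destination must differ")
    if owner_of.get(payload["from_account"]) != caller_id:
        raise ValueError("caller does not own the source account")
    return amount


owners = {"acc-1": "alice", "acc-2": "bob"}   # hypothetical ownership lookup
print(validate_transfer({"from_account": "acc-1", "to_account": "acc-2", "amount": "25.50"},
                        "alice", owners))
```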

Service-to-service complexity

Many developers mistakenly believe that communication between services behind a common firewall is secure, but due to the dynamic nature of microservices, messages can sometimes travel across wider networks.

What can be done?

- To protect against data breaches, teams should encrypt data when it is in transit between services and when it is stored at rest.

- For this encryption to work, each service must be provisioned with its own private key and public certificate.

- There must also be a process to revoke certificates and generate new ones when services are decommissioned or compromised.
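In practice, per-service keys and certificates are commonly put to work through mutual TLS (mTLS), where each side of a service-to-service call presents a certificate and verifies the other’s. The sketch below builds server and client contexts with Python’s standard `ssl` module. The file paths are hypothetical, and certificate issuance and rotation are assumed to be handled elsewhere (for example by an internal CA or a service mesh). Passing an optional CRL file turns on revocation checking for the peer’s leaf certificate.

```python
import ssl
from typing import Optional


def mtls_server_context(cert_file: Optional[str] = None, key_file: Optional[str] = None,
                        ca_file: Optional[str] = None, crl_file: Optional[str] = None) -> ssl.SSLContext:
    """Server side: present our certificate and REQUIRE a valid client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED              # reject clients without a trusted cert
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)    # only trust the internal CA
    if crl_file:
        ctx.load_verify_locations(cafile=crl_file)   # PEM CRL listing revoked certificates
        ctx.verify_flags |= ssl.VERIFY_CRL_CHECK_LEAF
    return ctx


def mtls_client_context(cert_file: Optional[str] = None, key_file: Optional[str] = None,
                        ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client side: verify the server (including hostname) and present our own certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


# Hypothetical usage with files issued by an internal CA:
# server_ctx = mtls_server_context("orders.crt", "orders.key", "internal-ca.pem")
# tls_sock = server_ctx.wrap_socket(raw_sock, server_side=True)
```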

Increased authentication latency

In monolithic applications, API security only needs to protect one link, from client to server. With microservices, organizations have to account for additional authentication steps between services to secure their APIs, which can increase latency and degrade performance unless the process is engineered efficiently.

Some organizations may opt to use a secure virtual private network, but this can increase app vulnerabilities should the network become compromised. To optimize API security, organizations should follow the practices discussed earlier.
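One common way to keep the extra authentication hops from adding latency to every call (an approach we are adding for illustration, not one prescribed above) is to cache a successful token validation for a short time, never beyond the token’s own expiry. In this minimal sketch, `validate` is a hypothetical stand-in for whatever expensive check you use, such as a call to a token introspection endpoint.

```python
import hashlib
import time
from typing import Callable, Optional


class TokenValidationCache:
    """Caches successful token validations for a short, bounded time."""

    def __init__(self, validate: Callable[[str], Optional[dict]],
                 max_ttl: float = 60.0, clock: Callable[[], float] = time.time):
        self._validate = validate      # hypothetical expensive check (e.g. remote introspection)
        self._max_ttl = max_ttl
        self._clock = clock            # injectable so the cache can be tested without sleeping
        self._entries: dict[str, tuple[float, dict]] = {}

    def check(self, token: str) -> Optional[dict]:
        """Return cached or freshly validated claims, or None if the token is invalid."""
        key = hashlib.sha256(token.encode("utf-8")).hexdigest()   # store hashes, not raw tokens
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and cached[0] > now:
            return cached[1]
        claims = self._validate(token)
        if claims is None:
            self._entries.pop(key, None)   # failures are never cached
            return None
        # Never keep an entry past the token's own expiry ("exp", seconds since the epoch).
        expires_at = min(now + self._max_ttl, float(claims.get("exp", now)))
        self._entries[key] = (expires_at, claims)
        return claims
```

The trade-off is that a revoked token may still be accepted for up to `max_ttl` seconds, so that window should stay short.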

More services to monitor

Traditional infrastructure-based monitoring was designed to provide endpoint and server security. However, microservices generate more traffic between services at a finer level of granularity, which can make it harder to automatically enable application observability through metrics, logs, and traces. This is difficult enough just for measuring operational health and even harder for tracking security posture.

Another challenge confronting security designers is keeping the observability data generated across microservices consistent. At a minimum, security requires good logging and integration with incident response systems to alert security analysts to possible subtle, slow-moving breaches. More sophisticated approaches combine these richer data sets with machine learning to help detect and block attacks without burdening teams with false positives.
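A practical first step toward consistent observability data is to have every service emit logs in the same structured format and carry a shared correlation ID from request to request, so an analyst can follow one call across services. Here is a minimal sketch using only Python’s standard `logging` module; the JSON field names and the `X-Correlation-ID` and `X-User` headers are conventions assumed for illustration.

```python
import json
import logging
import uuid
from contextvars import ContextVar

# Holds the correlation ID of the request currently being handled.
correlation_id: ContextVar[str] = ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Render every record as one JSON object with the same fields in every service."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "ts": self.formatTime(record),
            "level": record.levelname,
            "service": self.service,
            "correlation_id": correlation_id.get(),
            "event": record.getMessage(),
        })


def handle_request(headers: dict, logger: logging.Logger) -> str:
    # Reuse the caller's ID so the trail continues; otherwise start a new one.
    cid = headers.get("X-Correlation-ID") or str(uuid.uuid4())
    correlation_id.set(cid)
    logger.warning("login failed for user=%s", headers.get("X-User", "unknown"))
    return cid   # forward this value as X-Correlation-ID on downstream calls


logger = logging.getLogger("orders")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter(service="orders"))
logger.addHandler(handler)
handle_request({"X-Correlation-ID": "req-123", "X-User": "alice"}, logger)
```

Once every service logs this way, an incident response system can group events by `correlation_id` instead of stitching together differently shaped logs.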

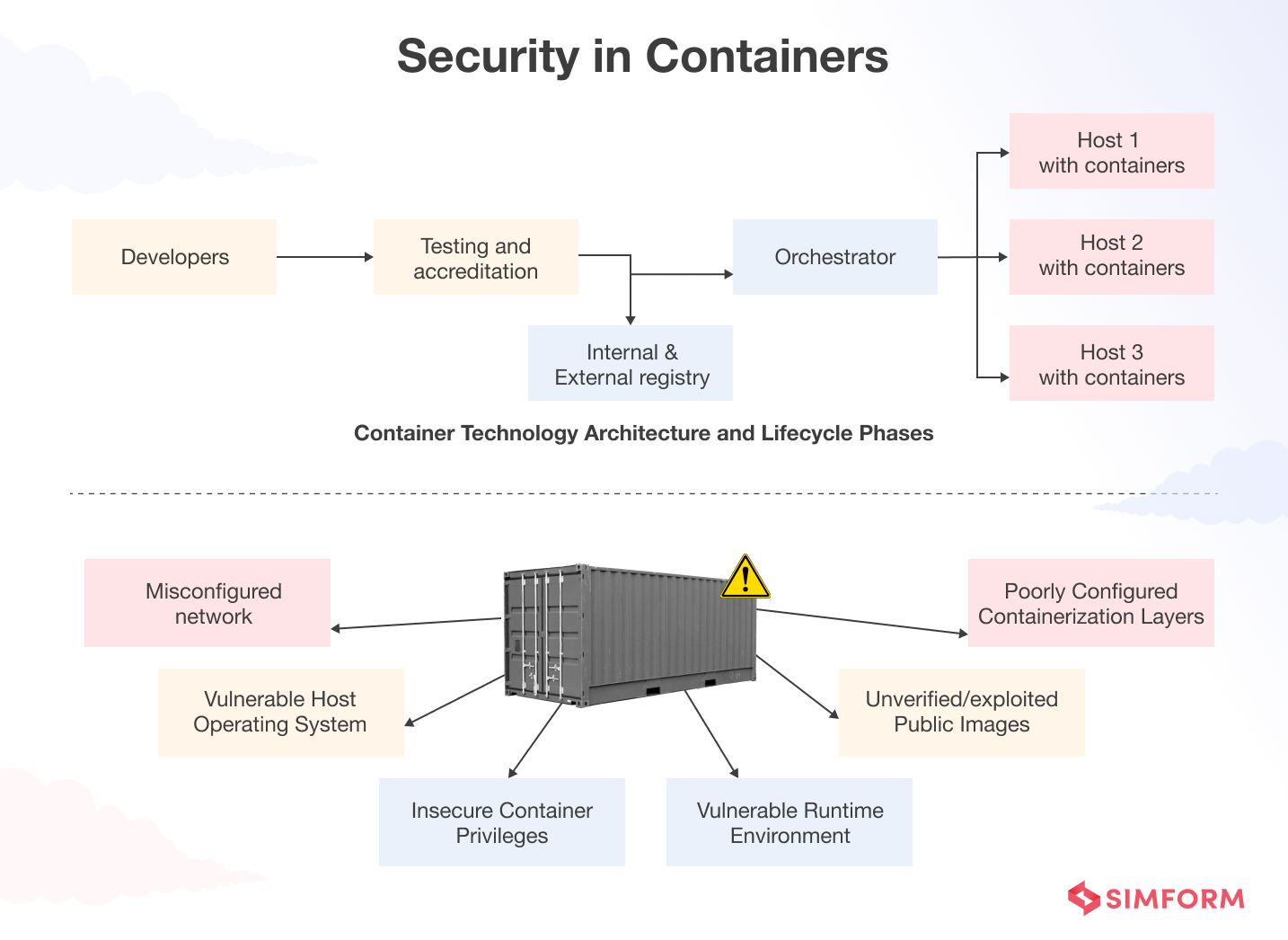

Container security in different ecosystems

The combination of microservices and containers is as good as peanut butter and jelly! However, like every new technology, container-first development can fall prey to security vulnerabilities across multiple layers of the software architecture, such as:

- The container image

- The host operating system

- Container networking and storage repositories

- The interaction between the containers, and that of containers and host operating system

- The runtime environment, often in Kubernetes clusters

So, how can these layers be vulnerable to attacks?

Container malware

Malicious software can target containerized applications in many ways, such as:

- Attackers can tamper with container images by modifying the code, adding malicious payloads or backdoors, or replacing legitimate images with tampered ones. This attack can happen at any point in the container’s lifecycle, from development to runtime.

- By exploiting a vulnerability in the container runtime or the host system, an attacker may access the host system and potentially other containers running on the same host, leading to data exfiltration, privilege escalation, or the ability to launch further attacks.

- Misconfigured networks can allow attackers to connect to the container from external sources, bypassing network security controls.

To protect against container malware, it’s important to:

- Isolate containers from each other and the host system to limit the damage caused by malware. Use network policies and firewalls to control traffic between containers and between the host system and the network.

- Implement runtime security controls like configurable security policies, real-time monitoring, and automated incident response to detect and contain malware before it spreads.
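For containers orchestrated by Kubernetes, network isolation is typically expressed with NetworkPolicy objects. As a sketch, the Python below builds a default-deny policy for a namespace plus the pair of policies needed to reopen one path, and prints them as JSON, which `kubectl apply -f` accepts just like YAML. The namespace, `app` labels, and port are hypothetical, and policies only take effect if the cluster’s network plugin enforces them.

```python
import json


def _policy(namespace: str, name: str, pod_selector: dict, **spec: object) -> dict:
    """Wrap a NetworkPolicy spec in the standard object envelope."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"podSelector": pod_selector, **spec},
    }


def default_deny(namespace: str) -> dict:
    # An empty podSelector selects every pod; both policy types with no rules deny all traffic.
    return _policy(namespace, "default-deny-all", {}, policyTypes=["Ingress", "Egress"])


def allow_path(namespace: str, from_app: str, to_app: str, port: int) -> list[dict]:
    """Reopen one path under default deny: egress from the source AND ingress to the target."""
    src = {"matchLabels": {"app": from_app}}
    dst = {"matchLabels": {"app": to_app}}
    ports = [{"protocol": "TCP", "port": port}]
    return [
        _policy(namespace, f"{from_app}-egress-to-{to_app}", src,
                policyTypes=["Egress"], egress=[{"to": [{"podSelector": dst}], "ports": ports}]),
        _policy(namespace, f"{to_app}-ingress-from-{from_app}", dst,
                policyTypes=["Ingress"], ingress=[{"from": [{"podSelector": src}], "ports": ports}]),
    ]


manifest = {"apiVersion": "v1", "kind": "List",
            "items": [default_deny("shop"), *allow_path("shop", "frontend", "orders", 8080)]}
print(json.dumps(manifest, indent=2))
```

Note that under a default-deny egress rule, pods also lose DNS access until traffic to the cluster’s DNS service is explicitly allowed.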

Insecure container privileges

When containers are allowed to run with more privileges than they strictly require,

- A malicious user can override established security policies and access confidential information such as passwords and system files.

- Running with too many privileges makes configuration errors more likely, leaving the system vulnerable to attack.

Insecure privileges are often the result of erroneous configurations with the container orchestrator. For instance, when Kubernetes security contexts and network policies are not properly defined, the containers it orchestrates may have more privileges than they should.

Ideally, containers should run in the unprivileged mode so they can’t access any resources outside the containerized environment they directly control. Not only that, but unless they have a reason to communicate with each other, communications between the containers should also be restricted.
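In Kubernetes, these restrictions live in the pod’s and container’s `securityContext`. The sketch below checks a pod spec, represented as a Python dict as it would look after parsing the manifest, for a handful of common over-privilege settings. The rule set is a deliberately small, illustrative selection; admission controls such as Kubernetes Pod Security Admission enforce a fuller set in practice.

```python
def privilege_findings(pod_spec: dict) -> list[str]:
    """Flag containers that could run with more privilege than they need."""
    findings = []
    pod_ctx = pod_spec.get("securityContext", {})
    for c in pod_spec.get("containers", []):
        name = c.get("name", "?")
        ctx = c.get("securityContext", {})
        if ctx.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        # A container-level runAsNonRoot overrides the pod-level default.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            findings.append(f"{name}: may run as root")
        # Privilege escalation is allowed unless explicitly disabled.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: privilege escalation not disabled")
        if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
            findings.append(f"{name}: Linux capabilities not dropped")
    return findings


hardened = {
    "securityContext": {"runAsNonRoot": True},
    "containers": [{
        "name": "api",
        "image": "registry.internal.example/api:1.4",   # hypothetical image
        "securityContext": {
            "allowPrivilegeEscalation": False,
            "readOnlyRootFilesystem": True,
            "capabilities": {"drop": ["ALL"]},
        },
    }],
}
risky = {"containers": [{"name": "debug", "image": "busybox", "securityContext": {"privileged": True}}]}
print(privilege_findings(hardened))  # []
print(privilege_findings(risky))
```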

Insecure configuration of other components

Hackers also target poorly configured containerization layers alongside vulnerabilities in the operating system at large. Therefore, you should consider all other aspects of your environment to ensure the utmost security of containers. This includes –

- Updating and configuring the host OS securely

- Strengthening containerization layers and any orchestration software

Securing container images is a dedicated discipline

You can never be too vigilant when thousands of container images are available to choose from. Threat actors may even hide malware in legitimate-looking images stored on widely-used sites like Docker Hub. For example, in August 2021, five malicious container images were found that secretly mined cryptocurrency using 120,000 users’ systems.

Meanwhile, in 2022, 61% of all container images pulled came from public repositories, indicating an increased risk of exposure to malicious images.

Here are the tips for hardening your container image security –

- Have a curated internal registry for base container images and designate who can access public registries.

- Scan container images with both static and dynamic malware scanning, since attackers can obfuscate malicious code so that it goes undetected while the image is at rest.

- Define policies before adding images to the internal registry. Always check the publisher’s profile, read comments, and vet images to make sure you’re downloading from a trusted source and under the correct name. Cybercriminals often give tampered, malware-laden images names that mimic popular open-source software, hoping the trick will work.

- Keep integrity records for software supply chains to ensure that the container images being used are the ones vetted and approved earlier.
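The curated-registry and integrity-record tips can be combined into a simple admission check: only allow images that come from your internal registry and are pinned to a digest recorded when the image was vetted. This is a minimal sketch; the registry host, the approved-digest record, and the sample digests are hypothetical placeholders.

```python
import re

TRUSTED_REGISTRY = "registry.internal.example"   # hypothetical curated registry
# Hypothetical integrity record: repository -> digests approved during vetting.
APPROVED_DIGESTS = {
    "registry.internal.example/base/python": {"sha256:" + "a" * 64},
}
DIGEST_RE = re.compile(r"^(?P<repo>[^@]+)@(?P<digest>sha256:[0-9a-f]{64})$")


def check_image(ref: str) -> tuple[bool, str]:
    """Admit an image only if it is digest-pinned, from the trusted registry, and approved."""
    m = DIGEST_RE.match(ref)
    if not m:
        # Tags such as ':latest' can be re-pointed to new content; digests cannot.
        return False, "image must be pinned by sha256 digest"
    repo, digest = m["repo"], m["digest"]
    if repo.split("/", 1)[0] != TRUSTED_REGISTRY:
        return False, f"registry not trusted: {repo}"
    if digest not in APPROVED_DIGESTS.get(repo, set()):
        return False, "digest not in integrity record"
    return True, "ok"


print(check_image("registry.internal.example/base/python@sha256:" + "a" * 64))  # (True, 'ok')
print(check_image("python:latest"))  # (False, 'image must be pinned by sha256 digest')
```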

The good news is that 74% of organizations scan container images during the build process, indicating that container security is shifting left. However, most containers are still excessively permissive, with 58% running as root. So while shifting left helps catch vulnerabilities earlier, runtime scanning is still needed to identify configuration errors.

Common security issues with application modernization

Modernization is a multi-step process for every organization. In addition to technical challenges, organizations need to overcome a few cultural and process-oriented challenges that may indirectly affect the security of the entire transition.

Silos between developers and security teams

A lack of integration and communication between the two teams can lead to suboptimal modernization solutions. When security measures are applied too late in the development cycle, more vulnerabilities slip through.

It’d also be more difficult for development teams to stay up-to-date on the latest security trends, technologies, and compliance changes without access to resources from the security teams.

In addition to reviewing requirements jointly, security personnel must be involved throughout the modernization process. You can also use Unified Threat Management (UTM) solutions to enable continuous monitoring and communication between both sides of the organization’s IT operations.

Homegrown application development tools

Homegrown tools have some severe drawbacks and can become the Achilles’ heel of corporate IT security. They can create tech debt and often struggle to integrate with existing infrastructure or APIs in the stack.

When homegrown tools are not evaluated thoroughly and don’t provide the right monitoring components, they can create more problems than they solve. These tools require development teams to access different platforms and test solutions for individual use cases.

And because access is configured and managed separately across these tools, security often gets pushed to the last stage of the software lifecycle, resulting in impaired software quality, delayed delivery, and increased compliance risks and security vulnerabilities.

Unique challenges to the new world of DevOps

Despite being a modern necessity, a DevOps environment is complex owing to its rich set of tools, such as build servers, code and image repositories, and container orchestrators. This complexity makes it necessary to build security into each step of the modernization project.

Developers are often focused on velocity, not security. In the push for faster code production, DevOps teams may adopt insecure practices outside the view of security teams. Such practices can include:

- Reusing third-party code without enough scrutiny

- Adopting new tools without vetting them for potential risks

- Leaving embedded secrets and credentials in configuration files and applications

- Inadequately protecting DevOps tools and infrastructure
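Embedded secrets are one of the easier problems to catch automatically, for example in a pre-commit hook or CI step. Below is a minimal scanner that runs a few regular expressions over file contents. The patterns are illustrative and far from complete (dedicated secret scanners ship many more rules and entropy checks); the AWS access key ID format, `AKIA` followed by 16 uppercase letters or digits, is a well-known example, while the generic assignment rule is a hypothetical heuristic.

```python
import re

# Illustrative patterns only; real scanners use many more rules plus entropy checks.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"""(?i)(password|passwd|secret|api_key|token)\w*\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def scan_text(path: str, text: str) -> list[tuple[str, int, str]]:
    """Return (path, line number, rule name) for every line matching a secret pattern."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((path, lineno, rule))
    return hits


config = '''
db_host = "db.internal"
db_password = "S3cr3t-Passw0rd!"
aws_key = "AKIAABCDEFGHIJKLMNOP"
'''
for hit in scan_text("settings.py", config):
    print(hit)
```

The usual fix for a finding is to load the value at runtime from the environment or a secrets manager (for example `os.environ["DB_PASSWORD"]`) instead of committing it; a line like that does not trip the assignment rule, because the value is not a quoted literal.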

DevOps processes rely on privileged credentials for both humans and machines, and these credentials are highly susceptible to cyber attacks, making privileged access management one of the main security risks in DevOps environments.

Here’s what should be done to achieve DevOps security at scale:

- Adopt a DevSecOps model to break down the traditional divide between security professionals and developers.

- Run penetration tests against your development environments to find the main security gaps, and use automated security testing to detect defects, vulnerabilities, and signs of data breaches introduced into development pipelines.

- Establish a set of clear policies and procedures for configuration management, code reviews, access control, vulnerability testing, and security tools.

Let security be a continuous process

Security is of the utmost importance to maintain the trust of your customers and protect your organization from cyber threats. Therefore, while modernizing applications, it is crucial to integrate security into the process from the start. However, application modernization can be complex, requiring the management of a wide range of technical and organizational issues.

An experienced tech partner understands the latest security best practices and can help navigate the complexities of the modernization process. Simform has unmatchable skills and experience with all the necessary technologies to help you implement measures to protect your applications and data.