Virtual machines (VMs) and containers are new-age IT efficiency superheroes. They help organizations get more out of their infrastructure and make process isolation easier. However, there is more to VMs vs. containers than meets the eye, which is why there is an ongoing debate about whether containers are a suitable alternative to VMs.

Because both provide resource isolation, organizations often ask, “Which one is the right option?” The reality is that the two are built on fundamentally different architectures, which is why it is vital to know the key differences between containers and VMs.

Let’s first start with an overview of both technologies.

VMs vs. containers: An overview

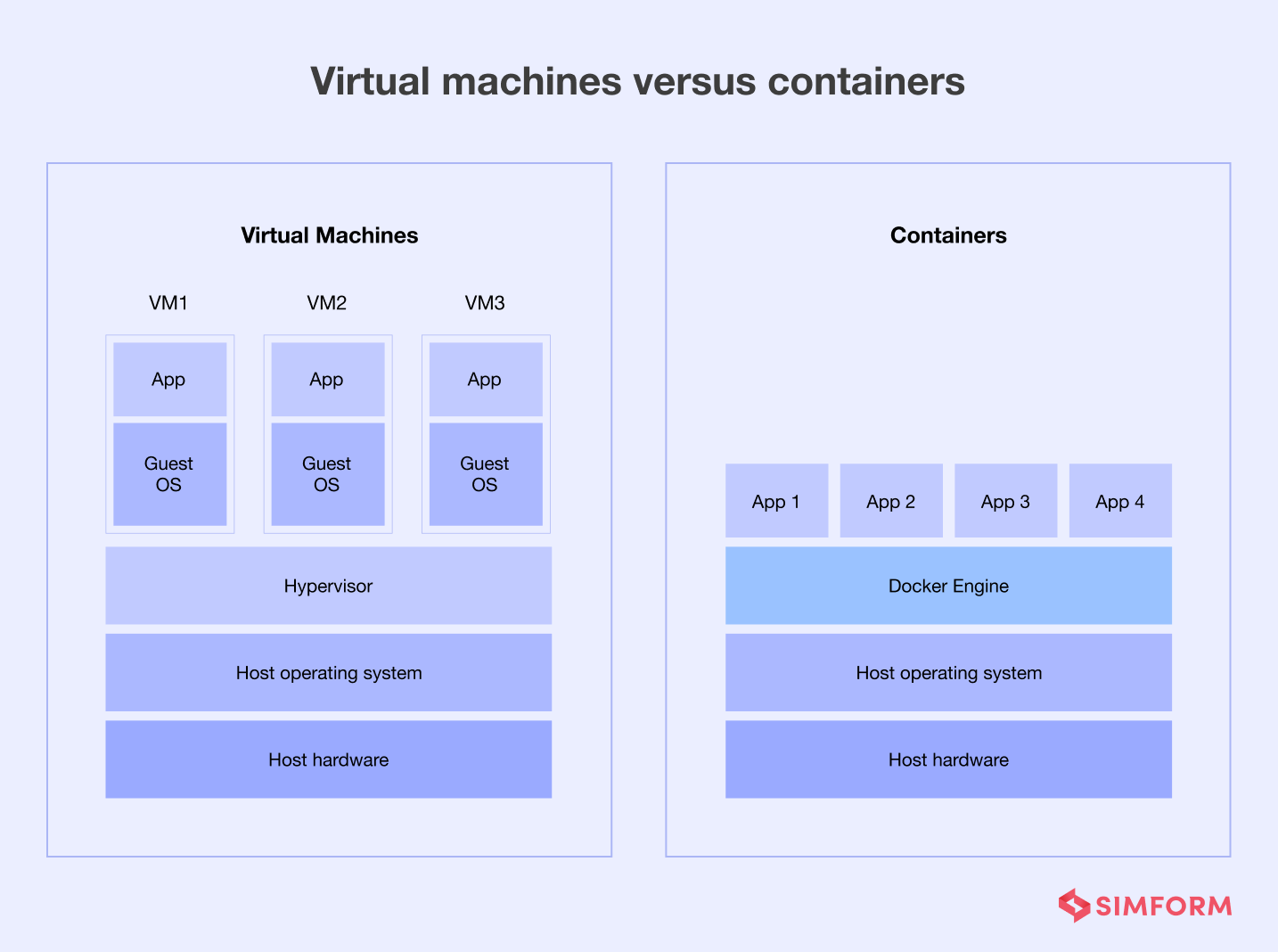

If virtualization is a lock, then containers and VMs are two different keys that open the same lock. The difference lies in the level at which they achieve virtualization. VMs virtualize at the hardware level: a hypervisor virtualizes the processor, RAM, storage, network, and other hardware.

Containers, in contrast, achieve OS-level virtualization. The host operating system’s kernel sits on top of the hardware and mediates between the hardware and the software. That host OS runs several containers through a container engine. When a user requests a specific function or service, the container engine pulls the relevant image, which developers have stored in a repository, and runs it.

A container image has everything needed to serve user requests, including configuration settings, the workload (application code), system utilities, and libraries. Another key difference between containers and VMs is how resources are isolated: a virtual machine is isolated as an entire machine, while a container is an isolated process (or group of processes) running on the host.

Apart from these differences, each approach has its own pros and cons.

Pros of VMs

- Enables the creation of multiple OS environments on the same server and reduces the need for more physical hardware.

- VMs expose an Instruction Set Architecture (ISA) to the guest OS. The ISA is an abstract model that defines how software controls the CPU, acting as the interface between hardware and software.

- A crash in a single virtual machine does not affect the host machine, which makes VMs attractive for businesses looking to improve availability.

- VMs let you use a guest OS to deploy test applications and applications with known security issues. Isolating the OS environment in this way gives the system stronger security and makes apps easier to monitor.

- Eliminates OS compatibility issues by letting multiple OS environments run on the same server.

Cons of VMs

- Guest software runs on top of the host OS through a hypervisor and must go through it to access hardware and memory, which increases latency.

- VMs need more resources because each one carries an entire OS stack.

- Finding the source of a failure in a VM system is difficult because traffic spans multiple local area networks.

- Routing rules and Virtual Local Area Networks (VLANs) for multiple OS instances make the entire system complex.

- Higher resource consumption, higher latency, and the need for individual OS environments make VMs more expensive than containers.

Pros of containers

- Containers are lightweight, isolated processes that include only the libraries, configuration, and workloads they need to run.

- Migrating your application across multiple environments is easy as everything an application needs to run is inside the container.

- Containers have a small footprint, often less than 100 MB, compared with the gigabytes of storage that virtual machines need.

- They provide higher consistency across platforms because they share the host kernel and operating system.

- With containers, software runs in an isolated environment, so it remains unaffected by external changes.

- A lower memory footprint, fewer resource needs, and consistent behavior across platforms make containers cost-effective.

Cons of containers

- Because most containers rely on the Linux kernel, there can be compatibility issues with some operating systems.

- A compromised container can become a security threat to the entire system because of the shared OS and kernel.

- Without proper backups, container data can be lost when the container is terminated, and recovering it is tricky.

Now that we have an overview of VMs vs. containers, let’s discuss the key differences between them.

Minutes versus milliseconds

When it comes to business success, the timing of a product or service launch is everything, and it can give your business a competitive advantage. So, time is an essential factor when you compare VMs vs. containers for your business operations.

Virtual machines need a minute or two to boot an entire copy of the operating system and configure it for the host environment. A container, by contrast, starts in milliseconds, because it only needs to run the minimal configuration an app requires rather than an entire OS.

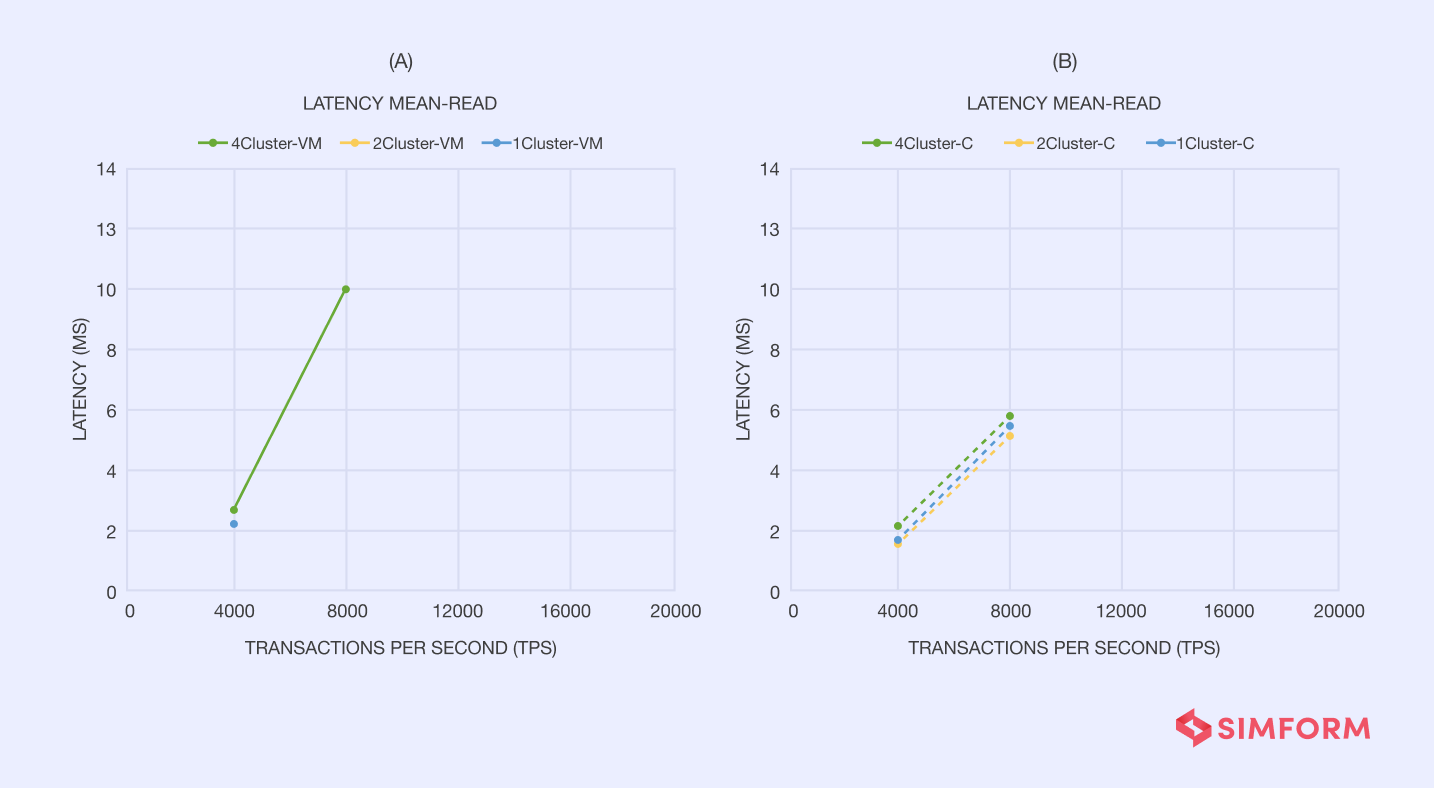

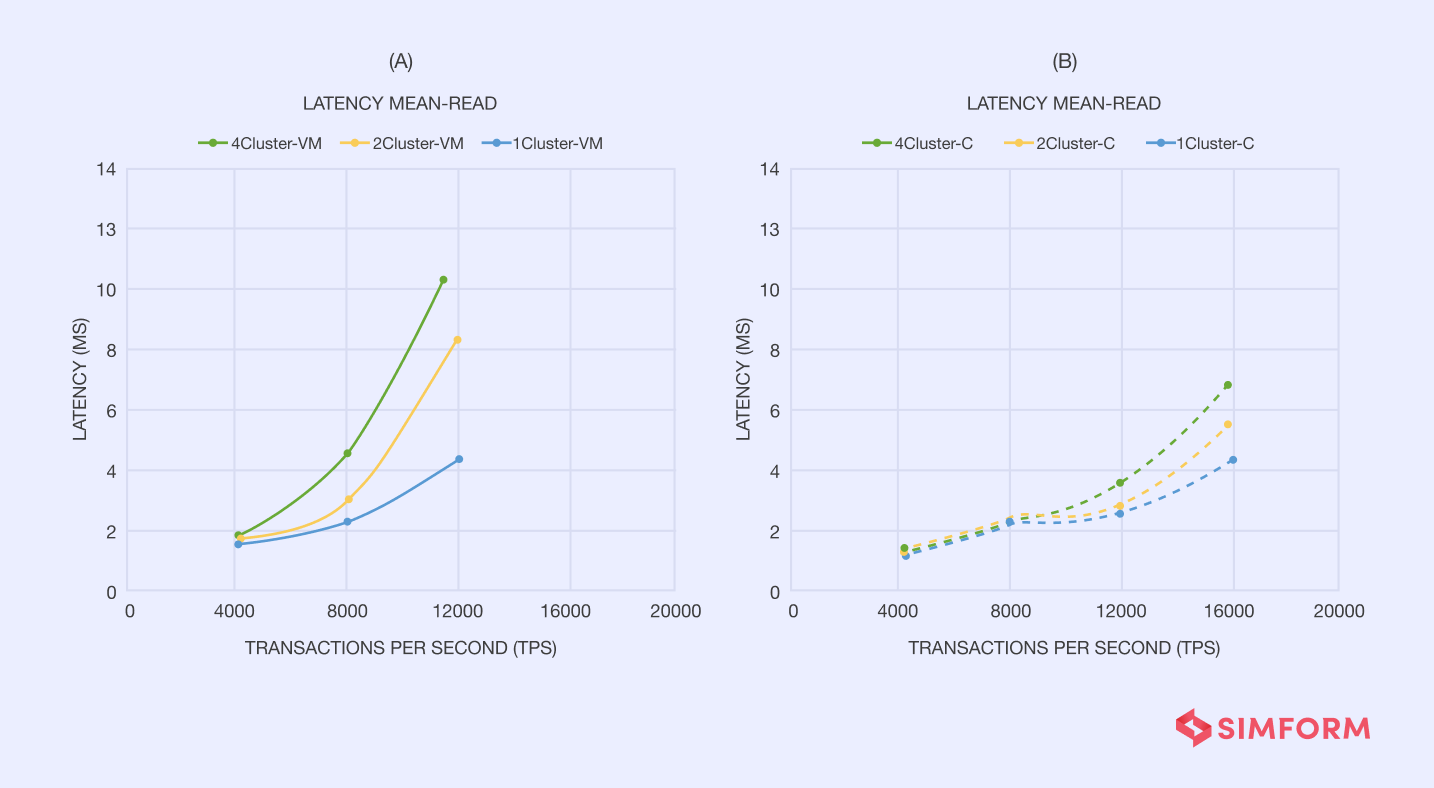

According to a study conducted jointly by Swedish and Italian universities, VMs have higher write and read latency than containers. For this study, three Cassandra clusters were deployed on virtual machines and on Docker containers to compare write operations.

As the graphs above show, write latency for containers is around 6 ms, while VMs have a latency of 10 ms for 80,000 transactions. With a similar setup, read latency was 11 ms for VMs and 7 ms for containers.
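
To put these figures in relative terms, the short Python sketch below computes how much higher the VM latency is than the container latency. It uses only the averages quoted above (6 ms vs. 10 ms for writes, 7 ms vs. 11 ms for reads); the function name is our own.

```python
def relative_increase(baseline_ms: float, other_ms: float) -> float:
    """Return how much larger other_ms is than baseline_ms, as a percentage."""
    return (other_ms - baseline_ms) / baseline_ms * 100


# Average latencies quoted from the Cassandra study: (container, VM), in ms.
latencies = {
    "write": (6.0, 10.0),
    "read": (7.0, 11.0),
}

for operation, (container_ms, vm_ms) in latencies.items():
    increase = relative_increase(container_ms, vm_ms)
    print(f"{operation}: VM latency is {increase:.1f}% higher than container latency")
```

Run as written, it reports that VM latency is about 67% higher for writes and about 57% higher for reads in this particular setup.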

This study indicates that VMs need more time than containers for both read and write operations. So, in terms of startup time and latency, containers have an advantage over VMs.

However, startup time is not the only factor that matters for business operations; portability is also essential. Especially when you need flexibility across environments and platforms, portability takes center stage.

Portability across environments

Organizations use different approaches to optimize operations. For example, a multi-cloud strategy is a great way to overcome the shortcomings of a single cloud service and make the most of cloud-based technology. However, moving from one environment to another, or migrating from bare metal to a multi-cloud environment, requires high portability.

Because VMs virtualize hardware, they are less portable. Each VM also carries its own OS, which limits mobility further on top of hardware mobility issues. So, if you want to move VMs across public, private, or multi-cloud environments, you need to move the entire stack of individual OS environments. It is like carrying a massive trolley bag through an airport.

Containers, on the other hand, are more like a handbag that you can carry anywhere easily. This is because containers pack microservices and all their dependencies into a small package that is portable across environments.

Namespaces provide an isolation layer that prevents containers from affecting the host system. Each container runs in its own namespaces, and its access is limited to those namespaces.

Linux namespace types include:

- Process ID

- User

- Mount

- Network

- Interprocess communication
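
On Linux, you can inspect these namespaces directly: the kernel exposes every namespace a process belongs to as a symbolic link under `/proc/<pid>/ns`, and two processes share a namespace when their links point to the same inode number. The Python sketch below (the helper names are our own) lists them for the current process. Running it once on the host and once inside a Docker container should show different `pid`, `mnt`, and `net` values. Expect extra entries such as `uts` or `cgroup`, since Linux has more namespace types than the five listed above.

```python
from __future__ import annotations

import os


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a link target such as 'pid:[4026531836]' into ('pid', 4026531836)."""
    ns_type, _, inode = target.partition(":")
    return ns_type, int(inode.strip("[]"))


def namespace_ids(pid: str = "self") -> dict[str, int]:
    """Map each entry in /proc/<pid>/ns to its namespace inode number.

    Linux-only: returns an empty dict when /proc/<pid>/ns does not exist.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}
    ids: dict[str, int] = {}
    for entry in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue  # skip entries we are not allowed to read or cannot parse
        ids[entry] = inode
    return ids


if __name__ == "__main__":
    # Processes that share a namespace report the same inode number for it.
    for entry, inode in namespace_ids().items():
        print(f"{entry:<20} {inode}")
```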

VMs can move only between environments that run a compatible hypervisor. A Docker container, on the other hand, can run on a local server, a developer’s laptop, the AWS cloud, or an Azure environment. So, there is no denying that containers are the better solution for portability.

Portability of apps is desirable, but infrastructure management is at the core of VMs vs. containers. There are several reasons for businesses to look for virtualization, and one of them is infrastructure optimization.


Infrastructure management

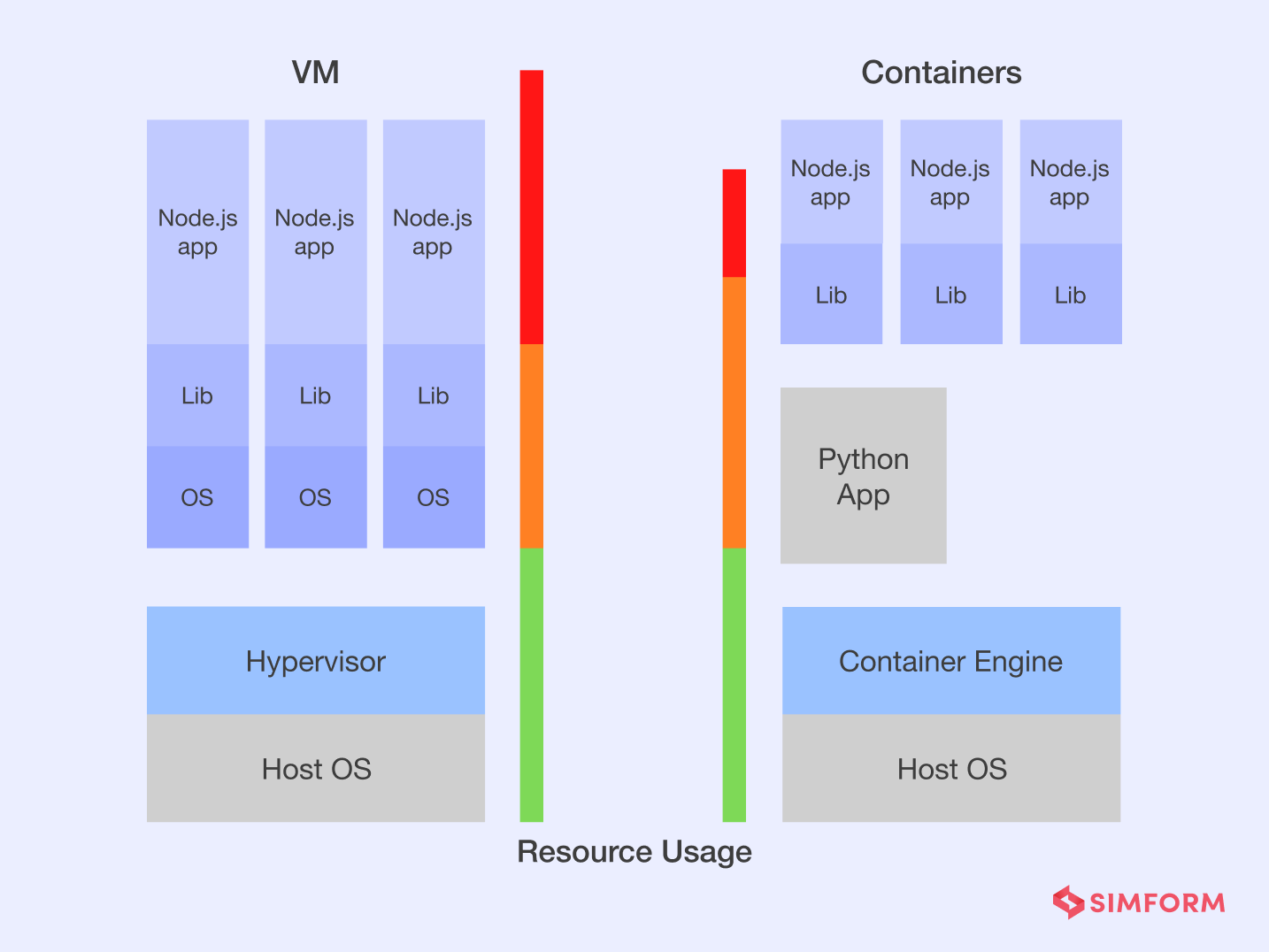

Infrastructure optimization and resource management hold the key to efficient IT operations. Virtual machines help with the virtualization of hardware and enable the deployment of several OS on a single server. However, this does not mean that VMs provide an optimized virtualization solution.

With VMs, there are several copies of the OS, which consume a lot of RAM, CPU, and disk space. Take the example of a Node.js application that you want to deploy on virtual machines. The VM stack has a host OS on top of the hardware, with a hypervisor above it. To push the Node.js app into the production environment, we need a Linux VM.

When we push the app into production, the duplicated OS copies consume a massive amount of resources. If you want to integrate third-party service APIs through a Python app, you might consider creating a single VM for both the Node.js and Python apps.

This lets the Python and Node.js applications share the same VM. However, you can’t scale them individually because they run in the same VM, and deploying each app as a separate module is also challenging.

This is where containers can help, with minimal resource usage, maximum scalability, and a modular architecture. You can deploy the Python app and the Node.js app in separate containers on top of the container engine.

Furthermore, because container architecture is modular and has a lower memory footprint, it is easy to scale either the Python app or the Node.js app individually. Another essential benefit of containerization is optimal resource allocation: when some containers are not using their share of CPU, that capacity is automatically made available to other containers.

Here, containers use control groups (cgroups) to manage how resources are shared among different processes. cgroups control how much of critical resources like CPU, memory, network, and disk I/O a process or set of processes can use.
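
To make the cgroups idea concrete, here is a small Python model of two cgroup v2 CPU settings. `cpu.max` holds a quota and a period in microseconds (or `max` for no limit), and `cpu.weight` (default 100) sets a group’s relative share when CPUs are contended. The `share_cpu` function is a deliberately simplified, hypothetical model of proportional sharing that shows how the CPU an idle container leaves unused flows to busy ones. The real kernel scheduler is considerably more involved, and the container names are made up.

```python
from __future__ import annotations


def cpu_max_to_cores(cpu_max: str) -> float | None:
    """Convert a cgroup v2 cpu.max value ("<quota> <period>") into CPUs.

    "max 100000" means the group has no CPU limit, returned here as None.
    """
    quota, period = cpu_max.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def share_cpu(weights: dict[str, int], busy: set[str], total_cpus: float) -> dict[str, float]:
    """Simplified model of weight-based CPU sharing between cgroups.

    Only busy groups receive CPU, in proportion to their cpu.weight, so the
    share an idle group would have had is redistributed to the busy ones.
    Assumes every busy group can use whatever CPU it is given.
    """
    busy_weight = sum(weights[name] for name in busy)
    if busy_weight == 0:
        return {name: 0.0 for name in weights}
    return {
        name: (total_cpus * weights[name] / busy_weight if name in busy else 0.0)
        for name in weights
    }


if __name__ == "__main__":
    # Hypothetical containers; 100 is the cgroup v2 default cpu.weight.
    weights = {"node-app": 100, "python-app": 300, "batch-job": 100}
    print(share_cpu(weights, busy={"node-app", "python-app"}, total_cpus=4))
    # {'node-app': 1.0, 'python-app': 3.0, 'batch-job': 0.0}
    print(cpu_max_to_cores("50000 100000"))  # 0.5, i.e. half of one CPU
```

With `batch-job` idle, its share goes to the two busy containers, which split the four CPUs 1:3 according to their weights.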

So, containers support cloud-native architecture and enable optimized resource usage, which helps you scale your applications. However, as you scale, you will need to process more user requests per second.

Concurrent workload execution

Scaling your application requires the ability to handle more requests from users. Concurrency techniques help here by handling multiple requests at the same time, much like a multi-core processor running several programs simultaneously.
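
As a generic illustration of concurrency (not specific to containers), the Python sketch below uses `asyncio` to serve many simulated requests at once. `handle_request` is a hypothetical handler whose `asyncio.sleep` call stands in for real I/O such as a database query.

```python
from __future__ import annotations

import asyncio
import time


async def handle_request(request_id: int, delay: float) -> str:
    """Hypothetical handler; asyncio.sleep stands in for I/O such as a DB call."""
    await asyncio.sleep(delay)  # yields control so other requests can progress
    return f"response {request_id}"


async def serve(n_requests: int, delay: float = 0.1) -> list[str]:
    """Run all handlers concurrently; results come back in submission order."""
    return await asyncio.gather(*(handle_request(i, delay) for i in range(n_requests)))


if __name__ == "__main__":
    start = time.perf_counter()
    responses = asyncio.run(serve(100))
    elapsed = time.perf_counter() - start
    print(f"Handled {len(responses)} requests in {elapsed:.2f} s")
```

Because the handlers wait concurrently, the 100 simulated requests should complete in roughly the time of a single one (about 0.1 s) instead of the 10 s a one-at-a-time loop would need.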

Concurrency becomes critical for businesses with a constantly growing user base. For example, Instagram needs to process millions of queries per second. When you compare VMs vs. containers for executing concurrent requests, containers have the advantage of process isolation.

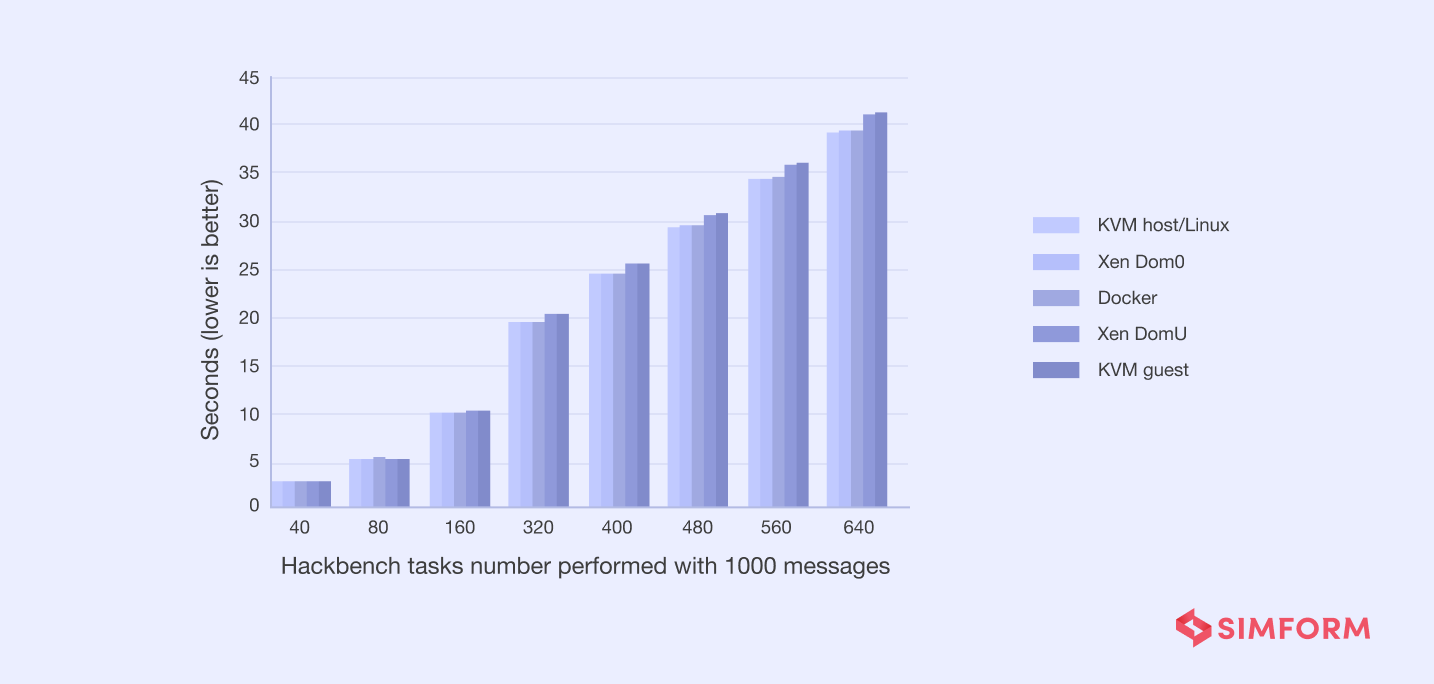

Containers let you process concurrent requests simultaneously while keeping each service isolated. They also have less overhead than VMs for concurrent workload execution. According to a study that ran the Hackbench test on the KVM hypervisor, the Xen (type 1) hypervisor, and Docker containers, the overhead difference for processing 1,000 messages was 5%.

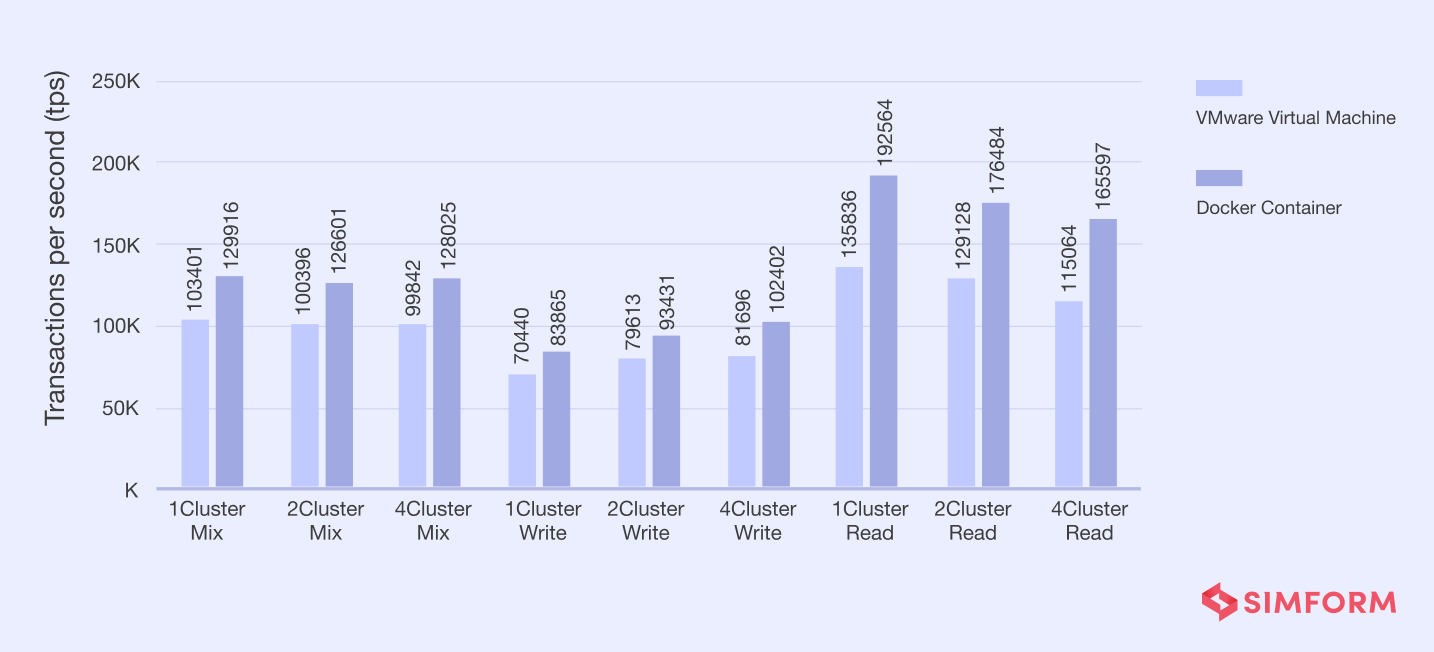

As the number of tasks increases from 40 to 640, KVM and Xen both see an increase in overhead, while Docker containers show no overhead. Similarly, results for mixed read and write workloads show that containers can process more transactions per second than VMs.

The test results show that Docker containers can handle more than 1.9 million transactions per second (tps), compared with 1.3 million tps for VMware VMs. These results show that containers process concurrent workloads better than virtual machines.
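
The throughput figures can be put in perspective with a quick calculation. The sketch below uses only the 1.3 million and 1.9 million tps values quoted above; the 10-million-transaction batch is a hypothetical workload chosen for illustration.

```python
def throughput_gain(baseline_tps: float, other_tps: float) -> float:
    """Percentage by which other_tps exceeds baseline_tps."""
    return (other_tps - baseline_tps) / baseline_tps * 100


def seconds_for(transactions: int, tps: float) -> float:
    """Time needed to process a batch at a constant throughput."""
    return transactions / tps


VM_TPS = 1_300_000         # VMware VMs, as quoted above
CONTAINER_TPS = 1_900_000  # Docker containers (reported as "more than" this)
BATCH = 10_000_000         # hypothetical workload size

print(f"Container throughput gain: {throughput_gain(VM_TPS, CONTAINER_TPS):.1f}%")
print(f"VMs: {seconds_for(BATCH, VM_TPS):.2f} s, containers: {seconds_for(BATCH, CONTAINER_TPS):.2f} s")
```

With these inputs it reports a gain of about 46% and batch times of roughly 7.7 s for VMs versus 5.3 s for containers. Since 1.9 million tps is a lower bound, the real gap may be slightly larger.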

There is no denying that handling millions of queries per second is essential, but maintaining security for each transaction becomes key to success.

Security & compliance

Security and compliance with data regulations are essential for businesses in an era of rising cyberattacks. Major companies lose $25 every minute due to data breaches, so cybersecurity is a crucial concern. That is why it is critical to compare VMs vs. containers on the security front.

Virtual machines have isolated OS environments, so data security is higher. However, applications running on VMs are susceptible to cyberattacks because of outdated packages, and a lack of regular security patches for VMs leads to vulnerabilities.

On the other hand, containers share the host OS, so an infection in one container can affect the entire system. However, despite the shared OS, containers have less exposure than VMs. This is because their workloads are short-lived, typically lasting hours or days, and they receive frequent security updates.

Here are some tips for improving container security:

- Scan containers during application runtime and harden the surfaces exposed to external services.

- Use the micro-segmentation approach to isolate application clusters based on risk factors.

- Keep the container host updated and perform extensive security testing.

- Practice continuous logging with monitoring tools like Splunk, Nagios, LogDNA, and Elastic Stack.

- Enable all container-specific security policies, such as registry scanning, restricted data access, and limited container privileges.
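
As one way to apply the last tip, the hypothetical Python check below scans a Kubernetes Pod manifest (already parsed into a dictionary) for a few risky container settings. `privileged`, `allowPrivilegeEscalation`, `runAsNonRoot`, and `readOnlyRootFilesystem` are real fields of a container’s `securityContext`. The function, its rules, and the example Pod are assumptions made for illustration, not a substitute for a dedicated policy engine or image scanner.

```python
def audit_pod(pod: dict) -> list:
    """Return warnings for risky container settings in a parsed Pod manifest.

    Checks a handful of container-level securityContext fields only; this is a
    teaching sketch, not a replacement for a policy engine or image scanner.
    """
    warnings = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        if ctx.get("privileged", False):
            warnings.append(f"{name}: runs privileged")
        # Conservative assumption: treat an unset value as "escalation allowed".
        if ctx.get("allowPrivilegeEscalation", True):
            warnings.append(f"{name}: allows privilege escalation")
        if not ctx.get("runAsNonRoot", False):
            warnings.append(f"{name}: may run as root")
        if not ctx.get("readOnlyRootFilesystem", False):
            warnings.append(f"{name}: root filesystem is writable")
        # No ":tag" or "@digest" after the last "/" means the image is unpinned.
        last_part = container.get("image", "").rsplit("/", 1)[-1]
        if ":" not in last_part and "@" not in last_part:
            warnings.append(f"{name}: image has no explicit tag or digest")
    return warnings


# Hypothetical Pod manifest, as it would look after parsing YAML into a dict.
example_pod = {
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "securityContext": {
                    "runAsNonRoot": True,
                    "readOnlyRootFilesystem": True,
                    "allowPrivilegeEscalation": False,
                },
            },
            {"name": "debug", "image": "busybox", "securityContext": {"privileged": True}},
        ]
    }
}

for warning in audit_pod(example_pod):
    print(warning)
```

Here the hardened `web` container passes every check, while the `debug` container triggers all five warnings.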

Ensuring security for your applications is critical, and this is where you also need to think about risk assessment and management.

Risk management

Ensuring security requires proper risk management. This means tracking, monitoring, and logging each container or VM for risk assessment. When comparing VMs vs. containers for risk management, containers do a better job of leaving a data trail for effective monitoring. Container engines push and pull images to and from a registry, and each of these operations leaves a trail that can be logged and monitored for risk assessment.
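
The sketch below shows how such a trail might be analyzed. It assumes push and pull events have already been collected as dictionaries shaped loosely like the JSON notifications an open-source Docker/OCI registry can send to a webhook. Treat the exact field names, the events, and the helper functions as hypothetical. The sketch counts actions per repository and flags tags that were pushed more than once, since an overwritten tag can mean the image behind a deployment changed unexpectedly.

```python
from collections import Counter, defaultdict

# Hypothetical registry events, shaped loosely like registry webhook notifications.
events = [
    {"action": "push", "target": {"repository": "shop/api", "tag": "1.4.0"}, "actor": {"name": "ci-bot"}},
    {"action": "pull", "target": {"repository": "shop/api", "tag": "1.4.0"}, "actor": {"name": "node-7"}},
    {"action": "pull", "target": {"repository": "shop/api", "tag": "1.4.0"}, "actor": {"name": "node-9"}},
    {"action": "push", "target": {"repository": "shop/api", "tag": "1.4.0"}, "actor": {"name": "alice"}},
]


def action_counts(events):
    """Count (repository, action) pairs, e.g. how often each repo was pulled."""
    return Counter((e["target"]["repository"], e["action"]) for e in events)


def overwritten_tags(events):
    """Return {(repository, tag): [actors]} for tags pushed more than once."""
    pushers = defaultdict(list)
    for e in events:
        if e["action"] == "push":
            ref = (e["target"]["repository"], e["target"].get("tag"))
            pushers[ref].append(e["actor"]["name"])
    return {ref: actors for ref, actors in pushers.items() if len(actors) > 1}


print(action_counts(events))
print(overwritten_tags(events))
```

In this sample, tag `1.4.0` of `shop/api` was pushed by both `ci-bot` and `alice`, which is exactly the kind of event worth a closer look during risk assessment.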

Risk management also involves reducing the risks associated with system failure, and this is where VMs lack fault tolerance. When a VM fails on one server in a cluster, its OS has to be restarted on a new server, which affects the entire app. VMs also have a single point of failure because they share the same host and hypervisor.

On the other hand, in a containerized system, if a cluster node fails, an orchestrator will create replacement containers elsewhere and avoid system downtime. Orchestrating clusters is not just beneficial for risk management; its benefits include:

- Better productivity

- Simplified container deployments

- Less resource consumption

- Centralized monitoring

- Reduced release cycles

Orchestration tools like Kubernetes have been at the forefront of managing containerized workloads. The most significant advantage of Kubernetes is its support for automated rollouts and rollbacks.

If a container does not match the desired state, Kubernetes automatically creates new containers and removes the failing ones, shifting their resources to the replacements. Kubernetes affects both the development and operational sides of web app development, improving productivity and iteration speed.
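
The desired-state idea can be sketched as a small reconciliation step: compare what should be running with what is running, then decide what to create and delete. This toy Python model (the `Pod` class and `reconcile` function are hypothetical) only illustrates the pattern. Kubernetes controllers such as the ReplicaSet controller apply it continuously and in far more detail.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    healthy: bool


def reconcile(desired_replicas: int, pods: list[Pod]) -> dict:
    """Compare desired and observed state and return the actions that close the gap."""
    unhealthy = [p.name for p in pods if not p.healthy]
    healthy = [p.name for p in pods if p.healthy]
    keep = healthy[:desired_replicas]      # healthy pods we leave running
    surplus = healthy[desired_replicas:]   # extra healthy pods beyond the target
    return {
        "delete": unhealthy + surplus,
        "create": desired_replicas - len(keep),
    }


observed = [Pod("web-1", True), Pod("web-2", False), Pod("web-3", True)]
print(reconcile(3, observed))  # {'delete': ['web-2'], 'create': 1}
```

Repeating this comparison in a loop is what lets an orchestrator replace failed containers without manual intervention.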

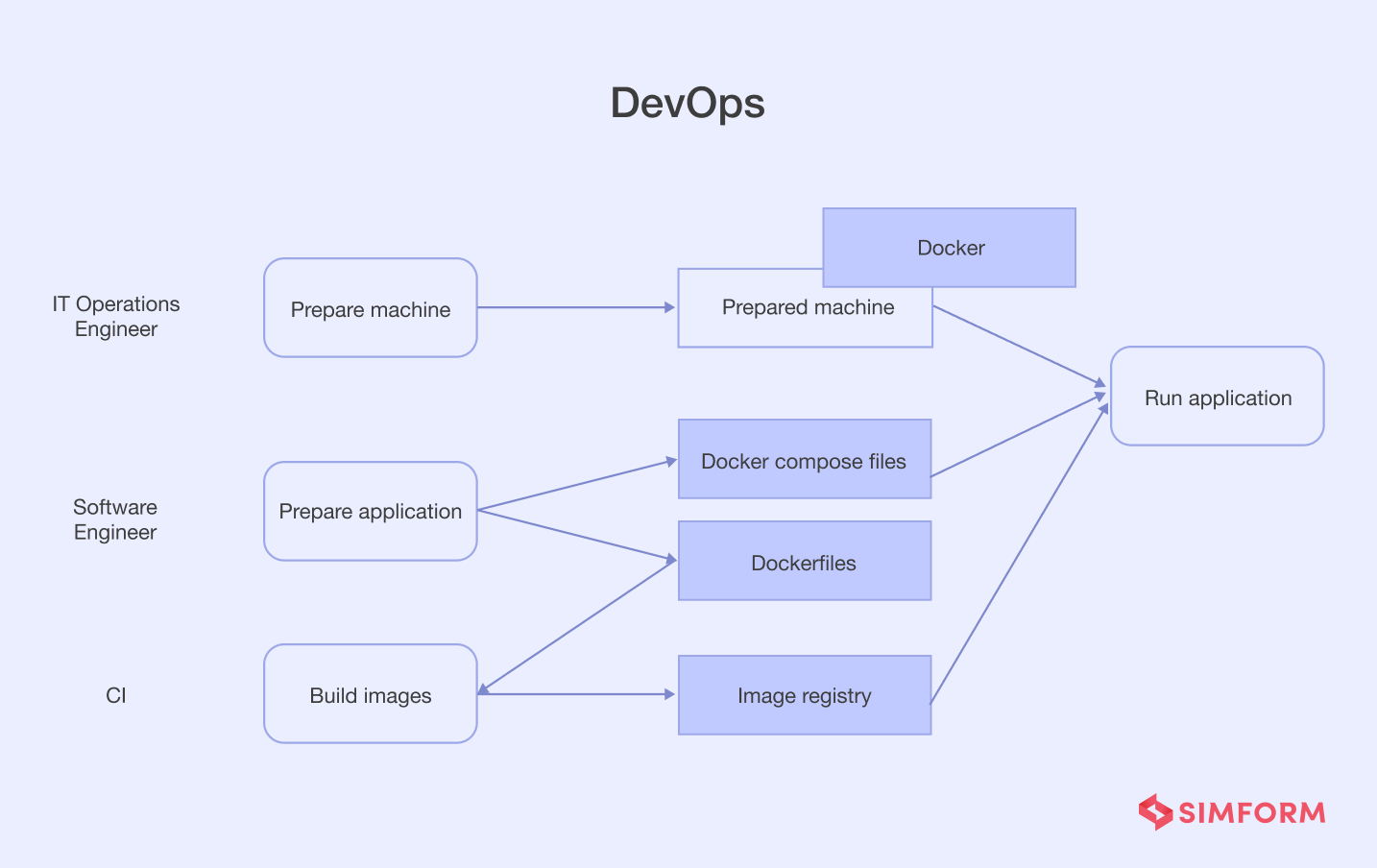

Similarly, DevOps culture also helps organizations with higher efficiency and faster iterations. Next, let’s discuss the impact of VMs and containers on DevOps.

DevOps impact

Organizations dealing with problems like app instability, the need for greater scalability, and workflow errors often choose a DevOps culture. It helps development and operations teams collaborate better to improve apps’ scalability, flexibility, and stability.

Virtualization is an essential part of the DevOps transition. It gives teams consistent, agile systems for optimal app performance. VM virtualization also lets teams maximize gains through parallelism. For example, teams can test features in real time while developing them in parallel.

Containerization, on the other hand, improves test performance for DevOps teams through simulated environments. Containers provide process isolation through a lightweight image that contains the app binaries, which allows real-time application testing across environments with minimal resources.

So, there is no denying that both VM virtualization and containerization affect an organization’s DevOps transition. However, when you compare the strength of their impact on DevOps, containerization has the edge over VM virtualization.

Containers use fewer resources for parallel testing than VMs. They also provide fault tolerance, which is essential for system stability. Since improved stability is one of the main reasons organizations adopt a DevOps culture, containers are the more obvious choice.

However, introducing containers to your development and operations teams means considering the learning curve. How easily your teams adapt to the new architecture can affect their efficiency.

Learning curve

The introduction of virtual machines did not significantly change developers’ practices, so the learning curve for VMs was not steep. VMs are the primary unit of management, which lets developers focus only on managing virtualization, snapshotting, and migrating to new abstractions.

The learning curve for containers, by contrast, is steep because they change both the app architecture and developer practices. While VMs just virtualize machines or hardware, containers bring microservice architecture into play. So, developers need to learn:

- Packaging of containers with build instructions

- Service discovery and binding to dependencies

- Security key management and access control across services

- Logging and monitoring data from different containers, applications, and nodes

- Planning for computing resources and requirements of multiple containers

- Network management within Kubernetes clusters

- Snapshotting data at a container level

- Mapping and managing namespaces across environments

So, containers have a steeper learning curve than virtual machines. Even so, the benefits of containerization attract many organizations.

Now that you know the critical differences between VMs and containers, it’s time to learn when to use either of them!


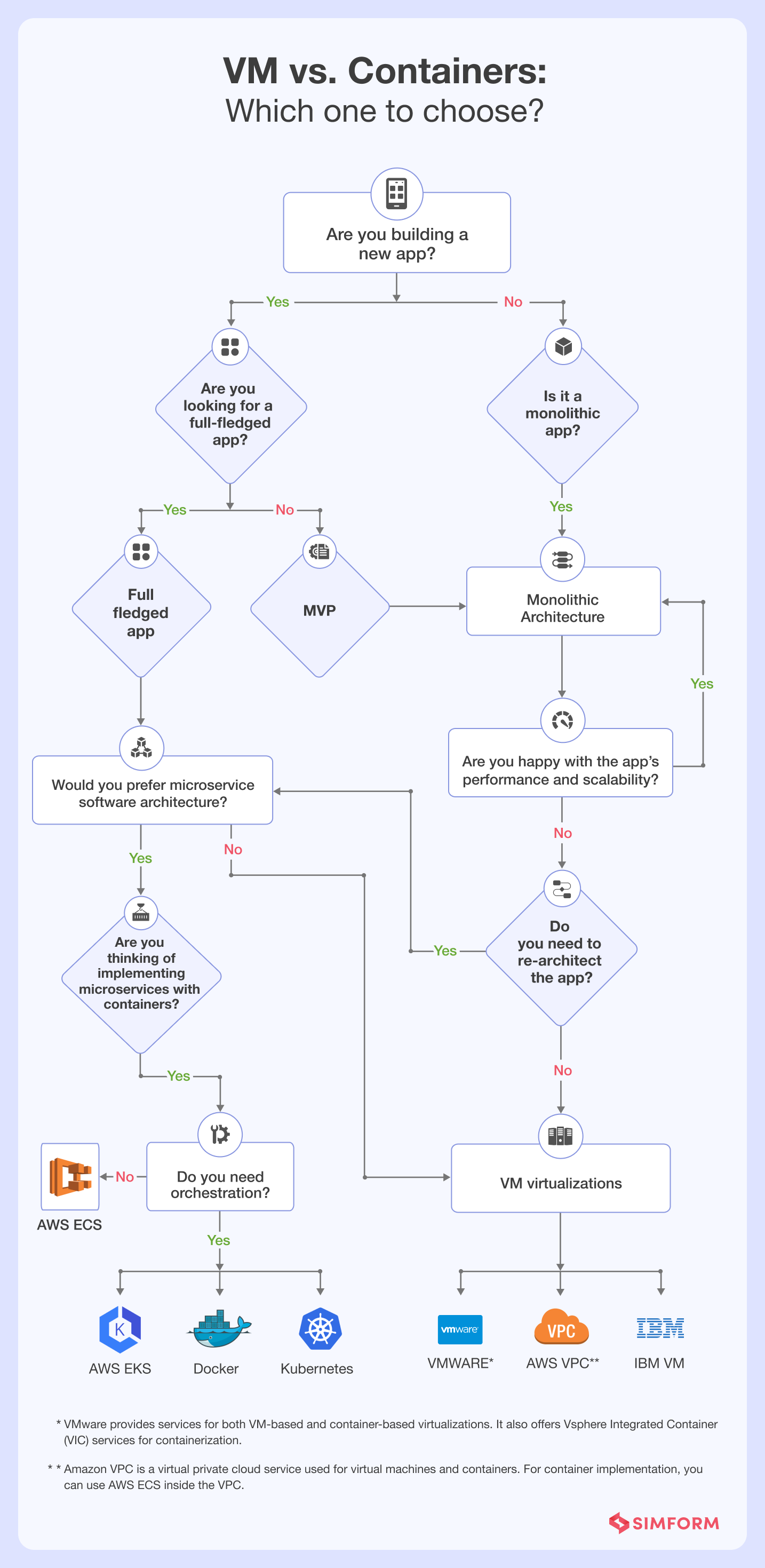

When to use a VM or a container?

Deciding between VMs and containers can be tricky. You need to consider security, scalability, infrastructure needs, portability, and more. However, every organization has its own structure and problems, so the right solution will be specific to yours.

Here are some examples to help you understand when to use which.

Wego’s on-site SAP app scaling issue: One of the leading travel search engines, Wego, added 30 servers to its infrastructure stack. Further, they virtualized the servers with 100 VMs.

However, maintaining a resource-heavy SAP app in its data center was challenging. So, Wego decided to migrate to AWS services for hybrid virtualization.

Wego now uses 159 Amazon EC2 instances to run its software and applications, all on Docker-based containers and virtual machines. This approach helped Wego save $120,000 per year and complete 75% of its deployments within three weeks.

Tableau improved visualization speed with AWS VMs: Being at the forefront of data visualization, Tableau wanted to enhance its user experience. It also needed to bring pods closer to customers and offer data locality.

Tableau had VM-based virtualization in its existing data center, which was not flexible enough. So, it used AWS cloud services to improve visualization speeds: AWS VPC for virtualization, and Amazon EC2 with Amazon Elastic Block Store (EBS) to improve throughput. The result was data visualizations that were three to four times faster.

Splunk packaged its monolithic app into containers: Splunk is at the forefront of offering data ingestion, analysis, and visualization through its enterprise software. However, its backend used a monolithic architecture for distributed deployments across operating systems. Because the functions in the monolith were tightly coupled, these deployments were slow.

So, Splunk decided to move to a microservice architecture. However, it was not easy for Splunk teams to break down software they had built over ten years, and the process could also be expensive because of the massive time it required.

They chose to containerize the microservices to reduce the time and cost of deployment, and they used Kubernetes to meet scheduling and availability requirements through orchestration. This helped the Splunk team make the software more accessible to customers and reduce time-to-value.

PayPal’s 100% containerization for transaction stability: In 2017, PayPal handled $10 billion worth of payment processing on its platform, spanning 200 global markets. With 210 million active users, the risk of transaction failures was high, and PayPal’s monolithic architecture was neither flexible nor fault-tolerant.

So, PayPal decided to go 100% containers for its applications, using containers to implement microservices. This approach reduced deployment time across regions to within an hour. PayPal also created an automatic rollback service for its deployments.

Which one should you choose: containers or virtual machines?

Choosing a virtualization approach for your organization may look intimidating. However, it all comes down to your business needs. For example, VMs are the ideal choice if you are looking for hardware-level virtualization. On the other hand, containers give you better scalability, flexibility, and faster deployments with OS-level virtualization.

In many cases, the best practice is to use both through a hybrid approach. This is where Simform can help! We have an engineering team that can provide you with market-fit solutions and improved app performance. With our solutions, you can:

- Optimize virtualizations on different levels

- Build reliable microservice architectures

- Enable higher business agility through quicker iterations

- Improve scalability and flexibility of applications

- Ensure a secure experience for your customers

So, if you are still wondering which level of virtualization suits your needs and whether to choose VMs or containers, feel free to contact us.